Article Structure

Abstract

This paper is concerned with the problem of question search.

Introduction

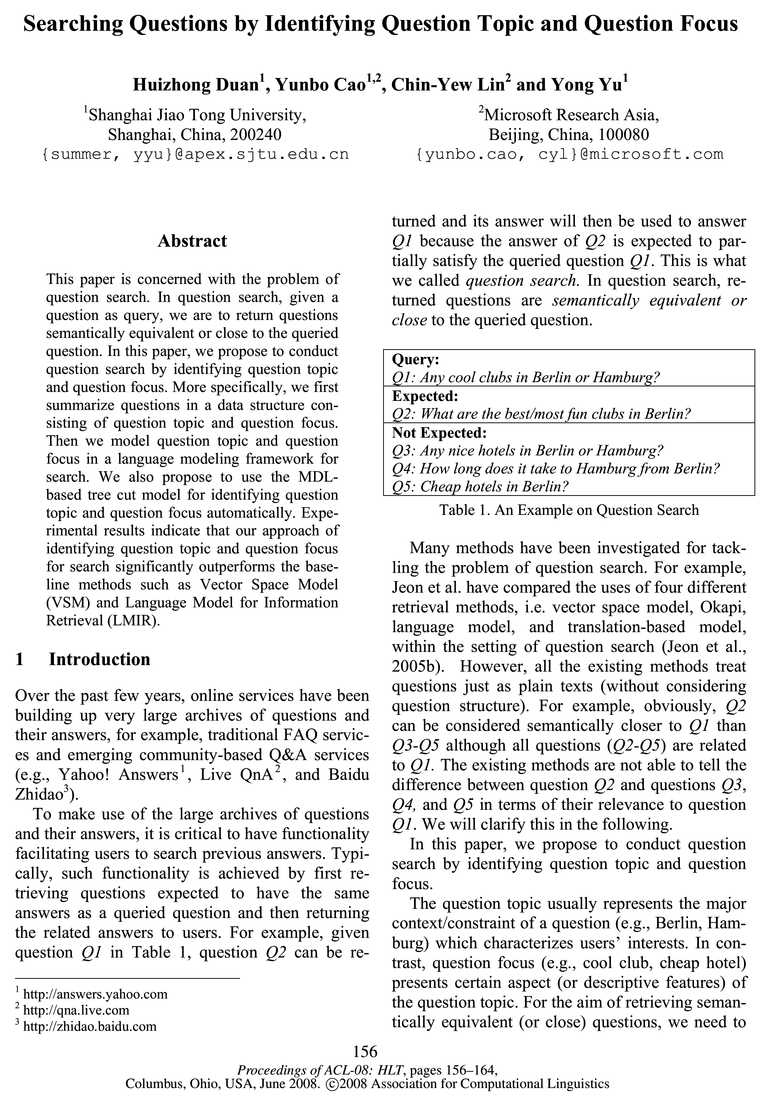

Over the past few years, online services have been building up very large archives of questions and their answers, for example, traditional FAQ services and emerging community-based Q&A services (e.g., Yahoo!

Our Approach to Question Search

Our approach to question search consists of two steps: (a) summarize questions in a data structure consisting of question topic and question focus; (b)

Experimental Results

We have conducted experiments to verify the effectiveness of our approach to question search.

Using Translation Probability

In the setting of question search, besides the topic addressed in the previous sections, another research topic is fixing the lexical chasm between questions.

Topics

language modeling

- Then we model question topic and question focus in a language modeling framework for search. (Page 1, “Abstract”)

- Experimental results indicate that our approach of identifying question topic and question focus for search significantly outperforms the baseline methods such as Vector Space Model (VSM) and Language Model for Information Retrieval (LMIR). (Page 1, “Abstract”)

- vector space model, Okapi, language model, and translation-based model, within the setting of question search (Jeon et al., 2005b). (Page 1, “Introduction”)

- On the basis of this, we then propose to model question topic and question focus in a language modeling framework for search. (Page 2, “Introduction”)

- model question topic and question focus in a language modeling framework for search. (Page 2, “Our Approach to Question Search”)

- We employ the framework of language modeling (for information retrieval) to develop our approach to question search. (Page 4, “Our Approach to Question Search”)

- In the language modeling approach to information retrieval, the relevance of a targeted question $\tilde{q}$ to a queried question $q$ is given by the probability $p(q|\tilde{q})$ of generating the queried question $q$ from the language model formed by the targeted question $\tilde{q}$. (Pages 4-5, “Our Approach to Question Search”)

- In traditional language modeling, a single multinomial model $p(t|\tilde{q})$ over terms is estimated for each targeted question $\tilde{q}$. (Page 5, “Our Approach to Question Search”)

- If unigram document language models are used, equation (9) can then be rewritten as, (Page 5, “Our Approach to Question Search”)

- To avoid zero probabilities and estimate more accurate language models, the HEAD and TAIL of questions are smoothed using the background collection, (Page 5, “Our Approach to Question Search”)
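The smoothing idea in the excerpts above can be illustrated with a minimal sketch. It ranks archived questions by the smoothed unigram likelihood of generating the queried question. The linear-interpolation (Jelinek-Mercer) form, the weight `lam`, all function names, and the toy archive are assumptions made for illustration; the paper's HEAD/TAIL decomposition and its exact equations are not reproduced here.

```python
import math
from collections import Counter


def tokenize(text):
    # Naive whitespace tokenizer (an assumption; any tokenizer would do).
    return text.lower().split()


def build_background(questions):
    # Maximum-likelihood unigram model over the whole archive.
    counts = Counter()
    for q in questions:
        counts.update(tokenize(q))
    total = sum(counts.values())
    return {term: c / total for term, c in counts.items()}


def log_query_likelihood(query, target, background, lam=0.2):
    # log p(query | target), with the target's unigram model
    # interpolated with the background collection model.
    target_counts = Counter(tokenize(target))
    target_len = sum(target_counts.values())
    score = 0.0
    for term in tokenize(query):
        p_ml = target_counts[term] / target_len if target_len else 0.0
        p = (1 - lam) * p_ml + lam * background.get(term, 0.0)
        if p == 0.0:
            # Term unseen even in the background collection.
            return float("-inf")
        score += math.log(p)
    return score


# Toy archive invented for this example.
archive = [
    "any cool clubs in berlin",
    "how far is it from berlin to hamburg",
    "where to see a musical in hamburg",
]
background = build_background(archive)
query = "clubs in hamburg"
ranked = sorted(
    archive,
    key=lambda q: log_query_likelihood(query, q, background),
    reverse=True,
)
for q in ranked:
    print(round(log_query_likelihood(query, q, background), 3), q)
```

Without the background term, any archived question missing a single query word would receive probability zero; the interpolation keeps such questions rankable.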

See all papers in Proc. ACL 2008 that mention language modeling.

vector space

- Experimental results indicate that our approach of identifying question topic and question focus for search significantly outperforms the baseline methods such as Vector Space Model (VSM) and Language Model for Information Retrieval (LMIR). (Page 1, “Abstract”)

- vector space model, Okapi, language model, and translation-based model, within the setting of question search (Jeon et al., 2005b). (Page 1, “Introduction”)

- To obtain the ground-truth of question search, we employed the Vector Space Model (VSM) (Salton et al., 1975) to retrieve the top 20 results and obtained manual judgments. (Page 5, “Experimental Results”)

- Conventional vector space models are used to calculate the statistical similarity and WordNet (Fellbaum, 1998) is used to estimate the semantic similarity. (Page 8, “Using Translation Probability”)

- vector space model, Okapi, language model (LM), and translation-based model, for automatically fixing the lexical chasm between (Page 8, “Using Translation Probability”)
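As a rough illustration of the vector space baseline mentioned in these excerpts, the sketch below retrieves the top-k archived questions by cosine similarity over TF-IDF vectors. The specific TF-IDF weighting (raw term frequency times a smoothed IDF), the function names, and the toy archive are assumptions; the excerpts do not state which weighting the authors used.

```python
import math
from collections import Counter


def tokenize(text):
    # Naive whitespace tokenizer (an assumption).
    return text.lower().split()


def idf_table(docs):
    # Smoothed inverse document frequency (one common variant).
    n = len(docs)
    df = Counter()
    for d in docs:
        df.update(set(tokenize(d)))
    return {t: math.log((n + 1) / (c + 1)) + 1.0 for t, c in df.items()}


def tfidf_vector(text, idf):
    # Sparse vector as a dict; terms unknown to the archive get weight 0.
    tf = Counter(tokenize(text))
    return {t: c * idf.get(t, 0.0) for t, c in tf.items()}


def cosine(u, v):
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def top_k(query, docs, k=20):
    # Return (score, question) pairs, best first.
    idf = idf_table(docs)
    qv = tfidf_vector(query, idf)
    scored = [(cosine(qv, tfidf_vector(d, idf)), d) for d in docs]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


# Toy archive invented for this example.
archive = [
    "any cool clubs in berlin",
    "how far is it from berlin to hamburg",
    "where to see a musical in hamburg",
]
results = top_k("clubs in hamburg", archive, k=2)
for score, question in results:
    print(round(score, 3), question)
```

The default `k=20` mirrors the "top 20 results" in the excerpt; the pooled results would then be judged manually, which this sketch does not cover.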

Question answering

- (2005) used a Question Answer Database (known as QUAB) to support interactive question answering. (Page 8, “Using Translation Probability”)

- Question answering (e.g., Pasca and Harabagiu, 2001; Echihabi and Marcu, 2003; Voorhees, 2004; Metzler and Croft, 2005) relates to question search. (Page 8, “Using Translation Probability”)

- Question answering automatically extracts short answers for a relatively limited class of question types from document collections. (Page 8, “Using Translation Probability”)

- Though we only utilize data from a community-based question answering service in our experiments, we could also use categorized questions from forum sites and FAQ sites. (Page 8, “Using Translation Probability”)

semantic similarities

- FAQ Finder (Burke et al., 1997) heuristically combines statistical similarities and semantic similarities between questions to rank FAQs. (Page 8, “Using Translation Probability”)

- Conventional vector space models are used to calculate the statistical similarity and WordNet (Fellbaum, 1998) is used to estimate the semantic similarity. (Page 8, “Using Translation Probability”)

- In contrast to that, question search retrieves answers for an unlimited range of questions by focusing on finding semantically similar questions in an archive. (Page 8, “Using Translation Probability”)

Translation Probability

- More specifically, by using translation probabilities, we can rewrite equations (11) and (12) as follows: (Page 7, “Using Translation Probability”)

- From Table 6, we see that the performance of our approach can be further boosted by using translation probability. (Page 8, “Using Translation Probability”)
