Article Structure

Abstract

Previous studies of data-driven dependency parsing have shown that the distribution of parsing errors are correlated with theoretical properties of the models used for learning and inference.

Introduction

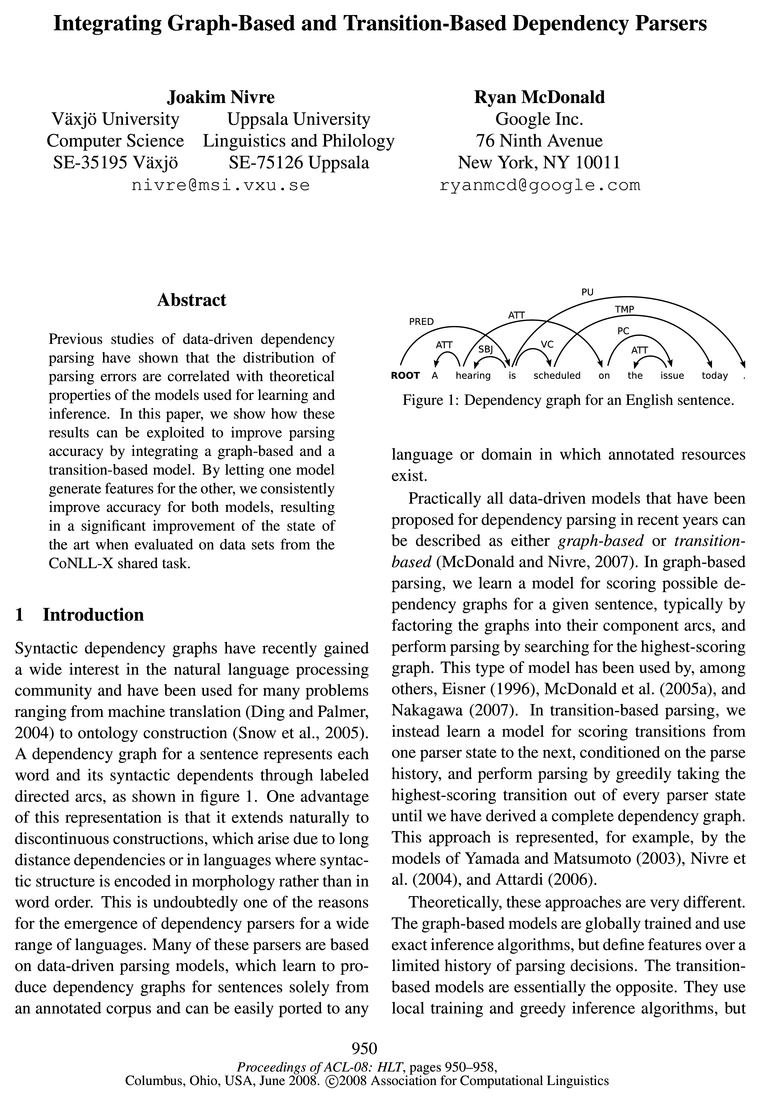

Syntactic dependency graphs have recently gained a wide interest in the natural language processing community and have been used for many problems ranging from machine translation (Ding and Palmer, 2004) to ontology construction (Snow et al., 2005).

Two Models for Dependency Parsing

2.1 Preliminaries

Integrated Models

There are many conceivable ways of combining the two parsers, including more or less complex ensemble systems and voting schemes, which only perform the integration at parsing time.

Experiments

In this section, we present an experimental evaluation of the two guided models based on data from the CoNLL—X shared task, followed by a comparative error analysis including both the base models and the guided models.

Related Work

Combinations of graph-based and transition-based models for data-driven dependency parsing have previously been explored by Sagae and Lavie (2006), who report improvements of up to 1.7 percentage points over the best single parser when combining three transition-based models and one graph-based model for unlabeled dependency parsing, evaluated on data from the Penn Treebank.

Conclusion

In this paper, we have demonstrated how the two dominant approaches to data-driven dependency parsing, graph-based models and transition-based models, can be integrated by letting one model learn from features generated by the other.

Topics

graph-based

- In this paper, we show how these results can be exploited to improve parsing accuracy by integrating a graph-based and a transition-based model.Page 1, “Abstract”

- Practically all data-driven models that have been proposed for dependency parsing in recent years can be described as either graph-based or transition-based (McDonald and Nivre, 2007).Page 1, “Introduction”

- In graph-based parsing, we learn a model for scoring possible dependency graphs for a given sentence, typically by factoring the graphs into their component arcs, and perform parsing by searching for the highest-scoring graph.Page 1, “Introduction”

- The graph-based models are globally trained and use exact inference algorithms, but define features over a limited history of parsing decisions.Page 1, “Introduction”

- In this paper, we consider a simple way of integrating graph-based and transition-based models in order to exploit their complementary strengths and thereby improve parsing accuracy beyond what is possible by either model in isolation.Page 2, “Introduction”

- 2.2 Graph-Based ModelsPage 2, “Two Models for Dependency Parsing”

- Graph-based dependency parsers parameterize a model over smaller substructures in order to search the space of valid dependency graphs and produce the most likely one.Page 2, “Two Models for Dependency Parsing”

- An advantage of graph-based methods is that tractable inference enables the use of standard structured learning techniques that globally set parameters to maximize parsing performance on the training set (McDonald et al., 2005a).Page 2, “Two Models for Dependency Parsing”

- The specific graph-based model studied in this work is that presented by McDonald et al.Page 2, “Two Models for Dependency Parsing”

- For the graph-based model, X is the set of possible dependency arcs (2,7,1); for the transition-based model, X is the set of possible configuration-transition pairs (0,75).Page 3, “Integrated Models”

- 3.2 The Guided Graph-Based ModelPage 4, “Integrated Models”

See all papers in Proc. ACL 2008 that mention graph-based.

See all papers in Proc. ACL that mention graph-based.

Back to top.

dependency parsing

- Previous studies of data-driven dependency parsing have shown that the distribution of parsing errors are correlated with theoretical properties of the models used for learning and inference.Page 1, “Abstract”

- This is undoubtedly one of the reasons for the emergence of dependency parsers for a wide range of languages.Page 1, “Introduction”

- Practically all data-driven models that have been proposed for dependency parsing in recent years can be described as either graph-based or transition-based (McDonald and Nivre, 2007).Page 1, “Introduction”

- Both models have been used to achieve state-of-the-art accuracy for a wide range of languages, as shown in the CoNLL shared tasks on dependency parsing (Buchholz and Marsi, 2006; Nivre et al., 2007), but McDonald and Nivre (2007) showed that a detailed error analysis reveals important differences in the distribution of errors associated with the two models.Page 2, “Introduction”

- This is a common constraint in many dependency parsing theories and their implementations.Page 2, “Two Models for Dependency Parsing”

- Graph-based dependency parsers parameterize a model over smaller substructures in order to search the space of valid dependency graphs and produce the most likely one.Page 2, “Two Models for Dependency Parsing”

- As a result, the dependency parsing problem is written:Page 2, “Two Models for Dependency Parsing”

- Transition-based dependency parsing systems use a model parameterized over transitions of an abstract machine for deriving dependency graphs, such that every transition sequence from the designated initial configuration to some terminal configuration derives a valid dependency graph.Page 2, “Two Models for Dependency Parsing”

- Many transition systems for data-driven dependency parsing are inspired by shift-reduce parsing,Page 2, “Two Models for Dependency Parsing”

- The data for the experiments are training and test sets for all thirteen languages from the CoNLL-X shared task on multilingual dependency parsing with training sets ranging in size from from 29,000 tokens (Slovene) to 1,249,000 tokens (Czech).Page 5, “Experiments”

- The experimental results presented so far show that feature-based integration is a viable approach for improving the accuracy of both graph-based and transition-based models for dependency parsing , but they say very little about how the integration benefitsPage 6, “Experiments”

See all papers in Proc. ACL 2008 that mention dependency parsing.

See all papers in Proc. ACL that mention dependency parsing.

Back to top.

feature vector

- by a k-dimensional feature vector f : X —> R’“.Page 4, “Integrated Models”

- In the feature-based integration we simply extend the feature vector for one model, called the base model, with a certain number of features generated by the other model, which we call the guide model in this context.Page 4, “Integrated Models”

- The additional features will be referred to as guide features, and the version of the base model trained with the extended feature vector will be called the guided model.Page 4, “Integrated Models”

- More precisely, dependency arcs (or pairs of arcs) are first represented by a high dimensional feature vector f (i, j, l) E R1“, where f is typically a binary feature vector over properties of the arc as well as the surrounding input (McDonald et al., 2005a; McDonald et al., 2006).Page 4, “Integrated Models”

- The set of training instances for this learning problem is the set of pairs (0, t) such that t is the correct transition out of c in the transition sequence that derives the correct dependency graph G95 for some sentence cc in the training set T. Each training instance (0, t) is represented by a feature vector f (c, t) E R1“, where features are defined in terms of arbitrary properties of the configuration 0, including the state of the stack 00, the input buffer BC, and the partially built dependency graph Go.Page 4, “Integrated Models”

- We define m additional guide features, based on properties of G3,“, and extend the feature vector accordingly to f (c, t, Gag/ET) E RMW.Page 5, “Integrated Models”

See all papers in Proc. ACL 2008 that mention feature vector.

See all papers in Proc. ACL that mention feature vector.

Back to top.

scoring function

- The simplest parameterization is the arc-factored model that defines a real-valued score function for arcs 3(2', j, l) and further defines the score of a dependency graph as the sum of thePage 2, “Two Models for Dependency Parsing”

- Given a real-valued score function 3(0, 25) (for transition 75 out of configuration 0), parsing can be performed by starting from the initial configuration and taking the optimal transition 75* = arg maxtET 3(0, 25) out of every configuration 0 until a terminal configuration is reached.Page 2, “Two Models for Dependency Parsing”

- To learn a scoring function on transitions, these systems rely on discriminative learning methods, such as memory-based learning or support vector machines, using a strictly local learning procedure where only single transitions are scored (not complete transition sequences).Page 3, “Two Models for Dependency Parsing”

- As explained in section 2, both models essentially learn a scoring function 3 : X —> R, where the domain X is different for the two models.Page 3, “Integrated Models”

- The graph-based model, MSTParser, learns a scoring function 3(2', j, l) E R over labeled dependencies.Page 4, “Integrated Models”

- The transition-based model, MaltParser, learns a scoring function 3(0, 25) E R over configurations and transitions.Page 4, “Integrated Models”

See all papers in Proc. ACL 2008 that mention scoring function.

See all papers in Proc. ACL that mention scoring function.

Back to top.

shared task

- By letting one model generate features for the other, we consistently improve accuracy for both models, resulting in a significant improvement of the state of the art when evaluated on data sets from the CoNLL-X shared task .Page 1, “Abstract”

- Both models have been used to achieve state-of-the-art accuracy for a wide range of languages, as shown in the CoNLL shared tasks on dependency parsing (Buchholz and Marsi, 2006; Nivre et al., 2007), but McDonald and Nivre (2007) showed that a detailed error analysis reveals important differences in the distribution of errors associated with the two models.Page 2, “Introduction”

- In this section, we present an experimental evaluation of the two guided models based on data from the CoNLL—X shared task , followed by a comparative error analysis including both the base models and the guided models.Page 5, “Experiments”

- The data for the experiments are training and test sets for all thirteen languages from the CoNLL-X shared task on multilingual dependency parsing with training sets ranging in size from from 29,000 tokens (Slovene) to 1,249,000 tokens (Czech).Page 5, “Experiments”

- Models are evaluated by their labeled attachment score (LAS) on the test set, i.e., the percentage of tokens that are assigned both the correct head and the correct label, using the evaluation software from the CoNLL-X shared task with default settings.4 Statistical significance was assessed using Dan Bikel’s randomized parsing evaluation comparator with the default setting of 10,000 iterations.5Page 5, “Experiments”

- (2007) to combine six transition-based parsers in the best performing system in the CoNLL 2007 shared task .Page 8, “Related Work”

See all papers in Proc. ACL 2008 that mention shared task.

See all papers in Proc. ACL that mention shared task.

Back to top.

gold standard

- This means that, for every sentence an E T, BC has access at training time to both the gold standard dependency graph G95 and the graph GS predicted by C, and it is the latter that forms the basis for the additional guide features.Page 4, “Integrated Models”

- Figure 3(a) plots precision (top) and recall (bottom) for dependency arcs of different lengths (predicted arcs for precision, gold standard arcs for recall).Page 6, “Experiments”

- Figure 3(b) shows precision (top) and recall (bottom) for dependency arcs at different distances from the root (predicted arcs for precision, gold standard arcs for recall).Page 7, “Experiments”

- The difference, of course, is that standard co-training is a weakly supervised method, where guide features replace, rather than complement, the gold standard annotation duringPage 8, “Related Work”

See all papers in Proc. ACL 2008 that mention gold standard.

See all papers in Proc. ACL that mention gold standard.

Back to top.

parsing models

- Many of these parsers are based on data-driven parsing models , which learn to produce dependency graphs for sentences solely from an annotated corpus and can be easily ported to anyPage 1, “Introduction”

- The combined parsing model is essentially an instance of the graph-based model, where arc scores are derived from the output of the different component parsers.Page 8, “Related Work”

- Directions for future research include a more detailed analysis of the effect of feature-based integration, as well as the exploration of other strategies for integrating different parsing models .Page 8, “Conclusion”

See all papers in Proc. ACL 2008 that mention parsing models.

See all papers in Proc. ACL that mention parsing models.

Back to top.

part-of-speech

- All features are conjoined with the part-of-speech tags of the words involved in the dependency to allow the guided parser to learn weights relative to different surface syntactic environments.Page 4, “Integrated Models”

- Unlike MSTParser, features are not explicitly defined to conjoin guide features with part-of-speech features.Page 5, “Integrated Models”

- pendent part-of-speech .Page 7, “Experiments”

See all papers in Proc. ACL 2008 that mention part-of-speech.

See all papers in Proc. ACL that mention part-of-speech.

Back to top.

state of the art

- By letting one model generate features for the other, we consistently improve accuracy for both models, resulting in a significant improvement of the state of the art when evaluated on data sets from the CoNLL-X shared task.Page 1, “Abstract”

- Finally, given that the two base models had the previously best performance for these data sets, the guided models achieve a substantial improvement of the state of the art .Page 6, “Experiments”

- Our experimental results show that both models consistently improve their accuracy when given access to features generated by the other model, which leads to a significant advancement of the state of the art in data-driven dependency parsing.Page 8, “Conclusion”

See all papers in Proc. ACL 2008 that mention state of the art.

See all papers in Proc. ACL that mention state of the art.

Back to top.