Article Structure

Abstract

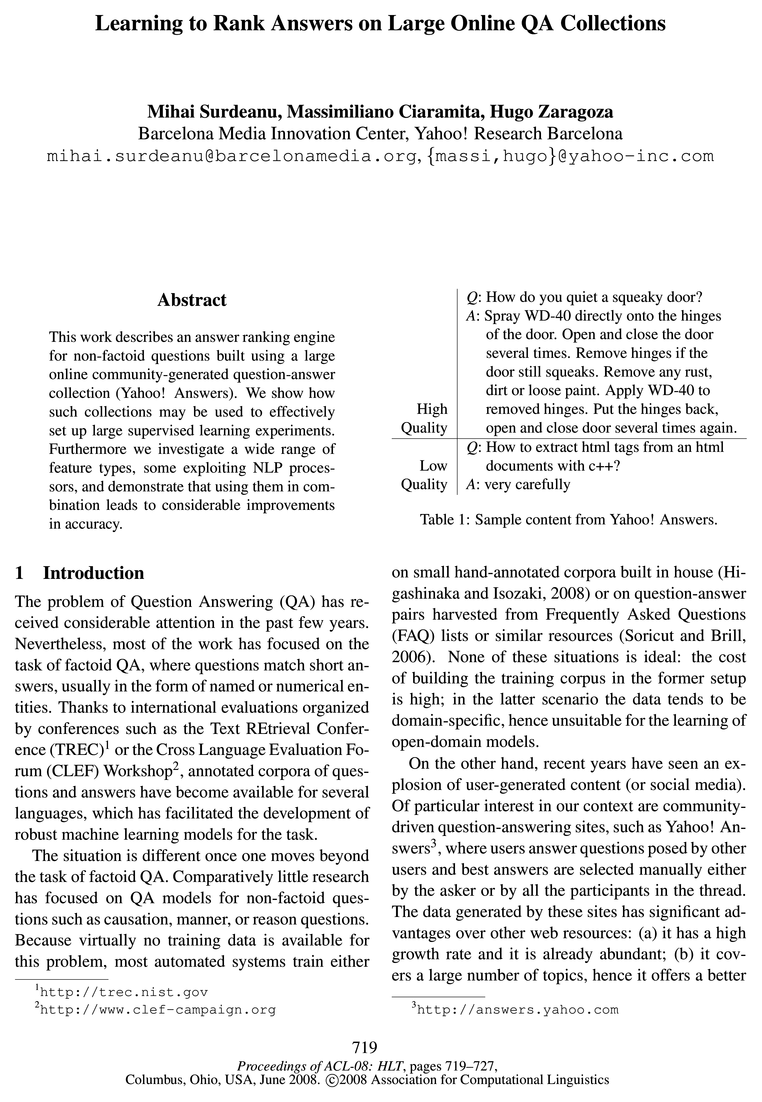

This work describes an answer ranking engine for non-factoid questions built using a large online community-generated question-answer collection (Yahoo!

Introduction

The problem of Question Answering (QA) has received considerable attention in the past few years.

Approach

The architecture of the QA system analyzed in the paper, summarized in Figure 1, follows that of the most successful TREC systems.

The Corpus

The corpus is extracted from a sample of the U.S Yahoo!

Experiments

We evaluate our results using two measures: mean Precision at rank=1 (P@1), i.e., the percentage of questions with the correct answer in the first position, and Mean Reciprocal Rank (MRR), i.e., the score of a question is 1/k, where k is the position of the correct answer.
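
The two measures can be illustrated with a minimal sketch. It assumes that, for each question, we already know the 1-based rank `k` of the correct answer; the function names and the sample ranks are hypothetical and not taken from the paper.

```python
from typing import Sequence


def precision_at_1(ranks: Sequence[int]) -> float:
    """Fraction of questions whose correct answer is ranked first."""
    return sum(1 for k in ranks if k == 1) / len(ranks)


def mean_reciprocal_rank(ranks: Sequence[int]) -> float:
    """Average of 1/k, where k is the rank of the correct answer."""
    return sum(1.0 / k for k in ranks) / len(ranks)


# Hypothetical ranks of the correct answer for four questions.
ranks = [1, 2, 1, 4]
print(precision_at_1(ranks))        # 0.5
print(mean_reciprocal_rank(ranks))  # 0.6875
```

P@1 only rewards a correct answer in the first position, while MRR also gives partial credit to correct answers ranked lower.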

Related Work

Content from community-built question-answer sites can be retrieved by searching for similar questions already answered (Jeon et al., 2005) and ranked using meta-data information like answerer authority (Jeon et al., 2006; Agichtein et al., 2008).

Conclusions

In this work we described an answer ranking engine for non-factoid questions built using a large community-generated question-answer collection.

Topics

translation models

- supervised IR models, the answer ranking is implemented using discriminative learning, and finally, some of the ranking features are produced by question-to-answer translation models, which use class-conditional learning.Page 3, “Approach”

- the similarity between questions and answers (FG1), features that encode question-to-answer transformations using a translation model (FG2), features that measure keyword density and frequency (FG3), and features that measure the correlation between question-answer pairs and other collections (FG4).Page 3, “Approach”

- One way to address this problem is to learn question-to-answer transformations using a translation model (Berger et al., 2000; Echihabi and Marcu, 2003; Soricut and Brill, 2006; Riezler et al., 2007).Page 4, “Approach”

- Our ranking model was tuned strictly on the development set (i.e., feature selection and parameters of the translation models).Page 6, “Experiments”

- to improve lexical matching and translation models.Page 6, “Experiments”

- This indicates that, even though translation models are the most useful, it is worth exploring approaches that combine several strategies for answer ranking.Page 7, “Experiments”

- In the QA literature, answer ranking for non-factoid questions has typically been performed by learning question-to-answer transformations, either using translation models (Berger et al., 2000; Soricut and Brill, 2006) or by exploiting the redundancy of the Web (Agichtein et al., 2001).Page 8, “Related Work”

- On the other hand, our approach allows the learning of full transformations from question structures to answer structures using translation models applied to different text representations.Page 8, “Related Work”

See all papers in Proc. ACL 2008 that mention translation models.

feature set

- To answer the second research objective we will analyze the contribution of the proposed feature set to this function.Page 2, “Approach”

- For completeness we also include in the feature set the value of the tf-idf similarity measure.Page 3, “Approach”

- Feature Set MRR P@1Page 6, “Experiments”

- The algorithm incrementally adds to the feature set the feature that provides the highest MRR improvement in the development partition.Page 6, “Experiments”

- This approach allowed us to perform a systematic feature analysis on a large-scale real-world corpus and a comprehensive feature set.Page 8, “Related Work”

- Our model uses a larger feature set that includes correlation and transformation-based features and five different content representations.Page 8, “Related Work”
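
The incremental feature selection mentioned in the excerpts above can be sketched as a greedy forward search. This is only an illustration: the scoring callback `dev_mrr`, the feature names, and the stopping rule (stop when no candidate improves the development MRR) are assumptions, since the excerpts do not state them.

```python
from typing import Callable, FrozenSet, Iterable, List


def greedy_forward_selection(
    candidates: Iterable[str],
    dev_mrr: Callable[[FrozenSet[str]], float],
) -> List[str]:
    """Repeatedly add the feature with the highest development-set MRR."""
    selected: List[str] = []
    remaining = set(candidates)
    best_score = dev_mrr(frozenset())
    while remaining:
        # Score every one-feature extension of the current feature set.
        scored = [(dev_mrr(frozenset(selected + [f])), f) for f in sorted(remaining)]
        score, feature = max(scored)
        if score <= best_score:  # assumed stopping rule: no improvement
            break
        selected.append(feature)
        remaining.remove(feature)
        best_score = score
    return selected


def toy_dev_mrr(features: FrozenSet[str]) -> float:
    """Hypothetical stand-in for training a ranker and measuring dev MRR."""
    gains = {"translation": 0.10, "bm25": 0.05, "density": -0.01}
    return 0.40 + sum(gains[f] for f in features)


print(greedy_forward_selection(["bm25", "density", "translation"], toy_dev_mrr))
# ['translation', 'bm25']
```

In a real setting `dev_mrr` would retrain the ranking model for each candidate set, which is why the number of evaluations (quadratic in the number of features) matters.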

social media

- On the other hand, recent years have seen an explosion of user-generated content (or social media).Page 1, “Introduction”

- In this paper we address the problem of answer ranking for non-factoid questions from social media content.Page 2, “Introduction”

- In fact, it is likely that an optimal retrieval engine from social media should combine all these three methodologies.Page 8, “Related Work”

- Moreover, our approach might have applications outside of social media (e.g., for open-domain web-based QA), because the ranking model built is based only on open-domain knowledge and the analysis of textual content.Page 8, “Related Work”

- On the other hand, we expect the outcome of this process to help several applications, such as open-domain QA on the Web and retrieval from social media.Page 8, “Conclusions”

- On social media, our system should be combined with a component that searches for similar questions already answered; this output can possibly be filtered further by a content-quality module that explores “social” features such as the authority of users, etc.Page 8, “Conclusions”

bigrams

- Bigrams (B) - the text is represented as a bag of bigrams (larger n-grams did not help).Page 3, “Approach”

- Generalized bigrams (Bg) - same as above, but the words are generalized to their WNSS.Page 3, “Approach”

- The first chosen feature is the translation probability computed between the Bg question and answer representations (bigrams with words generalized to their WNSS tags).Page 7, “Experiments”

- This is caused by the fact that the BM25 formula is less forgiving with errors of the NLP processors (due to the high idf scores assigned to bigrams and dependencies), and the WNSS tagger is the least robust component in our pipeline.Page 7, “Experiments”
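
The bigram (B) and generalized bigram (Bg) representations can be sketched as follows. This is a simplified illustration: the stop-word list and the `supersenses` lookup table are hypothetical stand-ins for the real stop list and the WNSS tagger, lemmatization is omitted, and building bigrams after stop-word removal is an assumption.

```python
from collections import Counter
from typing import Dict, Iterable, Optional

# Tiny illustrative stop-word list (assumption, not the paper's list).
STOP_WORDS = {"a", "an", "the", "of", "to", "is", "how", "do", "i"}


def bag_of_bigrams(
    tokens: Iterable[str],
    supersenses: Optional[Dict[str, str]] = None,
) -> Counter:
    """Return a bag of adjacent word pairs, optionally generalized."""
    content = [t.lower() for t in tokens if t.lower() not in STOP_WORDS]
    if supersenses is not None:
        # Replace a word by its supersense tag when one is known.
        content = [supersenses.get(t, t) for t in content]
    return Counter(zip(content, content[1:]))


question = "How do I remove the red wine stain".split()
print(bag_of_bigrams(question))
print(bag_of_bigrams(question, {"wine": "noun.food"}))
```

Generalizing words to coarse tags makes bigrams match more often across questions and answers, at the cost of depending on the tagger's accuracy.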

WordNet

- Wherever applicable, we explore different syntactic and semantic representations of the textual content, e.g., extracting the dependency-based representation of the text or generalizing words to their WordNet supersenses (WNSS) (Ciaramita and Altun, 2006).Page 3, “Approach”

- In all these representations we skip stop words and normalize all words to their WordNet lemmas.Page 4, “Approach”

- Each word was morphologically simplified using the morphological functions of the WordNet library.Page 5, “The Corpus”

- These tags, defined by WordNet lexicographers, provide a broad semantic categorization for nouns and verbs and include labels for nouns such as food, animal, body and feeling, and for verbs labels such as communication, contact, and possession.Page 5, “The Corpus”

Perceptron

- For this reason we choose as a ranking algorithm the Perceptron which is both accurate and efficient and can be trained with online protocols.Page 3, “Approach”

- Specifically, we implement the ranking Perceptron proposed by Shen and Joshi (2005), which reduces the ranking problem to a binary classification problem.Page 3, “Approach”

- For regularization purposes, we use as a final model the average of all Perceptron models positedPage 3, “Approach”
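
The idea of reducing ranking to binary classification and averaging the models can be illustrated with a small pairwise perceptron. This is a generic sketch, not the exact algorithm of Shen and Joshi (2005): the update rule, the unit learning rate, and the data layout (one correct answer plus a list of incorrect ones per question) are assumptions.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def dot(w: Vector, x: Vector) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def train_averaged_ranking_perceptron(
    examples: Sequence[Tuple[Vector, List[Vector]]],
    epochs: int = 5,
) -> Vector:
    """Each example is (features of the correct answer, features of wrong answers)."""
    dim = len(examples[0][0])
    w = [0.0] * dim
    total = [0.0] * dim  # running sum of all intermediate models
    steps = 0
    for _ in range(epochs):
        for good, bad_list in examples:
            for bad in bad_list:
                # Binary problem: the difference vector should score > 0.
                diff = [g - b for g, b in zip(good, bad)]
                if dot(w, diff) <= 0:
                    w = [wi + di for wi, di in zip(w, diff)]
                total = [ti + wi for ti, wi in zip(total, w)]
                steps += 1
    # The final model is the average of the model after every step.
    return [ti / steps for ti in total]


examples = [
    ([1.0, 0.0], [[0.0, 1.0]]),
    ([0.9, 0.2], [[0.1, 0.8]]),
]
w = train_averaged_ranking_perceptron(examples)
print(w)  # [1.0, -1.0]
```

At prediction time the candidate answers of a question would simply be sorted by `dot(w, features)`; the sketch assumes at least one (correct, incorrect) pair so that `steps` is non-zero.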

Question Answering

- The problem of Question Answering (QA) has received considerable attention in the past few years.Page 1, “Introduction”

- Since our focus is on exploring the usability of the answer content, we do not perform retrieval by finding similar questions already answered (Jeon et al., 2005), i.e., our answer collection C contains only the site’s answers without the corresponding questions answered.Page 2, “Approach”

- We compute the Pointwise Mutual Information (PMI) and Chi-square (χ²) association measures between each question-answer word pair in the query-log corpus.Page 5, “Approach”
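
Both association measures can be computed from simple co-occurrence counts. The sketch below uses the standard textbook definitions over a 2x2 contingency table; how co-occurrence is actually counted in the query-log corpus is not stated in the excerpt, so the count arguments and the example numbers are assumptions.

```python
import math


def pmi(n_qa: int, n_q: int, n_a: int, n: int) -> float:
    """Pointwise mutual information: log P(q, a) / (P(q) * P(a))."""
    return math.log((n_qa * n) / (n_q * n_a))


def chi_square(n_qa: int, n_q: int, n_a: int, n: int) -> float:
    """Pearson chi-square statistic for a 2x2 contingency table."""
    o11 = n_qa                    # q and a occur together
    o12 = n_q - n_qa              # q without a
    o21 = n_a - n_qa              # a without q
    o22 = n - n_q - n_a + n_qa    # neither q nor a
    numerator = n * (o11 * o22 - o12 * o21) ** 2
    denominator = (o11 + o12) * (o11 + o21) * (o12 + o22) * (o21 + o22)
    return numerator / denominator


# Hypothetical counts: 100 entries, q in 10, a in 20, both in 8.
print(pmi(8, 10, 20, 100))         # log(4)
print(chi_square(8, 10, 20, 100))  # 25.0
```

PMI tends to favor rare pairs, while chi-square takes the full contingency table into account, which is one reason for using both.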

tree kernels

- Counting the number of matched dependencies is essentially a simplified tree kernel for QA (e.g., see (Moschitti et al., 2007)) matching only trees of depth 2.Page 4, “Approach”

- Experiments with full dependency tree kernels based on several variants of the convolution kernels of Collins and Duffy (2001) did not yield improvements.Page 4, “Approach”

- We conjecture that the mistakes of the syntactic parser may be amplified in tree kernels, which consider an exponential number of sub-trees.Page 4, “Approach”
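
Counting matched dependencies, as opposed to running a full tree kernel, can be sketched in a few lines. The sketch assumes that a parser has already produced the dependencies as (head, modifier) pairs of lemmas and that each distinct dependency is counted once; both points are assumptions, and the example pairs are made up.

```python
from typing import Iterable, Tuple

Dependency = Tuple[str, str]  # (head lemma, modifier lemma)


def matched_dependencies(
    question_deps: Iterable[Dependency],
    answer_deps: Iterable[Dependency],
) -> int:
    """Number of distinct head-modifier pairs shared by question and answer."""
    return len(set(question_deps) & set(answer_deps))


q_deps = [("remove", "stain"), ("stain", "wine"), ("wine", "red")]
a_deps = [("stain", "wine"), ("wine", "red"), ("use", "salt")]
print(matched_dependencies(q_deps, a_deps))  # 2
```

Because only single head-modifier pairs are compared, one parsing error affects at most the pairs it touches, whereas a kernel over all sub-trees would propagate it to every sub-tree containing the wrong edge.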
