Article Structure

Abstract

Manually annotated corpora are valuable but scarce resources, yet for many annotation tasks such as treebanking and sequence labeling there exist multiple corpora with diflerent and incompatible annotation guidelines or standards.

Introduction

Much of statistical NLP research relies on some sort of manually annotated corpora to train their models, but these resources are extremely expensive to build, especially at a large scale, for example in treebanking (Marcus et al., 1993).

Segmentation and Tagging as Character Classification

Before describing the adaptation algorithm, we give a brief introduction of the baseline character classification strategy for segmentation, as well as joint segmenation and tagging (henceforth “Joint S&T”).

Automatic Annotation Adaptation

From this section, several shortened forms are adopted for representation inconvenience.

Related Works

Co-training (Sarkar, 2001) and classifier combination (Nivre and McDonald, 2008) are two technologies for training improved dependency parsers.

Experiments

Our adaptation experiments are conducted from People’s Daily (PD) to Penn Chinese Treebank 5.0 (CTB).

Conclusion and Future Works

This paper presents an automatic annotation adaptation strategy, and conducts experiments on a classic problem: word segmentation and Joint

Topics

word segmentation

- We test the efficacy of this method in the context of Chinese word segmentation and part-of-speech tagging, where no segmentation and POS tagging standards are widely accepted due to the lack of morphology in Chinese.Page 1, “Abstract”

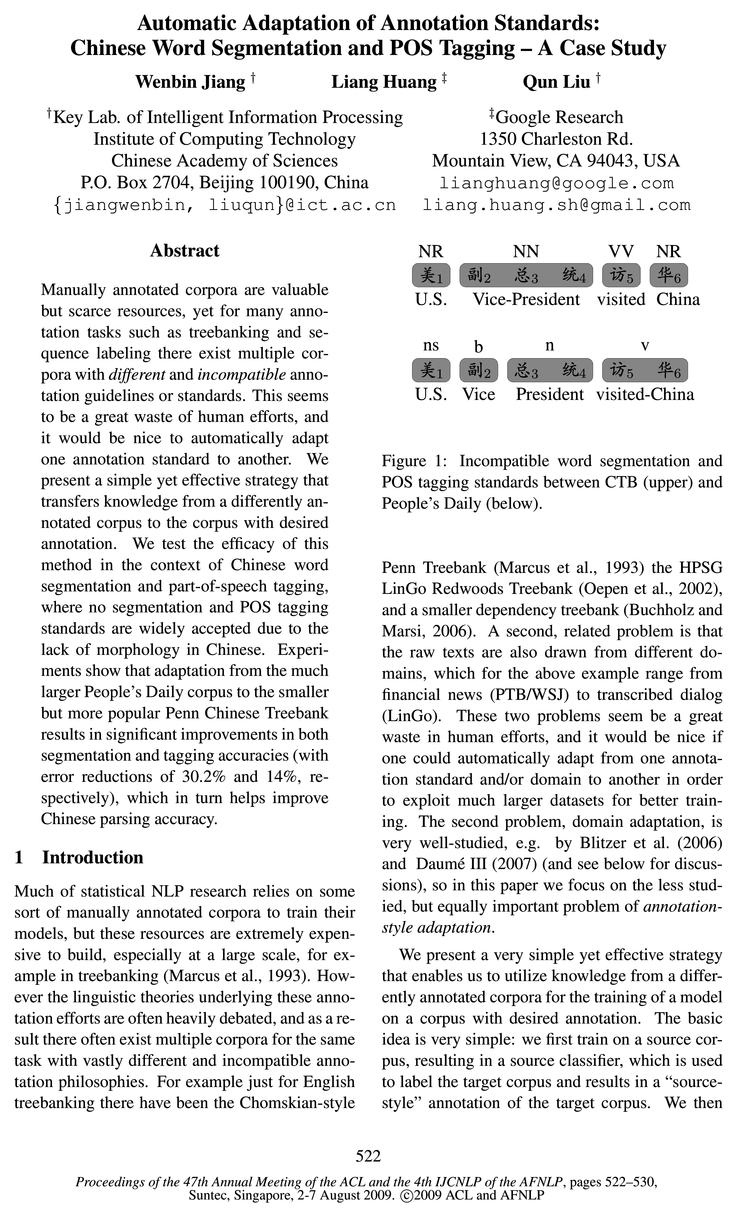

- Figure l: Incompatible word segmentation and POS tagging standards between CTB (upper) and People’s Daily (below).Page 1, “Introduction”

- To test the efficacy of our method we choose Chinese word segmentation and part-of-speech tagging, where the problem of incompatible annotation standards is one of the most evident: so far no segmentation standard is widely accepted due to the lack of a clear definition of Chinese words, and the (almost complete) lack of morphology results in much bigger ambiguities and heavy debates in tagging philosophies for Chinese parts-of-speech.Page 2, “Introduction”

- In addition, the improved accuracies from segmentation and tagging also lead to an improved parsing accuracy on CTB, reducing 38% of the error propagation from word segmentation to parsing.Page 2, “Introduction”

- In the rest of the paper we first briefly review the popular classification-based method for word segmentation and tagging (Section 2), and then describe our idea of annotation adaptation (Section 3).Page 2, “Introduction”

- 01 02 .. On where C,- is a character, word segmentation aims to split the sequence into m(§ n) words: 01161 Cel+lzeg -- Cem_1+1:emPage 2, “Segmentation and Tagging as Character Classification”

- Xue and Shen (2003) describe for the first time the character classification approach for Chinese word segmentation , Where each character is given a boundary tag denoting its relative position in a word.Page 3, “Segmentation and Tagging as Character Classification”

- In addition, Ng and Low (2004) find that, compared with POS tagging after word segmentation , Joint S&T can achieve higher accuracy on both segmentation and POS tagging.Page 3, “Segmentation and Tagging as Character Classification”

- For word segmentation only, there are four boundary tags:Page 3, “Segmentation and Tagging as Character Classification”

- It is an online training algorithm and has been successfully used in many NLP tasks, such as POS tagging (Collins, 2002), parsing (Collins and Roark, 2004), Chinese word segmentation (Zhang and Clark, 2007; J iang et al., 2008), and so on.Page 3, “Segmentation and Tagging as Character Classification”

- Considering that word segmentation and Joint S&T can be conducted in the same character classification manner, we can design an unified standard adaptation framework for the two tasks, by taking the source classifier’s classification result as the guide information for the target classifier’s classification decision.Page 4, “Automatic Annotation Adaptation”

See all papers in Proc. ACL 2009 that mention word segmentation.

See all papers in Proc. ACL that mention word segmentation.

Back to top.

POS tagging

- We test the efficacy of this method in the context of Chinese word segmentation and part-of-speech tagging, where no segmentation and POS tagging standards are widely accepted due to the lack of morphology in Chinese.Page 1, “Abstract”

- Figure l: Incompatible word segmentation and POS tagging standards between CTB (upper) and People’s Daily (below).Page 1, “Introduction”

- Our experiments show that adaptation from PD to CTB results in a significant improvement in segmentation and POS tagging , with error reductions of 30.2% and 14%, respectively.Page 2, “Introduction”

- While in Joint S&T, each word is further annotated with a POS tag:Page 3, “Segmentation and Tagging as Character Classification”

- Where tk(l<: = 1..m) denotes the POS tag for the word Cek_1+1;ek.Page 3, “Segmentation and Tagging as Character Classification”

- In Ng and Low (2004), Joint S&T can also be treated as a character classification problem, Where a boundary tag is combined with a POS tag in order to give the POS information of the word containing these characters.Page 3, “Segmentation and Tagging as Character Classification”

- In addition, Ng and Low (2004) find that, compared with POS tagging after word segmentation, Joint S&T can achieve higher accuracy on both segmentation and POS tagging .Page 3, “Segmentation and Tagging as Character Classification”

- while for Joint S&T, a POS tag is attached to the tail of a boundary tag, to incorporate the word boundary information and POS information together.Page 3, “Segmentation and Tagging as Character Classification”

- It is an online training algorithm and has been successfully used in many NLP tasks, such as POS tagging (Collins, 2002), parsing (Collins and Roark, 2004), Chinese word segmentation (Zhang and Clark, 2007; J iang et al., 2008), and so on.Page 3, “Segmentation and Tagging as Character Classification”

- For example, currently, most Chinese constituency and dependency parsers are trained on some version of CTB, using its segmentation and POS tagging as the defacto standards.Page 6, “Experiments”

- Therefore, we expect the knowledge adapted from PD will lead to more precise CTB-style segmenter and POS tagger , which would in turn reduce the error propagation to parsing (and translation).Page 6, “Experiments”

See all papers in Proc. ACL 2009 that mention POS tagging.

See all papers in Proc. ACL that mention POS tagging.

Back to top.

Treebank

- Manually annotated corpora are valuable but scarce resources, yet for many annotation tasks such as treebanking and sequence labeling there exist multiple corpora with diflerent and incompatible annotation guidelines or standards.Page 1, “Abstract”

- Experiments show that adaptation from the much larger People’s Daily corpus to the smaller but more popular Penn Chinese Treebank results in significant improvements in both segmentation and tagging accuracies (with error reductions of 30.2% and 14%, respectively), which in turn helps improve Chinese parsing accuracy.Page 1, “Abstract”

- Much of statistical NLP research relies on some sort of manually annotated corpora to train their models, but these resources are extremely expensive to build, especially at a large scale, for example in treebanking (Marcus et al., 1993).Page 1, “Introduction”

- For example just for English treebanking there have been the Chomskian-stylePage 1, “Introduction”

- Penn Treebank (Marcus et al., 1993) the HPSG LinGo Redwoods Treebank (Oepen et al., 2002), and a smaller dependency treebank (Buchholz and Marsi, 2006).Page 1, “Introduction”

- Annotation-style adaptation, however, tackles the problem where the guideline itself is changed, for example, one treebank might distinguish between transitive and intransitive verbs, while merging the different noun types (NN, NNS, etc.Page 2, “Introduction”

- ), and for example one treebank (PTB) might be much flatter than the other (LinGo), not to mention the fundamental disparities between their underlying linguistic representations (CFG vs. HPSG).Page 2, “Introduction”

- The two corpora used in this study are the much larger People’s Daily (PD) (5.86M words) corpus (Yu et al., 2001) and the smaller but more popular Penn Chinese Treebank (CTB) (0.47M words) (Xue et al., 2005).Page 2, “Introduction”

- In addition, many efforts have been devoted to manual treebank adaptation, where they adapt PTB to other grammar formalisms, such as such as CCG and LFG (Hockenmaier and Steedman, 2008; Cahill and Mccarthy, 2007).Page 5, “Related Works”

- Our adaptation experiments are conducted from People’s Daily (PD) to Penn Chinese Treebank 5.0 (CTB).Page 6, “Experiments”

- Especially, we will pay efforts to the annotation standard adaptation between different treebanks, for example, from HPSG LinGo Redwoods Treebank to PTB, or even from a dependency treebank to PTB, in order to obtain more powerful PTB annotation-style parsers.Page 8, “Conclusion and Future Works”

See all papers in Proc. ACL 2009 that mention Treebank.

See all papers in Proc. ACL that mention Treebank.

Back to top.

F-measure

- For word segmentation, the model after annotation adaptation (row 4 in upper subtable) achieves an F-measure increment of 0.8 points over the baseline model, corresponding to an error reduction of 30.2%; while for Joint S&T, the F-measure increment of the adapted model (row 4 in subtable below) is 1 point, which corresponds to an error reduction of 14%.Page 7, “Experiments”

- Note that if we input the gold-standard segmented test set into the parser, the F-measure under the two definitions are the same.Page 7, “Experiments”

- The parsing F-measure corresponding to the gold-standard segmentation, 82.35, represents the “oracle” accuracy (i.e., upperbound) of parsing on top of automatic word segmention.Page 7, “Experiments”

- After integrating the knowledge from PD, the enhanced word segmenter gains an F-measure increment of 0.8 points, which indicates that 38% of the error propagation from word segmentation to parsing is reduced by our annotation adaptation strategy.Page 7, “Experiments”

- It obtains considerable F-measure increment, about 0.8 point for word segmentation and 1 point for Joint S&T, with corresponding error reductions of 30.2% and 14%.Page 8, “Conclusion and Future Works”

- Moreover, such improvement further brings striking F-measure increment for Chinese parsing, about 0.8 points, corresponding to an error propagation reduction of 38%.Page 8, “Conclusion and Future Works”

See all papers in Proc. ACL 2009 that mention F-measure.

See all papers in Proc. ACL that mention F-measure.

Back to top.

perceptron

- Algorithm 1 Perceptron training algorithm.Page 3, “Segmentation and Tagging as Character Classification”

- Several classification models can be adopted here, however, we choose the averaged perceptron algorithm (Collins, 2002) because of its simplicity and high accuracy.Page 3, “Segmentation and Tagging as Character Classification”

- 5.1 Baseline Perceptron ClassifierPage 6, “Experiments”

- We first report experimental results of the single perceptron classifier on CTB 5.0.Page 6, “Experiments”

- The first 3 rows in each subtable of Table 3 show the performance of the single perceptronPage 6, “Experiments”

- Figure 4: Averaged perceptron learning curves for segmentation and Joint S&T.Page 6, “Experiments”

See all papers in Proc. ACL 2009 that mention perceptron.

See all papers in Proc. ACL that mention perceptron.

Back to top.

Chinese word

- We test the efficacy of this method in the context of Chinese word segmentation and part-of-speech tagging, where no segmentation and POS tagging standards are widely accepted due to the lack of morphology in Chinese.Page 1, “Abstract”

- To test the efficacy of our method we choose Chinese word segmentation and part-of-speech tagging, where the problem of incompatible annotation standards is one of the most evident: so far no segmentation standard is widely accepted due to the lack of a clear definition of Chinese words , and the (almost complete) lack of morphology results in much bigger ambiguities and heavy debates in tagging philosophies for Chinese parts-of-speech.Page 2, “Introduction”

- where each subsequence Cm- indicates a Chinese word spanning from characters 0,- to Cj (both in-Page 2, “Segmentation and Tagging as Character Classification”

- Xue and Shen (2003) describe for the first time the character classification approach for Chinese word segmentation, Where each character is given a boundary tag denoting its relative position in a word.Page 3, “Segmentation and Tagging as Character Classification”

- It is an online training algorithm and has been successfully used in many NLP tasks, such as POS tagging (Collins, 2002), parsing (Collins and Roark, 2004), Chinese word segmentation (Zhang and Clark, 2007; J iang et al., 2008), and so on.Page 3, “Segmentation and Tagging as Character Classification”

See all papers in Proc. ACL 2009 that mention Chinese word.

See all papers in Proc. ACL that mention Chinese word.

Back to top.

dependency parsers

- This coincides with the stacking method for combining dependency parsers (Martins et al., 2008; Nivre and McDon-Page 4, “Automatic Annotation Adaptation”

- This is similar to feature design in discriminative dependency parsing (McDonald et al., 2005; Mc-Page 4, “Automatic Annotation Adaptation”

- Co-training (Sarkar, 2001) and classifier combination (Nivre and McDonald, 2008) are two technologies for training improved dependency parsers .Page 5, “Related Works”

- The classifier combination lets graph-based and transition-based dependency parsers to utilize the features extracted from each other’s parsing results, to obtain combined, enhanced parsers.Page 5, “Related Works”

- For example, currently, most Chinese constituency and dependency parsers are trained on some version of CTB, using its segmentation and POS tagging as the defacto standards.Page 6, “Experiments”

See all papers in Proc. ACL 2009 that mention dependency parsers.

See all papers in Proc. ACL that mention dependency parsers.

Back to top.

domain adaptation

- The second problem, domain adaptation , is very well-studied, e.g.Page 1, “Introduction”

- This method is very similar to some ideas in domain adaptation (Daume III and Marcu, 2006; Daume III, 2007), but we argue that the underlying problems are quite different.Page 2, “Introduction”

- Domain adaptation assumes the labeling guidelines are preserved between the two domains, e.g., an adjective is always labeled as JJ regardless of from Wall Street Journal (WSJ) or Biomedical texts, and only the distributions are different, e. g., the word “control” is most likely a verb in WSJ but often a noun in Biomedical texts (as in “control experiment”).Page 2, “Introduction”

- ald, 2008), and is also similar to the Pred baseline for domain adaptation in (Daumé III and Marcu, 2006; Daumé III, 2007).Page 4, “Automatic Annotation Adaptation”

- We are especially grateful to Fernando Pereira and the anonymous reviewers for pointing us to relevant domain adaption references.Page 8, “Conclusion and Future Works”

See all papers in Proc. ACL 2009 that mention domain adaptation.

See all papers in Proc. ACL that mention domain adaptation.

Back to top.

Chinese word segmentation

- We test the efficacy of this method in the context of Chinese word segmentation and part-of-speech tagging, where no segmentation and POS tagging standards are widely accepted due to the lack of morphology in Chinese.Page 1, “Abstract”

- To test the efficacy of our method we choose Chinese word segmentation and part-of-speech tagging, where the problem of incompatible annotation standards is one of the most evident: so far no segmentation standard is widely accepted due to the lack of a clear definition of Chinese words, and the (almost complete) lack of morphology results in much bigger ambiguities and heavy debates in tagging philosophies for Chinese parts-of-speech.Page 2, “Introduction”

- Xue and Shen (2003) describe for the first time the character classification approach for Chinese word segmentation , Where each character is given a boundary tag denoting its relative position in a word.Page 3, “Segmentation and Tagging as Character Classification”

- It is an online training algorithm and has been successfully used in many NLP tasks, such as POS tagging (Collins, 2002), parsing (Collins and Roark, 2004), Chinese word segmentation (Zhang and Clark, 2007; J iang et al., 2008), and so on.Page 3, “Segmentation and Tagging as Character Classification”

See all papers in Proc. ACL 2009 that mention Chinese word segmentation.

See all papers in Proc. ACL that mention Chinese word segmentation.

Back to top.

developing set

- The data splitting convention of other two corpora, People’s Daily doesn’t reserve the development sets , so in the following experiments, we simply choose the model after 7 iterations when training on this corpus.Page 6, “Experiments”

- Table 4: Error analysis for Joint S&T on the developing set of CTB.Page 7, “Experiments”

- To obtain further information about what kind of errors be alleviated by annotation adaptation, we conduct an initial error analysis for Joint S&T on the developing set of CTB.Page 7, “Experiments”

- From Table 4 we find that out of 30 word clusters appeared in the developing set of CTB, 13 clusters benefit from the annotation adaptation strategy, while 4 clusters suffer from it.Page 7, “Experiments”

See all papers in Proc. ACL 2009 that mention developing set.

See all papers in Proc. ACL that mention developing set.

Back to top.

gold-standard

- Input Type Parsing F1 % gold-standard segmentation 82.35 baseline segmentation 80.28 adapted segmentation 81.07Page 7, “Experiments”

- Note that if we input the gold-standard segmented test set into the parser, the F-measure under the two definitions are the same.Page 7, “Experiments”

- The parsing F-measure corresponding to the gold-standard segmentation, 82.35, represents the “oracle” accuracy (i.e., upperbound) of parsing on top of automatic word segmention.Page 7, “Experiments”

See all papers in Proc. ACL 2009 that mention gold-standard.

See all papers in Proc. ACL that mention gold-standard.

Back to top.

model trained

- While in the subtable below, JST F1 is also undefined since the model trained on PD gives a POS set different from that of CTB.Page 6, “Experiments”

- We also see that for both segmentation and Joint S&T, the performance sharply declines when a model trained on PD is tested on CTB (row 2 in each subtable).Page 6, “Experiments”

- This obviously fall behind those of the models trained on CTB itself (row 3 in each subtable), about 97% F1, which are used as the baselines of the following annotation adaptation experiments.Page 6, “Experiments”

See all papers in Proc. ACL 2009 that mention model trained.

See all papers in Proc. ACL that mention model trained.

Back to top.

sequence labeling

- Manually annotated corpora are valuable but scarce resources, yet for many annotation tasks such as treebanking and sequence labeling there exist multiple corpora with diflerent and incompatible annotation guidelines or standards.Page 1, “Abstract”

- We envision this technique to be general and widely applicable to many other sequence labeling tasks.Page 2, “Introduction”

- Similar to the situation in other sequence labeling problems, the training procedure is to learn a discriminative model mapping from inputs SE 6 X to outputs y E Y, where X is the set of sentences in the training corpus and Y is the set of corresponding labelled results.Page 3, “Segmentation and Tagging as Character Classification”

See all papers in Proc. ACL 2009 that mention sequence labeling.

See all papers in Proc. ACL that mention sequence labeling.

Back to top.