Article Structure

Abstract

Minimum Error Rate Training (MERT) and Minimum Bayes-Risk (MBR) decoding are used in most current state-of—the-art Statistical Machine Translation (SMT) systems.

Introduction

Statistical Machine Translation (SMT) systems have improved considerably by directly using the error criterion in both training and decoding.

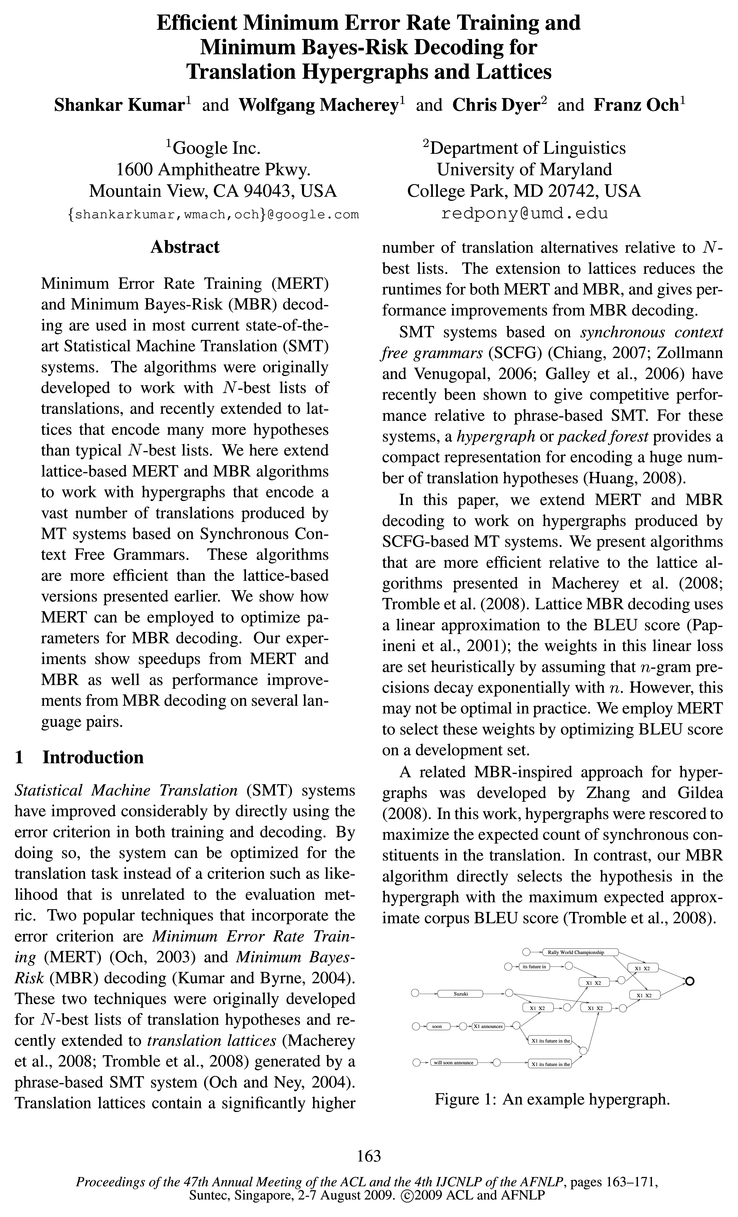

Translation Hypergraphs

A translation lattice compactly encodes a large number of hypotheses produced by a phrase-based SMT system.

Minimum Error Rate Training

Given a set of source sentences F15 with corresponding reference translations R5, the objective of MERT is to find a parameter set M” which minimizes an automated evaluation criterion under a linear model:

Minimum Bayes-Risk Decoding

We first review Minimum Bayes-Risk (MBR) decoding for statistical MT.

MERT for MBR Parameter Optimization

Lattice MBR Decoding (Equation 3) assumes a linear form for the gain function (Equation 2).

Experiments

We now describe our experiments to evaluate MERT and MBR on lattices and hypergraphs, and show how MERT can be used to tune MBR parameters.

Discussion

We have presented efficient algorithms which extend previous work on lattice-based MERT (Macherey et al., 2008) and MBR decoding (Tromble et al., 2008) to work with hypergraphs.

Topics

n-gram

- Lattice MBR decoding uses a linear approximation to the BLEU score (Pap-ineni et al., 2001); the weights in this linear loss are set heuristically by assuming that n-gram pre-cisions decay exponentially with n. However, this may not be optimal in practice.Page 1, “Introduction”

- They approximated log(BLEU) score by a linear function of n-gram matches and candidate length.Page 4, “Minimum Bayes-Risk Decoding”

- where w is an n-gram present in either E or E’, and 60, 61, ..., 6 N are weights which are determined empirically, where N is the maximum n-gram order.Page 4, “Minimum Bayes-Risk Decoding”

- Next, the posterior probability of each n-gram is computed.Page 4, “Minimum Bayes-Risk Decoding”

- A new automaton is then created by intersecting each n-gram with weight (from Equation 2) to an unweighted lattice.Page 4, “Minimum Bayes-Risk Decoding”

- The above steps are carried out one n-gram at a time.Page 4, “Minimum Bayes-Risk Decoding”

- The key idea behind this new algorithm is to rewrite the n-gram posterior probability (Equation 4) as follows:Page 5, “Minimum Bayes-Risk Decoding”

- p(wlg> = Z Z f(e, w, E>P(EIF> (5) E69 66E where f (e, w, E) is a score assigned to edge e on path E containing n-gram w:Page 5, “Minimum Bayes-Risk Decoding”

- We therefore approximate the quantity f(e, w, E) with f*(e, 212, Q) that counts the edge 6 with n-gram 212 that has the highest arc posterior probability relative to predecessors in the entire lattice Q. f *(e, 212, Q) can be computed locally, and the n-gram posterior probability based on f * can be determined as follows:Page 5, “Minimum Bayes-Risk Decoding”

- For each node 75 in the lattice, we maintain a quantity Score(w, t) for each n-gram 212 that lies on a path from the source node to t. Score(w, t) is the highest posterior probability among all edges on the paths that terminate on t and contain n-gram w. The forward pass requires computing the n-grams introduced by each edge; to do this, we propagate n-grams (up to maximum order — l) terminating on each node.Page 5, “Minimum Bayes-Risk Decoding”

- of each n-gram : for each edge e do Compute edge posterior probability P(e\g Compute 71- gram posterior probs.Page 5, “Minimum Bayes-Risk Decoding”

See all papers in Proc. ACL 2009 that mention n-gram.

See all papers in Proc. ACL that mention n-gram.

Back to top.

BLEU

- Lattice MBR decoding uses a linear approximation to the BLEU score (Pap-ineni et al., 2001); the weights in this linear loss are set heuristically by assuming that n-gram pre-cisions decay exponentially with n. However, this may not be optimal in practice.Page 1, “Introduction”

- We employ MERT to select these weights by optimizing BLEU score on a development set.Page 1, “Introduction”

- In contrast, our MBR algorithm directly selects the hypothesis in the hypergraph with the maximum expected approximate corpus BLEU score (Tromble et al., 2008).Page 1, “Introduction”

- This reranking can be done for any sentence-level loss function such as BLEU (Papineni et al., 2001), Word Error Rate, or Position-independent Error Rate.Page 4, “Minimum Bayes-Risk Decoding”

- (2008) extended MBR decoding to translation lattices under an approximate BLEU score.Page 4, “Minimum Bayes-Risk Decoding”

- They approximated log( BLEU ) score by a linear function of n-gram matches and candidate length.Page 4, “Minimum Bayes-Risk Decoding”

- However, this does not guarantee that the resulting linear score (Equation 2) is close to the corpus BLEU .Page 6, “MERT for MBR Parameter Optimization”

- We now describe how MERT can be used to estimate these factors to achieve a better approximation to the corpus BLEU .Page 6, “MERT for MBR Parameter Optimization”

- We recall that MERT selects weights in a linear model to optimize an error criterion (e. g. corpus BLEU ) on a training set.Page 6, “MERT for MBR Parameter Optimization”

- The linear approximation to BLEU may not hold in practice for unseen test sets or language-pairs.Page 6, “MERT for MBR Parameter Optimization”

- We now have a total of N +2 feature functions which we optimize using MERT to obtain highest BLEU score on a training set.Page 6, “MERT for MBR Parameter Optimization”

See all papers in Proc. ACL 2009 that mention BLEU.

See all papers in Proc. ACL that mention BLEU.

Back to top.

phrase-based

- These two techniques were originally developed for N -best lists of translation hypotheses and recently extended to translation lattices (Macherey et al., 2008; Tromble et al., 2008) generated by a phrase-based SMT system (Och and Ney, 2004).Page 1, “Introduction”

- SMT systems based on synchronous context free grammars (SCFG) (Chiang, 2007; Zollmann and Venugopal, 2006; Galley et al., 2006) have recently been shown to give competitive performance relative to phrase-based SMT.Page 1, “Introduction”

- A translation lattice compactly encodes a large number of hypotheses produced by a phrase-based SMT system.Page 2, “Translation Hypergraphs”

- Our phrase-based statistical MT system is similar to the alignment template system described in (Och and Ney, 2004; Tromble et al., 2008).Page 6, “Experiments”

- We also train two SCFG—based MT systems: a hierarchical phrase-based SMT (Chiang, 2007) system and a syntax augmented machine translation (SAMT) system using the approach described in Zollmann and Venugopal (2006).Page 6, “Experiments”

- Both systems are built on top of our phrase-based systems.Page 6, “Experiments”

- We first compare the new lattice MBR (Algorithm 3) with MBR decoding on 1000-best lists and FSAMBR (Tromble et al., 2008) on lattices generated by the phrase-based systems; evaluation is done using both BLEU and average runtime per sentence (Table 3).Page 7, “Experiments”

- Table 3: Lattice MBR for a phrase-based system.Page 7, “Experiments”

- We report results on nist03 set and present three systems for each language pair: phrase-based (pb), hierarchical (hier), and SAMT; Lattice MBR is done for the phrase-based system while HGMBR is used for the other two.Page 7, “Experiments”

- For the multi-language case, we train phrase-based systems and perform lattice MBR for all language pairs.Page 7, “Experiments”

- We believe that our efficient algorithms will make them more widely applicable in both SCFG—based and phrase-based MT systems.Page 8, “Discussion”

See all papers in Proc. ACL 2009 that mention phrase-based.

See all papers in Proc. ACL that mention phrase-based.

Back to top.

language pairs

- Our experiments show speedups from MERT and MBR as well as performance improvements from MBR decoding on several language pairs .Page 1, “Abstract”

- We report results on nist03 set and present three systems for each language pair : phrase-based (pb), hierarchical (hier), and SAMT; Lattice MBR is done for the phrase-based system while HGMBR is used for the other two.Page 7, “Experiments”

- For the multi-language case, we train phrase-based systems and perform lattice MBR for all language pairs .Page 7, “Experiments”

- When we optimize MBR features with MERT, the number of language pairs with gains/no changes/-drops is 22/5/12.Page 8, “Experiments”

- We hypothesize that the default MBR parameters are suboptimal for some language pairs and that MERT helps to find better parameter settings.Page 8, “Experiments”

- In particular, MERT avoids the need for manually tuning these parameters by language pair .Page 8, “Experiments”

- This may not be optimal in practice for unseen test sets and language pairs , and the resulting linear loss may be quite different from the corpus level BLEU.Page 8, “Discussion”

- On an experiment with 40 language pairs , we obtain improvements on 26 pairs, no difference on 8 pairs and drops on 5 pairs.Page 8, “Discussion”

- This was achieved without any need for manual tuning for each language pair .Page 8, “Discussion”

- The baseline model cost feature helps the algorithm effectively back off to the MAP translation in language pairs where MBR features alone would not have helped.Page 8, “Discussion”

See all papers in Proc. ACL 2009 that mention language pairs.

See all papers in Proc. ACL that mention language pairs.

Back to top.

MT systems

- We here extend lattice-based MERT and MBR algorithms to work with hypergraphs that encode a vast number of translations produced by MT systems based on Synchronous Context Free Grammars.Page 1, “Abstract”

- In this paper, we extend MERT and MBR decoding to work on hypergraphs produced by SCFG—based MT systems .Page 1, “Introduction”

- MBR decoding for translation can be performed by reranking an N -best list of hypotheses generated by an MT system (Kumar and Byme, 2004).Page 4, “Minimum Bayes-Risk Decoding”

- We next extend the Lattice MBR decoding algorithm (Algorithm 3) to rescore hypergraphs produced by a SCFG based MT system .Page 5, “Minimum Bayes-Risk Decoding”

- 6.2 MT System DescriptionPage 6, “Experiments”

- Our phrase-based statistical MT system is similar to the alignment template system described in (Och and Ney, 2004; Tromble et al., 2008).Page 6, “Experiments”

- We also train two SCFG—based MT systems : a hierarchical phrase-based SMT (Chiang, 2007) system and a syntax augmented machine translation (SAMT) system using the approach described in Zollmann and Venugopal (2006).Page 6, “Experiments”

- On hypergraphs produced by Hierarchical and Syntax Augmented MT systems , our MBR algorithm gives a 7X speedup relative to 1000-best MBR while giving comparable or even better performance.Page 8, “Discussion”

- We believe that our efficient algorithms will make them more widely applicable in both SCFG—based and phrase-based MT systems .Page 8, “Discussion”

See all papers in Proc. ACL 2009 that mention MT systems.

See all papers in Proc. ACL that mention MT systems.

Back to top.

NIST

- The first one is the constrained data track of the NIST Arabic-to-English (aren) and Chinese-to-English (zhen) translation taskl.Page 6, “Experiments”

- Table 1: Statistics over the NIST dev/test sets.Page 6, “Experiments”

- Our development set (dev) consists of the NIST 2005 eval set; we use this set for optimizing MBR parameters.Page 6, “Experiments”

- We report results on NIST 2002 and NIST 2003 evaluation sets.Page 6, “Experiments”

- Table 5 shows results for NIST systems.Page 7, “Experiments”

- We observed in the NIST systems that MERT resulted in short translations relative to MAP on the unseen test set.Page 8, “Experiments”

- In the NIST systems, MERT yields small improvements on top of MBR with default parameters.Page 8, “Experiments”

- Thus, MERT has a bigger impact here than in the NIST systems.Page 8, “Experiments”

- Table 5: MBR Parameter Tuning on NIST systemsPage 8, “Discussion”

See all papers in Proc. ACL 2009 that mention NIST.

See all papers in Proc. ACL that mention NIST.

Back to top.

BLEU score

- Lattice MBR decoding uses a linear approximation to the BLEU score (Pap-ineni et al., 2001); the weights in this linear loss are set heuristically by assuming that n-gram pre-cisions decay exponentially with n. However, this may not be optimal in practice.Page 1, “Introduction”

- We employ MERT to select these weights by optimizing BLEU score on a development set.Page 1, “Introduction”

- In contrast, our MBR algorithm directly selects the hypothesis in the hypergraph with the maximum expected approximate corpus BLEU score (Tromble et al., 2008).Page 1, “Introduction”

- (2008) extended MBR decoding to translation lattices under an approximate BLEU score .Page 4, “Minimum Bayes-Risk Decoding”

- We now have a total of N +2 feature functions which we optimize using MERT to obtain highest BLEU score on a training set.Page 6, “MERT for MBR Parameter Optimization”

- MERT is then performed to optimize the BLEU score on a development set; For MERT, we use 40 random initial parameters as well as parameters computed using corpus based statistics (Tromble et al., 2008).Page 7, “Experiments”

- We consider a BLEU score difference to be a) gain if is at least 0.2 points, b) drop if it is at most -0.2 points, and c) no change otherwise.Page 7, “Experiments”

- When MBR does not produce a higher BLEU score relative to MAP on the development set, MERT assigns a higher weight to this feature function.Page 8, “Experiments”

See all papers in Proc. ACL 2009 that mention BLEU score.

See all papers in Proc. ACL that mention BLEU score.

Back to top.

development set

- We employ MERT to select these weights by optimizing BLEU score on a development set .Page 1, “Introduction”

- Our development set (dev) consists of the NIST 2005 eval set; we use this set for optimizing MBR parameters.Page 6, “Experiments”

- MERT is then performed to optimize the BLEU score on a development set ; For MERT, we use 40 random initial parameters as well as parameters computed using corpus based statistics (Tromble et al., 2008).Page 7, “Experiments”

- We select the MBR scaling factor (Tromble et al., 2008) based on the development set ; it is set to 0.1, 0.01, 0.5, 0.2, 0.5 and 1.0 for the aren-phrase, aren-hier, aren-samt, zhen-phrase zhen-hier and zhen-samt systems respectively.Page 7, “Experiments”

- We tune 04 on the development set so that the brevity score of MBR translation is close to that of the MAP translation.Page 8, “Experiments”

- When MBR does not produce a higher BLEU score relative to MAP on the development set , MERT assigns a higher weight to this feature function.Page 8, “Experiments”

- In this paper, we have described how MERT can be employed to estimate the weights for the linear loss function to maximize BLEU on a development set .Page 8, “Discussion”

See all papers in Proc. ACL 2009 that mention development set.

See all papers in Proc. ACL that mention development set.

Back to top.

n-grams

- First, the set of n-grams is extracted from the lattice.Page 4, “Minimum Bayes-Risk Decoding”

- For a moderately large lattice, there can be several thousands of n-grams and the procedure becomes expensive.Page 4, “Minimum Bayes-Risk Decoding”

- For each node 75 in the lattice, we maintain a quantity Score(w, t) for each n-gram 212 that lies on a path from the source node to t. Score(w, t) is the highest posterior probability among all edges on the paths that terminate on t and contain n-gram w. The forward pass requires computing the n-grams introduced by each edge; to do this, we propagate n-grams (up to maximum order — l) terminating on each node.Page 5, “Minimum Bayes-Risk Decoding”

- This is necessary because unlike a lattice, new n-grams may be created at subsequent nodes by concatenating words both to the left and the right side of the n-gram.Page 5, “Minimum Bayes-Risk Decoding”

- As a result, we need to consider all new sequences which can be created by the crossproduct of the n-grams on the two tail nodes.Page 5, “Minimum Bayes-Risk Decoding”

- This linear function contains n + 1 parameters 60, 61, ..., 6 N, where N is the maximum order of the n-grams involved.Page 6, “MERT for MBR Parameter Optimization”

See all papers in Proc. ACL 2009 that mention n-grams.

See all papers in Proc. ACL that mention n-grams.

Back to top.

SMT system

- These two techniques were originally developed for N -best lists of translation hypotheses and recently extended to translation lattices (Macherey et al., 2008; Tromble et al., 2008) generated by a phrase-based SMT system (Och and Ney, 2004).Page 1, “Introduction”

- SMT systems based on synchronous context free grammars (SCFG) (Chiang, 2007; Zollmann and Venugopal, 2006; Galley et al., 2006) have recently been shown to give competitive performance relative to phrase-based SMT.Page 1, “Introduction”

- A translation lattice compactly encodes a large number of hypotheses produced by a phrase-based SMT system .Page 2, “Translation Hypergraphs”

- The corresponding representation for an SMT system based on SCFGs (e.g.Page 2, “Translation Hypergraphs”

- MERT and MBR decoding are popular techniques for incorporating the final evaluation metric into the development of SMT systems .Page 8, “Discussion”

See all papers in Proc. ACL 2009 that mention SMT system.

See all papers in Proc. ACL that mention SMT system.

Back to top.

Machine Translation

- Minimum Error Rate Training (MERT) and Minimum Bayes-Risk (MBR) decoding are used in most current state-of—the-art Statistical Machine Translation (SMT) systems.Page 1, “Abstract”

- Statistical Machine Translation (SMT) systems have improved considerably by directly using the error criterion in both training and decoding.Page 1, “Introduction”

- In the context of statistical machine translation , the optimization procedure was first described in Och (2003) for N -best lists and later extended to phrase-lattices in Macherey et al.Page 2, “Minimum Error Rate Training”

- We also train two SCFG—based MT systems: a hierarchical phrase-based SMT (Chiang, 2007) system and a syntax augmented machine translation (SAMT) system using the approach described in Zollmann and Venugopal (2006).Page 6, “Experiments”

See all papers in Proc. ACL 2009 that mention Machine Translation.

See all papers in Proc. ACL that mention Machine Translation.

Back to top.

Error Rate

- Minimum Error Rate Training (MERT) and Minimum Bayes-Risk (MBR) decoding are used in most current state-of—the-art Statistical Machine Translation (SMT) systems.Page 1, “Abstract”

- Two popular techniques that incorporate the error criterion are Minimum Error Rate Training (MERT) (Och, 2003) and Minimum Bayes-Risk (MBR) decoding (Kumar and Byrne, 2004).Page 1, “Introduction”

- This reranking can be done for any sentence-level loss function such as BLEU (Papineni et al., 2001), Word Error Rate, or Position-independent Error Rate .Page 4, “Minimum Bayes-Risk Decoding”

See all papers in Proc. ACL 2009 that mention Error Rate.

See all papers in Proc. ACL that mention Error Rate.

Back to top.

loss function

- This reranking can be done for any sentence-level loss function such as BLEU (Papineni et al., 2001), Word Error Rate, or Position-independent Error Rate.Page 4, “Minimum Bayes-Risk Decoding”

- Note that N -best MBR uses a sentence BLEU loss function .Page 7, “Experiments”

- In this paper, we have described how MERT can be employed to estimate the weights for the linear loss function to maximize BLEU on a development set.Page 8, “Discussion”

See all papers in Proc. ACL 2009 that mention loss function.

See all papers in Proc. ACL that mention loss function.

Back to top.

Statistical Machine Translation

- Minimum Error Rate Training (MERT) and Minimum Bayes-Risk (MBR) decoding are used in most current state-of—the-art Statistical Machine Translation (SMT) systems.Page 1, “Abstract”

- Statistical Machine Translation (SMT) systems have improved considerably by directly using the error criterion in both training and decoding.Page 1, “Introduction”

- In the context of statistical machine translation , the optimization procedure was first described in Och (2003) for N -best lists and later extended to phrase-lattices in Macherey et al.Page 2, “Minimum Error Rate Training”

See all papers in Proc. ACL 2009 that mention Statistical Machine Translation.

See all papers in Proc. ACL that mention Statistical Machine Translation.

Back to top.