Article Structure

Abstract

We propose Bilingual Tree Kernels (BTKs) to capture the structural similarities across a pair of syntactic translational equivalences and apply BTKs to subtree alignment along with some plain features.

Introduction

Syntax based Statistical Machine Translation (SMT) systems allow the translation process to be more grammatically performed, which provides decent reordering capability.

Bilingual Tree Kernels

In this section, we propose the two BTKs and study their capability and complexity in modeling the bilingual structural similarity.

Substructure Spaces for BTKs

The syntactic translational equivalences under BTKs are evaluated with respective to the substructures factorized from the candidate subtree pairs.

Topics

tree kernels

- We propose Bilingual Tree Kernels (BTKs) to capture the structural similarities across a pair of syntactic translational equivalences and apply BTKs to subtree alignment along with some plain features.Page 1, “Abstract”

- Our study reveals that the structural features embedded in a bilingual parse tree pair are very effective for subtree alignment and the bilingual tree kernels can well capture such features.Page 1, “Abstract”

- Alternatively, convolution parse tree kernels (Collins and Duffy, 2001), which implicitly explore the tree structure information, have been successfully applied in many NLP tasks, such as Semantic parsing (Moschitti, 2004) and Relation Extraction (Zhang et al.Page 2, “Introduction”

- In multilingual tasks such as machine translation, tree kernels are seldom applied.Page 2, “Introduction”

- In this paper, we propose Bilingual Tree Kernels (BTKs) to model the bilingual translational equivalences, in our case, to conduct subtree alignment.Page 2, “Introduction”

- This is motivated by the decent effectiveness of tree kernels in expressing the similarity between tree structures.Page 2, “Introduction”

- We propose two kinds of BTKs named dependent Bilingual Tree Kernel (dBTK), which takes the subtree pair as a whole and independent Bilingual Tree Kernel (iBTK), which individually models the source and the target sub-trees.Page 2, “Introduction”

- 2.1 Independent Bilingual Tree Kernel (iBTK)Page 2, “Bilingual Tree Kernels”

- In order to compute the dot product of the feature vectors in the exponentially high dimensional feature space, we introduce the tree kernel functions as follows:Page 2, “Bilingual Tree Kernels”

- The iBTK is defined as a composite kernel consisting of a source tree kernel and a target tree kernel which measures the source and the target structural similarity respectively.Page 2, “Bilingual Tree Kernels”

- Therefore, the composite kernel can be computed using the ordinary monolingual tree kernels (Collins and Duffy, 2001)Page 2, “Bilingual Tree Kernels”

See all papers in Proc. ACL 2010 that mention tree kernels.

See all papers in Proc. ACL that mention tree kernels.

Back to top.

parse tree

- Our study reveals that the structural features embedded in a bilingual parse tree pair are very effective for subtree alignment and the bilingual tree kernels can well capture such features.Page 1, “Abstract”

- The experimental results show that our approach achieves a significant improvement on both gold standard tree bank and automatically parsed tree pairs against a heuristic similarity based method.Page 1, “Abstract”

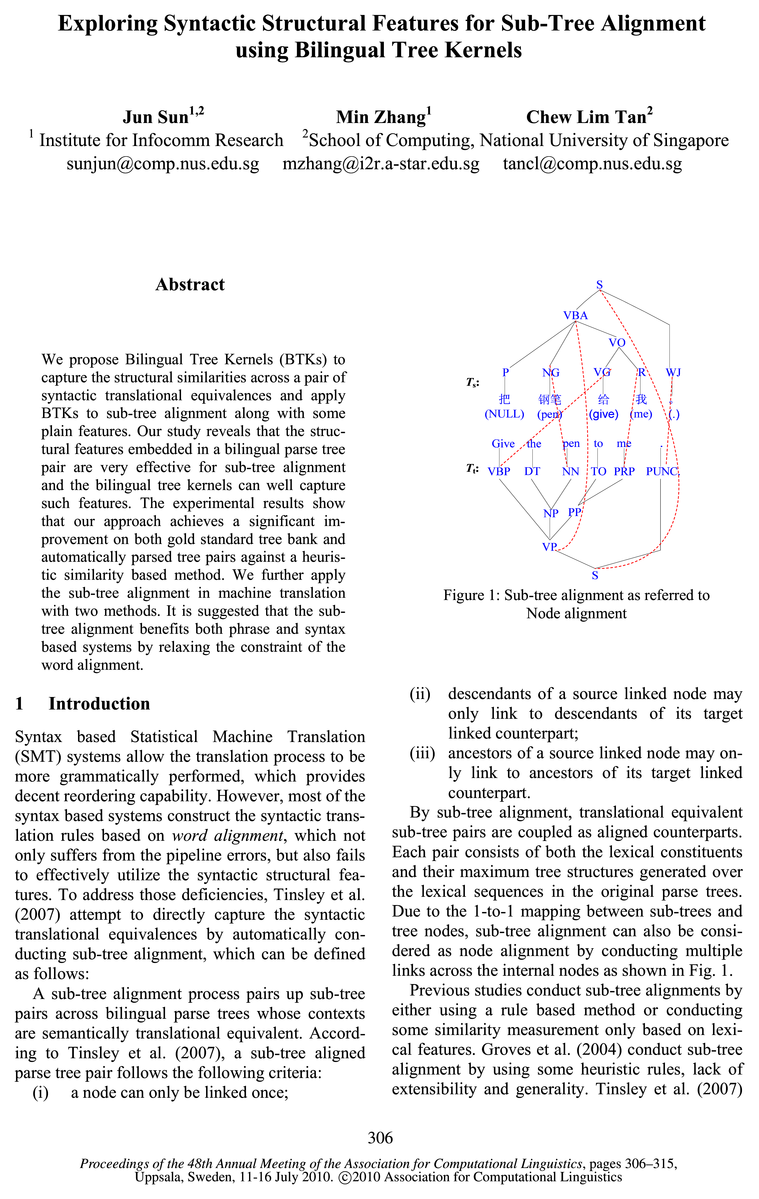

- A subtree alignment process pairs up subtree pairs across bilingual parse trees whose contexts are semantically translational equivalent.Page 1, “Introduction”

- (2007), a subtree aligned parse tree pair follows the following criteria:Page 1, “Introduction”

- Each pair consists of both the lexical constituents and their maximum tree structures generated over the lexical sequences in the original parse trees .Page 1, “Introduction”

- This may be due to the fact that the syntactic structures in a parse tree pair are hard to describe using plain features.Page 2, “Introduction”

- Alternatively, convolution parse tree kernels (Collins and Duffy, 2001), which implicitly explore the tree structure information, have been successfully applied in many NLP tasks, such as Semantic parsing (Moschitti, 2004) and Relation Extraction (Zhang et al.Page 2, “Introduction”

- The plain syntactic structural features can deal with the structural divergence of bilingual parse trees in a more general perspective.Page 4, “Substructure Spaces for BTKs”

- _ lin(S)| lin(T)I $161) _ lin(S)I lin(T)I S and T refer to the entire source and target parse trees respectively.Page 5, “Substructure Spaces for BTKs”

- Therefore, |in(S)| and |in(T)| are the respective span length of the parse tree used for normalization.Page 5, “Substructure Spaces for BTKs”

- Tree Depth difference: Intuitively, translational equivalent subtree pairs tend to have similar depth from the root of the parse tree .Page 5, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention parse tree.

See all papers in Proc. ACL that mention parse tree.

Back to top.

word alignment

- It is suggested that the subtree alignment benefits both phrase and syntax based systems by relaxing the constraint of the word alignment .Page 1, “Abstract”

- However, most of the syntax based systems construct the syntactic translation rules based on word alignment , which not only suffers from the pipeline errors, but also fails to effectively utilize the syntactic structural features.Page 1, “Introduction”

- 4.1 Lexical and Word Alignment FeaturesPage 4, “Substructure Spaces for BTKs”

- Internal Word Alignment Features: The word alignment links account much for the co-occurrence of the aligned terms.Page 5, “Substructure Spaces for BTKs”

- We define the internal word alignment features as follows:Page 5, “Substructure Spaces for BTKs”

- The binary function 6 (u, v) is employed to trigger the computation only when a word aligned link exists for the two words (u, 12) within the subtree span.Page 5, “Substructure Spaces for BTKs”

- Internal—External Word Alignment Features: Similar to the lexical features, we also introduce the intemal-extemal word alignment features as follows:Page 5, “Substructure Spaces for BTKs”

- Only sure links are conducted in the internal node level, without considering possible links adopted in word alignment .Page 6, “Substructure Spaces for BTKs”

- To learn the lexical and word alignment features for both the proposed model and the baseline method, we train GIZA++ on the entire FBIS bilingual corpus (240k).Page 7, “Substructure Spaces for BTKs”

- As for the alignment setting, we use the word alignment trained on the entire FBIS (240k) corpus by GIZA++ with heuristic grow-diag-fmal for both Moses and the syntax system.Page 9, “Substructure Spaces for BTKs”

- For sub-tree-alignment, we use the above word alignment to learn lexical/word alignment feature, and train with the FBIS training corpus (200) using the composite kernel of Plain+dBTK-Root+iBTK-RdSTT.Page 9, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention word alignment.

See all papers in Proc. ACL that mention word alignment.

Back to top.

structural features

- Our study reveals that the structural features embedded in a bilingual parse tree pair are very effective for subtree alignment and the bilingual tree kernels can well capture such features.Page 1, “Abstract”

- However, most of the syntax based systems construct the syntactic translation rules based on word alignment, which not only suffers from the pipeline errors, but also fails to effectively utilize the syntactic structural features .Page 1, “Introduction”

- These works fail to utilize the structural features , rendering the syntactic rich task of subtree alignment less convincing and attractive.Page 2, “Introduction”

- Along with BTKs, various lexical and syntactic structural features are proposed to capture the correspondence between bilingual sub-trees using a polynomial kernel.Page 2, “Introduction”

- Experimental results show that the proposed BTKs benefit subtree alignment on both corpora, along with the lexical features and the plain structural features .Page 2, “Introduction”

- Besides BTKs, we introduce various plain lexical features and structural features which can be expressed as feature functions.Page 4, “Substructure Spaces for BTKs”

- The plain syntactic structural features can deal with the structural divergence of bilingual parse trees in a more general perspective.Page 4, “Substructure Spaces for BTKs”

- 4.2 Online Structural FeaturesPage 5, “Substructure Spaces for BTKs”

- In addition to the lexical correspondence, we also capture the structural divergence by introducing the following tree structural features .Page 5, “Substructure Spaces for BTKs”

- Since the negative training instances largely overwhelm the positive instances, we prune the negative instances using the thresholds according to the lexical feature functions ((1)1, (1)2, (1)3, (p4) and online structural feature functions (gal, gag, gag ).Page 7, “Substructure Spaces for BTKs”

- o All the settings with structural features of the proposed approach achieve better performance than the baseline method.Page 7, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention structural features.

See all papers in Proc. ACL that mention structural features.

Back to top.

feature space

- Both kernels can be utilized within different feature spaces using various representations of the substructures.Page 2, “Introduction”

- In order to compute the dot product of the feature vectors in the exponentially high dimensional feature space , we introduce the tree kernel functions as follows:Page 2, “Bilingual Tree Kernels”

- As a result, we propose the dependent Bilingual Tree kernel (dBTK) to jointly evaluate the similarity across subtree pairs by enlarging the feature space to the Cartesian product of the two substructure sets.Page 3, “Bilingual Tree Kernels”

- Here we verify the correctness of the kernel by directly constructing the feature space for the inner product.Page 3, “Bilingual Tree Kernels”

- Given feature spaces defined in the last two sections, we propose a 2-phase subtree alignment model as follows:Page 5, “Substructure Spaces for BTKs”

- Feature Space P R FPage 7, “Substructure Spaces for BTKs”

- Feature Space P R FPage 7, “Substructure Spaces for BTKs”

- In Tables 3 and 4, we incrementally enlarge the feature spaces in certain order for both corpora and examine the feature contribution to the alignment results.Page 7, “Substructure Spaces for BTKs”

- After comparing iBTKs with the corresponding dBTKs, we find that for FBIS corpus, iBTK greatly outperforms dBTK in any feature space except the Root space.Page 8, “Substructure Spaces for BTKs”

- This finding can be explained by the relationship between the amount of training data and the high dimensional feature space .Page 8, “Substructure Spaces for BTKs”

- Since dBTKs are constructed in a joint manner which obtains a much larger high dimensional feature space than those of iBTKs, dBTKs require more training data to excel its capability, otherwise it will suffer from the data sparseness problem.Page 8, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention feature space.

See all papers in Proc. ACL that mention feature space.

Back to top.

machine translation

- We further apply the subtree alignment in machine translation with two methods.Page 1, “Abstract”

- Syntax based Statistical Machine Translation (SMT) systems allow the translation process to be more grammatically performed, which provides decent reordering capability.Page 1, “Introduction”

- In multilingual tasks such as machine translation , tree kernels are seldom applied.Page 2, “Introduction”

- Further experiments in machine translation also suggest that the obtained subtree alignment can improve the performance of both phrase and syntax based SMT systems.Page 2, “Introduction”

- Due to the above issues, we annotate a new data set to apply the subtree alignment in machine translation .Page 6, “Substructure Spaces for BTKs”

- 7 Experiments on Machine TranslationPage 8, “Substructure Spaces for BTKs”

- However, utilizing syntactic translational equivalences alone for machine translation loses the capability of modeling non-syntactic phrases (Koehn et al., 2003).Page 9, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention machine translation.

See all papers in Proc. ACL that mention machine translation.

Back to top.

gold standard

- The experimental results show that our approach achieves a significant improvement on both gold standard tree bank and automatically parsed tree pairs against a heuristic similarity based method.Page 1, “Abstract”

- We evaluate the subtree alignment on both the gold standard tree bank and an automatically parsed corpus.Page 2, “Introduction”

- The first is HIT gold standard English Chinese parallel tree bank referred as HIT corpusl.Page 6, “Substructure Spaces for BTKs”

- HIT corpus, which is collected from English leam-ing text books in China as well as example sentences in dictionaries, is used for the gold standard corpus evaluation.Page 6, “Substructure Spaces for BTKs”

- We also find that the introduction of BTKs gains more improvement in HIT gold standard corpusPage 8, “Substructure Spaces for BTKs”

- We use both gold standard tree bank and the automatically parsed corpus for the subtree alignment evaluation.Page 9, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention gold standard.

See all papers in Proc. ACL that mention gold standard.

Back to top.

score function

- and Imamura (2001) propose some score functions based on the lexical similarity and co-occurrence.Page 2, “Introduction”

- The baseline system uses many heuristics in searching the optimal solutions with alternative score functions .Page 7, “Substructure Spaces for BTKs”

- The baseline method proposes two score functions based on the lexical translation probability.Page 7, “Substructure Spaces for BTKs”

- They also compute the score function by splitting the tree into the internal and external components.Page 7, “Substructure Spaces for BTKs”

- 2 s1 denotes score function 1 in Tinsley et a1.Page 7, “Substructure Spaces for BTKs”

- skip2_sl_spanl denotes the utilization of heuristics skip2 and spanl while using score function 1Page 7, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention score function.

See all papers in Proc. ACL that mention score function.

Back to top.

translation probabilities

- The lexical features with directions are defined as conditional feature functions based on the conditional lexical translation probabilities .Page 4, “Substructure Spaces for BTKs”

- where P(v|u) refers to the lexical translation probability from the source word u to the target word 12 within the subtree spans, while P(u|v) refers to that from target to source; in(S) refers to the word set for the internal span of the source subtree 5, while in(T) refers to that of the target subtree T.Page 5, “Substructure Spaces for BTKs”

- Internal—External Lexical Features: These features are motivated by the fact that lexical translation probabilities within the translational equivalence tend to be high, and that of the non-Page 5, “Substructure Spaces for BTKs”

- By using the lexical translation probabilities , each hypothesis is assigned an alignment score.Page 6, “Substructure Spaces for BTKs”

- The baseline method proposes two score functions based on the lexical translation probability .Page 7, “Substructure Spaces for BTKs”

- (2007) adopt the lexical translation probabilities dumped by GIZA++ (Och and Ney, 2003) to compute the span based scores for each pair of sub-trees.Page 7, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention translation probabilities.

See all papers in Proc. ACL that mention translation probabilities.

Back to top.

sentence pairs

- Chinese I English # of Sentence pair 5000 Avg.Page 6, “Substructure Spaces for BTKs”

- We randomly select 300 bilingual sentence pairs from the Chinese-English FBIS corpus with the length S 30 in both the source and target sides.Page 6, “Substructure Spaces for BTKs”

- The selected plain sentence pairs are further parsed by Stanford parser (Klein and Manning, 2003) on both the English and Chinese sides.Page 6, “Substructure Spaces for BTKs”

- Chinese I English # of Sentence pair 300 Avg.Page 6, “Substructure Spaces for BTKs”

- In the experiments, we train the translation model on FBIS corpus (7.2M (Chinese) + 9.2M (English) words in 240,000 sentence pairs ) and train a 4-gram language model on the Xinhua portion of the English Gigaword corpus (181M words) using the SRILM Toolkits (Stolcke, 2002).Page 8, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention sentence pairs.

See all papers in Proc. ACL that mention sentence pairs.

Back to top.

feature vectors

- In addition, explicitly utilizing syntactic tree fragments results in exponentially high dimensional feature vectors , which is hard to compute.Page 2, “Introduction”

- In order to compute the dot product of the feature vectors in the exponentially high dimensional feature space, we introduce the tree kernel functions as follows:Page 2, “Bilingual Tree Kernels”

- It is infeasible to explicitly compute the kernel function by expressing the sub-trees as feature vectors .Page 3, “Bilingual Tree Kernels”

- The feature vector of the classifier is computed using a composite kernel:Page 5, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention feature vectors.

See all papers in Proc. ACL that mention feature vectors.

Back to top.

SVM

- In the 1st phase, a kernel based classifier, SVM in our study, is employed to classify each candidate subtree pair as aligned or unaligned.Page 5, “Substructure Spaces for BTKs”

- Since SVM is a large margin based discriminative classifier rather than a probabilistic model, we introduce a sigmoid function to convert the distance against the hyperplane to a posterior alignment probability as follows:Page 5, “Substructure Spaces for BTKs”

- We use SVM with binary classes as the classifier.Page 7, “Substructure Spaces for BTKs”

- We empirically set C=2.4 for SVM and use a = 0.23, the default parameter A = 0.4 for BTKs.Page 7, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention SVM.

See all papers in Proc. ACL that mention SVM.

Back to top.

SMT systems

- Further experiments in machine translation also suggest that the obtained subtree alignment can improve the performance of both phrase and syntax based SMT systems .Page 2, “Introduction”

- Most linguistically motivated syntax based SMT systems require an automatic parser to perform the rule induction.Page 6, “Substructure Spaces for BTKs”

- We explore the effectiveness of subtree alignment for both phrase based and linguistically motivated syntax based SMT systems .Page 8, “Substructure Spaces for BTKs”

- The findings suggest that with the modeling of non-syntactic phrases maintained, more emphasis on syntactic phrases can benefit both the phrase and syntax based SMT systems .Page 9, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention SMT systems.

See all papers in Proc. ACL that mention SMT systems.

Back to top.

semantic similarity

- In this section, we define seven lexical features to measure semantic similarity of a given subtree pair.Page 4, “Substructure Spaces for BTKs”

- baseline only assesses semantic similarity using the lexical features.Page 8, “Substructure Spaces for BTKs”

- In other words, to capture the semantic similarity , structure features requires lexical features to cooperate.Page 8, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention semantic similarity.

See all papers in Proc. ACL that mention semantic similarity.

Back to top.

TreeBank

- Compared with the widely used Penn TreeBank annotation, the new criterion utilizes some different grammar tags and is able to effectively describe some rare language phenomena in Chinese.Page 6, “Substructure Spaces for BTKs”

- The annotator still uses Penn TreeBank annotation on the English side.Page 6, “Substructure Spaces for BTKs”

- In addition, HIT corpus is not applicable for MT experiment due to the problems of domain divergence, annotation discrepancy (Chinese parse tree employs a different grammar from Penn Treebank annotations) and degree of tolerance for parsing errors.Page 6, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention TreeBank.

See all papers in Proc. ACL that mention TreeBank.

Back to top.

significant improvement

- The experimental results show that our approach achieves a significant improvement on both gold standard tree bank and automatically parsed tree pairs against a heuristic similarity based method.Page 1, “Abstract”

- By introducing BTKs to construct a composite kernel, the performance in both corpora is significantly improved against only using the polynomial kernel for plain features.Page 8, “Substructure Spaces for BTKs”

- Recent research on tree based systems shows that relaxing the restriction from tree structure to tree sequence structure (Synchronous Tree Sequence Substitution Grammar: STSSG) significantly improves the translation performance (Zhang et al., 2008).Page 9, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention significant improvement.

See all papers in Proc. ACL that mention significant improvement.

Back to top.

alignment model

- 5 Alignment ModelPage 5, “Substructure Spaces for BTKs”

- Given feature spaces defined in the last two sections, we propose a 2-phase subtree alignment model as follows:Page 5, “Substructure Spaces for BTKs”

- In order to evaluate the effectiveness of the alignment model and its capability in the applications requiring syntactic translational equivalences, we employ two corpora to carry out the subtree alignment evaluation.Page 6, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention alignment model.

See all papers in Proc. ACL that mention alignment model.

Back to top.

Penn TreeBank

- Compared with the widely used Penn TreeBank annotation, the new criterion utilizes some different grammar tags and is able to effectively describe some rare language phenomena in Chinese.Page 6, “Substructure Spaces for BTKs”

- The annotator still uses Penn TreeBank annotation on the English side.Page 6, “Substructure Spaces for BTKs”

- In addition, HIT corpus is not applicable for MT experiment due to the problems of domain divergence, annotation discrepancy (Chinese parse tree employs a different grammar from Penn Treebank annotations) and degree of tolerance for parsing errors.Page 6, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention Penn TreeBank.

See all papers in Proc. ACL that mention Penn TreeBank.

Back to top.

F-measure

- The coefficient Oi for the composite kernel are tuned with respect to F-measure (F) on the development set of HIT corpus.Page 7, “Substructure Spaces for BTKs”

- Those thresholds are also tuned on the development set of HIT corpus with respect to F-measure .Page 7, “Substructure Spaces for BTKs”

- The evaluation is conducted by means of Precision (P), Recall (R) and F-measure (F).Page 7, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention F-measure.

See all papers in Proc. ACL that mention F-measure.

Back to top.

development set

- The coefficient Oi for the composite kernel are tuned with respect to F-measure (F) on the development set of HIT corpus.Page 7, “Substructure Spaces for BTKs”

- Those thresholds are also tuned on the development set of HIT corpus with respect to F-measure.Page 7, “Substructure Spaces for BTKs”

- We use these sentences with less than 50 characters from the NIST MT-2002 test set as the development set (to speed up tuning for syntax based system) and the NIST MT-2005 test set as our test set.Page 8, “Substructure Spaces for BTKs”

See all papers in Proc. ACL 2010 that mention development set.

See all papers in Proc. ACL that mention development set.

Back to top.