Article Structure

Abstract

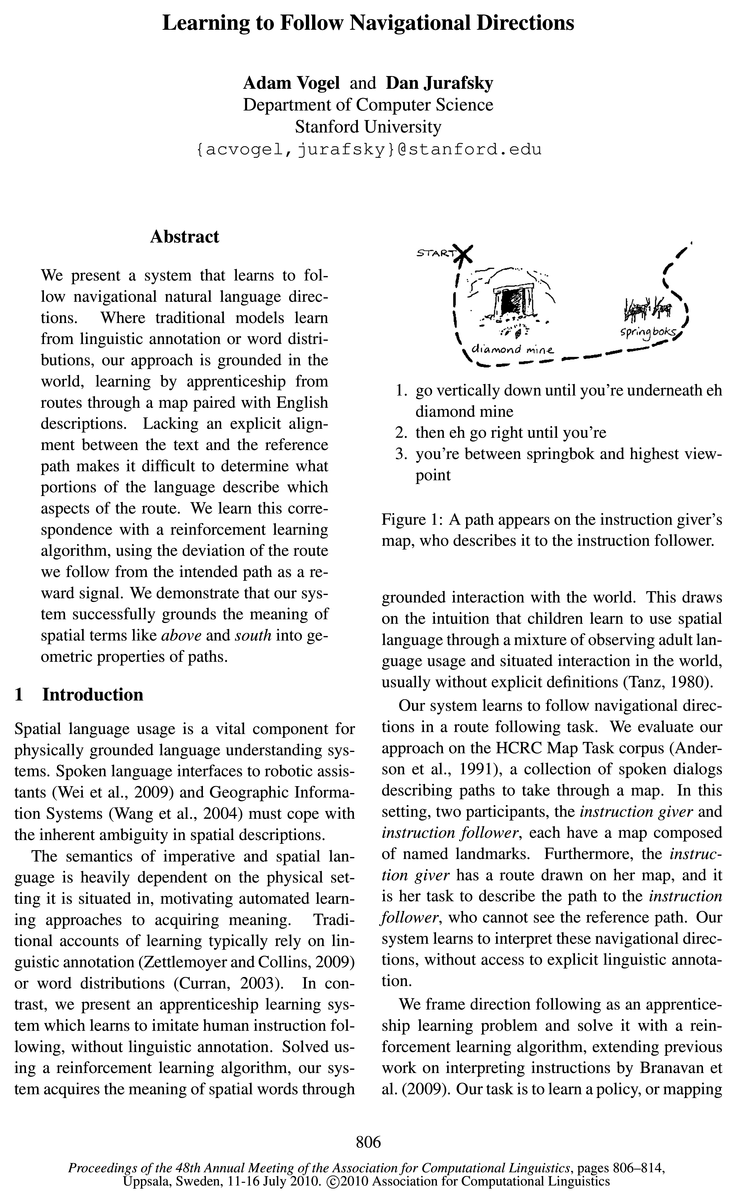

We present a system that learns to follow navigational natural language directions.

Introduction

Spatial language usage is a vital component for physically grounded language understanding systems.

Related Work

Levit and Roy (2007) developed a spatial semantics for the Map Task corpus.

The Map Task Corpus

The HCRC Map Task Corpus (Anderson et al., 1991) is a set of dialogs between an instruction giver and an instruction follower.

Reinforcement Learning Formulation

We frame the direction following task as a sequential decision making problem.

Approximate Dynamic Programming

Given this feature representation, our problem is to find a parameter vector 6 E RK for which Q(s,a) = 6Tgb(s,a) most closely approximates 1E[R(s,a)].

Topics

learning algorithm

- We learn this correspondence with a reinforcement learning algorithm , using the deviation of the route we follow from the intended path as a reward signal.Page 1, “Abstract”

- Solved using a reinforcement learning algorithm , our system acquires the meaning of spatial words throughPage 1, “Introduction”

- We frame direction following as an apprenticeship learning problem and solve it with a reinforcement learning algorithm , extending previous work on interpreting instructions by Branavan et al.Page 1, “Introduction”

- 2Our learning algorithm is not dependent on a determin-Page 3, “Reinforcement Learning Formulation”

- Learning exactly which words influence decision making is difficult; reinforcement learning algorithms have problems with the large, sparse feature vectors common in natural language processing.Page 4, “Reinforcement Learning Formulation”

- To learn these weights 6 we use SARSA (Sutton and Barto, 1998), an online learning algorithm similar to Q-learning (Watkins and Dayan, 1992).Page 5, “Approximate Dynamic Programming”

- Algorithm 1 details the learning algorithm , which we follow here.Page 5, “Approximate Dynamic Programming”

- We also compare against the policy gradient learning algorithm of Branavan et al.Page 7, “Approximate Dynamic Programming”

- During training, the learning algorithm adjusts the weights 6 according to the gradient of the value function defined by this distribution.Page 7, “Approximate Dynamic Programming”

- Reinforcement learning algorithms can be classified into value based and policy based.Page 7, “Approximate Dynamic Programming”

- Policy learning algorithms directly search through the space of policies.Page 7, “Approximate Dynamic Programming”

See all papers in Proc. ACL 2010 that mention learning algorithm.

See all papers in Proc. ACL that mention learning algorithm.

Back to top.

natural language

- We present a system that learns to follow navigational natural language directions.Page 1, “Abstract”

- However, they do not learn these representations from text, leaving natural language processing as an open problem.Page 2, “Related Work”

- Additionally, the instruction giver has a path drawn on her map, and must communicate this path to the instruction follower in natural language .Page 3, “The Map Task Corpus”

- Learning exactly which words influence decision making is difficult; reinforcement learning algorithms have problems with the large, sparse feature vectors common in natural language processing.Page 4, “Reinforcement Learning Formulation”

- We presented a reinforcement learning system which learns to interpret natural language directions.Page 8, “Approximate Dynamic Programming”

- While our results are still preliminary, we believe our model represents a significant advance in learning natural language meaning, drawing its supervision from human demonstration rather than word distributions or hand-labeled semantic tags.Page 8, “Approximate Dynamic Programming”

See all papers in Proc. ACL 2010 that mention natural language.

See all papers in Proc. ACL that mention natural language.

Back to top.

feature vector

- Thus, we represent state/action pairs with a feature vector gb(s, a) E RK.Page 4, “Reinforcement Learning Formulation”

- Learning exactly which words influence decision making is difficult; reinforcement learning algorithms have problems with the large, sparse feature vectors common in natural language processing.Page 4, “Reinforcement Learning Formulation”

- For a given state 3 = (u, l, c) and action a = (l’, 0’), our feature vector gb(s, a) is composed of the following:Page 4, “Reinforcement Learning Formulation”

- Furthermore, as the size of the feature vector K increases, the space becomes even more difficult to search.Page 8, “Approximate Dynamic Programming”

See all papers in Proc. ACL 2010 that mention feature vector.

See all papers in Proc. ACL that mention feature vector.

Back to top.