Article Structure

Abstract

Resolving polysemy and synonymy is required for hi gh—quality information extraction.

Introduction

Many information extraction systems construct knowledge bases by extracting structured assertions from free text (e.g., NELL (Carlson et al., 2010), TextRunner (Banko et al., 2007)).

Prior Work

Previous work on concept discovery has focused on the subproblems of word sense induction and synonym resolution.

Background: Never-Ending Language Learner

ConceptResolver is designed as a component for the Never-Ending Language Learner (NELL) (Carlson et al., 2010).

ConceptResolver

This section describes ConceptResolver, our new component which creates concepts from NELL’s extractions.

Evaluation

We perform several experiments to measure ConceptResolver’s performance at each of its respective tasks.

Discussion

In order for information extraction systems to accurately represent knowledge, they must represent noun phrases, concepts, and the many-to-many mapping from noun phrases to concepts they denote.

Topics

word senses

- ConceptResolver performs both word sense induction and synonym resolution on relations extracted from text using an ontology and a small amount of labeled data.Page 1, “Abstract”

- Word sense induction is performed by inferring a set of semantic types for each noun phrase.Page 1, “Abstract”

- When ConceptResolver is run on N ELL’s knowledge base, 87% of the word senses it creates correspond to real-world concepts, and 85% of noun phrases that it suggests refer to the same concept are indeed synonyms.Page 1, “Abstract”

- Induce Word Senses i.Page 2, “Introduction”

- Cluster word senses with semantic type C using classifier’s predictions.Page 2, “Introduction”

- It first performs word sense induction, using the extracted category instances to create one or more unambiguous word senses for each noun phrase in the knowledge base.Page 2, “Introduction”

- Each word sense is a copy of the original noun phrase paired with a semantic type (a category) that restricts the concepts it can refer to.Page 2, “Introduction”

- ConceptResolver then performs synonym resolution on these word senses .Page 2, “Introduction”

- The result of this process is clusters of synonymous word senses , which are output as concepts.Page 2, “Introduction”

- Concepts inherit the semantic type of the word senses they contain.Page 2, “Introduction”

- The evaluation shows that, on average, 87% of the word senses created by ConceptResolver correspond to real-world concepts.Page 2, “Introduction”

See all papers in Proc. ACL 2011 that mention word senses.

See all papers in Proc. ACL that mention word senses.

Back to top.

noun phrases

- We present ConceptResolver, a component for the N ever-Ending Language Learner (NELL) (Carlson et al., 2010) that handles both phenomena by identifying the latent concepts that noun phrases refer to.Page 1, “Abstract”

- When ConceptResolver is run on N ELL’s knowledge base, 87% of the word senses it creates correspond to real-world concepts, and 85% of noun phrases that it suggests refer to the same concept are indeed synonyms.Page 1, “Abstract”

- A major limitation of many of these systems is that they fail to distinguish between noun phrases and the underlying concepts they refer to.Page 1, “Introduction”

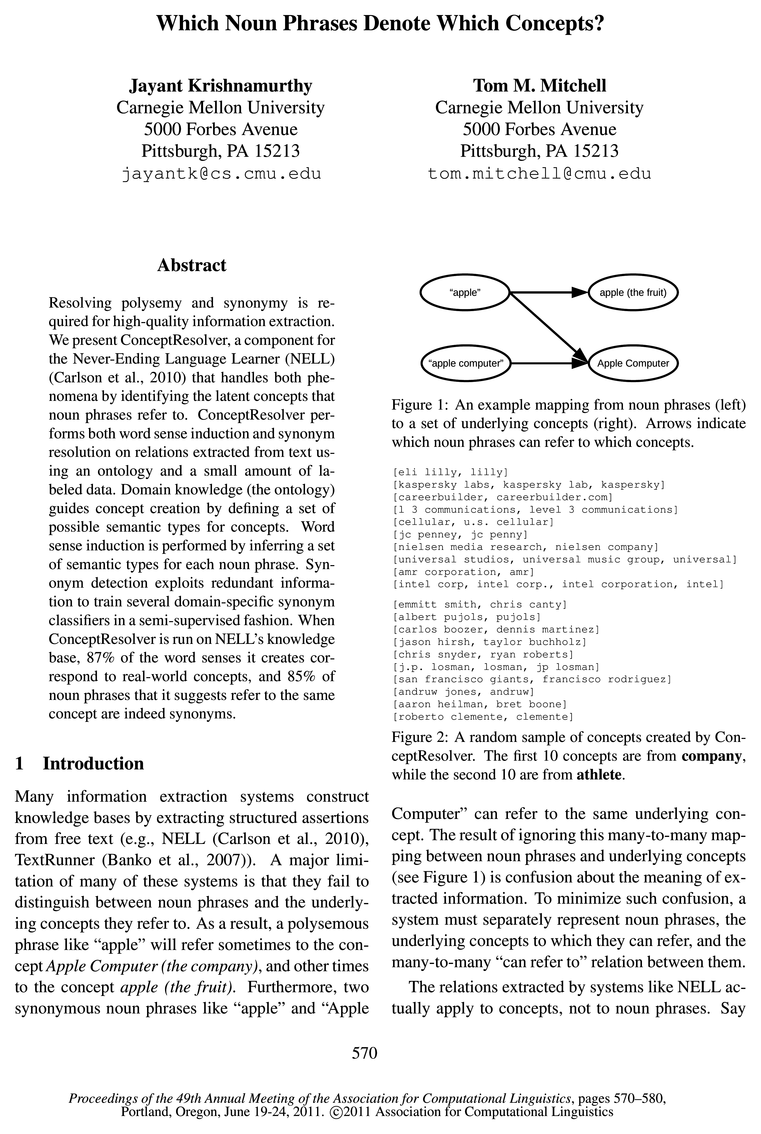

- Furthermore, two synonymous noun phrases like “apple” and “ApplePage 1, “Introduction”

- Figure 1: An example mapping from noun phrases (left) to a set of underlying concepts (right).Page 1, “Introduction”

- Arrows indicate which noun phrases can refer to which concepts.Page 1, “Introduction”

- The result of ignoring this many-to-many mapping between noun phrases and underlying concepts (see Figure 1) is confusion about the meaning of extracted information.Page 1, “Introduction”

- To minimize such confusion, a system must separately represent noun phrases , the underlying concepts to which they can refer, and the many-to-many “can refer to” relation between them.Page 1, “Introduction”

- The relations extracted by systems like NELL actually apply to concepts, not to noun phrases .Page 1, “Introduction”

- the system extracts the relation ceoOf(:c1, 302) between the noun phrases :c1 and :52.Page 2, “Introduction”

- A similar interpretation holds for one-place category predicates like person(:c1 We define concept discovery as the problem of (1) identifying concepts like 01 and 02 from extracted predicates like ceoOf(:c1, m2) and (2) mapping noun phrases like :51, :32 to the concepts they can refer to.Page 2, “Introduction”

See all papers in Proc. ACL 2011 that mention noun phrases.

See all papers in Proc. ACL that mention noun phrases.

Back to top.

knowledge base

- When ConceptResolver is run on N ELL’s knowledge base , 87% of the word senses it creates correspond to real-world concepts, and 85% of noun phrases that it suggests refer to the same concept are indeed synonyms.Page 1, “Abstract”

- Many information extraction systems construct knowledge bases by extracting structured assertions from free text (e.g., NELL (Carlson et al., 2010), TextRunner (Banko et al., 2007)).Page 1, “Introduction”

- It first performs word sense induction, using the extracted category instances to create one or more unambiguous word senses for each noun phrase in the knowledge base .Page 2, “Introduction”

- We evaluate ConceptResolver using a subset of NELL’s knowledge base , presenting separate results for the concepts of each semantic type.Page 2, “Introduction”

- 1More information about NELL, including browsable and downloadable versions of its knowledge base , is available from http://rtw.ml.cmu.edu.Page 3, “Background: Never-Ending Language Learner”

- NELL’s knowledge base contains both definitions for predicates and extracted instances of each predicate.Page 4, “Background: Never-Ending Language Learner”

- At present, NELL’s knowledge base defines approximately 500 predicates and contains over half a million extracted instances of these predicates with an accuracy of approximately 0.85.Page 4, “Background: Never-Ending Language Learner”

- The system runs four independent extractors for each predicate: the first uses web co-occurrence statistics, the second uses HTML structures on webpages, the third uses the morphological structure of the noun phrase itself, and the fourth exploits empirical regularities within the knowledge base .Page 4, “Background: Never-Ending Language Learner”

- NELL learns using a bootstrapping process, iteratively retraining these extractors using instances in the knowledge base, then adding some predictions of the learners to the knowledge base .Page 4, “Background: Never-Ending Language Learner”

- The final output of sense induction is a sense-disambiguated knowledge base , where each noun phrase has been converted into one or more word senses, and relations hold between pairs of senses.Page 5, “ConceptResolver”

- For both experiments, we used a knowledge base created by running 140 iterations of NELL.Page 6, “Evaluation”

See all papers in Proc. ACL 2011 that mention knowledge base.

See all papers in Proc. ACL that mention knowledge base.

Back to top.

semi-supervised

- Synonym detection exploits redundant information to train several domain-specific synonym classifiers in a semi-supervised fashion.Page 1, “Abstract”

- Train a semi-supervised classifier to predict synonymy.Page 2, “Introduction”

- used to train a semi-supervised classifier.Page 2, “Introduction”

- However, our evaluation shows that ConceptResolver has higher synonym resolution precision than Resolver, which we attribute to our semi-supervised approach and the known relation schema.Page 3, “Prior Work”

- ConceptResolver’s approach lies between these two extremes: we label a small number of synonyms (10 pairs), then use semi-supervised training to learn a similarity function.Page 3, “Prior Work”

- ConceptResolver uses a novel algorithm for semi-supervised clustering which is conceptually similar to other work in the area.Page 3, “Prior Work”

- As far as we know, ConceptResolver is the first application of semi-supervised clustering to relational data — where the items being clustered are connected by relations (Getoor and Diehl, 2005).Page 3, “Prior Work”

- Interestingly, the relational setting also provides us with the independent views that are beneficial to semi-supervised training.Page 3, “Prior Work”

- NELL is an information extraction system that has been running 24x7 for over a year, using coupled semi-supervised learning to populate an ontology from unstructured text found on the web.Page 3, “Background: Never-Ending Language Learner”

- After mapping each noun phrase to one or more senses (each with a distinct category type), ConceptResolver performs semi-supervised clustering to find synonymous senses.Page 5, “ConceptResolver”

- For each category, ConceptResolver trains a semi-supervised synonym classifier then uses its predictions to cluster word senses.Page 5, “ConceptResolver”

See all papers in Proc. ACL 2011 that mention semi-supervised.

See all papers in Proc. ACL that mention semi-supervised.

Back to top.

gold standard

- The second experiment evaluates synonym resolution by comparing ConceptResolver’s sense clusters to a gold standard clustering.Page 6, “Evaluation”

- Our second experiment evaluates synonym resolution by comparing the concepts created by ConceptResolver to a gold standard set of concepts.Page 8, “Evaluation”

- Specifically, the gold standard clustering contains noun phrases that refer to multiple concepts within the same category.Page 8, “Evaluation”

- (It is unclear how to create a gold standard clustering without allowing such mappings.)Page 8, “Evaluation”

- The Resolver metric aligns each proposed cluster containing 2 2 senses with a gold standard cluster (i.e., a real-world concept) by selecting the cluster that a plurality of the senses in the proposed cluster refer to.Page 8, “Evaluation”

- in the gold standard cluster; recall is computed analogously by swapping the roles of the proposed and gold standard clusters.Page 8, “Evaluation”

- This process mimics aligning each sampled concept with its best possible match in a gold standard clustering, then measuring precision with respect to the gold standard .Page 8, “Evaluation”

- Incorrectly categorized noun phrases were not included in the gold standard as they do not correspond to any real-world entities.Page 8, “Evaluation”

See all papers in Proc. ACL 2011 that mention gold standard.

See all papers in Proc. ACL that mention gold standard.

Back to top.

random sample

- Figure 2: A random sample of concepts created by ConceptResolver.Page 1, “Introduction”

- The regularization parameter A is automatically selected on each iteration by searching for a value which maximizes the loglikelihood of a validation set, which is constructed by randomly sampling 25% of L on each iteration.Page 5, “ConceptResolver”

- Resolver precision can be interpreted as the probability that a randomly sampled sense (in a cluster with at least 2 senses) is in a cluster representing its true meaning.Page 8, “Evaluation”

- To create this set, we randomly sampled noun phrases from each category and manually matched each noun phrase to one or more real-world entities.Page 8, “Evaluation”

- To make this difference concrete, Figure 2 (first page) shows a random sample of 10 concepts from both company and athlete.Page 8, “Evaluation”

See all papers in Proc. ACL 2011 that mention random sample.

See all papers in Proc. ACL that mention random sample.

Back to top.

coreference

- Concept discovery is also related to coreference resolution (Ng, 2008; Poon and Domingos, 2008).Page 3, “Prior Work”

- The difference between the two problems is that coreference resolution finds noun phrases that refer to the same concept within a specific document.Page 3, “Prior Work”

- We think the concepts produced by a system like ConceptResolver could be used to improve coreference resolution by providing prior knowledge about noun phrases that can refer to the same concept.Page 3, “Prior Work”

- This knowledge could be especially helpful for cross-document coreference resolution systems (Haghighi and Klein, 2010), which actually represent concepts and track mentions of them across documents.Page 3, “Prior Work”

See all papers in Proc. ACL 2011 that mention coreference.

See all papers in Proc. ACL that mention coreference.

Back to top.

coreference resolution

- Concept discovery is also related to coreference resolution (Ng, 2008; Poon and Domingos, 2008).Page 3, “Prior Work”

- The difference between the two problems is that coreference resolution finds noun phrases that refer to the same concept within a specific document.Page 3, “Prior Work”

- We think the concepts produced by a system like ConceptResolver could be used to improve coreference resolution by providing prior knowledge about noun phrases that can refer to the same concept.Page 3, “Prior Work”

- This knowledge could be especially helpful for cross-document coreference resolution systems (Haghighi and Klein, 2010), which actually represent concepts and track mentions of them across documents.Page 3, “Prior Work”

See all papers in Proc. ACL 2011 that mention coreference resolution.

See all papers in Proc. ACL that mention coreference resolution.

Back to top.

extraction systems

- Many information extraction systems construct knowledge bases by extracting structured assertions from free text (e.g., NELL (Carlson et al., 2010), TextRunner (Banko et al., 2007)).Page 1, “Introduction”

- NELL is an information extraction system that has been running 24x7 for over a year, using coupled semi-supervised learning to populate an ontology from unstructured text found on the web.Page 3, “Background: Never-Ending Language Learner”

- As in other information extraction systems , the category and relation instances extracted by NELL contain polysemous and synonymous noun phrases.Page 4, “Background: Never-Ending Language Learner”

- In order for information extraction systems to accurately represent knowledge, they must represent noun phrases, concepts, and the many-to-many mapping from noun phrases to concepts they denote.Page 9, “Discussion”

See all papers in Proc. ACL 2011 that mention extraction systems.

See all papers in Proc. ACL that mention extraction systems.

Back to top.

labeled data

- ConceptResolver performs both word sense induction and synonym resolution on relations extracted from text using an ontology and a small amount of labeled data .Page 1, “Abstract”

- These approaches use large amounts of labeled data , which can be difficult to create.Page 3, “Prior Work”

- A is re-selected on each iteration because the initial labeled data set is extremely small, so the initial validation set is not necessarily representative of the actual data.Page 5, “ConceptResolver”

- l. Initialize labeled data L with 10 positive and 50 negative examples (pairs of senses)Page 6, “ConceptResolver”

See all papers in Proc. ACL 2011 that mention labeled data.

See all papers in Proc. ACL that mention labeled data.

Back to top.

similarity score

- The first three algorithms produce similarity scores by matching words in the two phrases and the fourth is an edit distance.Page 5, “ConceptResolver”

- The algorithm is essentially bottom-up agglomerative clustering of word senses using a similarity score derived from P(Y|X1, X2).Page 6, “ConceptResolver”

- The similarity score for two senses is defined as:Page 6, “ConceptResolver”

- The similarity score for two clusters is the sum of the similarity scores for all pairs of senses.Page 6, “ConceptResolver”

See all papers in Proc. ACL 2011 that mention similarity score.

See all papers in Proc. ACL that mention similarity score.

Back to top.

logistic regression

- Both the string similarity classifier and the relation classifier are trained using Lg-regularized logistic regression .Page 5, “ConceptResolver”

- As we trained both classifiers using logistic regression , we have models for the probabilities P(Y|X1) and P(Y|X2).Page 6, “ConceptResolver”

- (typically poorly calibrated) probability estimates of logistic regression .Page 6, “ConceptResolver”

See all papers in Proc. ACL 2011 that mention logistic regression.

See all papers in Proc. ACL that mention logistic regression.

Back to top.

relations extracted

- ConceptResolver performs both word sense induction and synonym resolution on relations extracted from text using an ontology and a small amount of labeled data.Page 1, “Abstract”

- The relations extracted by systems like NELL actually apply to concepts, not to noun phrases.Page 1, “Introduction”

- Synonym resolution on relations extracted from web text has been previously studied by Resolver (Yates and Etzioni, 2007), which finds synonyms in relation triples extracted by TextRunner (Banko et al., 2007).Page 3, “Prior Work”

See all papers in Proc. ACL 2011 that mention relations extracted.

See all papers in Proc. ACL that mention relations extracted.

Back to top.

relation instances

- The main input to ConceptResolver is a set of extracted category and relation instances over noun phrases, like person(:c1) and ceoOf(:c1, :52), produced by running NELL.Page 2, “Introduction”

- As in other information extraction systems, the category and relation instances extracted by NELL contain polysemous and synonymous noun phrases.Page 4, “Background: Never-Ending Language Learner”

- Both extracting more relation instances and adding new relations to the ontology will improve synonym res-Page 9, “Discussion”

See all papers in Proc. ACL 2011 that mention relation instances.

See all papers in Proc. ACL that mention relation instances.

Back to top.

similarity measure

- Like other approaches (Basu et al., 2004; Xing et al., 2003; Klein et al., 2002), we learn a similarity measure for clustering based on a set of must-link and cannot-link constraints.Page 3, “Prior Work”

- Unlike prior work, our algorithm exploits multiple views of the data to improve the similarity measure .Page 3, “Prior Work”

- We use several string similarity measures as features, including SoftTFIDF (Cohen et al., 2003), Level 2 JaroWinkler (Cohen et al., 2003), Fellegi-Sunter (Fellegi and Sunter, 1969), and Monge-Elkan (Monge and Elkan, 1996).Page 5, “ConceptResolver”

See all papers in Proc. ACL 2011 that mention similarity measure.

See all papers in Proc. ACL that mention similarity measure.

Back to top.