Article Structure

Abstract

In this work, we present a novel approach to the generation task of ordering prenominal modifiers.

Introduction

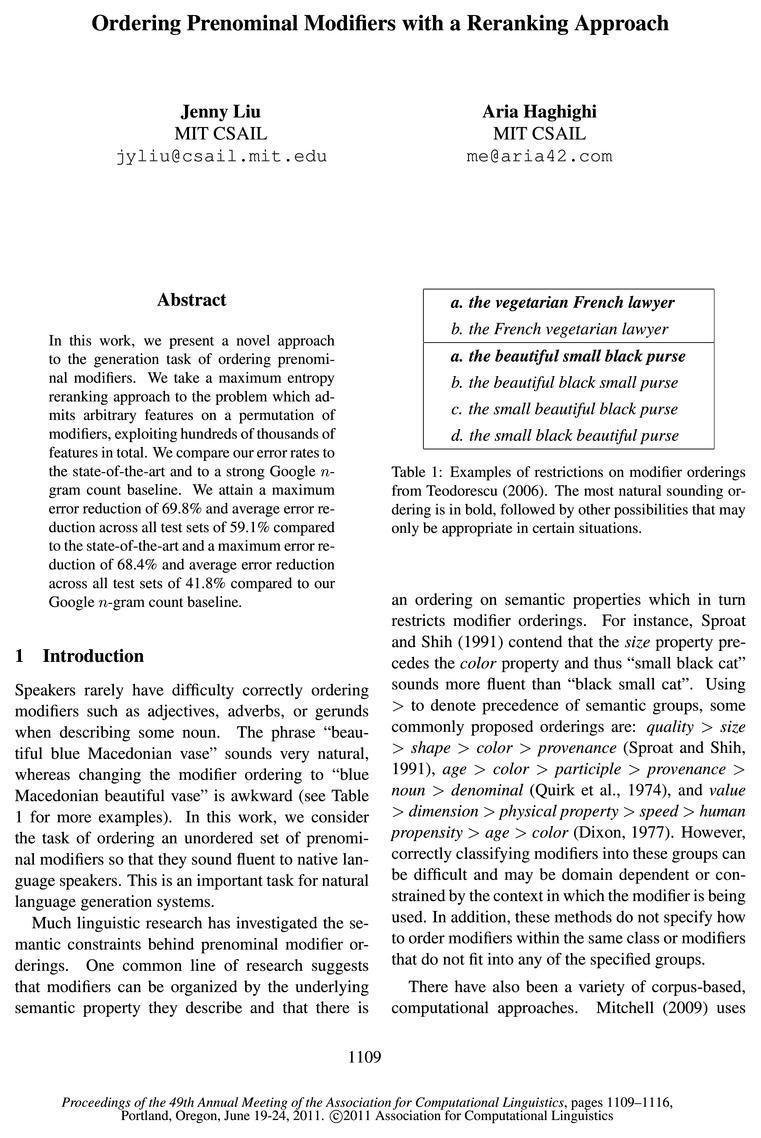

Speakers rarely have difficulty correctly ordering modifiers such as adjectives, adverbs, or gerunds when describing some noun.

Related Work

Mitchell (2009) orders sequences of at most 4 modifiers and defines nine classes that express the broad positional preferences of modifiers, where position 1 is closest to the noun phrase (NP) head and position 4 is farthest from it.

Model

We treat the problem of prenominal modifier ordering as a reranking problem.

Experiments

4.1 Data Preprocessing and Selection

Results

The MAXENT model consistently outperforms CLASS BASED across all test corpora and sequence lengths for both tokens and types, except when testing on the Brown and Switchboard corpora for modifier sequences of length 5, for which neither approach is able to make any correct predictions.

Analysis

MAXENT seems to outperform the CLASS BASED baseline because it learns more from the training data.

Conclusion

The straightforward maximum entropy reranking approach is able to significantly outperform preVious computational approaches by allowing for a richer model of the prenominal modifier ordering process.

Topics

MAXENT

- To evaluate our system ( MAXENT ) and our baselines, we partitioned the corpora into training and testing data.Page 5, “Experiments”

- For each NP in the test data, we generated a set of modifiers and looked at the predicted orderings of the MAXENT , CLASS BASED, and GOOGLE N-GRAM methods.Page 5, “Experiments”

- The MAXENT model consistently outperforms CLASS BASED across all test corpora and sequence lengths for both tokens and types, except when testing on the Brown and Switchboard corpora for modifier sequences of length 5, for which neither approach is able to make any correct predictions.Page 5, “Results”

- MAXENT also outperforms the GOOGLE N-GRAM baseline for almost all test corpora and sequence lengths.Page 6, “Results”

- For the Switchboard test corpus token and type accuracies, the GOOGLE N-GRAM baseline is more accurate than MAXENT for sequences of length 2 and overall, but the accuracy of MAXENT is competitive with that of GOOGLE N-GRAM.Page 6, “Results”

- If we examine the error reduction between MAXENT and CLASS BASED, we attain a maximum error reduction of 69.8% for the WSJ test corpus across modifier sequence tokens, and an average error reduction of 59.1% across all test corpora for tokens.Page 6, “Results”

- MAXENT also attains a maximum error reduction of 68.4% for the WSJ test corpus and an average error reduction of 41.8% when compared to GOOGLE N-GRAM.Page 6, “Results”

- MAXENT seems to outperform the CLASS BASED baseline because it learns more from the training data.Page 6, “Analysis”

- ' —E|— MaxEnt —@— ClassBased I I I I I I I I I I I I I I I I I I I I I I I I IPage 7, “Analysis”

- ' —E|— MaXEnt —9— ClassB asedPage 7, “Analysis”

- —E|— MaxEnt —9— ClassBasedPage 7, “Analysis”

See all papers in Proc. ACL 2011 that mention MAXENT.

See all papers in Proc. ACL that mention MAXENT.

Back to top.

n-gram

- We compare our error rates to the state-of-the-art and to a strong Google n-gram count baseline.Page 1, “Abstract”

- We attain a maximum error reduction of 69.8% and average error reduction across all test sets of 59.1% compared to the state-of-the-art and a maximum error reduction of 68.4% and average error reduction across all test sets of 41.8% compared to our Google n-gram count baseline.Page 1, “Abstract”

- We keep a table mapping each unique n-gram to the number of times it has been seen in the training data.Page 4, “Experiments”

- 4.2 Google n-gram BaselinePage 5, “Experiments”

- The Google n-gram corpus is a collection of n-gram counts drawn from public webpages with a total of one trillion tokens — around 1 billion each of unique 3-grams, 4—grams, and 5-grams, and around 300,000 unique bigrams.Page 5, “Experiments”

- We created a Google n-gram baseline that takes a set of modifiers B, determines the Google n-gram count for each possible permutation in 7T(B), and selects the permutation with the highest n-gram count as the winning ordering 55*.Page 5, “Experiments”

- We will refer to this baseline as GOOGLE N-GRAM .Page 5, “Experiments”

- For each NP in the test data, we generated a set of modifiers and looked at the predicted orderings of the MAXENT, CLASS BASED, and GOOGLE N-GRAM methods.Page 5, “Experiments”

- MAXENT also outperforms the GOOGLE N-GRAM baseline for almost all test corpora and sequence lengths.Page 6, “Results”

- For the Switchboard test corpus token and type accuracies, the GOOGLE N-GRAM baseline is more accurate than MAXENT for sequences of length 2 and overall, but the accuracy of MAXENT is competitive with that of GOOGLE N-GRAM .Page 6, “Results”

- MAXENT also attains a maximum error reduction of 68.4% for the WSJ test corpus and an average error reduction of 41.8% when compared to GOOGLE N-GRAM .Page 6, “Results”

See all papers in Proc. ACL 2011 that mention n-gram.

See all papers in Proc. ACL that mention n-gram.

Back to top.

reranking

- We take a maximum entropy reranking approach to the problem which admits arbitrary features on a permutation of modifiers, exploiting hundreds of thousands of features in total.Page 1, “Abstract”

- By mapping a set of features across the training data and using a maximum entropy reranking model, we can learn optimal weights for these features and then order each set of modifiers in the test data according to our features and the learned weights.Page 2, “Introduction”

- In Section 3 we present the details of our maximum entropy reranking approach.Page 2, “Introduction”

- In this next section, we describe our maximum entropy reranking approach that tries to develop a more comprehensive model of the modifier ordering process to avoid the sparsity issues that previous ap-Page 2, “Related Work”

- We treat the problem of prenominal modifier ordering as a reranking problem.Page 3, “Model”

- At test time, we choose an ordering cc 6 7r(B) using a maximum entropy reranking approach (Collins and Koo, 2005).Page 3, “Model”

- The straightforward maximum entropy reranking approach is able to significantly outperform preVious computational approaches by allowing for a richer model of the prenominal modifier ordering process.Page 8, “Conclusion”

See all papers in Proc. ACL 2011 that mention reranking.

See all papers in Proc. ACL that mention reranking.

Back to top.

bigram

- Shaw and Hatzivassiloglou also use a transitivity method to fill out parts of the Count table where bigrams are not actually seen in the training data but their counts can be inferred from other entries in the table, and they use a clustering method to group together modifiers with similar positional preferences.Page 2, “Related Work”

- Shaw and Hatzivassiloglou report a highest accuracy of 94.93% and a lowest accuracy of 65.93%, but since their methods depend heavily on bigram counts in the training corpus, they are also limited in how informed their decisions can be if modifiers in the test data are not present at training time.Page 2, “Related Work”

- We also kept NPs with only 1 modifier to be used for generating <m0difie1; head n0un> bigram counts at training time.Page 4, “Experiments”

- For example, the NP “the beautiful blue Macedonian vase” generates the following bigrams : <beautzful blue>, <blue Macedonian>, and <beautlful Macedonian>, along with the 3-gram <beautlful blue Macedonian>.Page 4, “Experiments”

- In addition, we also store a table that keeps track of bigram counts for < M, H >, where H is the head noun of an NP and M is the modifier closest to it.Page 4, “Experiments”

- The Google n-gram corpus is a collection of n-gram counts drawn from public webpages with a total of one trillion tokens — around 1 billion each of unique 3-grams, 4—grams, and 5-grams, and around 300,000 unique bigrams .Page 5, “Experiments”

See all papers in Proc. ACL 2011 that mention bigram.

See all papers in Proc. ACL that mention bigram.

Back to top.

maximum entropy

- We take a maximum entropy reranking approach to the problem which admits arbitrary features on a permutation of modifiers, exploiting hundreds of thousands of features in total.Page 1, “Abstract”

- By mapping a set of features across the training data and using a maximum entropy reranking model, we can learn optimal weights for these features and then order each set of modifiers in the test data according to our features and the learned weights.Page 2, “Introduction”

- In Section 3 we present the details of our maximum entropy reranking approach.Page 2, “Introduction”

- In this next section, we describe our maximum entropy reranking approach that tries to develop a more comprehensive model of the modifier ordering process to avoid the sparsity issues that previous ap-Page 2, “Related Work”

- At test time, we choose an ordering cc 6 7r(B) using a maximum entropy reranking approach (Collins and Koo, 2005).Page 3, “Model”

- The straightforward maximum entropy reranking approach is able to significantly outperform preVious computational approaches by allowing for a richer model of the prenominal modifier ordering process.Page 8, “Conclusion”

See all papers in Proc. ACL 2011 that mention maximum entropy.

See all papers in Proc. ACL that mention maximum entropy.

Back to top.

n-grams

- Previous counting approaches can be expressed as a real-valued feature that, given all n-grams generated by a permutation of modifiers, returns the count of all these n-grams in the original training data.Page 3, “Model”

- We might also expect permutations that contain n-grams previously seen in the training data to be more natural sounding than other permutations that generate n-grams that have not been seen before.Page 3, “Model”

- To collect the statistics, we take each NP in the training data and consider all possible 2-gms through 5-gms that are present in the NP’s modifier sequence, allowing for nonconsecutive n-grams .Page 4, “Experiments”

See all papers in Proc. ACL 2011 that mention n-grams.

See all papers in Proc. ACL that mention n-grams.

Back to top.