Article Structure

Abstract

We describe a joint model for understanding user actions in natural language utterances.

Introduction

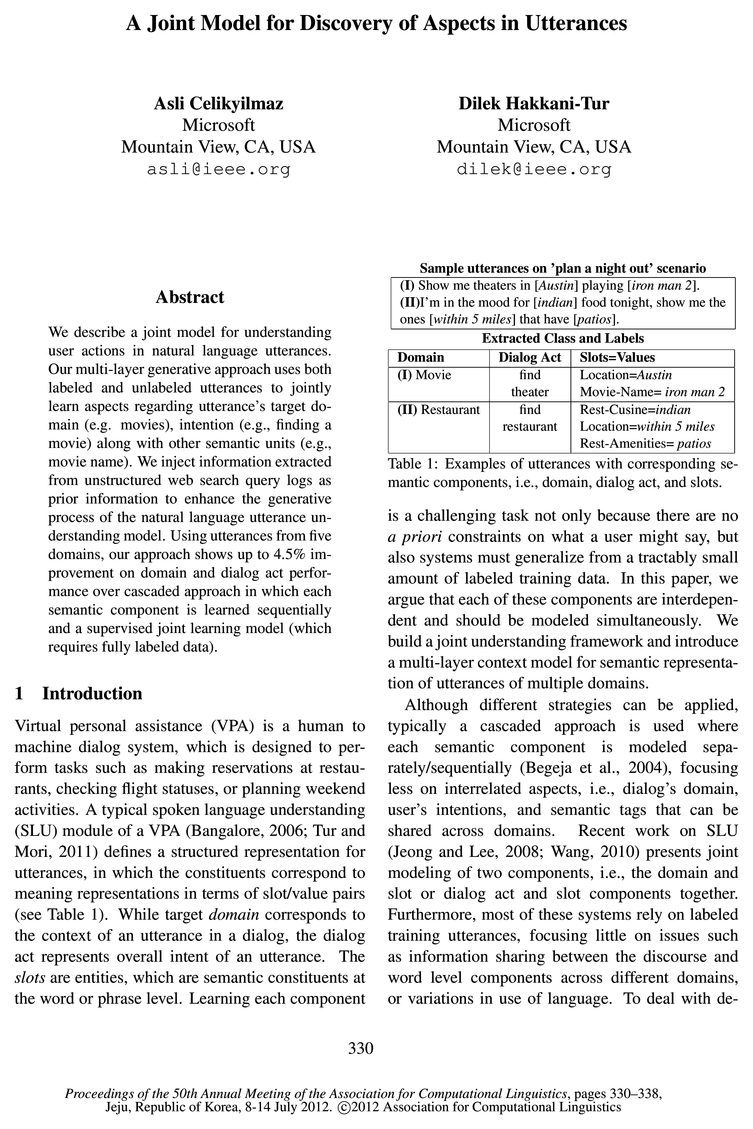

Virtual personal assistance (VPA) is a human to machine dialog system, which is designed to perform tasks such as making reservations at restaurants, checking flight statuses, or planning weekend activities.

Background

Language understanding has been well studied in the context of questiorflanswering (Harabagiu and Hickl, 2006; Liang et al., 2011), entailment (Sam-mons et al., 2010), summarization (Hovy et al., 2005; Daumé-III and Marcu, 2006), spoken language understanding (Tur and Mori, 2011; Dinarelli et al., 2009), query understanding (Popescu et al., 2010; Li, 2010; Reisinger and Pasca, 2011), etc.

Data and Approach Overview

Here we define several abstractions of our joint model as depicted in Fig.

MultiLayer Context Model - MCM

The generative process of our multilayer context model (MCM) (Fig.

Experiments

We performed several experiments to evaluate our proposed approach.

Conclusions

In this work, we introduced a joint approach to spoken language understanding that integrates two properties (i) identifying user actions in multiple domains in relation to semantic units, (ii) utilizing large amounts of unlabeled web search queries that suggest the user’s hidden intentions.

Topics

joint model

- We describe a joint model for understanding user actions in natural language utterances.Page 1, “Abstract”

- Recent work on SLU (Jeong and Lee, 2008; Wang, 2010) presents joint modeling of two components, i.e., the domain and slot or dialog act and slot components together.Page 1, “Introduction”

- Only recent research has focused on the joint modeling of SLU (Jeong and Lee, 2008; Wang, 2010) taking into account the dependencies at learning time.Page 2, “Background”

- Our joint model can discover domain D, and user’s act A as higher layer latent concepts of utterances in relation to lower layer latent semantic topics (slots) S such as named-entities (”New York”) or context bearing non-named entities (”vegan ”).Page 2, “Background”

- Here we define several abstractions of our joint model as depicted in Fig.Page 3, “Data and Approach Overview”

- * Tri—CRF: We used Triangular Chain CRF (J eong and Lee, 2008) as our supervised joint model baseline.Page 7, “Experiments”

- We evaluate the performance of our joint model on two experiments using two metrics.Page 7, “Experiments”

- The results show that our joint modeling approach has an advantage over the other joint models (i.e., Tri—CRF) in that it can leverage unlabeled NL utterances.Page 8, “Experiments”

See all papers in Proc. ACL 2012 that mention joint model.

See all papers in Proc. ACL that mention joint model.

Back to top.

labeled data

- Using utterances from five domains, our approach shows up to 4.5% improvement on domain and dialog act performance over cascaded approach in which each semantic component is learned sequentially and a supervised joint learning model (which requires fully labeled data ).Page 1, “Abstract”

- The contributions of this paper are as follows: (i) construction of a novel Bayesian framework for semantic parsing of natural language (NL) utterances in a unifying framework in §4, (ii) representation of seed labeled data and information from web queries as informative prior to design a novel utterance understanding model in §3 & §4, (iii) comparison of our results to supervised sequential and joint learning methods on NL utterances in §5.Page 2, “Introduction”

- We conclude that our generative model achieves noticeable improvement compared to discriminative models when labeled data is scarce.Page 2, “Introduction”

- Our algorithm assigns domairfldialog-act/slot labels to each topic at each layer in the hierarchy using labeled data (explained in §4.)Page 3, “Data and Approach Overview”

- Here, we not only want to demonstrate the performance of each component of MCM but also their performance under limited amount of labeled data .Page 7, “Experiments”

- When the number of labeled data is small (niL £25%*nL), our WebPrior—MCM has a better performance on domain and act predictions compared to the two baselines.Page 7, “Experiments”

- Adding labeled data improves the performance of all models however supervised models benefit more compared to MCM models.Page 8, “Experiments”

- We use only 10% of the utterances as labeled data in this experiment and incrementally add unlabeled data (90% of labeled data are treated as unlabeled).Page 8, “Experiments”

See all papers in Proc. ACL 2012 that mention labeled data.

See all papers in Proc. ACL that mention labeled data.

Back to top.

n-grams

- We represent each utterance a as a vector wu of Nu word n-grams (segments), wuj, each of which are chosen from a vocabulary W of fixed-size V. We use entity lists obtained from web sources (explained next) to identify segments in the corpus.Page 3, “Data and Approach Overview”

- Web n-Grams (G).Page 3, “Data and Approach Overview”

- * Web n-Gram Context Base Measure (2%): As explained in §3, we use the web n-grams as additional information for calculating the base measures of the Dirichlet topic distributions.Page 5, “MultiLayer Context Model - MCM”

- In (1) we assume that entities (E) are more indicative of the domain compared to other n-grams (G) and should be more dominant in sampling decision for domain topics.Page 5, “MultiLayer Context Model - MCM”

- During Gibbs sampling, we keep track of the frequency of draws of domain, dialog act and slot indicating n-grams wj, in M D, M A and MS matrices, respectively.Page 6, “MultiLayer Context Model - MCM”

- Our vocabulary consists of n—grams and segments (phrases) in utterances that are extracted using web n-grams and entity lists of §3.Page 6, “Experiments”

See all papers in Proc. ACL 2012 that mention n-grams.

See all papers in Proc. ACL that mention n-grams.

Back to top.

unlabeled data

- At each random selection, the rest of the utterances are used as unlabeled data to boost the performance of MCM.Page 7, “Experiments”

- Being Bayesian, our model can incorporate unlabeled data at training time.Page 8, “Experiments”

- Here, we evaluate the performance gain on domain, act and slot predictions as more unlabeled data is introduced at learning time.Page 8, “Experiments”

- We use only 10% of the utterances as labeled data in this experiment and incrementally add unlabeled data (90% of labeled data are treated as unlabeled).Page 8, “Experiments”

- 71% (n=lO,25,..) unlabeled data indicates that the WebPrior—MCM is trained using 71% of unlabeled utterances along with training utterances.Page 8, “Experiments”

- Adding unlabeled data has a positive impact on the performance of all three se-Page 8, “Experiments”

See all papers in Proc. ACL 2012 that mention unlabeled data.

See all papers in Proc. ACL that mention unlabeled data.

Back to top.

n-gram

- (Ilia) Entity List Prior (Illa) Web N-Gram Context PriorPage 4, “Data and Approach Overview”

- n-gram ——> web quer logs domain specific entity act parameters prior \Page 4, “Data and Approach Overview”

- * Web n-Gram Context Base Measure (2%): As explained in §3, we use the web n-grams as additional information for calculating the base measures of the Dirichlet topic distributions.Page 5, “MultiLayer Context Model - MCM”

- * Corpus n-Gram Base Measure (2%): Similar to other measures, MCM also encodes n-gram constraints as word-frequency features extracted from labeled utterances.Page 5, “MultiLayer Context Model - MCM”

- * Base—MCM: Our first version injects an informative prior for domain, dialog act and slot topic distributions using information extracted from only labeled training utterances and inject as prior constraints (corpus n-gram base measure during topic assignments.Page 7, “Experiments”

See all papers in Proc. ACL 2012 that mention n-gram.

See all papers in Proc. ACL that mention n-gram.

Back to top.

natural language

- We describe a joint model for understanding user actions in natural language utterances.Page 1, “Abstract”

- We inject information extracted from unstructured web search query logs as prior information to enhance the generative process of the natural language utterance understanding model.Page 1, “Abstract”

- The contributions of this paper are as follows: (i) construction of a novel Bayesian framework for semantic parsing of natural language (NL) utterances in a unifying framework in §4, (ii) representation of seed labeled data and information from web queries as informative prior to design a novel utterance understanding model in §3 & §4, (iii) comparison of our results to supervised sequential and joint learning methods on NL utterances in §5.Page 2, “Introduction”

- However data sources in VPA systems pose new challenges, such as variability and ambiguities in natural language , or short utterances that rarely contain contextual information, etc.Page 2, “Background”

- Experimental results using the new Bayesian model indicate that we can effectively learn and discover meta-aspects in natural language utterances, outperforming the supervised baselines, especially when there are fewer labeled and more unlabeled utterances.Page 8, “Conclusions”

See all papers in Proc. ACL 2012 that mention natural language.

See all papers in Proc. ACL that mention natural language.

Back to top.

generative process

- We inject information extracted from unstructured web search query logs as prior information to enhance the generative process of the natural language utterance understanding model.Page 1, “Abstract”

- The generative process of our multilayer context model (MCM) (Fig.Page 4, “MultiLayer Context Model - MCM”

- This is because we utilize domain priors obtained from the web sources as supervision during generative process as well as unlabeled utterances that enable handling language variability.Page 8, “Experiments”

See all papers in Proc. ACL 2012 that mention generative process.

See all papers in Proc. ACL that mention generative process.

Back to top.

Gibbs sampling

- Thus, we use Markov Chain Monte Carlo (MCMC) method,specifically Gibbs sampling , to model the posterior distribution Pym/(Du, Aud, Sujdla‘fi‘, a‘f, cuff, fl) by obtaining samples (Du, Aud, Sujd) drawn from this distribution.Page 5, “MultiLayer Context Model - MCM”

- During Gibbs sampling , we keep track of the frequency of draws of domain, dialog act and slot indicating n-grams wj, in M D, M A and MS matrices, respectively.Page 6, “MultiLayer Context Model - MCM”

- For Base—MGM and WebPrior—MCM, we run Gibbs sampler for 2000 iterations with the first 500 samples as bum-in.Page 7, “Experiments”

See all papers in Proc. ACL 2012 that mention Gibbs sampling.

See all papers in Proc. ACL that mention Gibbs sampling.

Back to top.

maXS

- and predicted dialog act by arg maxa 13(a|ud*):Page 6, “MultiLayer Context Model - MCM”

- * N M311 a; = arg maXa [6351 a * HF”, M1: ] (6)Page 6, “MultiLayer Context Model - MCM”

- For each segment wuj in u, its predicted slot are determined by arg maXS P(sj|wuj,d*,sj_1):Page 6, “MultiLayer Context Model - MCM”

See all papers in Proc. ACL 2012 that mention maXS.

See all papers in Proc. ACL that mention maXS.

Back to top.

topic distributions

- In the topic model literature, such constraints are sometimes used to deterministically allocate topic assignments to known labels (Labeled Topic Modeling (Ramage et al., 2009)) or in terms of pre-learnt topics encoded as prior knowledge on topic distributions in documents (Reisinger and Pasca, 2009).Page 4, “MultiLayer Context Model - MCM”

- * Web n-Gram Context Base Measure (2%): As explained in §3, we use the web n-grams as additional information for calculating the base measures of the Dirichlet topic distributions .Page 5, “MultiLayer Context Model - MCM”

- * Base—MCM: Our first version injects an informative prior for domain, dialog act and slot topic distributions using information extracted from only labeled training utterances and inject as prior constraints (corpus n-gram base measure during topic assignments.Page 7, “Experiments”

See all papers in Proc. ACL 2012 that mention topic distributions.

See all papers in Proc. ACL that mention topic distributions.

Back to top.

topic models

- In hierarchical topic models (Blei et al., 2003; Mimno et al., 2007), etc., topics are represented as distributions over words, and each document expresses an admixture of these topics, both of which have symmetric Dirichlet (Dir) prior distributions.Page 4, “MultiLayer Context Model - MCM”

- In the topic model literature, such constraints are sometimes used to deterministically allocate topic assignments to known labels (Labeled Topic Modeling (Ramage et al., 2009)) or in terms of pre-learnt topics encoded as prior knowledge on topic distributions in documents (Reisinger and Pasca, 2009).Page 4, “MultiLayer Context Model - MCM”

- 3See (Wallach, 2008) Chapter 3 for analysis of hyper-priors on topic models .Page 5, “MultiLayer Context Model - MCM”

See all papers in Proc. ACL 2012 that mention topic models.

See all papers in Proc. ACL that mention topic models.

Back to top.