Article Structure

Abstract

When automatically translating from a weakly inflected source language like English to a target language with richer grammatical features such as gender and dual number, the output commonly contains morpho-syntactic agreement errors.

Introduction

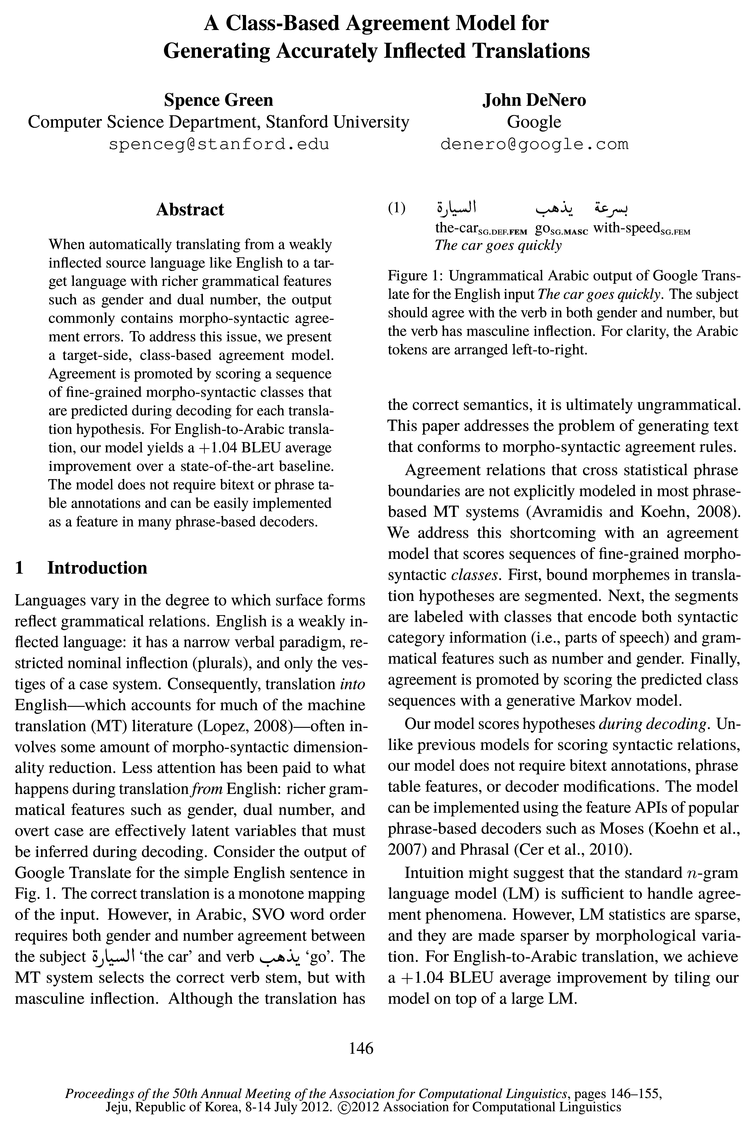

Languages vary in the degree to which surface forms reflect grammatical relations.

A Class-based Model of Agreement

2.1 Morpho-syntactic Agreement

Inference during Translation Decoding

Scoring the agreement model as part of translation decoding requires a novel inference procedure.

Related Work

We compare our class-based model to previous approaches to scoring syntactic relations in MT.

Experiments

We first evaluate the Arabic segmenter and tagger components independently, then provide English-Arabic translation quality results.

Discussion of Translation Results

Tbl.

Conclusion and Outlook

Our class-based agreement model improves translation quality by promoting local agreement, but with a minimal increase in decoding time and no additional storage requirements for the phrase table.

Topics

phrase-based

- The model does not require bitext or phrase table annotations and can be easily implemented as a feature in many phrase-based decoders.Page 1, “Abstract”

- Agreement relations that cross statistical phrase boundaries are not explicitly modeled in most phrase-based MT systems (Avramidis and Koehn, 2008).Page 1, “Introduction”

- The model can be implemented using the feature APIs of popular phrase-based decoders such as Moses (Koehn et al., 2007) and Phrasal (Cer et al., 2010).Page 1, “Introduction”

- We chose a bigram model due to the aggressive recombination strategy in our phrase-based decoder.Page 4, “A Class-based Model of Agreement”

- 3.1 Phrase-based Translation DecodingPage 4, “Inference during Translation Decoding”

- We consider the standard phrase-based approach to MT (Och and Ney, 2004).Page 4, “Inference during Translation Decoding”

- Subotin (2011) recently extended factored translation models to hierarchical phrase-based translation and developed a discriminative model for predicting target-side morphology in English-Czech.Page 5, “Related Work”

- Experimental Setup Our decoder is based on the phrase-based approach to translation (Och and Ney, 2004) and contains various feature functions including phrase relative frequency, word-level alignment statistics, and lexicalized reordering models (Tillmann, 2004; Och et al., 2004).Page 6, “Experiments”

- Phrase Table Coverage In a standard phrase-based system, effective translation into a highly inflected target language requires that the phrase table contain the inflected word forms necessary to construct an output with correct agreement.Page 8, “Discussion of Translation Results”

- This large gap between the unigram recall of the actual translation output (top) and the lexical coverage of the phrase-based model (bottom) indicates that translation performance can be improved dramatically by altering the translation model through features such as ours, without expanding the search space of the decoder.Page 8, “Discussion of Translation Results”

See all papers in Proc. ACL 2012 that mention phrase-based.

See all papers in Proc. ACL that mention phrase-based.

Back to top.

CRF

- We treat segmentation as a character-level sequence modeling problem and train a linear-chain conditional random field ( CRF ) model (Lafferty et al., 2001).Page 2, “A Class-based Model of Agreement”

- Class-based Agreement Model t E T Set of morpho-syntactic classes 3 E S Set of all word segments 6569 Learned weights for the CRF-based segmenter 0mg Learned weights for the CRF-based tagger gbo, gbt CRF potential functions (emission and transition)Page 3, “A Class-based Model of Agreement”

- For this task we also train a standard CRF model on full sentences with gold classes and segmentation.Page 3, “A Class-based Model of Agreement”

- The CRF tagging model predicts a target-side class sequence 7*Page 3, “A Class-based Model of Agreement”

- Features The tagging CRF includes emission features gbo that indicate a class 7-,; appearing with various orthographic characteristics of the word sequence being tagged.Page 3, “A Class-based Model of Agreement”

- In typical CRF inference, the entire observation sequence is available throughout inference, so these features can be scored on observed words in an arbitrary neighborhood around the current position i.Page 3, “A Class-based Model of Agreement”

- However, we conduct CRF inference in tandem with the translation decoding procedure (§3), creating an environment in which subsequent words of the observation are not available; the MT system has yet to generate the rest of the translation when the tagging features for a position are scored.Page 3, “A Class-based Model of Agreement”

- The CRF tagger model defines a conditional distribution p(7'|e; 6mg) for a class sequence 7' given a sentence e and model parameters 6mg.Page 4, “A Class-based Model of Agreement”

- The model can be implemented with a standard CRF package, trained on existing treebanks for many languages, and integrated easily with many MT feature APIs.Page 8, “Conclusion and Outlook”

See all papers in Proc. ACL 2012 that mention CRF.

See all papers in Proc. ACL that mention CRF.

Back to top.

LM

- Intuition might suggest that the standard 71- gram language model ( LM ) is suflicient to handle agreement phenomena.Page 1, “Introduction”

- However, LM statistics are sparse, and they are made sparser by morphological variation.Page 1, “Introduction”

- For English-to-Arabic translation, we achieve a +1.04 BLEU average improvement by tiling our model on top of a large LM .Page 1, “Introduction”

- For contexts in which the LM is guaranteed to back off (for instance, after an unseen bigram), our decoder maintains only the minimal state needed (perhaps only a single word).Page 4, “A Class-based Model of Agreement”

- initialize 77 to —oo set 77(t) = 0 compute 7* from parameters <8, 5%, 7r, is_goal> compute q(e{,+1) 2 p(7'*) under the generative LM set model state anew = <§L, 7-2) for prefix ef Output: q(e£+1)Page 4, “Inference during Translation Decoding”

- They used a target-side LM over Combinatorial Categorial Grammar (CCG) supertags, along with a penalty for the number of operator violations, and also modified the phrase probabilities based on the tags.Page 5, “Related Work”

- Then they mixed the classes into a word-based LM .Page 6, “Related Work”

- Target-Side Syntactic LMs Our agreement model is a form of syntactic LM , of which there is a long history of research, especially in speech processing.5 Syntactic LMs have traditionally been too slow for scoring during MT decoding.Page 6, “Related Work”

- The target-side structure enables scoring hypotheses with a trigram dependency LM .Page 6, “Related Work”

See all papers in Proc. ACL 2012 that mention LM.

See all papers in Proc. ACL that mention LM.

Back to top.

translation quality

- However, using lexical coverage experiments, we show that there is ample room for translation quality improvements through better selection of forms that already exist in the translation model.Page 2, “Introduction”

- Segmentation is typically applied as a bitext preprocessing step, and there is a rich literature on the effect of different segmentation schemata on translation quality (Koehn and Knight, 2003; Habash and Sadat, 2006; El Kholy and Habash, 2012).Page 2, “A Class-based Model of Agreement”

- We first evaluate the Arabic segmenter and tagger components independently, then provide English-Arabic translation quality results.Page 6, “Experiments”

- 5.2 Translation QualityPage 6, “Experiments”

- We evaluated translation quality with BLEU-4 (Pa-pineni et al., 2002) and computed statistical significance with the approximate randomization method of Riezler and Maxwell (2005).9Page 7, “Experiments”

- 2 shows translation quality results on newswire, while Tbl.Page 7, “Discussion of Translation Results”

- Our class-based agreement model improves translation quality by promoting local agreement, but with a minimal increase in decoding time and no additional storage requirements for the phrase table.Page 8, “Conclusion and Outlook”

See all papers in Proc. ACL 2012 that mention translation quality.

See all papers in Proc. ACL that mention translation quality.

Back to top.

translation model

- However, using lexical coverage experiments, we show that there is ample room for translation quality improvements through better selection of forms that already exist in the translation model .Page 2, “Introduction”

- Translation Model 6 Target sequence of I words f Source sequence of J words a Sequence of K phrase alignments for (e, f) H Permutation of the alignments for target word order 6 h Sequence of M feature functions A Sequence of learned weights for the M features H A priority queue of hypothesesPage 3, “A Class-based Model of Agreement”

- 3.3 Translation Model FeaturesPage 5, “Inference during Translation Decoding”

- Factored Translation Models Factored translation models (Koehn and Hoang, 2007) facilitate a more data-oriented approach to agreement modeling.Page 5, “Related Work”

- Subotin (2011) recently extended factored translation models to hierarchical phrase-based translation and developed a discriminative model for predicting target-side morphology in English-Czech.Page 5, “Related Work”

- We trained the translation model on 502 million words of parallel text collected from a variety of sources, including the Web.Page 7, “Experiments”

- This large gap between the unigram recall of the actual translation output (top) and the lexical coverage of the phrase-based model (bottom) indicates that translation performance can be improved dramatically by altering the translation model through features such as ours, without expanding the search space of the decoder.Page 8, “Discussion of Translation Results”

See all papers in Proc. ACL 2012 that mention translation model.

See all papers in Proc. ACL that mention translation model.

Back to top.

language model

- Intuition might suggest that the standard 71- gram language model (LM) is suflicient to handle agreement phenomena.Page 1, “Introduction”

- However, in MT, we seek a measure of sentence quality (1(6) that is comparable across different hypotheses on the beam (much like the n-gram language model score).Page 4, “A Class-based Model of Agreement”

- We trained a simple add-1 smoothed bigram language model over gold class sequences in the same treebank training data:Page 4, “A Class-based Model of Agreement”

- With a trigram language model , the state might be the last two words of the translation prefix.Page 4, “Inference during Translation Decoding”

- Monz (2011) recently investigated parameter estimation for POS-based language models , but his classes did not include inflectional features.Page 6, “Related Work”

- One exception was the quadratic-time dependency language model presented by Galley and Manning (2009).Page 6, “Related Work”

- Our distributed 4—gram language model was trained on 600 million words of Arabic text, also collected from many sources including the Web (Brants et al., 2007).Page 7, “Experiments”

See all papers in Proc. ACL 2012 that mention language model.

See all papers in Proc. ACL that mention language model.

Back to top.

bigram

- The features are indicators for (character, position, label) triples for a five Character window and bigram label transition indicators.Page 3, “A Class-based Model of Agreement”

- Bigram transition features gbt encode local agreement relations.Page 3, “A Class-based Model of Agreement”

- We trained a simple add-1 smoothed bigram language model over gold class sequences in the same treebank training data:Page 4, “A Class-based Model of Agreement”

- We chose a bigram model due to the aggressive recombination strategy in our phrase-based decoder.Page 4, “A Class-based Model of Agreement”

- For contexts in which the LM is guaranteed to back off (for instance, after an unseen bigram ), our decoder maintains only the minimal state needed (perhaps only a single word).Page 4, “A Class-based Model of Agreement”

See all papers in Proc. ACL 2012 that mention bigram.

See all papers in Proc. ACL that mention bigram.

Back to top.

treebanks

- More than 25 treebanks (in 22 languages) can be automatically mapped to this tag set, which includes “Noun” (nominals), “Verb” (verbs), “Adj” (adjectives), and “ADP” (pre-and postpositions).Page 3, “A Class-based Model of Agreement”

- Many of these treebanks also contain per-token morphological annotations.Page 3, “A Class-based Model of Agreement”

- We trained a simple add-1 smoothed bigram language model over gold class sequences in the same treebank training data:Page 4, “A Class-based Model of Agreement”

- Experimental Setup All experiments use the Penn Arabic Treebank (ATB) (Maamouri et al., 2004) parts 1—3 divided into training/dev/test sections according to the canonical split (Rambow et al., 2005).7Page 6, “Experiments”

- The model can be implemented with a standard CRF package, trained on existing treebanks for many languages, and integrated easily with many MT feature APIs.Page 8, “Conclusion and Outlook”

See all papers in Proc. ACL 2012 that mention treebanks.

See all papers in Proc. ACL that mention treebanks.

Back to top.

model scores

- Our model scores hypotheses during decoding.Page 1, “Introduction”

- The agreement model scores sequences of morpho-syntactic word classes, which express grammatical features relevant to agreement.Page 2, “A Class-based Model of Agreement”

- However, in MT, we seek a measure of sentence quality (1(6) that is comparable across different hypotheses on the beam (much like the n-gram language model score ).Page 4, “A Class-based Model of Agreement”

- Discriminative model scores have been used as MT features (Galley and Manning, 2009), but we obtained better results by scoring the l-best class sequences with a generative model.Page 4, “A Class-based Model of Agreement”

- The agreement model score is one decoder feature function.Page 5, “Inference during Translation Decoding”

See all papers in Proc. ACL 2012 that mention model scores.

See all papers in Proc. ACL that mention model scores.

Back to top.

phrase table

- The model does not require bitext or phrase table annotations and can be easily implemented as a feature in many phrase-based decoders.Page 1, “Abstract”

- Unlike previous models for scoring syntactic relations, our model does not require bitext annotations, phrase table features, or decoder modifications.Page 1, “Introduction”

- Phrase Table Coverage In a standard phrase-based system, effective translation into a highly inflected target language requires that the phrase table contain the inflected word forms necessary to construct an output with correct agreement.Page 8, “Discussion of Translation Results”

- During development, we observed that the phrase table of our large-scale English-Arabic system did often contain the inflected forms that we desired the system to select.Page 8, “Discussion of Translation Results”

- Our class-based agreement model improves translation quality by promoting local agreement, but with a minimal increase in decoding time and no additional storage requirements for the phrase table .Page 8, “Conclusion and Outlook”

See all papers in Proc. ACL 2012 that mention phrase table.

See all papers in Proc. ACL that mention phrase table.

Back to top.

MT system

- The MT system selects the correct verb stem, but with masculine inflection.Page 1, “Introduction”

- Agreement relations that cross statistical phrase boundaries are not explicitly modeled in most phrase-based MT systems (Avramidis and Koehn, 2008).Page 1, “Introduction”

- However, we conduct CRF inference in tandem with the translation decoding procedure (§3), creating an environment in which subsequent words of the observation are not available; the MT system has yet to generate the rest of the translation when the tagging features for a position are scored.Page 3, “A Class-based Model of Agreement”

- To our knowledge, Uszkoreit and Brants (2008) are the only recent authors to show an improvement in a state-of-the-art MT system using class-based LMs.Page 5, “Related Work”

See all papers in Proc. ACL 2012 that mention MT system.

See all papers in Proc. ACL that mention MT system.

Back to top.

statistically significant

- The MT06 result is statistically significant at p g 0.01; MT08 is significant at p g 0.02.Page 7, “Experiments”

- We evaluated translation quality with BLEU-4 (Pa-pineni et al., 2002) and computed statistical significance with the approximate randomization method of Riezler and Maxwell (2005).9Page 7, “Experiments”

- We realized smaller, yet statistically significant , gains on the mixed genre data sets.Page 7, “Discussion of Translation Results”

- The baseline contained 78 errors, while our system produced 66 errors, a statistically significant 15.4% error reduction at p S 0.01 according to a paired t-test.Page 8, “Discussion of Translation Results”

See all papers in Proc. ACL 2012 that mention statistically significant.

See all papers in Proc. ACL that mention statistically significant.

Back to top.

beam search

- This decoding problem is NP—hard, thus a beam search is often used (Fig.Page 4, “Inference during Translation Decoding”

- The beam search relies on three operations, two of which affect the agreement model:Page 4, “Inference during Translation Decoding”

- Figure 4: Breadth-first beam search algorithm of Och and Ney (2004).Page 4, “Inference during Translation Decoding”

- The beam search maintains state for each derivation, the score of which is a linear combination of the feature values.Page 4, “Inference during Translation Decoding”

See all papers in Proc. ACL 2012 that mention beam search.

See all papers in Proc. ACL that mention beam search.

Back to top.

fine-grained

- We address this shortcoming with an agreement model that scores sequences of fine-grained morpho-syntactic classes.Page 1, “Introduction”

- After segmentation, we tag each segment with a fine-grained morpho-syntactic class.Page 3, “A Class-based Model of Agreement”

- For training the tagger, we automatically converted the ATE morphological analyses to the fine-grained class set.Page 6, “Experiments”

- Finally, +POS+Agr shows the class-based model with the fine-grained classes (e. g., “Noun+Fem+S g”).Page 7, “Discussion of Translation Results”

See all papers in Proc. ACL 2012 that mention fine-grained.

See all papers in Proc. ACL that mention fine-grained.

Back to top.

phrase pair

- 0 Extend a hypothesis with a new phrase pair 0 Recombine hypotheses with identical statesPage 4, “Inference during Translation Decoding”

- Och (1999) showed a method for inducing bilingual word classes that placed each phrase pair into a two-dimensional equivalence class.Page 5, “Related Work”

- 0 Baseline system translation output: 44.6% o Phrase pairs matching source n-grams: 67.8%Page 8, “Discussion of Translation Results”

See all papers in Proc. ACL 2012 that mention phrase pair.

See all papers in Proc. ACL that mention phrase pair.

Back to top.

POS tags

- The coarse categories are the universal POS tag set described by Petrov et al.Page 3, “A Class-based Model of Agreement”

- For Arabic, we used the coarse POS tags plus definiteness and the so-called phi features (gender, number, and person).4 For example, SJWl ‘the car’ would be tagged “Noun+Def+Sg+Fem”.Page 3, “A Class-based Model of Agreement”

- For comparison, +POS indicates our class-based model trained on the 11 coarse POS tags only (e.g., “Noun”).Page 7, “Discussion of Translation Results”

See all papers in Proc. ACL 2012 that mention POS tags.

See all papers in Proc. ACL that mention POS tags.

Back to top.

model training

- For comparison, +POS indicates our class-based model trained on the 11 coarse POS tags only (e.g., “Noun”).Page 7, “Discussion of Translation Results”

- The best result—a +1.04 BLEU average gain—was achieved when the class-based model training data, MT tuning set, and MT evaluation set contained the same genre.Page 7, “Discussion of Translation Results”

- We achieved best results when the model training data, MT tuning set, and MT evaluation set contained roughly the same genre.Page 8, “Conclusion and Outlook”

See all papers in Proc. ACL 2012 that mention model training.

See all papers in Proc. ACL that mention model training.

Back to top.

development set

- Table 1: Intrinsic evaluation accuracy [‘70] ( development set ) for Arabic segmentation and tagging.Page 6, “Experiments”

- 1 shows development set accuracy for two settings.Page 6, “Experiments”

- We tuned the feature weights on a development set using lattice-based minimum error rate training (MERT) (Macherey et al.,Page 6, “Experiments”

See all papers in Proc. ACL 2012 that mention development set.

See all papers in Proc. ACL that mention development set.

Back to top.

BLEU

- For English-to-Arabic translation, our model yields a +1.04 BLEU average improvement over a state-of-the-art baseline.Page 1, “Abstract”

- For English-to-Arabic translation, we achieve a +1.04 BLEU average improvement by tiling our model on top of a large LM.Page 1, “Introduction”

- The best result—a +1.04 BLEU average gain—was achieved when the class-based model training data, MT tuning set, and MT evaluation set contained the same genre.Page 7, “Discussion of Translation Results”

See all papers in Proc. ACL 2012 that mention BLEU.

See all papers in Proc. ACL that mention BLEU.

Back to top.

Viterbi

- It can be learned from gold-segmented data, generally applies to languages with bound morphemes, and does not require a hand-compiled lexicon.3 Moreover, it has only four labels, so Viterbi decoding is very fast.Page 3, “A Class-based Model of Agreement”

- Incremental Greedy Decoding Decoding with the CRF—based tagger model in this setting requires some slight modifications to the Viterbi algorithm.Page 5, “Inference during Translation Decoding”

- This forces the Viterbi path to go through If.Page 5, “Inference during Translation Decoding”

See all papers in Proc. ACL 2012 that mention Viterbi.

See all papers in Proc. ACL that mention Viterbi.

Back to top.