Article Structure

Abstract

This paper presents a joint model for template filling, where the goal is to automatically specify the fields of target relations such as seminar announcements or corporate acquisition events.

Introduction

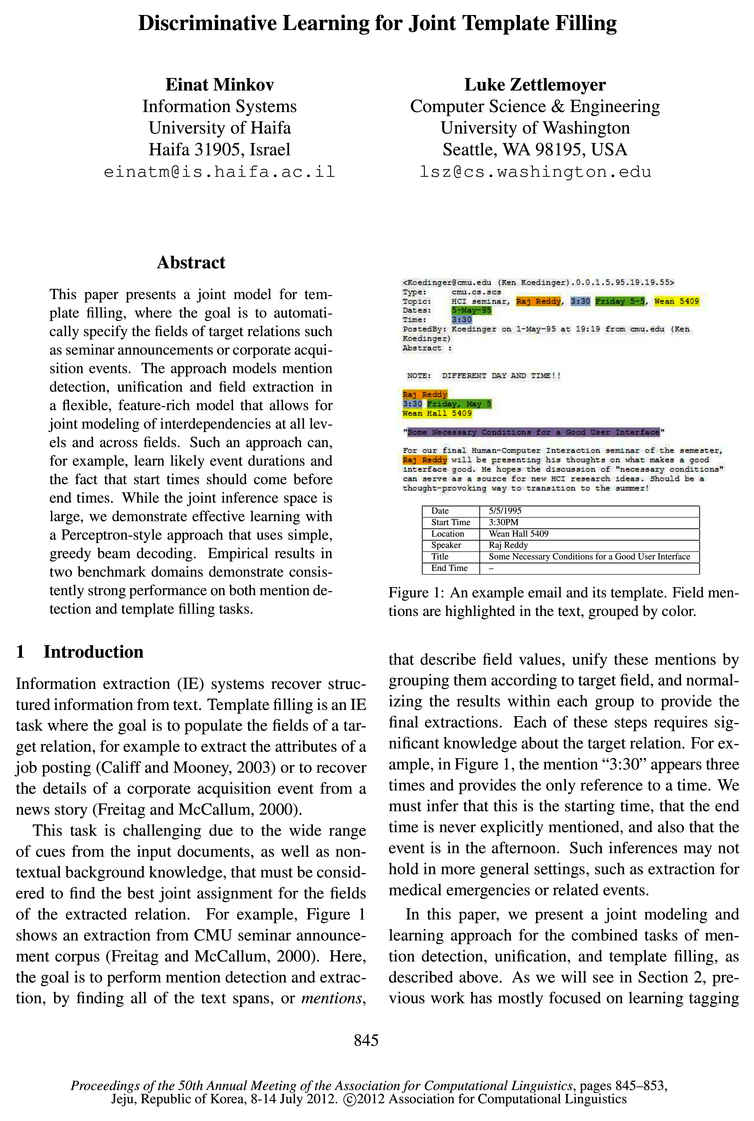

Information extraction (IE) systems recover structured information from text.

Related Work

Research on the task of template filling has focused on the extraction of field value mentions from the underlying text.

Problem Setting

In the template filling task, a target relation 7“ is provided, comprised of attributes (also referred to as

Structured Learning

Next, we describe how valid candidate extractions are instantiated (Sec.

Seminar Extraction Task

Dataset The CMU seminar announcement dataset (Freitag and McCallum, 2000) includes 485 emails containing seminar announcements.

Corporate Acquisitions

Dataset The corporate acquisitions corpus contains 600 newswire articles, describing factual or potential corporate acquisition events.

Summary and Future Work

We presented a joint approach for template filling that models mention detection, unification, and field extraction in a flexible, feature-rich model.

Topics

named entity

- In addition to proper nouns ( named entity mentions) that are considered in this work, they also account for nominal and pronominal noun mentions.Page 2, “Related Work”

- Interestingly, several researchers have attempted to model label consistency and high-level relational constraints using state-of-the-art sequential models of named entity recognition (NER).Page 2, “Related Work”

- In the proposed framework we take a bottom-up approach to identifying entity mentions in text, where given a noisy set of candidate named entities , described using rich semantic and surface features, discriminative learning is applied to label these mentions.Page 2, “Related Work”

- In Figure 2, these relations are denoted by double-line boundary, including location, person, title, date and time; every tuple of these relations maps to a named entity mention.1Page 3, “Problem Setting”

- Figure 3 demonstrates the correct mapping of named entity mentions to tuples, as well as tuple unification, for the example shown in Figure 1.Page 3, “Problem Setting”

- Named entity recognition.Page 4, “Structured Learning”

- The candidate tuples generated using this procedure are structured entities, constructed using typed named entity recognition, unification, and hierarchical assignment of field values (Figure 3).Page 4, “Structured Learning”

- We used a set of rules to extract candidate named entities per the types specified in Figure 2.4 The rules encode information typically used in NER, including content and contextual patterns, as well as lookups in available dictionaries (Finkel et al., 2005; Minkov et al., 2005).Page 5, “Seminar Extraction Task”

- recall for the named entities of type date and time is near perfect, and is estimated at 96%, 91% and 90% for location, speaker and title, respectively.Page 5, “Seminar Extraction Task”

- Another feature encodes the size of the most semantically detailed named entity that maps to a field; for example, the most detailed entity mention of type stime in Figure l is “3:30”, comprising of two attribute values, namely hour and minutes.Page 6, “Seminar Extraction Task”

- We have experimented with features that encode the shortest distance between named entity mentions mapping to different fields (measured in terms of separating lines or sentences), based on the hypothesis that field values typically co-appear in the same segments of the document.Page 6, “Seminar Extraction Task”

See all papers in Proc. ACL 2012 that mention named entity.

See all papers in Proc. ACL that mention named entity.

Back to top.

joint modeling

- This paper presents a joint model for template filling, where the goal is to automatically specify the fields of target relations such as seminar announcements or corporate acquisition events.Page 1, “Abstract”

- The approach models mention detection, unification and field extraction in a flexible, feature-rich model that allows for joint modeling of interdependencies at all levels and across fields.Page 1, “Abstract”

- In this paper, we present a joint modeling and learning approach for the combined tasks of mention detection, unification, and template filling, as described above.Page 1, “Introduction”

- We also demonstrate, through ablation studies on the feature set, the need for joint modeling and the relative importance of the different types of joint constraints.Page 2, “Introduction”

- An important question to be addressed in evaluation is to what extent the joint modeling approach contributes to performance.Page 6, “Seminar Extraction Task”

- This is largely due to erroneous assignments of named entities of other types (mainly, person) as titles; such errors are avoided in the full joint model , where tuple validity is enforced.Page 6, “Seminar Extraction Task”

- As argued before, joint modeling is especially important for irregular fields, such as title; we provide first results on this field.Page 7, “Seminar Extraction Task”

- We believe that these results demonstrate the benefit of performing mention recognition as part of a joint model that takes into account detailed semantics of the underlying relational schema, when available.Page 7, “Seminar Extraction Task”

- Unfortunately, we can not directly compare against a generative joint model evaluated on this dataset (Haghighi and Klein, 2010).7 The best results per attribute are shown in boldface.Page 8, “Corporate Acquisitions”

- This approach allows for joint modeling of interdependen-cies at all levels and across fields.Page 8, “Summary and Future Work”

- Finally, it is worth exploring scaling the approach to unrestricted event extraction, and jointly model extracting more than one relation per document.Page 8, “Summary and Future Work”

See all papers in Proc. ACL 2012 that mention joint modeling.

See all papers in Proc. ACL that mention joint modeling.

Back to top.

entity mentions

- In their model, slot-filling entities are first generated, and entity mentions are then realized in text.Page 2, “Related Work”

- In addition to proper nouns (named entity mentions ) that are considered in this work, they also account for nominal and pronominal noun mentions.Page 2, “Related Work”

- Compared with the extraction of tuples of entity mention pairs, template filling is associated with a more complex target relational schema.Page 2, “Related Work”

- In the proposed framework we take a bottom-up approach to identifying entity mentions in text, where given a noisy set of candidate named entities, described using rich semantic and surface features, discriminative learning is applied to label these mentions.Page 2, “Related Work”

- Generally, parent-free relations in the hierarchy correspond to generic entities, realized as entity mentions in the text.Page 3, “Problem Setting”

- Figure 3 demonstrates the correct mapping of named entity mentions to tuples, as well as tuple unification, for the example shown in Figure 1.Page 3, “Problem Setting”

- Another feature encodes the size of the most semantically detailed named entity that maps to a field; for example, the most detailed entity mention of type stime in Figure l is “3:30”, comprising of two attribute values, namely hour and minutes.Page 6, “Seminar Extraction Task”

- We have experimented with features that encode the shortest distance between named entity mentions mapping to different fields (measured in terms of separating lines or sentences), based on the hypothesis that field values typically co-appear in the same segments of the document.Page 6, “Seminar Extraction Task”

- Table 5 further shows results on NER, the task of recovering the sets of named entity mentions pertaining to each target field.Page 8, “Corporate Acquisitions”

See all papers in Proc. ACL 2012 that mention entity mentions.

See all papers in Proc. ACL that mention entity mentions.

Back to top.

beam search

- 4.2), where beam search is used to find the top scoring candidates efficiently (Sec.Page 4, “Structured Learning”

- Algorithm 1: The beam search procedure 1.Page 4, “Structured Learning”

- 4.3 Beam SearchPage 4, “Structured Learning”

- We therefore propose using beam search to efficiently find the top scoring candidate.Page 4, “Structured Learning”

- that rather than instantiate the full space of valid candidate records (Section 4.1), we are interested in in-stantiating only those candidates that are likely to be assigned a high score by F. Algorithm 1 outlines the proposed beam search procedure.Page 5, “Structured Learning”

- While beam search is efficient, performance may be compromised compared with an unconstrained search.Page 5, “Structured Learning”

- Experiments We applied beam search , where corp tuples are extracted first, and acquisition tuples are constructed using the top scoring corp entities.Page 8, “Corporate Acquisitions”

See all papers in Proc. ACL 2012 that mention beam search.

See all papers in Proc. ACL that mention beam search.

Back to top.

NER

- Interestingly, several researchers have attempted to model label consistency and high-level relational constraints using state-of-the-art sequential models of named entity recognition ( NER ).Page 2, “Related Work”

- We will show that this approach yields better performance on the CMU seminar announcement dataset when evaluated in terms of NER .Page 2, “Related Work”

- Our approach is complimentary to NER methods, as it can consolidate noisy overlapping predictions from multiple systems into coherent sets.Page 2, “Related Work”

- We used a set of rules to extract candidate named entities per the types specified in Figure 2.4 The rules encode information typically used in NER , including content and contextual patterns, as well as lookups in available dictionaries (Finkel et al., 2005; Minkov et al., 2005).Page 5, “Seminar Extraction Task”

- Lexical features of this form are commonly used in NER (Finkel et al., 2005; Minkov et al., 2005).Page 5, “Seminar Extraction Task”

- (2005), applied sequential models to perform NER on this dataset, identifying named entities that pertain to the template slots.Page 7, “Seminar Extraction Task”

- Table 5 further shows results on NER , the task of recovering the sets of named entity mentions pertaining to each target field.Page 8, “Corporate Acquisitions”

See all papers in Proc. ACL 2012 that mention NER.

See all papers in Proc. ACL that mention NER.

Back to top.

beam size

- As detailed, only a set of top scoring tuples of size 1:: ( beam size ) is maintained per relation 7“ E T during candidate generation.Page 5, “Structured Learning”

- The beam size 1:: allows controlling the tradeoff between performance and cost.Page 5, “Structured Learning”

- Table 1 shows the results of our full model using beam size 1:: = 10, as well as model variants.Page 6, “Seminar Extraction Task”

- We used a default beam size 1:: = 10.Page 8, “Corporate Acquisitions”

See all papers in Proc. ACL 2012 that mention beam size.

See all papers in Proc. ACL that mention beam size.

Back to top.

structural features

- No structural features No semantic features No unification Individual fieldsPage 6, “Seminar Extraction Task”

- As shown in the table, removing the structural features hurt performance consistently across fields.Page 6, “Seminar Extraction Task”

- While newswire documents are mostly unstructured, structural features are used to indicate whether any of the purchaser, acquired and seller text spans appears inPage 7, “Corporate Acquisitions”

- Removing the inter type and structural features mildly hurt performance, on average.Page 8, “Corporate Acquisitions”

See all papers in Proc. ACL 2012 that mention structural features.

See all papers in Proc. ACL that mention structural features.

Back to top.

cross validation

- Experiments We conducted 5-fold cross validation experiments using the seminar extraction dataset.Page 6, “Seminar Extraction Task”

- For comparison, we used 5-fold cross validation , where only a subset of each train fold that corresponds to 50% of the corpus was used for training.Page 7, “Seminar Extraction Task”

- We compare our approach to their work, having obtained and used the same 5-fold cross validation splits as both works.Page 7, “Seminar Extraction Task”

See all papers in Proc. ACL 2012 that mention cross validation.

See all papers in Proc. ACL that mention cross validation.

Back to top.