Article Structure

Abstract

Bootstrapping a classifier from a small set of seed rules can be viewed as the propagation of labels between examples via features shared between them.

Introduction

In this paper, we are concerned with a case of semi-supervised learning that is close to unsupervised learning, in that the labelled and unlabelled data points are from the same domain and only a small set of seed rules is used to derive the labelled points.

Bootstrapping

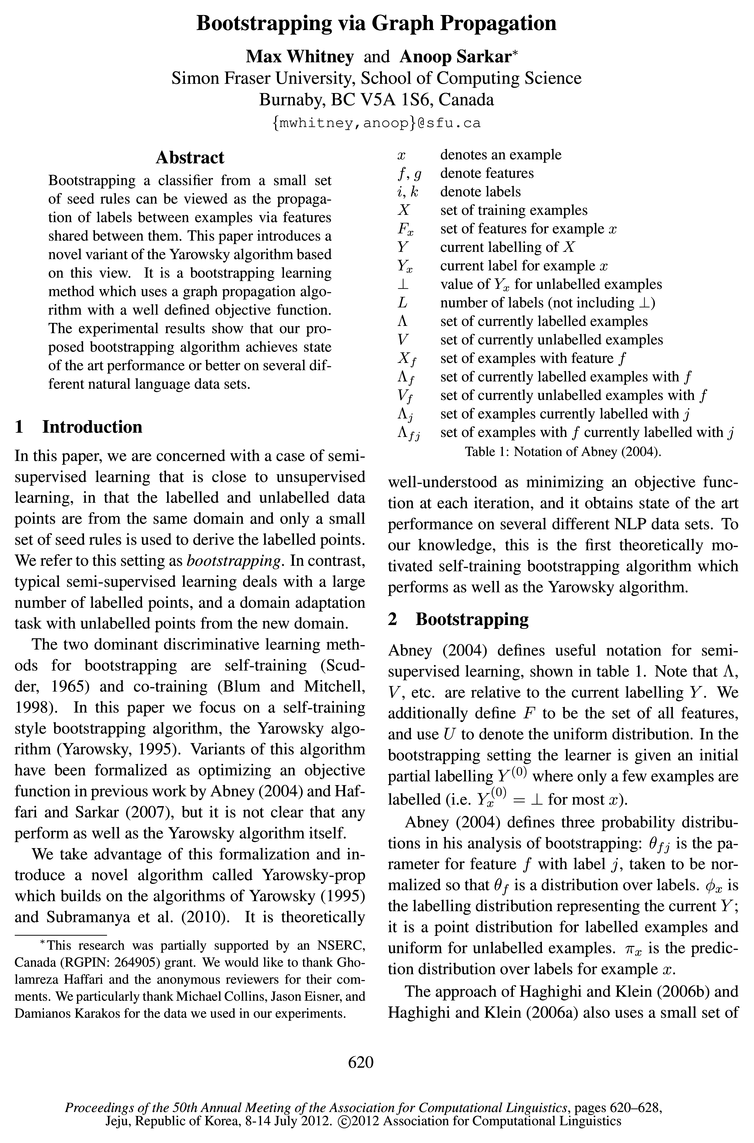

Abney (2004) defines useful notation for semi-supervised learning, shown in table 1.

Existing algorithms 3.1 Yarowsky

A decision list (DL) is a (ordered) list of feature-label pairs (rules) which is produced by assigning a score to each rule and sorting on this score.

Graph propagation

The graph propagation of Subramanya et al.

Topics

named entity

- Figure 2: A DL from iteration 5 of Yarowsky on the named entity task.Page 5, “Graph propagation”

- The task of Collins and Singer (1999) is named entity classification on data from New York Times text.7 The data set was preprocessed by a statistical parser (Collins, 1997) and all noun phrases that are potential named entities were extracted from the parse tree.Page 5, “Graph propagation”

- The test data additionally contains some noise examples which are not in the three named entity categories.Page 5, “Graph propagation”

- The named entity test set contains some examples that are neither person, organization, nor location.Page 6, “Graph propagation”

- Figure 3: Named entity test set examples where Yarowsky—prop 0—only is correct and no other tested algorithms are correct.Page 6, “Graph propagation”

- It numerically outperforms DL-CoTrain on the named entity task, is not (statistically) significantly worse on the drug and land tasks, and is significantly better on the sentence task.Page 6, “Graph propagation”

- It also numerically outperforms Yarowsky-cautious on the named entity task and is significantly better on the drug task.Page 6, “Graph propagation”

- Figure 3 shows (all) three examples from the named entity test set where Yarowsky-prop-cautious 6-only is correct but none of the other Yarowsky variants are.Page 6, “Graph propagation”

- rithms on the named entity task.Page 7, “Graph propagation”

- To evaluate the effects of cautiousness we eXamine the Yarowsky-prop 6-only algorithm on the named entity task in more detail.Page 7, “Graph propagation”

- Figure 4: Non-seeded test accuracy versus iteration for various algorithms on named entity .Page 8, “Graph propagation”

See all papers in Proc. ACL 2012 that mention named entity.

See all papers in Proc. ACL that mention named entity.

Back to top.

semi-supervised

- In this paper, we are concerned with a case of semi-supervised learning that is close to unsupervised learning, in that the labelled and unlabelled data points are from the same domain and only a small set of seed rules is used to derive the labelled points.Page 1, “Introduction”

- In contrast, typical semi-supervised learning deals with a large number of labelled points, and a domain adaptation task with unlabelled points from the new domain.Page 1, “Introduction”

- Abney (2004) defines useful notation for semi-supervised learning, shown in table 1.Page 1, “Bootstrapping”

- Haffari and Sarkar (2007) suggest a bipartite graph framework for semi-supervised learning based on their analysis of Y— l/DL-l-VS and objective (2).Page 3, “Existing algorithms 3.1 Yarowsky”

- 3.7 Semi-supervised learning algorithm of Subramanya et al.Page 3, “Existing algorithms 3.1 Yarowsky”

- (2010) give a semi-supervised algorithm for part of speech tagging.Page 3, “Existing algorithms 3.1 Yarowsky”

- Note that (3) is independent of their specific graph structure, distributions, and semi-supervised learning algorithm.Page 4, “Graph propagation”

See all papers in Proc. ACL 2012 that mention semi-supervised.

See all papers in Proc. ACL that mention semi-supervised.

Back to top.

objective function

- It is a bootstrapping learning method which uses a graph propagation algorithm with a well defined objective function .Page 1, “Abstract”

- Variants of this algorithm have been formalized as optimizing an objective function in previous work by Abney (2004) and Haffari and Sarkar (2007), but it is not clear that any perform as well as the Yarowsky algorithm itself.Page 1, “Introduction”

- well-understood as minimizing an objective function at each iteration, and it obtains state of the art performance on several different NLP data sets.Page 1, “Introduction”

- (2007) provide an objective function for this algorithm using a generalized definition of cross-entropy in terms of Bregman distance, which motivates our objective in section 4.Page 3, “Existing algorithms 3.1 Yarowsky”

- 6.5 Objective functionPage 8, “Graph propagation”

See all papers in Proc. ACL 2012 that mention objective function.

See all papers in Proc. ACL that mention objective function.

Back to top.

CRF

- Note that this is a linear model similar to a conditional random field ( CRF ) (Lafferty et al., 2001) for unstructured multiclass problems.Page 3, “Existing algorithms 3.1 Yarowsky”

- It uses a CRF (Lafferty et al., 2001) as the underlying supervised learner.Page 3, “Existing algorithms 3.1 Yarowsky”

- It differs significantly from Yarowsky in two other ways: First, instead of only training a CRF it also uses a step of graph propagation between distributions over the n-grams in the data.Page 3, “Existing algorithms 3.1 Yarowsky”

See all papers in Proc. ACL 2012 that mention CRF.

See all papers in Proc. ACL that mention CRF.

Back to top.

word sense

- th is similar to that of Yarowsky (1995) but is better specified and omits word sense disambiguation optimizations.Page 2, “Existing algorithms 3.1 Yarowsky”

- The tasks of Eisner and Karakos (2005) are word sense disambiguation on several English words which have two senses corresponding to two different words in French.Page 5, “Graph propagation”

- There is no difference on the word sense data sets.Page 6, “Graph propagation”

See all papers in Proc. ACL 2012 that mention word sense.

See all papers in Proc. ACL that mention word sense.

Back to top.