Article Structure

Abstract

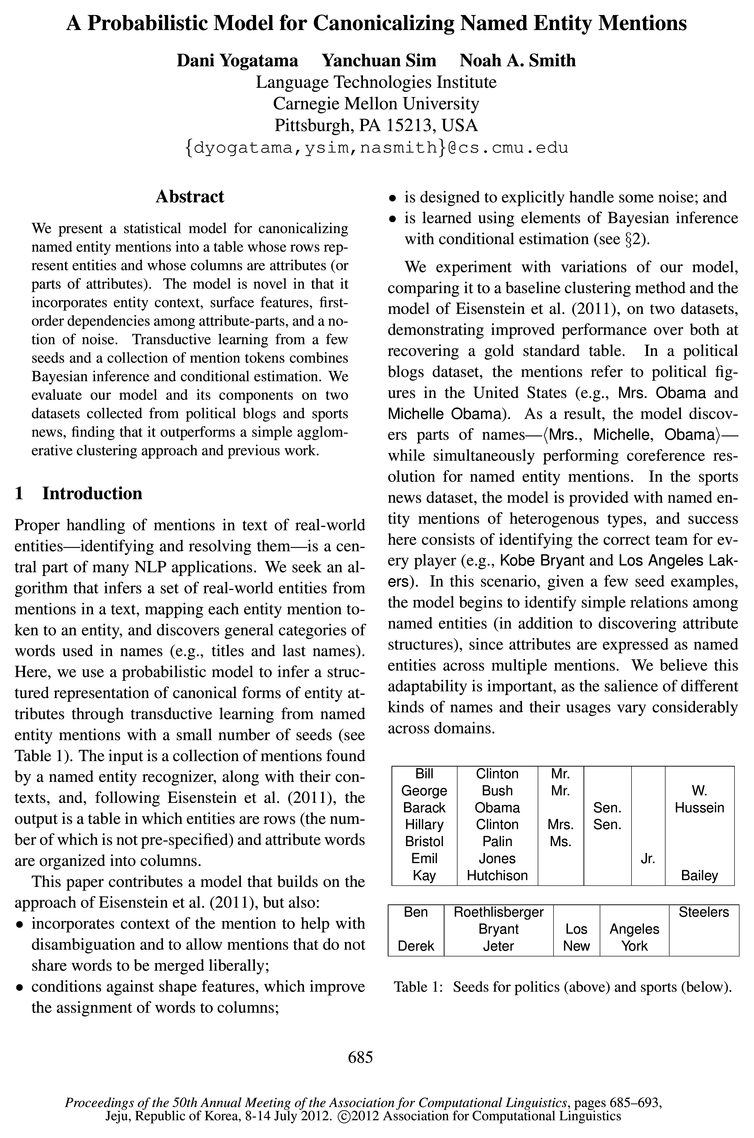

We present a statistical model for canonicalizing named entity mentions into a table whose rows represent entities and whose columns are attributes (or parts of attributes).

Introduction

Proper handling of mentions in text of real-world entities—identifying and resolving them—is a central part of many NLP applications.

Model

We begin by assuming as input a set of mention tokens, each one or more words.

Learning and Inference

Our learning procedure is an iterative algorithm consisting of two steps.

Experiments

We compare several variations of our model to Eisenstein et al.

Related Work

There has been work that attempts to fill predefined templates using Bayesian nonparametrics (Haghighi and Klein, 2010) and automatically learns template structures using agglomerative clustering (Chambers and Jurafsky, 2011).

Conclusions

We presented an improved probabilistic model for canonicalizing named entities into a table.

Topics

named entity

- We present a statistical model for canonicalizing named entity mentions into a table whose rows represent entities and whose columns are attributes (or parts of attributes).Page 1, “Abstract”

- tributes through transductive learning from named entity mentions with a small number of seeds (seePage 1, “Introduction”

- by a named entity recognizer, along with their con-Page 1, “Introduction”

- As a result, the model discovers parts of names—(Mrs., Michelle, Obama)—while simultaneously performing coreference resolution for named entity mentions.Page 1, “Introduction”

- In the sports news dataset, the model is provided with named entity mentions of heterogenous types, and success here consists of identifying the correct team for every player (e.g., Kobe Bryant and Los Angeles Lakers).Page 1, “Introduction”

- In this scenario, given a few seed examples, the model begins to identify simple relations among named entities (in addition to discovering attribute structures), since attributes are expressed as named entities across multiple mentions.Page 1, “Introduction”

- In our experiments these are obtained by running a named entity recognizer.Page 2, “Model”

- We collected named entity mentions from two corpora: political blogs and sports news.Page 5, “Experiments”

- Due to the large size of the corpora, we uniformly sampled a subset of documents for each corpus and ran the Stanford NER tagger (Finkel et al., 2005), which tagged named entities mentions as person, location, and organization.Page 5, “Experiments”

- We used named entity of type person from the political blogs corpus, while we are interested in person and organization entities for the sports news corpus.Page 5, “Experiments”

- Table 2 summarizes statistics for both datasets of named entity mentions.Page 5, “Experiments”

See all papers in Proc. ACL 2012 that mention named entity.

See all papers in Proc. ACL that mention named entity.

Back to top.

entity mentions

- We present a statistical model for canonicalizing named entity mentions into a table whose rows represent entities and whose columns are attributes (or parts of attributes).Page 1, “Abstract”

- We seek an algorithm that infers a set of real-world entities from mentions in a text, mapping each entity mention token to an entity, and discovers general categories of words used in names (e.g., titles and last names).Page 1, “Introduction”

- tributes through transductive learning from named entity mentions with a small number of seeds (seePage 1, “Introduction”

- As a result, the model discovers parts of names—(Mrs., Michelle, Obama)—while simultaneously performing coreference resolution for named entity mentions .Page 1, “Introduction”

- In the sports news dataset, the model is provided with named entity mentions of heterogenous types, and success here consists of identifying the correct team for every player (e.g., Kobe Bryant and Los Angeles Lakers).Page 1, “Introduction”

- We collected named entity mentions from two corpora: political blogs and sports news.Page 5, “Experiments”

- Due to the large size of the corpora, we uniformly sampled a subset of documents for each corpus and ran the Stanford NER tagger (Finkel et al., 2005), which tagged named entities mentions as person, location, and organization.Page 5, “Experiments”

- Table 2 summarizes statistics for both datasets of named entity mentions .Page 5, “Experiments”

See all papers in Proc. ACL 2012 that mention entity mentions.

See all papers in Proc. ACL that mention entity mentions.

Back to top.

Gibbs sampling

- In the E-step, we perform collapsed Gibbs sampling to obtain distributions over row and column indices for every mention, given the current value of the hyperparamaters.Page 3, “Learning and Inference”

- Also, our model has interdependencies among column indices of a mention.2 Standard Gibbs sampling procedure breaks down these dependencies.Page 4, “Learning and Inference”

- This kind of blocked Gibbs sampling was proposed by Jensen et al.Page 4, “Learning and Inference”

- Alternatively, we can perform standard Gibbs sampling and drop the dependencies between columns, which makes the model rely more heavily on the features.Page 4, “Learning and Inference”

- We ran Gibbs sampling for 500 iterations,3 discarding the first 200 for burn-in and averaging counts over every 10th sample to reduce autocorrelation.Page 5, “Learning and Inference”

- The initializer was constructed once and not tuned across experiments.4 The M- step was performed every 50 Gibbs sampling iterations.Page 5, “Learning and Inference”

See all papers in Proc. ACL 2012 that mention Gibbs sampling.

See all papers in Proc. ACL that mention Gibbs sampling.

Back to top.

context information

- We further incorporate context information and a notion of noise.Page 3, “Learning and Inference”

- It is important to be able to se context information to determine which row mention should go into.Page 3, “Learning and Inference”

- We also analyze how much each of our four main extensions (shape features, context information , noise, and first-order column dependencies) to EEA contributes to overall performance by ablating each in turn (also shown in Fig.Page 6, “Experiments”

- Our model can do better, since it makes use of context information and features, and it can put a person and an organization in one row even though they do not share common words.Page 8, “Experiments”

- It shows that in some cases context information is not adequate, and a possible improvement might be obtained by providing more context to the model.Page 8, “Experiments”

See all papers in Proc. ACL 2012 that mention context information.

See all papers in Proc. ACL that mention context information.

Back to top.

probabilistic model

- Here, we use a probabilistic model to infer a struc-Page 1, “Introduction”

- These choices highlight that the design of a probabilistic model can draw from both Bayesian and discriminative tools.Page 3, “Model”

- Our model is focused on the problem of canonicalizing mention strings into their parts, though its 7“ variables (which map mentions to rows) could be interpreted as (within-document and cross-document) coreference resolution, which has been tackled using a range of probabilistic models (Li et al., 2004; Haghighi and Klein, 2007; Poon and Domingos, 2008; Singh et al., 2011).Page 8, “Related Work”

- We presented an improved probabilistic model for canonicalizing named entities into a table.Page 8, “Conclusions”

See all papers in Proc. ACL 2012 that mention probabilistic model.

See all papers in Proc. ACL that mention probabilistic model.

Back to top.

coreference

- As a result, the model discovers parts of names—(Mrs., Michelle, Obama)—while simultaneously performing coreference resolution for named entity mentions.Page 1, “Introduction”

- Therefore, besides canonicalizing named entities, the model also resolves within—document and cross-document coreference , since it assigned a row index for every mention.Page 7, “Experiments”

- Our model is focused on the problem of canonicalizing mention strings into their parts, though its 7“ variables (which map mentions to rows) could be interpreted as (within-document and cross-document) coreference resolution, which has been tackled using a range of probabilistic models (Li et al., 2004; Haghighi and Klein, 2007; Poon and Domingos, 2008; Singh et al., 2011).Page 8, “Related Work”

See all papers in Proc. ACL 2012 that mention coreference.

See all papers in Proc. ACL that mention coreference.

Back to top.

gold standard

- (2011), on two datasets, demonstrating improved performance over both at recovering a gold standard table.Page 1, “Introduction”

- The gold standard table for the politics dataset is incomplete, whereas it is complete for the sports dataset.Page 5, “Experiments”

- The authors thank Jacob Eisenstein and Tae Yano for helpful discussions and providing us with the implementation of their model, Tim Hawes for helpful discussions, Naomi Saphra for assistance in developing the gold standard for the politics dataset, and three anonymous reviewers for comments on an earlier draft of this paper.Page 8, “Conclusions”

See all papers in Proc. ACL 2012 that mention gold standard.

See all papers in Proc. ACL that mention gold standard.

Back to top.

log-linear

- This uses a log-linear distribution with partition function Z.Page 2, “Model”

- These features are incorporated as a log-linear dis-Page 4, “Learning and Inference”

- For each word in a mention 10, we introduced 12 binary features f for our featurized log-linear distribution (§3.1.2).Page 5, “Learning and Inference”

See all papers in Proc. ACL 2012 that mention log-linear.

See all papers in Proc. ACL that mention log-linear.

Back to top.