Article Structure

Abstract

This paper presents HEADY: a novel, abstractive approach for headline generation from news collections.

Introduction

Motivation.

Related work

Headline generation and summarization.

Headline generation

In this section, we describe the HEADY system for news headline abstraction.

Experiment settings

In our method we use patterns that are fully lex-icalized (with the exception of entity placehold-ers) and enriched with syntactic data.

Results

The COLLECTIONTOPATTERNS algorithm was run on the training set, producing a 230 million

Conclusions

We have presented HEADY, an abstractive headline generation system based on the generalization of syntactic patterns by means of a Noisy-OR Bayesian network.

Topics

manual evaluation

- HEADY improves over a state-of-the-art open-domain title abstraction method, bridging half of the gap that separates it from extractive methods using human-generated titles in manual evaluations , and performs comparably to human-generated headlines as evaluated with ROUGE.Page 1, “Abstract”

- Table 3: Results from the manual evaluation .Page 8, “Experiment settings”

- Table 3 lists the results of the manual evaluation of readability and informativeness of the generated headlines.Page 8, “Results”

- In fact, in the DUC competitions, the gap between human summaries and automatic summaries was also more apparent in the manual evaluations than using ROUGE.Page 8, “Results”

- The manual evaluation is asking raters to judge whether real, human-written titles that were actually used for those news are grammatical and informative.Page 8, “Results”

- As could be expected, as these are published titles, the real titles score very good on the manual evaluation .Page 8, “Results”

See all papers in Proc. ACL 2013 that mention manual evaluation.

See all papers in Proc. ACL that mention manual evaluation.

Back to top.

news articles

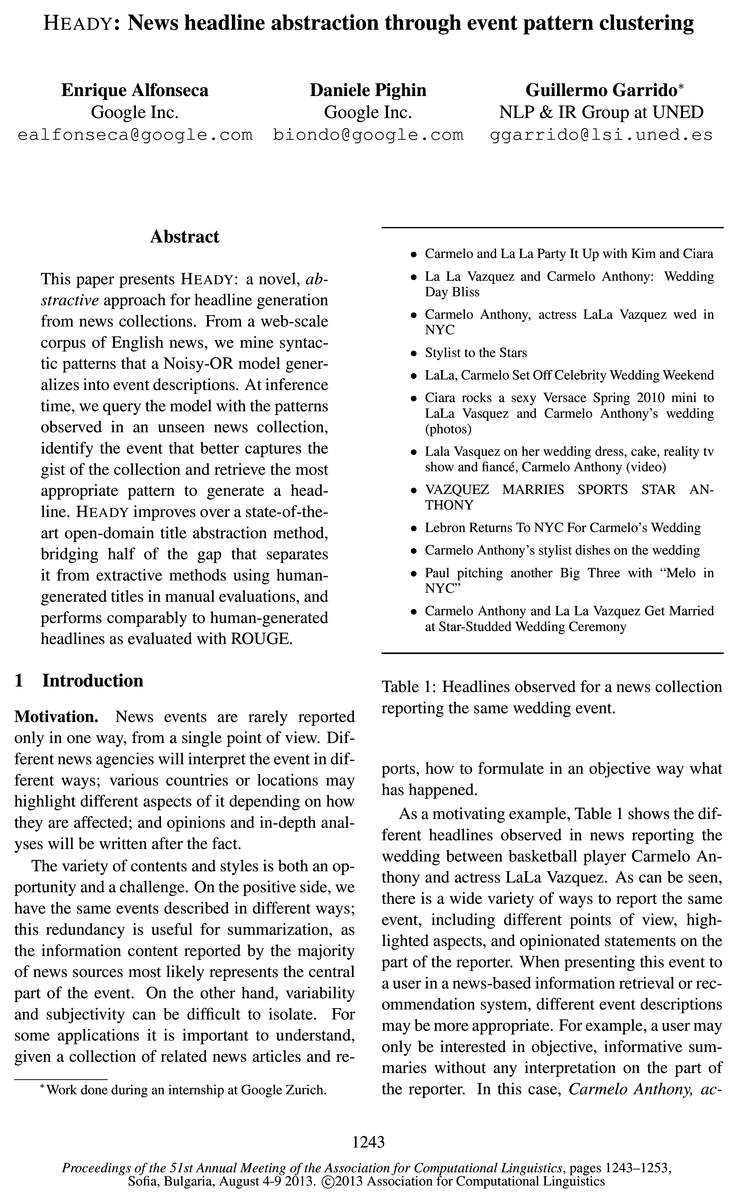

- For some applications it is important to understand, given a collection of related news articles and re-Page 1, “Introduction”

- Most headline generation work in the past has focused on the problem of single-document summarization: given the main passage of a single news article , generate a very short summary of the article.Page 2, “Related work”

- Filippova (2010) reports a system that is very close to our settings: the input is a collection of related news articles , and the system generates a headline that describes the main event.Page 3, “Related work”

- Our approach takes as input, for training, a corpus of news articles organized in news collections.Page 3, “Headline generation”

- Algorithm 2 EXTRACTPATTERNSq;(n, E): n is the list of sentences in a news article .Page 5, “Headline generation”

- All six are large collections with 50 news articles , so this baseline is significantly different from a random baseline.Page 7, “Experiment settings”

See all papers in Proc. ACL 2013 that mention news articles.

See all papers in Proc. ACL that mention news articles.

Back to top.

entity types

- Chambers and Jurafsky (2009) present an unsupervised method for learning narrative schemas from news, i.e., coherent sets of events that involve specific entity types (semantic roles).Page 3, “Related work”

- Similarly to them, we move from the assumptions that 1) utterances involving the same entity types within the same document (in our case, a collection of related documents) are likely describing aspects of the same event, and 2) meaningful representations of the underlying events can be learned by clustering these utterances in a principled way.Page 3, “Related work”

- (1): an MST is extracted from the entity pair 61, 62 (2); nodes are heuristically added to the MST to enforce grammaticality (3); entity types are recombined to generate the final patterns (4).Page 4, “Headline generation”

- COMBINEENTITYTYPES: Finally, a distinct pattern is generated from each possible combination of entity type assignments for the participating entities.Page 5, “Headline generation”

- While in many cases information about entity types would be sufficient to decide about the order of the entities in the generated sentences (e. g., “[person] married in [location]” for the entity set {ea 2 “Mr.Page 6, “Headline generation”

See all papers in Proc. ACL 2013 that mention entity types.

See all papers in Proc. ACL that mention entity types.

Back to top.

dependency parse

- PREPROCESSDATA: We start by preprocessing all the news in the news collections with a standard NLP pipeline: tokenization and sentence boundary detection (Gillick, 2009), part-of-speech tagging, dependency parsing (Nivre, 2006), co-reference resolution (Haghighi and Klein, 2009) and entity linking based on Wikipedia and Freebase.Page 4, “Headline generation”

- Figure 1: Pattern extraction process from an annotated dependency parse .Page 4, “Headline generation”

- GETMENTIONNODES: Using the dependency parse T for a sentence 3, we first identify the set of nodes M,- that mention the entities in E. If T does not contain exactly one mention of each target entity in E1, then the sentence is ignored.Page 4, “Headline generation”

- Sentences are POS-tagged, dependency parsed and annotated with respect to a set of entities E 2 E,-79 <— (Z) for all s E n[0 : 2) do T <— DEPPARSE(s) M,- <— GETMENTIONNODES(t, E) if Ele E E1, count(e, 75 1 then continue P,- <— GETMINIMUMSPANNINGTREEq;(Mi)Page 5, “Headline generation”

See all papers in Proc. ACL 2013 that mention dependency parse.

See all papers in Proc. ACL that mention dependency parse.

Back to top.

entities mentioned

- In the end, for each entity mentioned in the document we have a unique identifier, a list with all its mentions in the document and a list of class labels from Freebase.Page 4, “Headline generation”

- GETRELEVANTENTITIES: For each news collection N we collect the set E of the entities mentioned most often within the collection.Page 4, “Headline generation”

- We invoke again INFERENCE, now using at the same time all the patterns extracted for every subset of E, g E. This computes a probability distribution w over all patterns involving any admissible subset of the entities mentioned in the collection.Page 6, “Headline generation”

See all papers in Proc. ACL 2013 that mention entities mentioned.

See all papers in Proc. ACL that mention entities mentioned.

Back to top.

language model

- (2007) generate novel utterances by combining Prim’s maximum-spanning-tree algorithm with an n-gram language model to enforce fluency.Page 2, “Related work”

- o TopicSum: we use TopicSum (Haghighi and Vanderwende, 2009), a 3-layer hierarchical topic model, to infer the language model that is most central for the collection.Page 7, “Experiment settings”

- divergence with respect the collection language model is the one chosen.Page 7, “Experiment settings”

See all papers in Proc. ACL 2013 that mention language model.

See all papers in Proc. ACL that mention language model.

Back to top.

statistically significantly

- When compared to a state-of-the-art open-domain headline abstraction system (Filippova, 2010), the new headlines are statistically significantly better both in terms of readability and informativeness.Page 2, “Introduction”

- 0 Amongst the automatic systems, HEADY performed better than MSC, with statistical significance at 95% for all the metrics.Page 9, “Results”

- o The most frequent pattern baseline and HEADY have comparable performance across all the metrics (not statistically significantly different), although HEADY has slightly better scores for all metrics except for informativeness.Page 9, “Results”

See all papers in Proc. ACL 2013 that mention statistically significantly.

See all papers in Proc. ACL that mention statistically significantly.

Back to top.