Article Structure

Abstract

The evaluation of whole-sentence semantic structures plays an important role in semantic parsing and large-scale semantic structure annotation.

Introduction

The goal of semantic parsing is to generate all semantic relationships in a text.

Semantic Overlap

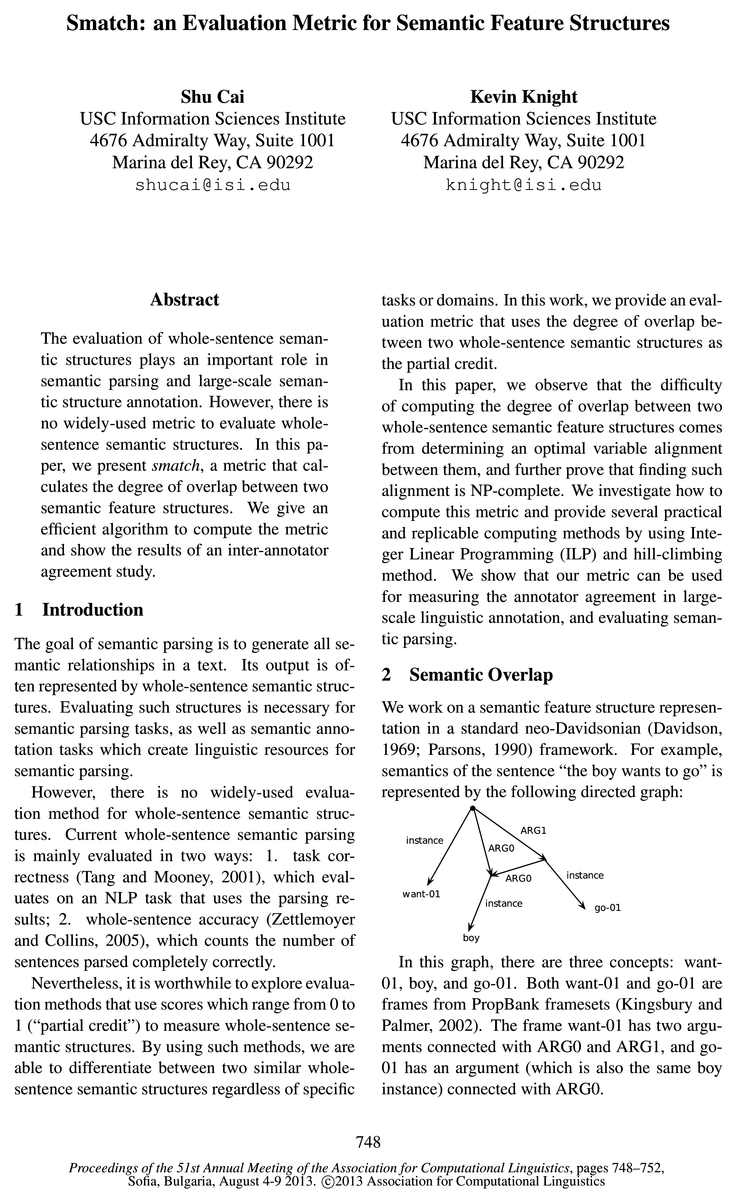

We work on a semantic feature structure representation in a standard neo-Davidsonian (Davidson, 1969; Parsons, 1990) framework.
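The triple view can be made concrete with a small sketch. In this framework each event or entity gets a variable, and the structure is flattened into `instance` triples (variable, concept) and relation triples (role, source, target). The Python below is a minimal illustration that uses "The boy wants to go" as an assumed example. The nested `(variable, concept, edges)` encoding and the names `to_triples` and `boy_wants_to_go` are hypothetical stand-ins for AMR's usual text notation, and attribute triples with constant values are left out for brevity.

```python
from typing import List, Tuple

# A node is (variable, concept, edges). Each edge is (role, target), where the
# target is either a nested node or a bare variable name (a re-entrancy).
# This nested encoding is a hypothetical stand-in for AMR's text notation.
Node = Tuple[str, str, list]
Triple = Tuple[str, str, str]  # (relation, source, target)


def to_triples(node: Node) -> List[Triple]:
    """Flatten a nested AMR into instance and relation triples, depth first."""
    var, concept, edges = node
    triples: List[Triple] = [("instance", var, concept)]
    for role, target in edges:
        if isinstance(target, str):   # re-entrant variable: relation only
            triples.append((role, var, target))
        else:                         # nested node: relation, then its own triples
            triples.append((role, var, target[0]))
            triples.extend(to_triples(target))
    return triples


# "The boy wants to go": b is both the wanter (ARG0 of w) and the goer (ARG0 of g).
boy_wants_to_go: Node = (
    "w", "want-01", [
        ("ARG0", ("b", "boy", [])),
        ("ARG1", ("g", "go-01", [("ARG0", "b")])),
    ],
)

for triple in to_triples(boy_wants_to_go):
    print(triple)
```

Six triples come out. The variable `b` is the target of two `ARG0` triples, which is how a re-entrant node appears once the structure is flattened.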

Computing the Metric

This section describes how to compute the smatch score.
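Computing the score means counting the triples two AMRs share under a one-to-one mapping between their variables, and searching for the mapping that makes this count largest. The Python below illustrates that search with first-improvement hill-climbing and random restarts. It is a minimal sketch, not the reference implementation. The function names, the move set (move one variable to a free variable, or swap two images), the random initialization, and the restart count are all assumptions.

```python
import random
from typing import Dict, Iterator, List, Tuple

Triple = Tuple[str, str, str]   # (relation, source, target)
VarMap = Dict[str, str]         # variable of AMR 1 -> variable of AMR 2


def variables(amr: List[Triple]) -> List[str]:
    """Variables are the sources of instance triples."""
    return sorted({s for r, s, _ in amr if r == "instance"})


def count_matches(amr1: List[Triple], amr2: List[Triple], m: VarMap) -> int:
    """Count triples of amr1 that occur in amr2 once variables are renamed by m."""
    vars1, vars2 = set(variables(amr1)), set(variables(amr2))
    # Tag targets so a variable never matches a constant with the same spelling.
    pool = {(r, s, ("var", t) if t in vars2 else ("const", t)) for r, s, t in amr2}
    hits = 0
    for r, s, t in set(amr1):
        if s not in m or (t in vars1 and t not in m):
            continue  # an unmapped variable cannot take part in a match
        target = ("var", m[t]) if t in vars1 else ("const", t)
        if (r, m[s], target) in pool:
            hits += 1
    return hits


def neighbours(m: VarMap, vars1: List[str], vars2: List[str]) -> Iterator[VarMap]:
    """Yield one-step moves that keep the mapping one-to-one."""
    used = set(m.values())
    for a in vars1:                      # move a to a free variable of AMR 2
        for v in vars2:
            if v not in used:
                yield {**m, a: v}
    for i, a in enumerate(vars1):        # swap the images of a and b
        for b in vars1[i + 1:]:
            ma, mb = m.get(a), m.get(b)
            if ma is None and mb is None:
                continue
            cand = {k: x for k, x in m.items() if k not in (a, b)}
            if mb is not None:
                cand[a] = mb
            if ma is not None:
                cand[b] = ma
            yield cand


def hill_climb(amr1: List[Triple], amr2: List[Triple],
               restarts: int = 5, seed: int = 0) -> Tuple[VarMap, int]:
    """Best (mapping, matched-triple count) found by hill-climbing with restarts."""
    vars1, vars2 = variables(amr1), variables(amr2)
    rng = random.Random(seed)
    best_m: VarMap = {}
    best_score = -1
    for _ in range(restarts):
        shuffled = vars2[:]
        rng.shuffle(shuffled)
        current = dict(zip(vars1, shuffled))   # random one-to-one start
        score = count_matches(amr1, amr2, current)
        improved = True
        while improved:
            improved = False
            for cand in neighbours(current, vars1, vars2):
                cand_score = count_matches(amr1, amr2, cand)
                if cand_score > score:       # take the first improving move
                    current, score, improved = cand, cand_score, True
                    break
        if score > best_score:
            best_m, best_score = current, score
    return best_m, best_score


# Hypothetical AMRs: "The boy wants to go" vs. "The boy wants the girl to go".
first = [("instance", "w", "want-01"), ("instance", "b", "boy"),
         ("instance", "g", "go-01"), ("ARG0", "w", "b"),
         ("ARG1", "w", "g"), ("ARG0", "g", "b")]
second = [("instance", "w", "want-01"), ("instance", "b", "boy"),
          ("instance", "g", "go-01"), ("instance", "x", "girl"),
          ("ARG0", "w", "b"), ("ARG1", "w", "g"), ("ARG0", "g", "x")]
mapping, matched = hill_climb(first, second)
print(matched)  # 5
```

Here the best mapping keeps `w`, `b`, and `g` aligned and matches 5 triples, because `ARG0(g, b)` has no counterpart once the goer is the girl. On larger graphs hill-climbing can stop at a local optimum, which is why the sketch restarts from several random mappings and why an exact method is a useful reference.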

Using Smatch

We report an AMR inter-annotator agreement study using smatch.
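An agreement study reports one score per document rather than per sentence. The Python below is a minimal sketch that assumes the per-sentence triple counts are pooled (micro-averaged) before precision, recall, and F-score are computed. The function name `document_smatch`, the `(matched, n_first, n_second)` layout, and the example counts are hypothetical.

```python
from typing import Iterable, Tuple


def document_smatch(counts: Iterable[Tuple[int, int, int]]) -> Tuple[float, float, float]:
    """Pool (matched, n_first, n_second) triple counts over sentence pairs,
    then return document-level (precision, recall, F-score)."""
    matched = n_first = n_second = 0
    for m, n1, n2 in counts:
        matched += m
        n_first += n1
        n_second += n2
    # Precision is over the second AMR's triples, recall over the first AMR's.
    precision = matched / n_second if n_second else 0.0
    recall = matched / n_first if n_first else 0.0
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f


# Hypothetical counts for a document of two sentence pairs.
p, r, f = document_smatch([(5, 6, 7), (6, 6, 6)])
print(round(f, 2))  # 0.88
```

Pooling lets longer sentences weigh more than averaging per-sentence F-scores would. Either convention is plausible, so the choice here is an assumption.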

Related Work

Related work on directly measuring the semantic representation includes the method of Dridan and Oepen (2011), which evaluates semantic parser output directly by comparing semantic substructures, although it requires an alignment between sentence spans and semantic substructures.

Conclusion and Future Work

We present an evaluation metric for whole-sentence semantic analysis, and show that it can be computed efficiently.

Topics

ILP

- We investigate how to compute this metric and provide several practical and replicable computing methods using Integer Linear Programming (ILP) and a hill-climbing method. (Page 1, “Introduction”)

- ILP method. (Page 2, “Computing the Metric”)

- We can get an optimal solution using integer linear programming (ILP). (Page 2, “Computing the Metric”)

- Finally, we ask the ILP solver to maximize: (Page 3, “Computing the Metric”)

- Finally, we develop a portable heuristic algorithm that does not require an ILP solver. (Page 3, “Computing the Metric”)

- ILP: Integer Linear Programming (Page 3, “Using Smatch”)

- Each individual smatch score is a document-level score of 4 AMR pairs. ILP scores are optimal, so lower scores (in bold) indicate search errors. (Page 3, “Using Smatch”)

- Table 2 summarizes search accuracy as the percentage of smatch scores that equal the ILP score. (Page 3, “Using Smatch”)

- Table column headings: Base, ILP, R, 10R, S, S+4R, S+9R (Page 4, “Using Smatch”)
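Because ILP scores are optimal, a heuristic score on the same input can only equal the ILP score or fall below it, so search accuracy reduces to the share of ties. The Python below is a minimal sketch of that computation. The function name `search_accuracy`, the tolerance parameter, and the example scores are hypothetical.

```python
from typing import Sequence


def search_accuracy(heuristic: Sequence[float], optimal: Sequence[float],
                    tol: float = 1e-9) -> float:
    """Percentage of heuristic smatch scores that equal the optimal (ILP) score."""
    if len(heuristic) != len(optimal):
        raise ValueError("score lists must be aligned")
    if not optimal:
        return 0.0
    for h, o in zip(heuristic, optimal):
        if h > o + tol:  # an optimal score cannot be beaten
            raise ValueError("a heuristic score cannot exceed the optimum")
    ties = sum(1 for h, o in zip(heuristic, optimal) if abs(h - o) <= tol)
    return 100.0 * ties / len(optimal)


# Hypothetical scores; the third heuristic result is a search error.
print(search_accuracy([0.83, 0.79, 0.70, 0.91], [0.83, 0.79, 0.74, 0.91]))  # 75.0
```

The tolerance guards against floating-point noise when scores come from different code paths.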

See all papers in Proc. ACL 2013 that mention ILP.

semantic parsing

- The evaluation of whole-sentence semantic structures plays an important role in semantic parsing and large-scale semantic structure annotation. (Page 1, “Abstract”)

- The goal of semantic parsing is to generate all semantic relationships in a text. (Page 1, “Introduction”)

- Evaluating such structures is necessary for semantic parsing tasks, as well as for semantic annotation tasks that create linguistic resources for semantic parsing. (Page 1, “Introduction”)

- Current whole-sentence semantic parsing is mainly evaluated in two ways: (1) task correctness (Tang and Mooney, 2001), which evaluates performance on an NLP task that uses the parsing results; and (2) whole-sentence accuracy (Zettlemoyer and Collins, 2005), which counts the number of sentences parsed completely correctly. (Page 1, “Introduction”)

- We show that our metric can be used for measuring annotator agreement in large-scale linguistic annotation and for evaluating semantic parsing. (Page 1, “Introduction”)

- Jones et al. (2012) use it to evaluate automatic semantic parsing in a narrow domain, while Ulf Hermjakob has developed a heuristic algorithm that exploits and supplements OntoNotes annotations (Pradhan et al., 2007) to automatically create AMRs for OntoNotes sentences, achieving a smatch score of 0.74 against human consensus AMRs. (Page 4, “Using Smatch”)

- Related work on directly measuring the semantic representation includes the method of Dridan and Oepen (2011), which evaluates semantic parser output directly by comparing semantic substructures, although it requires an alignment between sentence spans and semantic substructures. (Page 4, “Related Work”)

evaluation metric

- In this work, we provide an evaluation metric that uses the degree of overlap between two whole-sentence semantic structures as partial credit. (Page 1, “Introduction”)

- Our evaluation metric measures the precision, recall, and F-score of the triples in the second AMR against the triples in the first AMR, i.e., the amount of propositional overlap. (Page 2, “Semantic Overlap”)

- We present an evaluation metric for whole-sentence semantic analysis, and show that it can be computed efficiently. (Page 4, “Conclusion and Future Work”)
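A short worked example shows how the three numbers relate. Assume, hypothetically, that the first AMR has n_1 = 6 triples, the second has n_2 = 7, and the best variable mapping matches m = 5 of them. Following the direction stated above, precision is taken over the second AMR's triples and recall over the first's:

```latex
P = \frac{5}{7} \approx 0.714, \qquad
R = \frac{5}{6} \approx 0.833, \qquad
F = \frac{2PR}{P + R} = \frac{2 \cdot 5}{6 + 7} = \frac{10}{13} \approx 0.769
```

In general F = 2m / (n_1 + n_2) whenever m > 0, so only the matched count and the two triple counts are needed once the best mapping is known.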

Linear Programming

- We investigate how to compute this metric and provide several practical and replicable computing methods using Integer Linear Programming (ILP) and a hill-climbing method. (Page 1, “Introduction”)

- We can get an optimal solution using integer linear programming (ILP). (Page 2, “Computing the Metric”)

- ILP: Integer Linear Programming (Page 3, “Using Smatch”)
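The optimal matching can be written as a 0-1 integer program. The formulation below is a sketch consistent with the description of the metric, not a transcription of the paper's own. All symbols are assumptions: v_ij = 1 if variable i of the first AMR is mapped to variable j of the second; t_kl = 1 if triple k of the first AMR is counted as matching triple l of the second; C is the set of candidate pairs (k, l) with the same relation and, where the target is a constant, the same constant; and V_kl is the set of variable pairs (i, j) that the pair (k, l) requires to be mapped.

```latex
\begin{aligned}
\max_{v,\,t}\quad & \sum_{(k,l) \in C} t_{kl} \\
\text{s.t.}\quad & \sum_{j} v_{ij} \le 1 \quad \forall i,
  \qquad \sum_{i} v_{ij} \le 1 \quad \forall j, \\
& t_{kl} \le v_{ij} \quad \forall (k,l) \in C,\ \forall (i,j) \in V_{kl}, \\
& v_{ij},\, t_{kl} \in \{0, 1\}.
\end{aligned}
```

The two sum constraints make the variable mapping one-to-one, which in turn lets each triple be matched at most once. The optimal objective value is the matched-triple count from which precision, recall, and F-score are computed.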

semantic representation

- Following Langkilde and Knight (1998) and Langkilde-Geary (2002), we refer to this semantic representation as AMR (Abstract Meaning Representation). (Page 2, “Semantic Overlap”)

- Related work on directly measuring the semantic representation includes the method of Dridan and Oepen (2011), which evaluates semantic parser output directly by comparing semantic substructures, although it requires an alignment between sentence spans and semantic substructures. (Page 4, “Related Work”)

- In the future, we plan to investigate how to adapt smatch to other semantic representations. (Page 4, “Conclusion and Future Work”)
