Article Structure

Abstract

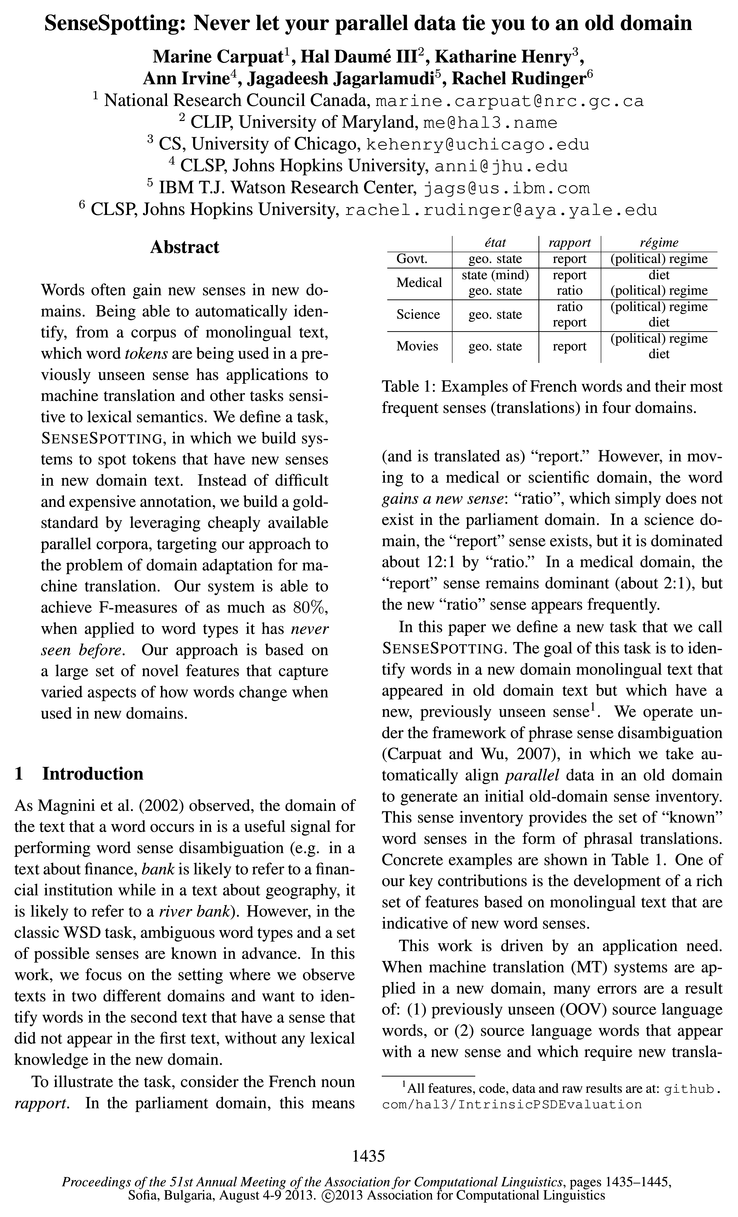

Words often gain new senses in new domains.

Introduction

As Magnini et al.

Task Definition

Our task is defined by two data components.

Related Work

While word senses have been studied extensively in lexical semantics, research has focused on word sense disambiguation, the task of disambiguating words in context given a predefined sense inventory (e.g., Agirre and Edmonds (2006)), and word sense induction, the task of learning sense inventories from text (e. g., Agirre and Soroa (2007)).

New Sense Indicators

We define features over both word types and word tokens.

Data and Gold Standard

The first component of our task is a parallel corpus of old domain data, for which we use the French-English Hansard parliamentary proceedings (http: //WWW .parl .

Experiments

6.1 Experimental setup

Topics

parallel data

- We operate under the framework of phrase sense disambiguation (Carpuat and Wu, 2007), in which we take automatically align parallel data in an old domain to generate an initial old-domain sense inventory.Page 1, “Introduction”

- From an applied perspective, the assumption of a small amount of parallel data in the new domain is reasonable: if we want an MT system for a new domain, we will likely have some data for system tuning and evaluation.Page 2, “Task Definition”

- In contrast, the SENSESPOTTING task consists of detecting when senses are unknown in parallel data .Page 2, “Related Work”

- Table 2: Basic characteristics of the parallel data .Page 5, “New Sense Indicators”

- of the full parallel text; we do not use the English side of the parallel data for actually building systems.Page 5, “Experiments”

- One disadvantage to the previous method for evaluating the SENSESPOTTING task is that it requires parallel data in a new domain.Page 8, “Experiments”

- Suppose we have no parallel data in the new domain at all, yet still want to attack the SENSESPOTTING task.Page 8, “Experiments”

- to train a system on domains for which we do have parallel data , and then apply it in a new domain.Page 8, “Experiments”

- This classifier will almost certainly be worse than one trained on NEW (Science) but does not require any parallel data in that domain.Page 8, “Experiments”

- To ease comparison to the results that do not suffer from domain shift, we also present “XV-ALLFEATURES”, which are results copied from Table 4 in which parallel data from NEW is used.Page 8, “Experiments”

See all papers in Proc. ACL 2013 that mention parallel data.

See all papers in Proc. ACL that mention parallel data.

Back to top.

probability distribution

- Second, given a source word 3, we use this classifier to compute the probability distribution of target translations (p(t|s)).Page 4, “New Sense Indicators”

- Subsequently, we use this probability distribution to define new features for the SENSESPOTTING task.Page 4, “New Sense Indicators”

- Entropy is the entropy of the probability distribution : — 2t p(t|s) log p(t|s).Page 4, “New Sense Indicators”

- Spread is the difference between maximum and minimum probabilities of the probability distribution : (maxtp(t|s) — mintp(t|8)).Page 4, “New Sense Indicators”

- one classifier per word type, we can define additional features based on the prior (with out the word context) and posterior (given the word’s context) probability distributions output by the classifier, i.e.Page 4, “New Sense Indicators”

- While the SENSESPOTTING task has MT utility in suggesting which new domain words demand a new translation, the MOSTFRE-QSENSECHANGE task has utility in suggesting which words demand a new translation probability distribution when shifting to a new domain.Page 9, “Experiments”

See all papers in Proc. ACL 2013 that mention probability distribution.

See all papers in Proc. ACL that mention probability distribution.

Back to top.

n-gram

- N-gram Probability Features The goal of the Type:NgramProb feature is to capture the fact that “unusual contexts” might imply new senses.Page 3, “New Sense Indicators”

- To capture this, we can look at the log probability of the word under consideration given its N-gram context, both according to an old-domain language model (call this 6°“) and a new-domain languagePage 3, “New Sense Indicators”

- From these four values, we compute corpus-level (and therefore type-based) statistics of the new domain n-gram log probability (Eflgw, the difference between the n-gram probabilities in each domain (623” — 6:51), the difference between the n-gram and unigram probabilities in the new domain (EQSW — 633‘”), and finally the combined difference: 623"” — [SSW + 63:: — 635’).Page 3, “New Sense Indicators”

- N-gram Probability Features Akin to the N-gram probability features at the type level (namely, Token:NgramPr0b), we compute the same values at the token level (new/old domain and un-igram/trigram).Page 4, “New Sense Indicators”

- Context Features Following the type-level n-gram feature, we define features for a particular word token based on its n-gram context.Page 4, “New Sense Indicators”

See all papers in Proc. ACL 2013 that mention n-gram.

See all papers in Proc. ACL that mention n-gram.

Back to top.

sense disambiguation

- (2002) observed, the domain of the text that a word occurs in is a useful signal for performing word sense disambiguation (e.g.Page 1, “Introduction”

- We operate under the framework of phrase sense disambiguation (Carpuat and Wu, 2007), in which we take automatically align parallel data in an old domain to generate an initial old-domain sense inventory.Page 1, “Introduction”

- While word senses have been studied extensively in lexical semantics, research has focused on word sense disambiguation , the task of disambiguating words in context given a predefined sense inventory (e.g., Agirre and Edmonds (2006)), and word sense induction, the task of learning sense inventories from text (e. g., Agirre and Soroa (2007)).Page 2, “Related Work”

- Chan and Ng (2007) notably show that detecting changes in predominant sense as modeled by domain sense priors can improve sense disambiguation , even after performing adaptation using active learning.Page 3, “Related Work”

- Towards this end, first, we pose the problem as a phrase sense disambiguation (PSD) problem over the known sense inventory.Page 4, “New Sense Indicators”

See all papers in Proc. ACL 2013 that mention sense disambiguation.

See all papers in Proc. ACL that mention sense disambiguation.

Back to top.

word sense

- (2002) observed, the domain of the text that a word occurs in is a useful signal for performing word sense disambiguation (e.g.Page 1, “Introduction”

- This sense inventory provides the set of “known” word senses in the form of phrasal translations.Page 1, “Introduction”

- One of our key contributions is the development of a rich set of features based on monolingual text that are indicative of new word senses .Page 1, “Introduction”

- While word senses have been studied extensively in lexical semantics, research has focused on word sense disambiguation, the task of disambiguating words in context given a predefined sense inventory (e.g., Agirre and Edmonds (2006)), and word sense induction, the task of learning sense inventories from text (e. g., Agirre and Soroa (2007)).Page 2, “Related Work”

- In contrast, detecting novel senses has not received as much attention, and is typically addressed within word sense induction, rather than as a distinct SENSESPOTTING task.Page 2, “Related Work”

See all papers in Proc. ACL 2013 that mention word sense.

See all papers in Proc. ACL that mention word sense.

Back to top.

machine translation

- Being able to automatically identify, from a corpus of monolingual text, which word tokens are being used in a previously unseen sense has applications to machine translation and other tasks sensitive to lexical semantics.Page 1, “Abstract”

- Instead of difficult and expensive annotation, we build a gold-standard by leveraging cheaply available parallel corpora, targeting our approach to the problem of domain adaptation for machine translation .Page 1, “Abstract”

- When machine translation (MT) systems are applied in a new domain, many errors are a result of: (1) previously unseen (OOV) source language words, or (2) source language words that appear with a new sense and which require new transla-Page 1, “Introduction”

- Work on active learning for machine translation has focused on collecting translations for longer unknown segments (e. g., Bloodgood and Callison-Burch (2010)).Page 3, “Related Work”

See all papers in Proc. ACL 2013 that mention machine translation.

See all papers in Proc. ACL that mention machine translation.

Back to top.

parallel corpora

- Instead of difficult and expensive annotation, we build a gold-standard by leveraging cheaply available parallel corpora , targeting our approach to the problem of domain adaptation for machine translation.Page 1, “Abstract”

- In contrast, our SENSESPOTTING task leverages automatically word-aligned parallel corpora as a source of annotation for supervision during training and evaluation.Page 2, “Related Work”

- In all parallel corpora , we normalize the English for American spelling.Page 5, “Data and Gold Standard”

- “representative tokens”) extracted from fairly large new domain parallel corpora (see Table 3), consisting of between 22 and 36 thousand parallel sentences, which yield between 8 and 35 thousand representative tokens.Page 9, “Experiments”

See all papers in Proc. ACL 2013 that mention parallel corpora.

See all papers in Proc. ACL that mention parallel corpora.

Back to top.

unigram

- As such, we compute unigram log probabilities (via smoothed relative frequencies) of each word under consideration in the old domain and the new domain.Page 3, “New Sense Indicators”

- However, we do not simply want to capture unusual words, but words that are unlikely in context, so we also need to look at the respective unigram log probabilities: 635' and Eflgw.Page 3, “New Sense Indicators”

- From these four values, we compute corpus-level (and therefore type-based) statistics of the new domain n-gram log probability (Eflgw, the difference between the n-gram probabilities in each domain (623” — 6:51), the difference between the n-gram and unigram probabilities in the new domain (EQSW — 633‘”), and finally the combined difference: 623"” — [SSW + 63:: — 635’).Page 3, “New Sense Indicators”

- The six features we construct are: unigram (and trigram) log probabilities in the old domain, the new domain, and their difference.Page 4, “New Sense Indicators”

See all papers in Proc. ACL 2013 that mention unigram.

See all papers in Proc. ACL that mention unigram.

Back to top.

f-measure

- Each learner uses a small amount of development data to tune a threshold on scores for predicting new-sense or not-a-new-sense, using macro F-measure as an objective.Page 6, “Experiments”

- are relatively weak for predicting new senses on EMEA data but stronger on Subs (TYPEONLY AUC performance is higher than both baselines) and even stronger on Science data (TYPEONLY AUC and f-measure performance is higher than both baselines as well as the ALLFEA-TURESmodel).Page 7, “Experiments”

- Recall that the microlevel evaluation computes precision, recall, and f-measure for all word tokens of a given word type and then averages across word types.Page 7, “Experiments”

See all papers in Proc. ACL 2013 that mention f-measure.

See all papers in Proc. ACL that mention f-measure.

Back to top.

phrase table

- The ground truth labels (target translation for a given source word) for this classifier are generated from the phrase table of the old domain data.Page 4, “New Sense Indicators”

- We first used the phrase table fromPage 5, “Data and Gold Standard”

- We then looked at the different translations that each had in the phrase table and a French speaker selected a subset that have multiple senses.3Page 5, “Data and Gold Standard”

See all papers in Proc. ACL 2013 that mention phrase table.

See all papers in Proc. ACL that mention phrase table.

Back to top.