Article Structure

Abstract

Many models in NLP involve latent variables, such as unknown parses, tags, or alignments.

Introduction

Rich models with latent linguistic variables are popular in computational linguistics, but in general it is not known how to find their optimal parameters.

The Constrained Optimization Task

We begin by describing how for our typical model, the Viterbi EM objective can be formulated as a mixed integer quadratic programming (MIQP) problem with nonlinear constraints (Figure 2).

A Branch-and-Bound Algorithm

The mixed integer quadratic program with nonlinear constraints, given in the previous section, maximizes the nonconvex Viterbi EM objective and is NP-hard to solve (Cohen and Smith, 2010).

Relaxations

The relaxation in the bounding step computes an optimistic bound for a subspace of the model parameters.

Projections

A pessimistic bound, from the projecting step, will correspond to a feasible but not necessarily optimal solution to the original problem.

Related Work

The goal of this work was to better understand and address the non-convexity of maximum-likelihood training with latent variables, especially parses.

Experiments

We first analyze the behavior of our method on a toy synthetic dataset.

Discussion

In principle, our branch-and-bound method can approach e-optimal solutions to Viterbi training of locally normalized generative models, including the NP-hard case of grammar induction with the DMV.

Topics

Viterbi

- Although these techniques do not yet provide a practical solution to our instance of this NP-hard problem, they sometimes find better solutions than Viterbi EM with random restarts, in the same time.Page 1, “Abstract”

- Many parameter estimation techniques have been attempted, including expectation-maximization (EM) (Klein and Manning, 2004; Spitkovsky et al., 2010a), contrastive estimation (Smith and Eisner, 2006; Smith, 2006), Viterbi EM (Spitkovsky et al., 2010b), and variational EM (Naseem et al., 2010; Cohen et al., 2009; Cohen and Smith, 2009).Page 1, “Introduction”

- Note that this objective is that of hard ( Viterbi ) EM—we do not marginalize over the parses as in classical EM.1Page 2, “Introduction”

- Our results demonstrate that our method can obtain higher likelihoods than Viterbi EM with random restarts.Page 2, “Introduction”

- We begin by describing how for our typical model, the Viterbi EM objective can be formulated as a mixed integer quadratic programming (MIQP) problem with nonlinear constraints (Figure 2).Page 2, “The Constrained Optimization Task”

- Concretely, (1) specifies the Viterbi EM objective: the total log-probability of the best parse trees under the parameters 6, given by a sum of log-probabilities 6m of the individual steps needed to generate the tree, as encoded by the features fm.Page 2, “The Constrained Optimization Task”

- Figure 2: Viterbi EM as a mathematical programPage 3, “The Constrained Optimization Task”

- The mixed integer quadratic program with nonlinear constraints, given in the previous section, maximizes the nonconvex Viterbi EM objective and is NP-hard to solve (Cohen and Smith, 2010).Page 4, “A Branch-and-Bound Algorithm”

- The standard approach to optimizing this program is local search by the hard ( Viterbi ) EM algorithm.Page 4, “A Branch-and-Bound Algorithm”

- Since we optimize the Viterbi EM objective, we directly constrain the counts in the single corpus parse rather than expected counts from a distribution over parses.Page 7, “Relaxations”

- These correspond to the Viterbi E step and M step, respectively.Page 7, “Projections”

See all papers in Proc. ACL 2013 that mention Viterbi.

See all papers in Proc. ACL that mention Viterbi.

Back to top.

model parameters

- Finding the optimal model parameters is then usually a difficult nonconvex optimization problem.Page 1, “Abstract”

- We search for the maximum-likelihood model parameters and corpus parse, subject to posterior constraints.Page 1, “Abstract”

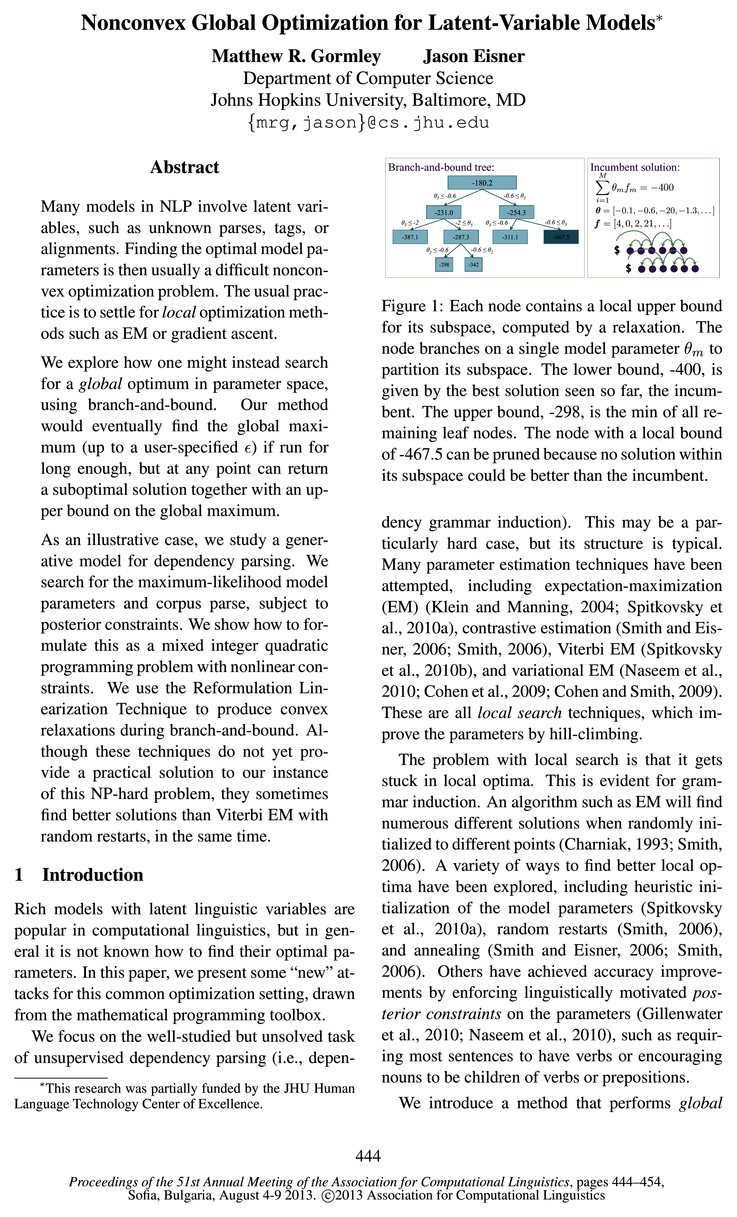

- The node branches on a single model parameter 6m to partition its subspace.Page 1, “Introduction”

- A variety of ways to find better local optima have been explored, including heuristic initialization of the model parameters (Spitkovsky et al., 2010a), random restarts (Smith, 2006), and annealing (Smith and Eisner, 2006; Smith, 2006).Page 1, “Introduction”

- search with certificates of e-optimality for both the corpus parse and the model parameters .Page 2, “Introduction”

- To globally optimize the objective function, we employ a branch-and-bound algorithm that searches the continuous space of the model parameters by branching on individual parameters (see Figure 1).Page 2, “Introduction”

- In the relaxation, the model parameters might sum to slightly more thanPage 2, “Introduction”

- The nonlinear constraints ensure that the model parameters are true log-probabilities.Page 2, “The Constrained Optimization Task”

- Feature / model parameter index Sentence indexPage 3, “The Constrained Optimization Task”

- Conditional distribution index Number of model parametersPage 3, “The Constrained Optimization Task”

- The final constraints (4) simply specify the range of possible values for the model parameters and their integer count variables.Page 3, “The Constrained Optimization Task”

See all papers in Proc. ACL 2013 that mention model parameters.

See all papers in Proc. ACL that mention model parameters.

Back to top.

grammar induction

- dency grammar induction ).Page 1, “Introduction”

- This is evident for grammar induction .Page 1, “Introduction”

- (2010b) present evidence that hard EM can outperform soft EM for grammar induction in a hill-climbing setting.Page 2, “Introduction”

- In this section, we will show how the RLT can be applied to our grammar induction problem and contrast it with the concave envelope relaxation presented in section 4.2.Page 6, “Relaxations”

- Alas, there are not yet any spectral learning methods that recover latent tree structure, as in grammar induction .Page 7, “Related Work”

- Several integer linear programming (ILP) formulations of dependency parsing (Riedel and Clarke, 2006; Martins et al., 2009; Riedel et al., 2012) inspired our definition of grammar induction as a MP.Page 7, “Related Work”

- In principle, our branch-and-bound method can approach e-optimal solutions to Viterbi training of locally normalized generative models, including the NP-hard case of grammar induction with the DMV.Page 9, “Discussion”

See all papers in Proc. ACL 2013 that mention grammar induction.

See all papers in Proc. ACL that mention grammar induction.

Back to top.

dependency parsing

- As an illustrative case, we study a generative model for dependency parsing .Page 1, “Abstract”

- We focus on the well-studied but unsolved task of unsupervised dependency parsing (i.e., depen-Page 1, “Introduction”

- Gimpel and Smith (2012) proposed a concave model for unsupervised dependency parsing using IBM Model 1.Page 7, “Related Work”

- Several integer linear programming (ILP) formulations of dependency parsing (Riedel and Clarke, 2006; Martins et al., 2009; Riedel et al., 2012) inspired our definition of grammar induction as a MP.Page 7, “Related Work”

- For semi-supervised dependency parsing , Wang et al.Page 7, “Related Work”

- All our experiments use the DMV for unsupervised dependency parsing of part-of-speech (POS) tag sequences.Page 8, “Experiments”

See all papers in Proc. ACL 2013 that mention dependency parsing.

See all papers in Proc. ACL that mention dependency parsing.

Back to top.

dependency tree

- The linear constraints in (3) will ensure that the arc variables for each sentence es encode a valid latent dependency tree , and that the f variables count up the features of these trees.Page 3, “The Constrained Optimization Task”

- This generative model defines a joint distribution over the sentences and their dependency trees .Page 3, “The Constrained Optimization Task”

- The constraints must declaratively specify that the arcs form a valid dependency tree and that the resulting feature values are as defined by the DMV.Page 3, “The Constrained Optimization Task”

- Tree Constraints To ensure that our arc variables, es, form a dependency tree , we employ the same single-commodity flow constraints of Magnanti and Wolsey (1994) as adapted by Martins et al.Page 3, “The Constrained Optimization Task”

- DMV Feature Counts The DMV generates a dependency tree recursively as follows.Page 3, “The Constrained Optimization Task”

See all papers in Proc. ACL 2013 that mention dependency tree.

See all papers in Proc. ACL that mention dependency tree.

Back to top.

latent variables

- Many models in NLP involve latent variables , such as unknown parses, tags, or alignments.Page 1, “Abstract”

- The feature counts are constrained to be derived from the latent variables (e.g., parses), which are unknown discrete structures that must be encoded with integer variables.Page 2, “The Constrained Optimization Task”

- Given a relaxed joint solution to the parameters and the latent variables, one must be able to project it to a nearby feasible one, by projecting either the fractional parameters or the fractional latent variables into the feasible space and then solving exactly for the other.Page 7, “Projections”

- The goal of this work was to better understand and address the non-convexity of maximum-likelihood training with latent variables , especially parses.Page 7, “Related Work”

- For supervised parsing, spectral leam-ing has been used to learn latent variable PCFGs (Cohen et al., 2012) and hidden-state dependency grammars (Luque et al., 2012).Page 7, “Related Work”

See all papers in Proc. ACL 2013 that mention latent variables.

See all papers in Proc. ACL that mention latent variables.

Back to top.

generative model

- As an illustrative case, we study a generative model for dependency parsing.Page 1, “Abstract”

- Other locally normalized log-linear generative models (Berg-Kirkpatrick et al., 2010) would have a similar formulation.Page 2, “The Constrained Optimization Task”

- This generative model defines a joint distribution over the sentences and their dependency trees.Page 3, “The Constrained Optimization Task”

- In principle, our branch-and-bound method can approach e-optimal solutions to Viterbi training of locally normalized generative models , including the NP-hard case of grammar induction with the DMV.Page 9, “Discussion”

See all papers in Proc. ACL 2013 that mention generative model.

See all papers in Proc. ACL that mention generative model.

Back to top.

linear programming

- At each node, our relaxation derives a linear programming problem (LP) that can be efficiently solved by the dual simplex method.Page 2, “Introduction”

- We replace our objective 2m 6m fm with 2m zm, where we would like to constrain each auxiliary variable zm to be 2 mem or (equivalently) g mem, but instead settle for making it g the concave envelope—a linear programming problem:Page 5, “Relaxations”

- Several integer linear programming (ILP) formulations of dependency parsing (Riedel and Clarke, 2006; Martins et al., 2009; Riedel et al., 2012) inspired our definition of grammar induction as a MP.Page 7, “Related Work”

- First, we run a relaxed linear programming (LP) parser, then project the (possibly fractional) parses back to the feasible region.Page 8, “Experiments”

See all papers in Proc. ACL 2013 that mention linear programming.

See all papers in Proc. ACL that mention linear programming.

Back to top.