Article Structure

Abstract

In this paper, we combine easy-first dependency parsing and POS tagging algorithms with beam search and structured perceptron.

Introduction

The easy-first dependency parsing algorithm (Goldberg and Elhadad, 2010) is attractive due to its good accuracy, fast speed and simplicity.

Easy-first dependency parsing

The easy-first dependency parsing algorithm (Goldberg and Elhadad, 2010) builds a dependency tree by performing two types of actions LEFT(i) and RIGHT(i) to a list of subtree structures p1,.

Bk <— BESTS(X,Bk_1,W)

4 return Bn_1[0](x) // tree built by the best sequence

Training

To learn the weight vector w, we use the percep-tron-based global learning3 (Collins, 2002) which updates w by rewarding the feature weights fired in the correct action sequence and punish those fired in the predicted incorrect action sequence.

Experiments

For English, we use PTB as our data set.

Conclusion and related work

This work directly extends (Goldberg and Elhadad, 2010) with beam search and global learning.

Topics

dependency parsing

- In this paper, we combine easy-first dependency parsing and POS tagging algorithms with beam search and structured perceptron.Page 1, “Abstract”

- The easy-first dependency parsing algorithm (Goldberg and Elhadad, 2010) is attractive due to its good accuracy, fast speed and simplicity.Page 1, “Introduction”

- However, to the best of our knowledge, no work in the literature has ever applied the two techniques to easy-first dependency parsing .Page 1, “Introduction”

- While applying beam-search is relatively straightforward, the main difficulty comes from combining easy-first dependency parsing with perceptron-based global learning.Page 1, “Introduction”

- While for easy-first dependency parsing , there could be multiple action sequences that yield the gold result (C1 and C2 in figure 1).Page 2, “Introduction”

- The proposed solution is general and can also be applied to other algorithms that exhibit spurious ambiguity, such as easy-first POS tagging (Ma et al., 2012) and transition-based dependency parsing with dynamic oracle (Goldberg and Nivre, 2012).Page 2, “Introduction”

- In this paper, we report experimental results on both easy-first dependency parsing and POS tagging (Ma et al., 2012).Page 2, “Introduction”

- We show that both easy-first POS tagging and dependency parsing can be improved significantly from beam search and global learning.Page 2, “Introduction”

- The easy-first dependency parsing algorithm (Goldberg and Elhadad, 2010) builds a dependency tree by performing two types of actions LEFT(i) and RIGHT(i) to a list of subtree structures p1,.Page 2, “Easy-first dependency parsing”

- 4 As shown in (Goldberg and Nivre 2012), most transition-based dependency parsers (Nivre et al., 2003; Huang and Sagae 2010;Zhang and Clark 2008) ignores spurious ambiguity by using a static oracle which maps a dependency tree to a single action sequence.Page 3, “Training”

- Table 1: Feature templates for English dependency parsing .Page 3, “Training”

See all papers in Proc. ACL 2013 that mention dependency parsing.

See all papers in Proc. ACL that mention dependency parsing.

Back to top.

beam search

- In this paper, we combine easy-first dependency parsing and POS tagging algorithms with beam search and structured perceptron.Page 1, “Abstract”

- The proposed solution can also be applied to combine beam search and structured perceptron with other systems that exhibit spurious ambiguity.Page 1, “Abstract”

- To enlarge the search space, a natural extension to greedy search is beam search .Page 1, “Introduction”

- Recent work also shows that beam search together with perceptron-based global learning (Collins, 2002) enable the use of nonlocal features that are helpful to improve parsing performance without overfitting (Zhang and Nivre, 2012).Page 1, “Introduction”

- Due to these advantages, beam search and global learning has been applied to many NLP tasks (Collins andPage 1, “Introduction”

- We show that both easy-first POS tagging and dependency parsing can be improved significantly from beam search and global learning.Page 2, “Introduction”

- Algorithm 1: Easy-first with beam searchPage 2, “Easy-first dependency parsing”

- 3 Easy-first with beam searchPage 2, “Bk <— BESTS(X,Bk_1,W)”

- In this section, we introduce easy-first with beam search in our own notations that will be used throughout the rest of this paper.Page 2, “Bk <— BESTS(X,Bk_1,W)”

- Following (Huang et al., 2012), in order to formalize beam search , we also use the argtopffleyw - <p(y) operation which returns the top s action sequences in Y according to w - (p(y).Page 2, “Bk <— BESTS(X,Bk_1,W)”

- Pseudo-code of easy-first with beam search is shown in algorithm 1.Page 2, “Bk <— BESTS(X,Bk_1,W)”

See all papers in Proc. ACL 2013 that mention beam search.

See all papers in Proc. ACL that mention beam search.

Back to top.

POS tagging

- In this paper, we combine easy-first dependency parsing and POS tagging algorithms with beam search and structured perceptron.Page 1, “Abstract”

- The proposed solution is general and can also be applied to other algorithms that exhibit spurious ambiguity, such as easy-first POS tagging (Ma et al., 2012) and transition-based dependency parsing with dynamic oracle (Goldberg and Nivre, 2012).Page 2, “Introduction”

- In this paper, we report experimental results on both easy-first dependency parsing and POS tagging (Ma et al., 2012).Page 2, “Introduction”

- We show that both easy-first POS tagging and dependency parsing can be improved significantly from beam search and global learning.Page 2, “Introduction”

- wp denotes the head word of p, tp denotes the POS tag of wp.Page 3, “Training”

- We use the standard split for dependency parsing and the split used by (Ratnaparkhi, 1996) for POS tagging .Page 3, “Experiments”

- For dependency parsing, POS tags of the training set are generated using 10-fold jackknifing.Page 3, “Experiments”

- For dependency parsing, we assume gold segmentation and POS tags for the input.Page 3, “Experiments”

- For English POS tagging , we use the same features as in (Shen et al., 2007).Page 4, “Experiments”

- For Chinese POS tagging and dependency parsing, we use the same features as (Ma et al., 2012).Page 4, “Experiments”

- We can see that Chinese POS tagging , dependency parsing as well as English dependency parsing greatly benefit from beam search.Page 4, “Experiments”

See all papers in Proc. ACL 2013 that mention POS tagging.

See all papers in Proc. ACL that mention POS tagging.

Back to top.

dependency tree

- The easy-first dependency parsing algorithm (Goldberg and Elhadad, 2010) builds a dependency tree by performing two types of actions LEFT(i) and RIGHT(i) to a list of subtree structures p1,.Page 2, “Easy-first dependency parsing”

- Input: sentence x of n words, beam width s Output: one best dependency treePage 2, “Easy-first dependency parsing”

- The algorithm proceeds until only one subtree left which is the dependency tree of the input sentence (see the example in figure 2).Page 2, “Bk <— BESTS(X,Bk_1,W)”

- Once an incorrect action is selected, it can never yield the correct dependency tree .Page 2, “Bk <— BESTS(X,Bk_1,W)”

- Finally, it returns the dependency tree built by the top action sequence in Bn_1.Page 3, “Bk <— BESTS(X,Bk_1,W)”

- 4 As shown in (Goldberg and Nivre 2012), most transition-based dependency parsers (Nivre et al., 2003; Huang and Sagae 2010;Zhang and Clark 2008) ignores spurious ambiguity by using a static oracle which maps a dependency tree to a single action sequence.Page 3, “Training”

See all papers in Proc. ACL 2013 that mention dependency tree.

See all papers in Proc. ACL that mention dependency tree.

Back to top.

model score

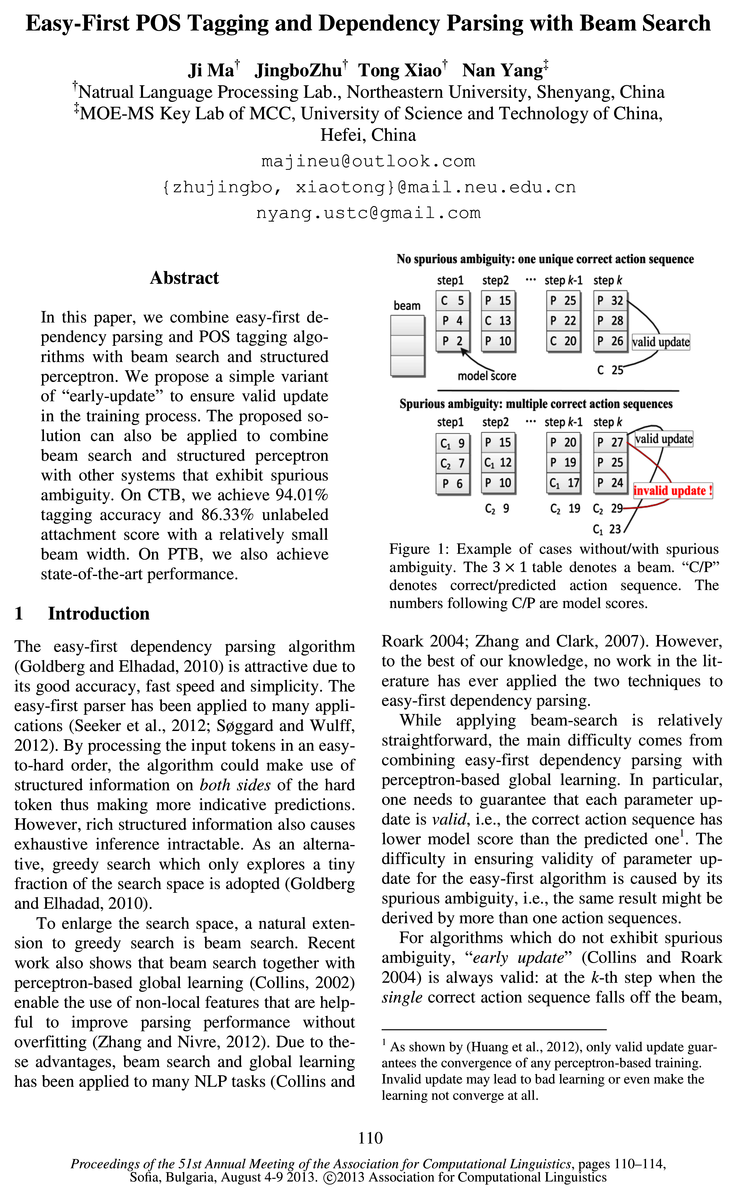

- step1 step2 stepk-1 stepk beam C 5 P 15 P 25 P 32 P 4 C 13 P 22 P 28 P 2_ P 10 C 20 P 26 alid updat model score C 25Page 1, “Introduction”

- The numbers following C/P are model scores .Page 1, “Introduction”

- In particular, one needs to guarantee that each parameter update is valid, i.e., the correct action sequence has lower model score than the predicted onel.Page 1, “Introduction”

- its model score must be lower than those still in the beam (as illustrated in figure 1, also see the proof in (Huang et al., 2012)).Page 2, “Introduction”

- When all correct sequences fall off the beam, some may indeed have higher model score than those still in the beam (C2 in figure 1), causing invalid update.Page 2, “Introduction”

- beam 13, (sequences in B are sorted in terms of model score , i.e., w-(p(13[0]) > w-(p(13[1]) ...).Page 3, “Bk <— BESTS(X,Bk_1,W)”

See all papers in Proc. ACL 2013 that mention model score.

See all papers in Proc. ACL that mention model score.

Back to top.

uas

- In particular, we achieve 86.33% uas on CTB which is 1.54% uas improvement over the greedy baseline parser.Page 4, “Experiments”

- PTB CTB uas compl uas compl 91.77 45.29 84.54 33.75 221 92.29 46.28 85.11 34.62 124 92.50 46.82 85.62 37.11 71 92.74 48.12 86.00 35.87 39Page 4, “Conclusion and related work”

- ‘uas’ and ‘compl’ denote unlabeled score and complete match rate respectively (all excluding punctuations).Page 4, “Conclusion and related work”

- Systems s uas complPage 4, “Conclusion and related work”

- Systems uas compl (Huang and Sagae, 2010) 8 92.10 —(Zhang and Nivre, 2011) 64 92.90 48.50Page 4, “Conclusion and related work”

See all papers in Proc. ACL 2013 that mention uas.

See all papers in Proc. ACL that mention uas.

Back to top.

parsing algorithm

- The easy-first dependency parsing algorithm (Goldberg and Elhadad, 2010) is attractive due to its good accuracy, fast speed and simplicity.Page 1, “Introduction”

- The easy-first dependency parsing algorithm (Goldberg and Elhadad, 2010) builds a dependency tree by performing two types of actions LEFT(i) and RIGHT(i) to a list of subtree structures p1,.Page 2, “Easy-first dependency parsing”

- The parsing algorithm is greedy which explores a tiny fraction of the search space.Page 2, “Bk <— BESTS(X,Bk_1,W)”

See all papers in Proc. ACL 2013 that mention parsing algorithm.

See all papers in Proc. ACL that mention parsing algorithm.

Back to top.

perceptron

- In this paper, we combine easy-first dependency parsing and POS tagging algorithms with beam search and structured perceptron .Page 1, “Abstract”

- The proposed solution can also be applied to combine beam search and structured perceptron with other systems that exhibit spurious ambiguity.Page 1, “Abstract”

- Current work (Huang et al., 2012) rigorously explained that only valid update ensures convergence of any perceptron variants.Page 3, “Training”

See all papers in Proc. ACL 2013 that mention perceptron.

See all papers in Proc. ACL that mention perceptron.

Back to top.