Article Structure

Abstract

Empty categories (EC) are artificial elements in Penn Treebanks motivated by the govemment-binding (GB) theory to explain certain language phenomena such as pro-drop.

Introduction

One of the key challenges in statistical machine translation (SMT) is to effectively model inherent differences between the source and the target language.

Chinese Empty Category Prediction

The empty categories in the Chinese Treebank (CTB) include trace markers for A’- and A-movement, dropped pronoun, big PRO etc.

Integrating Empty Categories in Machine Translation

In this section, we explore multiple approaches of utilizing recovered ECs in machine translation.

Experimental Results

4.1 Empty Category Prediction

Related Work

Empty categories have been studied in recent years for several languages, mostly in the context of reference resolution and syntactic processing for English, such as in (Johnson, 2002; Dienes and Dubey, 2003; Gabbard et al., 2006).

Conclusions and Future Work

In this paper, we presented a novel structured approach to EC prediction, which utilizes a maximum entropy model with various syntactic features and shows significantly higher accuracy than the state-of-the-art approaches.

Topics

parse trees

- For instance, Yang and Xue (2010) attempted to predict the existence of an EC before a word; Luo and Zhao (2011) predicted ECs on parse trees , but the position information of some ECs is partially lost in their representation.Page 2, “Chinese Empty Category Prediction”

- Furthermore, Luo and Zhao (2011) conducted experiments on gold parse trees only.Page 2, “Chinese Empty Category Prediction”

- our opinion, recovering ECs from machine parse trees is more meaningful since that is what one would encounter when developing a downstream application such as machine translation.Page 2, “Chinese Empty Category Prediction”

- In this paper, we aim to have a more comprehensive treatment of the problem: all EC types along with their locations are predicted, and we will report the results on both human parse trees and machine-generated parse trees .Page 2, “Chinese Empty Category Prediction”

- For this reason, we choose to project them onto a parse tree node.Page 2, “Chinese Empty Category Prediction”

- Notice that the input to such a predictor is a syntactic tree without ECs, e.g., the parse tree on the right hand of Figure 2 without the EC tag *pro* @ l is such an example.Page 3, “Chinese Empty Category Prediction”

- Feature 1 to 10 are computed directly from parse trees , and are straightforward.Page 3, “Chinese Empty Category Prediction”

- As mentioned in the previous section, the output of our EC predictor is a new parse tree with the labels and positionsPage 4, “Integrating Empty Categories in Machine Translation”

- In this work we also take advantages of the augmented Chinese parse trees (with ECs projected to the surface) and extract tree-to-string grammar (Liu et al., 2006) for a tree-to-string MT system.Page 4, “Integrating Empty Categories in Machine Translation”

- Due to the recovered ECs in the source parse trees , the tree-to-string grammar extracted from such trees can be more discriminative, with an increased capability of distinguishing different context.Page 4, “Integrating Empty Categories in Machine Translation”

- An example of an augmented Chinese parse tree aligned to an English string is shown in Figure 3, in which the incorrect alignment in Figure l is fixed.Page 4, “Integrating Empty Categories in Machine Translation”

See all papers in Proc. ACL 2013 that mention parse trees.

See all papers in Proc. ACL that mention parse trees.

Back to top.

word alignment

- We show that the recovered empty categories not only improve the word alignment quality, but also lead to significant improvements in a large-scale state-of-the-art syntactic MT system.Page 1, “Abstract”

- In addition, the pro-drop problem can also degrade the word alignment quality in the training data.Page 1, “Introduction”

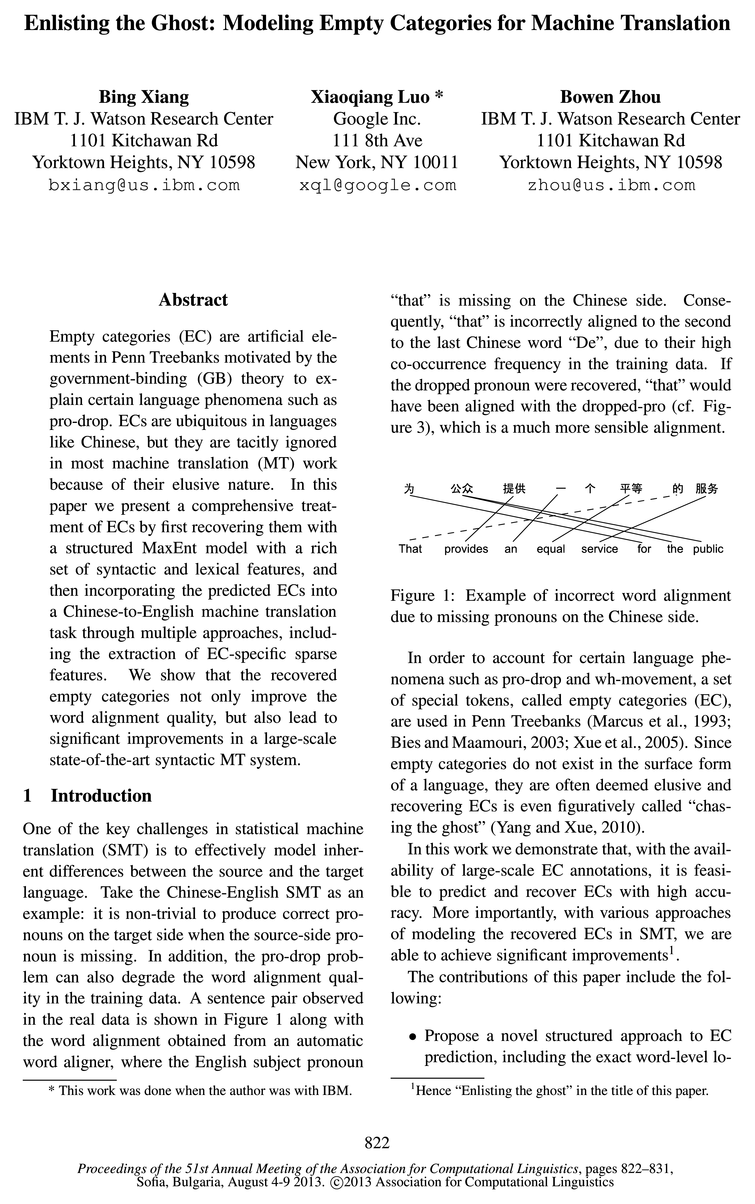

- A sentence pair observed in the real data is shown in Figure 1 along with the word alignment obtained from an automatic word aligner , where the English subject pronounPage 1, “Introduction”

- Figure 1: Example of incorrect word alignment due to missing pronouns on the Chinese side.Page 1, “Introduction”

- 0 Measure the effect of ECs on automatic word alignment for machine translation after integrating recovered ECs into the MT data;Page 2, “Introduction”

- With the preprocessed MT training corpus, an unsupervised word aligner, such as GIZA++, can be used to generate automatic word alignment , as the first step of a system training pipeline.Page 4, “Integrating Empty Categories in Machine Translation”

- The effect of inserting ECs is twofold: first, it can impact the automatic word alignment since now it allows the target-side words, especially the function words, to align to the inserted ECs and fix some errors in the original word alignment ; second, new phrases and rules can be extracted from the preprocessed training data.Page 4, “Integrating Empty Categories in Machine Translation”

- A few examples of the extracted Hiero rules and tree-to-string rules are also listed, which we would not have been able to extract from the original incorrect word alignment when the *pro* was missing.Page 4, “Integrating Empty Categories in Machine Translation”

- Figure 3: Fixed word alignment and examples of extracted Hiero rules and tree-to-string rules.Page 5, “Integrating Empty Categories in Machine Translation”

- Table 3 listed some of the most frequent English words aligned to *pro* or *PRO* in a Chinese-English parallel corpus with 2M sentence pairs.Page 5, “Integrating Empty Categories in Machine Translation”

- Then we run GIZA++ (Och and Ney, 2000) to generate the word alignment for each direction and apply grow-diagonal-final (Koehn et al., 2003), same as in the baseline.Page 7, “Experimental Results”

See all papers in Proc. ACL 2013 that mention word alignment.

See all papers in Proc. ACL that mention word alignment.

Back to top.

machine translation

- ECs are ubiquitous in languages like Chinese, but they are tacitly ignored in most machine translation (MT) work because of their elusive nature.Page 1, “Abstract”

- In this paper we present a comprehensive treatment of ECs by first recovering them with a structured MaxEnt model with a rich set of syntactic and lexical features, and then incorporating the predicted ECs into a Chinese-to-English machine translation task through multiple approaches, including the extraction of EC-specific sparse features.Page 1, “Abstract”

- One of the key challenges in statistical machine translation (SMT) is to effectively model inherent differences between the source and the target language.Page 1, “Introduction”

- ty Categories for Machine TranslationPage 1, “Introduction”

- 0 Measure the effect of ECs on automatic word alignment for machine translation after integrating recovered ECs into the MT data;Page 2, “Introduction”

- 0 Show significant improvement on top of the state-of-the-art large-scale hierarchical and syntactic machine translation systems.Page 2, “Introduction”

- our opinion, recovering ECs from machine parse trees is more meaningful since that is what one would encounter when developing a downstream application such as machine translation .Page 2, “Chinese Empty Category Prediction”

- In this section, we explore multiple approaches of utilizing recovered ECs in machine translation .Page 4, “Integrating Empty Categories in Machine Translation”

- There exists only a handful of previous work on applying ECs explicitly to machine translation so far.Page 8, “Related Work”

- First, in addition to the preprocessing of training data and inserting recovered empty categories, we implement sparse features to further boost the performance, and tune the feature weights directly towards maximizing the machine translation metric.Page 8, “Related Work”

- We also applied the predicted ECs to a large-scale Chinese-to-English machine translation task and achieved significant improvement over two strong MT base-Page 8, “Conclusions and Future Work”

See all papers in Proc. ACL 2013 that mention machine translation.

See all papers in Proc. ACL that mention machine translation.

Back to top.

F1 scores

- We compute the precision, recall and F1 scores for each EC on the test set, and collect their counts in the reference and system output.Page 5, “Experimental Results”

- The F1 scores for majority of the ECs are above 70%, except for “*”, which is relatively rare in the data.Page 5, “Experimental Results”

- For the two categories that are interesting to MT, *pro* and *PRO*, the predictor achieves 74.3% and 81.5% in F1 scores , respectively.Page 5, “Experimental Results”

- Compared to those in Table 4, the F1 scores dropped by different degrees for dif-Page 5, “Experimental Results”

- The F1 scores for *pro* and *PRO* when using system-generated parse trees are between 50% to 60%.Page 6, “Experimental Results”

- The F1 score for *PRO* drops by 0.2% slightly.Page 6, “Experimental Results”

- The model we proposed achieves 6% higher F1 score than that in (Yang and Xue, 2010) and 2.6% higher than that in (Cai et a1., 2011), which is significant.Page 6, “Experimental Results”

- Table 8: Word alignment F1 scores with or without *pro* and *PRO*.Page 7, “Experimental Results”

See all papers in Proc. ACL 2013 that mention F1 scores.

See all papers in Proc. ACL that mention F1 scores.

Back to top.

MT system

- We show that the recovered empty categories not only improve the word alignment quality, but also lead to significant improvements in a large-scale state-of-the-art syntactic MT system .Page 1, “Abstract”

- We conducted some initial error analysis on our MT system output and found that most of the errors that are related to ECs are due to the missing *pro* and *PRO*.Page 4, “Integrating Empty Categories in Machine Translation”

- For example, for a hierarchical MT system , some phrase pairs and Hiero (Chiang, 2005) rules can be extracted with recovered *pro* and *PRO* at the Chinese side.Page 4, “Integrating Empty Categories in Machine Translation”

- In this work we also take advantages of the augmented Chinese parse trees (with ECs projected to the surface) and extract tree-to-string grammar (Liu et al., 2006) for a tree-to-string MT system .Page 4, “Integrating Empty Categories in Machine Translation”

- For each phrase pair, Hiero rule or tree-to-string rule in the MT system , a binary feature fk, fires if there exists a *pro* on the source side and it aligns to one of its most frequently aligned target words found in the training corpus.Page 4, “Integrating Empty Categories in Machine Translation”

- In the Chinese-to-English MT experiments, we test two state-of-the-art MT systems .Page 6, “Experimental Results”

- The MT systems are optimized with pairwise ranking optimization (Hopkins and May, 2011) to maximize BLEU (Papineni et al., 2002).Page 7, “Experimental Results”

- We directly take advantage of the augmented parse trees in the tree-to-string grammar, which could have larger impact on the MT system performance.Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention MT system.

See all papers in Proc. ACL that mention MT system.

Back to top.

BLEU

- The MT systems are optimized with pairwise ranking optimization (Hopkins and May, 2011) to maximize BLEU (Papineni et al., 2002).Page 7, “Experimental Results”

- The BLEU scores from different systems are shown in Table 10 and Table 11, respectively.Page 7, “Experimental Results”

- Preprocessing of the data with ECs inserted improves the BLEU scores by about 0.6 for newswire and 0.2 to 0.3 for the weblog data, compared to each baseline separately.Page 7, “Experimental Results”

- Table 10: BLEU scores in the Hiero system.Page 7, “Experimental Results”

- Table 11: BLEU scores in the tree-to-string system with Hiero rules as backoff.Page 8, “Experimental Results”

See all papers in Proc. ACL 2013 that mention BLEU.

See all papers in Proc. ACL that mention BLEU.

Back to top.

phrase pairs

- For example, for a hierarchical MT system, some phrase pairs and Hiero (Chiang, 2005) rules can be extracted with recovered *pro* and *PRO* at the Chinese side.Page 4, “Integrating Empty Categories in Machine Translation”

- For each phrase pair , Hiero rule or tree-to-string rule in the MT system, a binary feature fk, fires if there exists a *pro* on the source side and it aligns to one of its most frequently aligned target words found in the training corpus.Page 4, “Integrating Empty Categories in Machine Translation”

- The motivation for such sparse features is to reward those phrase pairs and rules that have highly confident lexical pairs specifically related to ECs, and penalize those who don’t have such lexical pairs.Page 5, “Integrating Empty Categories in Machine Translation”

- One is an re-imp1ementation of Hiero (Chiang, 2005), and the other is a hybrid syntax-based tree-to-string system (Zhao and Al-onaizan, 2008), where normal phrase pairs and Hiero rules are used as a backoff for tree-to-string rules.Page 6, “Experimental Results”

- Next we extract phrase pairs , Hiero rules and tree-to-string rules from the original word alignment and the improved word alignment, and tune all the feature weights on the tuning set.Page 7, “Experimental Results”

See all papers in Proc. ACL 2013 that mention phrase pairs.

See all papers in Proc. ACL that mention phrase pairs.

Back to top.

Treebank

- Empty categories (EC) are artificial elements in Penn Treebanks motivated by the govemment-binding (GB) theory to explain certain language phenomena such as pro-drop.Page 1, “Abstract”

- In order to account for certain language phenomena such as pro-drop and wh-movement, a set of special tokens, called empty categories (EC), are used in Penn Treebanks (Marcus et al., 1993; Bies and Maamouri, 2003; Xue et al., 2005).Page 1, “Introduction”

- The empty categories in the Chinese Treebank (CTB) include trace markers for A’- and A-movement, dropped pronoun, big PRO etc.Page 2, “Chinese Empty Category Prediction”

- Our effort of recovering ECs is a two-step process: first, at training time, ECs in the Chinese Treebank are moved and preserved in the portion of the tree structures pertaining to surface words only.Page 2, “Chinese Empty Category Prediction”

- We use Chinese Treebank (CTB) V7.0 to train and test the EC prediction model.Page 5, “Experimental Results”

See all papers in Proc. ACL 2013 that mention Treebank.

See all papers in Proc. ACL that mention Treebank.

Back to top.

BLEU scores

- The BLEU scores from different systems are shown in Table 10 and Table 11, respectively.Page 7, “Experimental Results”

- Preprocessing of the data with ECs inserted improves the BLEU scores by about 0.6 for newswire and 0.2 to 0.3 for the weblog data, compared to each baseline separately.Page 7, “Experimental Results”

- Table 10: BLEU scores in the Hiero system.Page 7, “Experimental Results”

- Table 11: BLEU scores in the tree-to-string system with Hiero rules as backoff.Page 8, “Experimental Results”

See all papers in Proc. ACL 2013 that mention BLEU scores.

See all papers in Proc. ACL that mention BLEU scores.

Back to top.

sentence pairs

- A sentence pair observed in the real data is shown in Figure 1 along with the word alignment obtained from an automatic word aligner, where the English subject pronounPage 1, “Introduction”

- Table 3 listed some of the most frequent English words aligned to *pro* or *PRO* in a Chinese-English parallel corpus with 2M sentence pairs .Page 5, “Integrating Empty Categories in Machine Translation”

- The MT training data includes 2 million sentence pairs from the parallel corpora released byPage 6, “Experimental Results”

- We append a 300-sentence set, which we have human hand alignment available as reference, to the 2M training sentence pairs before running GIZA++.Page 7, “Experimental Results”

See all papers in Proc. ACL 2013 that mention sentence pairs.

See all papers in Proc. ACL that mention sentence pairs.

Back to top.

Chinese word

- Consequently, “that” is incorrectly aligned to the second to the last Chinese word “De”, due to their high co-occurrence frequency in the training data.Page 1, “Introduction”

- One of the other frequent ECs, *OP *, appears in the Chinese relative clauses, which usually have a Chinese word “De” aligned to the target side “that” or “which”.Page 4, “Integrating Empty Categories in Machine Translation”

- We first predict *pro* and *PRO* with our annotation model for all Chinese sentences in the parallel training data, with *pro* and *PRO* inserted between the original Chinese words .Page 7, “Experimental Results”

See all papers in Proc. ACL 2013 that mention Chinese word.

See all papers in Proc. ACL that mention Chinese word.

Back to top.

feature weights

- The feature weights can be tuned on a tuning set in a log-linear model along with other usual features/costs, including language model scores, bi-direction translation probabilities, etc.Page 5, “Integrating Empty Categories in Machine Translation”

- Next we extract phrase pairs, Hiero rules and tree-to-string rules from the original word alignment and the improved word alignment, and tune all the feature weights on the tuning set.Page 7, “Experimental Results”

- First, in addition to the preprocessing of training data and inserting recovered empty categories, we implement sparse features to further boost the performance, and tune the feature weights directly towards maximizing the machine translation metric.Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention feature weights.

See all papers in Proc. ACL that mention feature weights.

Back to top.

MaXEnt

- In this paper we present a comprehensive treatment of ECs by first recovering them with a structured MaxEnt model with a rich set of syntactic and lexical features, and then incorporating the predicted ECs into a Chinese-to-English machine translation task through multiple approaches, including the extraction of EC-specific sparse features.Page 1, “Abstract”

- We propose a structured MaXEnt model for predicting ECs.Page 3, “Chinese Empty Category Prediction”

- (1) is the familiar log linear (or MaXEnt ) model, where fk,(ei_1,T, 6,) is the feature function andPage 3, “Chinese Empty Category Prediction”

See all papers in Proc. ACL 2013 that mention MaXEnt.

See all papers in Proc. ACL that mention MaXEnt.

Back to top.

maximum entropy

- We parse our test set with a maximum entropy based statistical parser (Ratna—parkhi, 1997) first.Page 5, “Experimental Results”

- A maximum entropy model is utilized to predict the tags, but different types of ECs are not distinguished.Page 8, “Related Work”

- In this paper, we presented a novel structured approach to EC prediction, which utilizes a maximum entropy model with various syntactic features and shows significantly higher accuracy than the state-of-the-art approaches.Page 8, “Conclusions and Future Work”

See all papers in Proc. ACL 2013 that mention maximum entropy.

See all papers in Proc. ACL that mention maximum entropy.

Back to top.

POS tag

- leftmost child label or POS tag rightmost child label or POS tag label or POS tag of the head child the number of child nodesPage 4, “Chinese Empty Category Prediction”

- left-sibling label or POS tagPage 4, “Chinese Empty Category Prediction”

- 0 right-sibling label or POS tagPage 4, “Chinese Empty Category Prediction”

See all papers in Proc. ACL 2013 that mention POS tag.

See all papers in Proc. ACL that mention POS tag.

Back to top.

significant improvement

- We show that the recovered empty categories not only improve the word alignment quality, but also lead to significant improvements in a large-scale state-of-the-art syntactic MT system.Page 1, “Abstract”

- 0 Show significant improvement on top of the state-of-the-art large-scale hierarchical and syntactic machine translation systems.Page 2, “Introduction”

- We also applied the predicted ECs to a large-scale Chinese-to-English machine translation task and achieved significant improvement over two strong MT base-Page 8, “Conclusions and Future Work”

See all papers in Proc. ACL 2013 that mention significant improvement.

See all papers in Proc. ACL that mention significant improvement.

Back to top.