Article Structure

Abstract

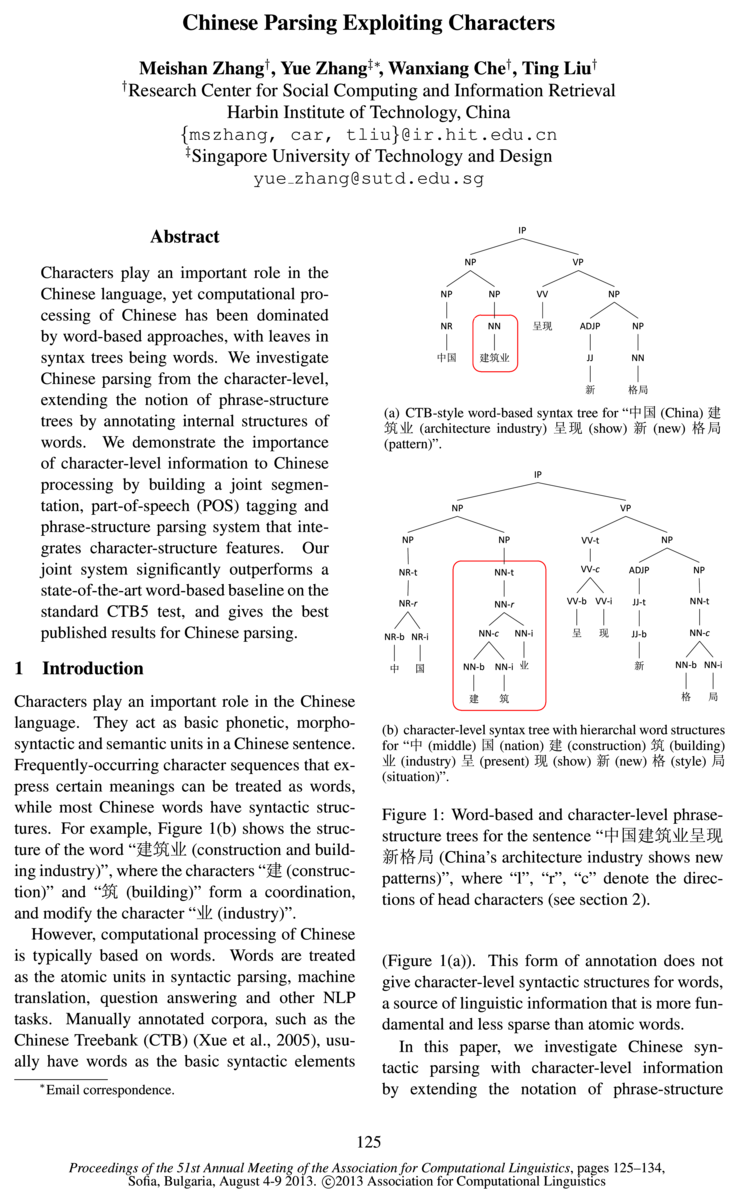

Characters play an important role in the Chinese language, yet computational processing of Chinese has been dominated by word-based approaches, with leaves in syntax trees being words.

Introduction

Characters play an important role in the Chinese language.

Word Structures and Syntax Trees

The Chinese language is a character-based language.

Character-based Chinese Parsing

To produce character-level trees for Chinese NLP tasks, we develop a character-based parsing model, which can jointly perform word segmentation, POS tagging and phrase-structure parsing.

Experiments

4.1 Setting

Related Work

Recent work on using the internal structure of words to help Chinese processing gives important motivations to our work.

Conclusions and Future Work

We studied the internal structures of more than 37,382 Chinese words, analyzing their structures as the recursive combinations of characters.

Topics

POS tagging

- Compared to a pipeline system, the advantages of a joint system include reduction of error propagation, and the integration of segmentation, POS tagging and syntax features.Page 2, “Introduction”

- To analyze word structures in addition to phrase structures, our character-based parser naturally performs joint word segmentation, POS tagging and parsing jointly.Page 2, “Introduction”

- We extend their shift-reduce framework, adding more transition actions for word segmentation and POS tagging , and defining novel features that capture character information.Page 2, “Introduction”

- art joint segmenter and POS tagger , and our baseline word—based parser.Page 2, “Introduction”

- Our parser work falls in line with recent work of joint segmentation, POS tagging and parsing (Hatori et al., 2012; Li and Zhou, 2012; Qian and Liu, 2012).Page 2, “Introduction”

- Compared with related work, our model gives the best published results for joint segmentation and POS tagging , as well as joint phrase-structure parsing on standard CTB5 evaluations.Page 2, “Introduction”

- They made use of this information to help joint word segmentation and POS tagging .Page 3, “Word Structures and Syntax Trees”

- In particular, we mark the original nodes that represent POS tags in CTB-style trees with “-t”, and insert our word structures as unary subnodes of the “-t” nodes.Page 3, “Word Structures and Syntax Trees”

- To produce character-level trees for Chinese NLP tasks, we develop a character-based parsing model, which can jointly perform word segmentation, POS tagging and phrase-structure parsing.Page 4, “Character-based Chinese Parsing”

- We make two extensions to their work to enable joint segmentation, POS tagging and phrase-structure parsing from the character level.Page 4, “Character-based Chinese Parsing”

- First, we split the original SHIFT action into SHIFT—SEPARATE (t) and SHIFT—APPEND, which jointly perform the word segmentation and POS tagging tasks.Page 5, “Character-based Chinese Parsing”

See all papers in Proc. ACL 2013 that mention POS tagging.

See all papers in Proc. ACL that mention POS tagging.

Back to top.

word segmentation

- With regard to task of parsing itself, an important advantage of the character-level syntax trees is that they allow word segmentation , part-of-speech (POS) tagging and parsing to be performed jointly, using an efficient CKY-style or shift-reduce algorithm.Page 2, “Introduction”

- To analyze word structures in addition to phrase structures, our character-based parser naturally performs joint word segmentation , POS tagging and parsing jointly.Page 2, “Introduction”

- We extend their shift-reduce framework, adding more transition actions for word segmentation and POS tagging, and defining novel features that capture character information.Page 2, “Introduction”

- They made use of this information to help joint word segmentation and POS tagging.Page 3, “Word Structures and Syntax Trees”

- For leaf characters, we follow previous work on word segmentation (Xue, 2003; Ng and Low, 2004), and use “b” and “i” to indicate the beginning and non-beginning characters of a word, respectively.Page 3, “Word Structures and Syntax Trees”

- To produce character-level trees for Chinese NLP tasks, we develop a character-based parsing model, which can jointly perform word segmentation , POS tagging and phrase-structure parsing.Page 4, “Character-based Chinese Parsing”

- First, we split the original SHIFT action into SHIFT—SEPARATE (t) and SHIFT—APPEND, which jointly perform the word segmentation and POS tagging tasks.Page 5, “Character-based Chinese Parsing”

- The string features are used for word segmentation and POS tagging, and are adapted from a state-of-the-art joint segmentation and tagging model (Zhang and Clark, 2010).Page 6, “Character-based Chinese Parsing”

- In summary, our character-based parser contains the word-based features of constituent parser presented in Zhang and Clark (2009), the word-based and shallow character-based features of joint word segmentation and POS tagging presented in Zhang and Clark (2010), and additionally the deep character-based features that encode word structure information, which are the first presented by this paper.Page 6, “Character-based Chinese Parsing”

- Since our model can jointly process word segmentation , POS tagging and phrase-structure parsing, we evaluate our model for the three tasks, respectively.Page 6, “Experiments”

- For word segmentation and POS tagging, standard metrics of word precision, recall and F-score are used, where the tagging accuracy is the joint accuracy of word segmentation and POS tagging.Page 6, “Experiments”

See all papers in Proc. ACL 2013 that mention word segmentation.

See all papers in Proc. ACL that mention word segmentation.

Back to top.

Chinese words

- Frequently-occurring character sequences that express certain meanings can be treated as words, while most Chinese words have syntactic structures.Page 1, “Introduction”

- Unlike alphabetical languages, Chinese characters convey meanings, and the meaning of most Chinese words takes roots in their character.Page 2, “Word Structures and Syntax Trees”

- Chinese words have internal structures (Xue, 2001; Ma et al., 2012).Page 2, “Word Structures and Syntax Trees”

- Zhang and Clark (2010) found that the first character in a Chinese word is a useful indicator of the word’s POS.Page 3, “Word Structures and Syntax Trees”

- Li (2011) studied the morphological structures of Chinese words , showing that 35% percent of the words in CTB5 can be treated as having morphemes.Page 3, “Word Structures and Syntax Trees”

- We take one step further in this line of work, annotating the full syntactic structures of 37,382 Chinese words in the form of Figure 2 and Figure 3.Page 3, “Word Structures and Syntax Trees”

- Trained using annotated word structures, our parser also analyzes the internal structures of Chinese words .Page 4, “Character-based Chinese Parsing”

- Zhao (2009) studied character-level dependencies for Chinese word segmentation by formalizing segmentsion task in a dependency parsing framework.Page 8, “Related Work”

- They use it as a joint framework to perform Chinese word segmentation, POS tagging and syntax parsing.Page 8, “Related Work”

- They exploit a generative maximum entropy model for character-based constituent parsing, and find that POS information is very useful for Chinese word segmentation, but high-level syntactic information seems to have little effect on segmentation.Page 8, “Related Work”

- In addition, instead of using flat structures, we manually annotate hierarchal tree structures of Chinese words for converting word-based constituent trees into character-based constituent trees.Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention Chinese words.

See all papers in Proc. ACL that mention Chinese words.

Back to top.

parsing model

- We build a character-based Chinese parsing model to parse the character-level syntax trees.Page 2, “Introduction”

- To produce character-level trees for Chinese NLP tasks, we develop a character-based parsing model , which can jointly perform word segmentation, POS tagging and phrase-structure parsing.Page 4, “Character-based Chinese Parsing”

- Our character-based Chinese parsing model is based on the work of Zhang and Clark (2009), which is a transition-based model for lexicalized constituent parsing.Page 4, “Character-based Chinese Parsing”

- The character-level parsing model has the advantage that deep character information can be extracted as features for parsing.Page 6, “Experiments”

- Zhang and Clark (2010), and the phrase-structure parsing model of Zhang and Clark (2009).Page 7, “Experiments”

- The phrase-structure parsing model is trained with a 64-beam.Page 7, “Experiments”

- We train the parsing model using the automatically generated POS tags by 10-way jackknifing, which gives about 1.5% increases in parsing accuracy when tested on automatic segmented and POS tagged inputs.Page 7, “Experiments”

- (2011), which additionally uses the Chinese Gigaword Corpus; Li ’11 denotes a generative model that can perform word segmentation, POS tagging and phrase-structure parsing jointly (Li, 2011); Li+ ’12 denotes a unified dependency parsing model that can perform joint word segmentation, POS tagging and dependency parsing (Li and Zhou, 2012); Li ’11 and Li+ ’12 exploited annotated morphological-level word structures for Chinese; Hatori+ ’12 denotes an incremental joint model for word segmentation, POS tagging and dependency parsing (Hatori et al., 2012); they use external dictionary resources including HowNet Word List and page names from the Chinese Wikipedia; Qian+ ’12 denotes a joint segmentation, POS tagging and parsing system using a unified framework for decoding, incorporating a word segmentation model, a POS tagging model and a phrase-structure parsing model together (Qian and Liu, 2012); their word segmentation model is a combination of character-based model and word-based model.Page 7, “Experiments”

- (2007) achieves 83.45%5 in parsing accuracy on the test corpus, and our pipeline constituent parsing model achieves 83.55% with gold segmentation.Page 8, “Experiments”

- The main differences between word-based and character-level parsing models are that character-level model can exploit character features.Page 8, “Experiments”

- Our character-level parsing model is inspired by the work of Zhang and Clark (2009), which is a transition-based model with a beam-search decoder for word-based constituent parsing.Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention parsing model.

See all papers in Proc. ACL that mention parsing model.

Back to top.

constituent parsing

- Our character-based Chinese parsing model is based on the work of Zhang and Clark (2009), which is a transition-based model for lexicalized constituent parsing .Page 4, “Character-based Chinese Parsing”

- In summary, our character-based parser contains the word-based features of constituent parser presented in Zhang and Clark (2009), the word-based and shallow character-based features of joint word segmentation and POS tagging presented in Zhang and Clark (2010), and additionally the deep character-based features that encode word structure information, which are the first presented by this paper.Page 6, “Character-based Chinese Parsing”

- (a) Joint segmentation and (b) Joint constituent parsing POS tagging F—scores.Page 6, “Experiments”

- Our final performance on constituent parsing is by far the best that we are aware of for the Chinese data, and even better than some state-of-the-art models with gold segmentation.Page 7, “Experiments”

- (2007) achieves 83.45%5 in parsing accuracy on the test corpus, and our pipeline constituent parsing model achieves 83.55% with gold segmentation.Page 8, “Experiments”

- Our character-level parsing model is inspired by the work of Zhang and Clark (2009), which is a transition-based model with a beam-search decoder for word-based constituent parsing .Page 8, “Related Work”

- In addition, we propose novel features related to word structures and interactions between word segmentation, POS tagging and word-based constituent parsing .Page 8, “Related Work”

- They exploit a generative maximum entropy model for character-based constituent parsing , and find that POS information is very useful for Chinese word segmentation, but high-level syntactic information seems to have little effect on segmentation.Page 8, “Related Work”

- Qian and Liu (2012) proposed a joint decoder for word segmentation, POS tagging and word-based constituent parsing , although they trained models for the three tasks separately.Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention constituent parsing.

See all papers in Proc. ACL that mention constituent parsing.

Back to top.

dependency parsing

- They studied the influence of such morphology to Chinese dependency parsing (Li and Zhou, 2012).Page 3, “Word Structures and Syntax Trees”

- (2011), which additionally uses the Chinese Gigaword Corpus; Li ’11 denotes a generative model that can perform word segmentation, POS tagging and phrase-structure parsing jointly (Li, 2011); Li+ ’12 denotes a unified dependency parsing model that can perform joint word segmentation, POS tagging and dependency parsing (Li and Zhou, 2012); Li ’11 and Li+ ’12 exploited annotated morphological-level word structures for Chinese; Hatori+ ’12 denotes an incremental joint model for word segmentation, POS tagging and dependency parsing (Hatori et al., 2012); they use external dictionary resources including HowNet Word List and page names from the Chinese Wikipedia; Qian+ ’12 denotes a joint segmentation, POS tagging and parsing system using a unified framework for decoding, incorporating a word segmentation model, a POS tagging model and a phrase-structure parsing model together (Qian and Liu, 2012); their word segmentation model is a combination of character-based model and word-based model.Page 7, “Experiments”

- Zhao (2009) studied character-level dependencies for Chinese word segmentation by formalizing segmentsion task in a dependency parsing framework.Page 8, “Related Work”

- Li and Zhou (2012) also exploited the morphological-level word structures for Chinese dependency parsing .Page 8, “Related Work”

- (2012) proposed the first joint work for the word segmentation, POS tagging and dependency parsing .Page 8, “Related Work”

- Their work demonstrates that a joint model can improve the performance of the three tasks, particularly for POS tagging and dependency parsing .Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention dependency parsing.

See all papers in Proc. ACL that mention dependency parsing.

Back to top.

beam size

- With linear-time complexity, our parser is highly efficient, processing over 30 sentences per second with a beam size of 16.Page 2, “Introduction”

- Figure 6: Accuracies against the training epoch for joint segmentation and tagging as well as joint phrase-structure parsing using beam sizes 1, 4, l6 and 64, respectively.Page 6, “Experiments”

- Figure 6 shows the accuracies of our model using different beam sizes with respect to the training epoch.Page 6, “Experiments”

- The performance of our model increases as the beam size increases.Page 6, “Experiments”

- Tested using gcc 4.7.2 and Fedora 17 on an Intel Core i5-3470 CPU (3.20GHz), the decoding speeds are 318.2, 98.0, 30.3 and 7.9 sentences per second with beam size 1, 4, l6 and 64, respectively.Page 6, “Experiments”

- Based on this experiment, we set the beam size 64 for the rest of our experiments.Page 6, “Experiments”

See all papers in Proc. ACL 2013 that mention beam size.

See all papers in Proc. ACL that mention beam size.

Back to top.

manually annotate

- Manually annotated corpora, such as the Chinese Treebank (CTB) (Xue et al., 2005), usually have words as the basic syntactic elementsPage 1, “Introduction”

- We manually annotate the structures of 37,382 words, which cover the entire CTB5.Page 2, “Introduction”

- Luo (2003) exploited this advantage by adding flat word structures without manually annotation to CTB trees, and building a generative character-based parser.Page 2, “Introduction”

- In addition, instead of using flat structures, we manually annotate hierarchal tree structures of Chinese words for converting word-based constituent trees into character-based constituent trees.Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention manually annotate.

See all papers in Proc. ACL that mention manually annotate.

Back to top.

syntactic parsing

- Words are treated as the atomic units in syntactic parsing , machine translation, question answering and other NLP tasks.Page 1, “Introduction”

- In this paper, we investigate Chinese syntactic parsing with character—level information by extending the notation of phrase-structurePage 1, “Introduction”

- The results also demonstrate that the annotated word structures are highly effective for syntactic parsing , giving an absolute improvement of 0.82% in phrase-structure parsing accuracy over the joint model with flat word structures.Page 7, “Experiments”

- Our parser jointly performs word segmentation, POS tagging and syntactic parsing .Page 9, “Conclusions and Future Work”

See all papers in Proc. ACL 2013 that mention syntactic parsing.

See all papers in Proc. ACL that mention syntactic parsing.

Back to top.

shift-reduce

- With regard to task of parsing itself, an important advantage of the character-level syntax trees is that they allow word segmentation, part-of-speech (POS) tagging and parsing to be performed jointly, using an efficient CKY-style or shift-reduce algorithm.Page 2, “Introduction”

- Our model is based on the discriminative shift-reduce parser of Zhang and Clark (2009; 2011), which is a state-of-the-art word-based phrase-structure parser for Chinese.Page 2, “Introduction”

- We extend their shift-reduce framework, adding more transition actions for word segmentation and POS tagging, and defining novel features that capture character information.Page 2, “Introduction”

- Our work is based on the shift-reduce operations of their work, while we introduce additional operations for segmentation and POS tagging.Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention shift-reduce.

See all papers in Proc. ACL that mention shift-reduce.

Back to top.

Seg

- Pipeline Seg 97.35 98.02 97.69 Tag 93.51 94.15 93.83 Parse 81.58 82.95 82.26Page 7, “Experiments”

- Flat word Seg 97.32 98.13 97.73 structures Tag 94.09 94.88 94.48 Parse 83.39 83.84 83.61Page 7, “Experiments”

- Annotated Seg 97.49 98.18 97.84 word structures Tag 94.46 95.14 94.80 Parse 84.42 84.43 84.43 WS 94.02 94.69 94.35Page 7, “Experiments”

- System Seg Tag ParsePage 8, “Experiments”

See all papers in Proc. ACL 2013 that mention Seg.

See all papers in Proc. ACL that mention Seg.

Back to top.

recursive

- (constituent) trees, adding recursive structures of characters for words.Page 2, “Introduction”

- Multi-character words can also have recursive syntactic structures.Page 3, “Word Structures and Syntax Trees”

- Our annotations are binarized recursive wordPage 3, “Word Structures and Syntax Trees”

- We studied the internal structures of more than 37,382 Chinese words, analyzing their structures as the recursive combinations of characters.Page 9, “Conclusions and Future Work”

See all papers in Proc. ACL 2013 that mention recursive.

See all papers in Proc. ACL that mention recursive.

Back to top.

joint model

- We can see that both character-level joint models outperform the pipelined system; our model with annotated word structures gives an improvement of 0.97% in tagging accuracy and 2.17% in phrase-structure parsing accuracy.Page 7, “Experiments”

- The results also demonstrate that the annotated word structures are highly effective for syntactic parsing, giving an absolute improvement of 0.82% in phrase-structure parsing accuracy over the joint model with flat word structures.Page 7, “Experiments”

- (2011), which additionally uses the Chinese Gigaword Corpus; Li ’11 denotes a generative model that can perform word segmentation, POS tagging and phrase-structure parsing jointly (Li, 2011); Li+ ’12 denotes a unified dependency parsing model that can perform joint word segmentation, POS tagging and dependency parsing (Li and Zhou, 2012); Li ’11 and Li+ ’12 exploited annotated morphological-level word structures for Chinese; Hatori+ ’12 denotes an incremental joint model for word segmentation, POS tagging and dependency parsing (Hatori et al., 2012); they use external dictionary resources including HowNet Word List and page names from the Chinese Wikipedia; Qian+ ’12 denotes a joint segmentation, POS tagging and parsing system using a unified framework for decoding, incorporating a word segmentation model, a POS tagging model and a phrase-structure parsing model together (Qian and Liu, 2012); their word segmentation model is a combination of character-based model and word-based model.Page 7, “Experiments”

- Their work demonstrates that a joint model can improve the performance of the three tasks, particularly for POS tagging and dependency parsing.Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention joint model.

See all papers in Proc. ACL that mention joint model.

Back to top.

binarization

- Our annotations are binarized recursive wordPage 3, “Word Structures and Syntax Trees”

- (Los Angeles)”, have flat structures, and we use “coordination” for their left binarization .Page 3, “Word Structures and Syntax Trees”

- The system can provide bina-rzied CFG trees in Chomsky Norm Form, and they present a reversible conversion procedure to map arbitrary CFG trees into binarized trees.Page 4, “Character-based Chinese Parsing”

- In this work, we remain consistent with their work, using the head-finding rules of Zhang and Clark (2008), and the same binarization algorithm.1 We apply the same beam-search algorithm for decoding, and employ the averaged perceptron with early-update to train our model.Page 4, “Character-based Chinese Parsing”

See all papers in Proc. ACL 2013 that mention binarization.

See all papers in Proc. ACL that mention binarization.

Back to top.

parse trees

- With richer information than word-level trees, this form of parse trees can be useful for all the aforementioned Chinese NLP applications.Page 2, “Introduction”

- As shown in Figure 5, a state ST consists of a stack S and a queue Q, where S = -- ,81,SO) contains partially constructed parse trees , and Q = (Q07Q1,°°° 7Qn—j) = (Cj,Cj+1,°°° ,Cn) iS th€ sequence of input characters that have not been processed.Page 4, “Character-based Chinese Parsing”

- parse tree 80 must correspond to a fullwordPage 4, “Character-based Chinese Parsing”

See all papers in Proc. ACL 2013 that mention parse trees.

See all papers in Proc. ACL that mention parse trees.

Back to top.

feature templates

- Table 1 shows the feature templates of our model.Page 5, “Character-based Chinese Parsing”

- The feature templates in bold are novel, are designed to encode head character information.Page 6, “Character-based Chinese Parsing”

- We find that the parsing accuracy decreases about 0.6% when the head character related features (the bold feature templates in Table l) are removed, which demonstrates the usefulness of these features.Page 6, “Experiments”

See all papers in Proc. ACL 2013 that mention feature templates.

See all papers in Proc. ACL that mention feature templates.

Back to top.

Chinese word segmentation

- Zhao (2009) studied character-level dependencies for Chinese word segmentation by formalizing segmentsion task in a dependency parsing framework.Page 8, “Related Work”

- They use it as a joint framework to perform Chinese word segmentation , POS tagging and syntax parsing.Page 8, “Related Work”

- They exploit a generative maximum entropy model for character-based constituent parsing, and find that POS information is very useful for Chinese word segmentation , but high-level syntactic information seems to have little effect on segmentation.Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention Chinese word segmentation.

See all papers in Proc. ACL that mention Chinese word segmentation.

Back to top.