Article Structure

Abstract

Conventional n-best reranking techniques often suffer from the limited scope of the n-best list, which rules out many potentially good alternatives.

Introduction

Discriminative reranking has become a popular technique for many NLP problems, in particular, parsing (Collins, 2000) and machine translation (Shen et al., 2005).

Packed Forests as Hypergraphs

Informally, a packed parse forest, or forest in short, is a compact representation of all the derivations (i.e., parse trees) for a given sentence under a context-free grammar (Billot and Lang, 1989).

Forest Reranking

3.1 Generic Reranking with the Perceptron

Supporting Forest Algorithms

4.1 Forest Oracle Recall that the Parseval F-score is the harmonic mean of labelled precision P and labelled recall R: 2PR _ 2|y fl y*| P + R lyl + |y*|

Experiments

We compare the performance of our forest reranker against n-best reranking on the Penn English Treebank (Marcus et al., 1993).

Conclusion

We have presented a framework for reranking on packed forests which compactly encodes many more candidates than n-best lists.

Topics

reranking

- Conventional n-best reranking techniques often suffer from the limited scope of the n-best list, which rules out many potentially good alternatives.Page 1, “Abstract”

- We instead propose forest reranking, a method that reranks a packed forest of exponentially many parses.Page 1, “Abstract”

- Our final result, an F—score of 91.7, outperforms both 50-best and 100-best reranking baselines, and is better than any previously reported systems trained on the Treebank.Page 1, “Abstract”

- Discriminative reranking has become a popular technique for many NLP problems, in particular, parsing (Collins, 2000) and machine translation (Shen et al., 2005).Page 1, “Introduction”

- Typically, this method first generates a list of top-n candidates from a baseline system, and then reranks this n-best list with arbitrary features that are not computable or intractable to compute within the baseline system.Page 1, “Introduction”

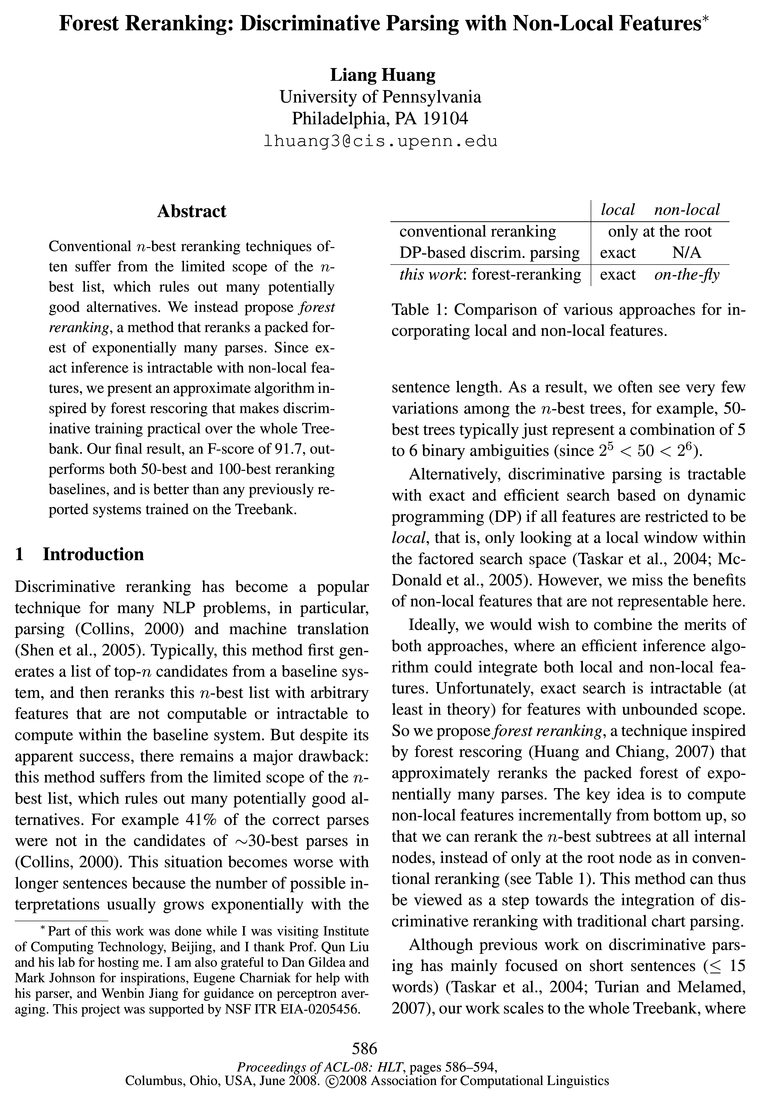

- conventional reranking only at the root DP-based discrim.Page 1, “Introduction”

- So we propose forest reranking, a technique inspired by forest rescoring (Huang and Chiang, 2007) that approximately reranks the packed forest of exponentially many parses.Page 1, “Introduction”

- The key idea is to compute nonlocal features incrementally from bottom up, so that we can rerank the n-best subtrees at all internal nodes, instead of only at the root node as in conventional reranking (see Table 1).Page 1, “Introduction”

- This method can thus be viewed as a step towards the integration of discriminative reranking with traditional chart parsing.Page 1, “Introduction”

- we achieved an F-score of 91.7, which is a 19% error reduction from the l-best baseline, and outperforms both 50-best and 100-best reranking .Page 2, “Introduction”

- Such a Treebank-style forest is easier to work with for reranking , since many features can be directly expressed in it.Page 2, “Packed Forests as Hypergraphs”

See all papers in Proc. ACL 2008 that mention reranking.

See all papers in Proc. ACL that mention reranking.

Back to top.

perceptron

- his parser, and Wenbin Jiang for guidance on perceptron averaging.Page 1, “Introduction”

- 3.1 Generic Reranking with the PerceptronPage 2, “Forest Reranking”

- In this work we use the averaged perceptron algorithm (Collins, 2002) since it is an online algorithm much simpler and orders of magnitude faster than Boosting and MaxEnt methods.Page 3, “Forest Reranking”

- Shown in Pseudocode l, the perceptron algorithm makes several passes over the whole training data, and in each iteration, for each sentence 3,, it tries to predict a best parse 3),- among the candidates cand(si) using the current weight setting.Page 3, “Forest Reranking”

- 1If one uses the gold y: for oracle , the perceptron willPage 3, “Forest Reranking”

- Pseudocode 1 Perceptron for Generic RerankingPage 3, “Forest Reranking”

- This result confirms that our feature set design is appropriate, and the averaged perceptron learner is a reasonable candidate for reranking.Page 7, “Experiments”

- We use the development set to determine the optimal number of iterations for averaged perceptron , and report the F1 score on the test set.Page 7, “Experiments”

- column is for feature extraction, and training column shows the number of perceptron iterations that achieved best results on the dev set, and average time per iteration.Page 8, “Experiments”

- 7It is surprising that 50-best reranking with local features achieves an even higher F—score of 91.28, and we suspect this is due to the aggressive updates and instability of the perceptron , as we do observe the learning curves to be non-monotonic.Page 8, “Experiments”

- With efficient approximate decoding, perceptron training on the whole Treebank becomes practical, which can be done in about a day even with a Python implementation.Page 8, “Conclusion”

See all papers in Proc. ACL 2008 that mention perceptron.

See all papers in Proc. ACL that mention perceptron.

Back to top.

Treebank

- Since exact inference is intractable with nonlocal features, we present an approximate algorithm inspired by forest rescoring that makes discriminative training practical over the whole Treebank .Page 1, “Abstract”

- Our final result, an F—score of 91.7, outperforms both 50-best and 100-best reranking baselines, and is better than any previously reported systems trained on the Treebank .Page 1, “Abstract”

- Although previous work on discriminative parsing has mainly focused on short sentences (3 15 words) (Taskar et al., 2004; Turian and Melamed, 2007), our work scales to the whole Treebank , wherePage 1, “Introduction”

- This result is also better than any previously reported systems trained on the Treebank .Page 2, “Introduction”

- However, in this work, we use forests from a Treebank parser (Charniak, 2000) whose grammar is often flat in many productions.Page 2, “Packed Forests as Hypergraphs”

- We compare the performance of our forest reranker against n-best reranking on the Penn English Treebank (Marcus et al., 1993).Page 6, “Experiments”

- We use the standard split of the Treebank : sections 02-21 as the training data (39832 sentences), section 22 as the development set (1700 sentences), and section 23 as the test set (2416 sentences).Page 7, “Experiments”

- Our final result (91.7) is better than any previously reported system trained on the Treebank , althoughPage 8, “Experiments”

- In comparison, thanks to the efficient decoding, our work not only scaled to the whole Treebank , but also successfully incorporated nonlocal features, which showed an absolute improvement of 0.44% over that of local features alone.Page 8, “Experiments”

- With efficient approximate decoding, perceptron training on the whole Treebank becomes practical, which can be done in about a day even with a Python implementation.Page 8, “Conclusion”

- Our final result outperforms both 50-best and 100-best reranking baselines, and is better than any previously reported systems trained on the Treebank .Page 8, “Conclusion”

See all papers in Proc. ACL 2008 that mention Treebank.

See all papers in Proc. ACL that mention Treebank.

Back to top.

F-score

- we achieved an F-score of 91.7, which is a 19% error reduction from the l-best baseline, and outperforms both 50-best and 100-best reranking.Page 2, “Introduction”

- yEeand where function F returns the F-score .Page 3, “Forest Reranking”

- 2In case multiple candidates get the same highest F-score , we choose the parse with the highest log probability from the baseline parser to be the oracle parse (Collins, 2000).Page 3, “Forest Reranking”

- 4.1 Forest Oracle Recall that the Parseval F-score is the harmonic mean of labelled precision P and labelled recall R: 2PR _ 2|y fl y*| P + R lyl + |y*|Page 5, “Supporting Forest Algorithms”

- In other words, the optimal F-score tree in a forest is not guaranteed to be composed of two optimal F-score subtrees.Page 5, “Supporting Forest Algorithms”

- Shown in Pseudocode 4, we perform these computations in a bottom-up topological order, and finally at the root node TOP, we can compute the best global F-score by maximizing over different numbers of test brackets (line 7).Page 6, “Supporting Forest Algorithms”

- tures in the updated version.5 However, our initial experiments show that, even with this much simpler feature set, our 50-best reranker performed equally well as theirs (both with an F-score of 91.4, see Tables 3 and 4).Page 7, “Experiments”

- With only local features, our forest reranker achieves an F-score of 91.25, and with the addition of non-Page 7, “Experiments”

See all papers in Proc. ACL 2008 that mention F-score.

See all papers in Proc. ACL that mention F-score.

Back to top.

dynamic programming

- Alternatively, discriminative parsing is tractable with exact and efficient search based on dynamic programming (DP) if all features are restricted to be local, that is, only looking at a local window within the factored search space (Taskar et al., 2004; McDonald et al., 2005).Page 1, “Introduction”

- We will present a dynamic programming algorithm for this problem in Sec.Page 3, “Forest Reranking”

- Analogous to the language model cost in forest rescoring, the unit feature cost here is a non-monotonic score in the dynamic programming backbone, and the derivations may thus be extracted oat-of-order.Page 5, “Forest Reranking”

- We instead propose a dynamic programming algorithm which optimizes the number of matched brackets for a given number of test brackets.Page 5, “Supporting Forest Algorithms”

- We also devised a dynamic programming algorithm for forest oracles, an interesting problem by itself.Page 8, “Conclusion”

See all papers in Proc. ACL 2008 that mention dynamic programming.

See all papers in Proc. ACL that mention dynamic programming.

Back to top.

subtrees

- The key idea is to compute nonlocal features incrementally from bottom up, so that we can rerank the n-best subtrees at all internal nodes, instead of only at the root node as in conventional reranking (see Table 1).Page 1, “Introduction”

- unit NGramTree instance is for the pair (wj_1, 2123-) on the boundary between the two subtrees , whose smallest common ancestor is the current node.Page 4, “Forest Reranking”

- Other unit NGramTree instances within this span have already been computed in the subtrees , except those for the boundary words of the whole node, 212,- and wk,_1, which will be computed when this node is further combined with other nodes in the future.Page 4, “Forest Reranking”

- In other words, the optimal F-score tree in a forest is not guaranteed to be composed of two optimal F-score subtrees .Page 5, “Supporting Forest Algorithms”

- Indeed, this demonstrates the severe redundancies as another disadvantage of n-best lists, where many subtrees are repeated across different parses, while the packed forest reduces space dramatically by sharing common sub-derivations (see Fig.Page 8, “Experiments”

See all papers in Proc. ACL 2008 that mention subtrees.

See all papers in Proc. ACL that mention subtrees.

Back to top.

weight vector

- As usual, we define the score of a parse y to be the dot product between a high dimensional feature representation and a weight vector w:Page 2, “Forest Reranking”

- Using a machine learning algorithm, the weight vector w can be estimated from the training data where each sentence 3,- is labelled with its correct (“gold-standard”) parse As for the learner, Collins (2000) uses the boosting algorithm and Charniak and Johnson (2005) use the maximum entropy estimator.Page 3, “Forest Reranking”

- Now we train the reranker to pick the oracle parses as often as possible, and in case an error is made (line 6), perform an update on the weight vector (line 7), by adding the difference between two feature representations.Page 3, “Forest Reranking”

- We also use a refinement called “averaged parameters” where the final weight vector is the average of weight vectors after each sentence in each iteration over the training data.Page 3, “Forest Reranking”

See all papers in Proc. ACL 2008 that mention weight vector.

See all papers in Proc. ACL that mention weight vector.

Back to top.

Bigram

- 2 (d) returns the minimum tree fragement spanning a bigram , in this case “saw” and “the”, and should thus be computed at the smallest common ancestor of the two, which is the VP node in this example.Page 4, “Forest Reranking”

- Local instances NonLocal instances Rule 10, 85 1 ParentRule 18, 019 Word 20, 328 WProj 27, 417 WordEdges 454, 101 Heads 70, 013 CoLenPar 22 HeadTree 67, 836 Bigram <> 10, 292 Heavy 1, 401 Trigram<> 24, 677 NGramTree 67, 559 HeadMod<> 12, 047 RightBranch 2 DistMod<> 16, 017 Total Feature Instances: 800, 582Page 7, “Experiments”

- We also restricted NGramTree to be on bigrams only.Page 7, “Experiments”

See all papers in Proc. ACL 2008 that mention Bigram.

See all papers in Proc. ACL that mention Bigram.

Back to top.

development set

- We use the standard split of the Treebank: sections 02-21 as the training data (39832 sentences), section 22 as the development set (1700 sentences), and section 23 as the test set (2416 sentences).Page 7, “Experiments”

- The development set and the test set are parsed with a model trained on all 39832 training sentences.Page 7, “Experiments”

- We use the development set to determine the optimal number of iterations for averaged perceptron, and report the F1 score on the test set.Page 7, “Experiments”

See all papers in Proc. ACL 2008 that mention development set.

See all papers in Proc. ACL that mention development set.

Back to top.

feature set

- Our feature set is summarized in Table 2, which closely follows Chamiak and Johnson (2005), except that we excluded the nonlocal features Edges, NGram, and CoPar, and simplified Rule and NGramTree features, since they were too complicated to compute.4 We also added four unlexicalized local features from Collins (2000) to cope with data-sparsity.Page 7, “Experiments”

- tures in the updated version.5 However, our initial experiments show that, even with this much simpler feature set , our 50-best reranker performed equally well as theirs (both with an F-score of 91.4, see Tables 3 and 4).Page 7, “Experiments”

- This result confirms that our feature set design is appropriate, and the averaged perceptron learner is a reasonable candidate for reranking.Page 7, “Experiments”

See all papers in Proc. ACL 2008 that mention feature set.

See all papers in Proc. ACL that mention feature set.

Back to top.

machine translation

- Discriminative reranking has become a popular technique for many NLP problems, in particular, parsing (Collins, 2000) and machine translation (Shen et al., 2005).Page 1, “Introduction”

- For nonlocal features, we adapt cube pruning from forest rescoring (Chiang, 2007; Huang and Chiang, 2007), since the situation here is analogous to machine translation decoding with integrated language models: we can View the scores of unit nonlocal features as the language model cost, computed on-the-fly when combining sub-constituents.Page 5, “Forest Reranking”

- We believe this general framework could also be applied to other problems involving forests or lattices, such as sequence labeling and machine translation .Page 8, “Conclusion”

See all papers in Proc. ACL 2008 that mention machine translation.

See all papers in Proc. ACL that mention machine translation.

Back to top.

Viterbi

- The exact decoding algorithm, shown in Pseudocode 2, is an instance of the bottom-up Viterbi algorithm, which traverses the hypergraph in a topological order, and at each node v, calculates its l-best derivation using each incoming hyperedge e E IN(v).Page 4, “Forest Reranking”

- function VITERBI (<V, E)) for v E V in topological order do for e E IN(v) doPage 5, “Forest Reranking”

- Basically, we use an Inside-Outside algorithm to compute the Viterbi inside cost 6(2)) and the Viterbi outside cost 04(2)) for each node v, and then compute the merit 046(6) for each hyperedge:Page 6, “Supporting Forest Algorithms”

See all papers in Proc. ACL 2008 that mention Viterbi.

See all papers in Proc. ACL that mention Viterbi.

Back to top.