Article Structure

Abstract

This paper proposes a method to correct English verb form errors made by nonnative speakers.

Introduction

In order to describe the nuances of an action, a verb may be associated with various concepts such as tense, aspect, voice, mood, person and number.

Background

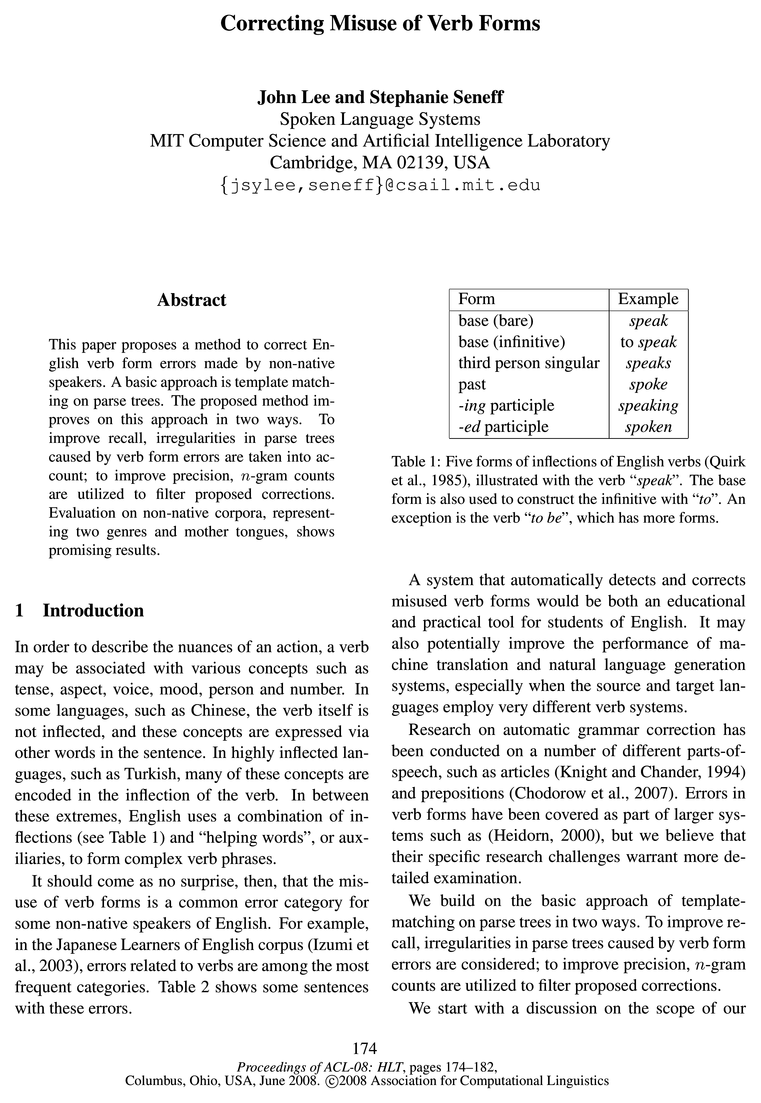

An English verb can be inflected in five forms (see Table 1).

Research Issues

One strategy for correcting verb form errors is to identify the intended syntactic relationships between the verb in question and its neighbors.

Previous Research

This section discusses previous research on processing verb form errors, and contrasts verb form errors with those of the other parts-of—speech.

Data 5.1 Development Data

To investigate irregularities in parse tree patterns (see §3.2), we utilized the AQUAINT Corpus of English News Text.

Experiments

Corresponding to the issues discussed in §3.2 and §3.3, our experiment consists of two main steps.

Conclusion

We have presented a method for correcting verb form errors.

Acknowledgments

We thank Prof. John Milton for the HKUST corpus, Tom Lee and Ken Schutte for their assistance

Topics

parse trees

- A basic approach is template matching on parse trees .Page 1, “Abstract”

- To improve recall, irregularities in parse trees caused by verb form errors are taken into account; to improve precision, n-gram counts are utilized to filter proposed corrections.Page 1, “Abstract”

- We build on the basic approach of template-matching on parse trees in two ways.Page 1, “Introduction”

- To improve recall, irregularities in parse trees caused by verb form errors are considered; to improve precision, n-gram counts are utilized to filter proposed corrections.Page 1, “Introduction”

- The success of this strategy, then, hinges on accurate identification of these items, for example, from parse trees .Page 3, “Research Issues”

- In other words, sentences containing verb form errors are more likely to yield an “incorrect” parse tree , sometimes with significant differences.Page 3, “Research Issues”

- One goal of this paper is to recognize irregularities in parse trees caused by verb form errors, in order to increase recall.Page 4, “Research Issues”

- One potential consequence of allowing for irregularities in parse tree patterns is overgeneralization.Page 4, “Research Issues”

- Similar strategies with parse trees are pursued in (Bender et al., 2004), and error templates are utilized in (Heidom, 2000) for a word processor.Page 4, “Previous Research”

- Relative to verb forms, errors in these categories do not “disturb” the parse tree as much.Page 4, “Previous Research”

- To investigate irregularities in parse tree patterns (see §3.2), we utilized the AQUAINT Corpus of English News Text.Page 4, “Data 5.1 Development Data”

See all papers in Proc. ACL 2008 that mention parse trees.

See all papers in Proc. ACL that mention parse trees.

Back to top.

n-gram

- To improve recall, irregularities in parse trees caused by verb form errors are taken into account; to improve precision, n-gram counts are utilized to filter proposed corrections.Page 1, “Abstract”

- To improve recall, irregularities in parse trees caused by verb form errors are considered; to improve precision, n-gram counts are utilized to filter proposed corrections.Page 1, “Introduction”

- We propose using n-gram counts as a filter to counter this kind of overgeneralization.Page 4, “Research Issues”

- A second goal is to show that n-gram counts can eflectively serve as a filter; in order to increase precision.Page 4, “Research Issues”

- For those categories with a high rate of false positives (all except BASEmd, BASEdO and FINITE), we utilized n-grams as filters, allowing a correction only when its n-gram count in the WEB 1T 5-GRAMPage 7, “Experiments”

- Some kind of confidence measure on the n-gram counts might be appropriate for reducing such false alarms.Page 8, “Experiments”

See all papers in Proc. ACL 2008 that mention n-gram.

See all papers in Proc. ACL that mention n-gram.

Back to top.

n-grams

- For disambiguation with n-grams (see §3.3), we made use of the WEB lT 5-GRAM corpus.Page 4, “Data 5.1 Development Data”

- Prepared by Google Inc., it contains English n-grams , up to 5-grams, with their observed frequency counts from a large number of web pages.Page 4, “Data 5.1 Development Data”

- Table 6: The n-grams used for filtering, with examples of sentences which they are intended to differentiate.Page 7, “Experiments”

- 6.2 Disambiguation with N-gramsPage 7, “Experiments”

- For those categories with a high rate of false positives (all except BASEmd, BASEdO and FINITE), we utilized n-grams as filters, allowing a correction only when its n-gram count in the WEB 1T 5-GRAMPage 7, “Experiments”

- Table 6 shows the n-grams , and Table 7 provides a breakdown of false positives in AQUAINT after 71- gram filtering.Page 7, “Experiments”

See all papers in Proc. ACL 2008 that mention n-grams.

See all papers in Proc. ACL that mention n-grams.

Back to top.