Article Structure

Abstract

In this paper, we present a reinforcement learning approach for mapping natural language instructions to sequences of executable actions.

Introduction

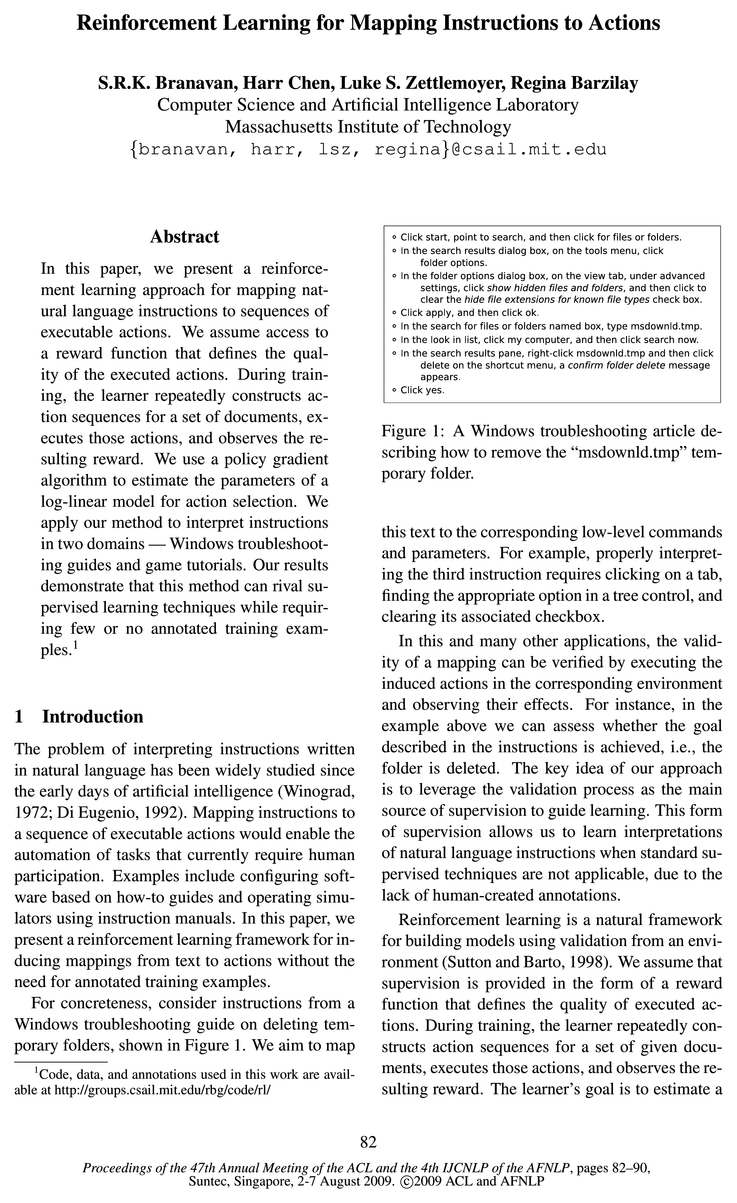

The problem of interpreting instructions written in natural language has been widely studied since the early days of artificial intelligence (Winograd, 1972; Di Eugenio, 1992).

Related Work

Grounded Language Acquisition Our work fits into a broader class of approaches that aim to learn language from a situated context (Mooney, 2008a; Mooney, 2008b; Fleischman and Roy, 2005; Yu and Ballard, 2004; Siskind, 2001; Oates, 2001).

Problem Formulation

Our task is to learn a mapping between documents and the sequence of actions they express.

A Log-Linear Model for Actions

Our goal is to predict a sequence of actions.

Reinforcement Learning

During training, our goal is to find the optimal policy p(a|s; 6).

Applying the Model

We study two applications of our model: following instructions to perform software tasks, and solving a puzzle game using tutorial guides.

Experimental Setup

Datasets For the Windows domain, our dataset consists of 128 documents, divided into 70 for training, 18 for development, and 40 for test.

Results

Table 2 presents evaluation results on the test sets.

Conclusions

In this paper, we presented a reinforcement leam-ing approach for inducing a mapping between instructions and actions.

Topics

natural language

- In this paper, we present a reinforcement learning approach for mapping natural language instructions to sequences of executable actions.Page 1, “Abstract”

- The problem of interpreting instructions written in natural language has been widely studied since the early days of artificial intelligence (Winograd, 1972; Di Eugenio, 1992).Page 1, “Introduction”

- This form of supervision allows us to learn interpretations of natural language instructions when standard supervised techniques are not applicable, due to the lack of human-created annotations.Page 1, “Introduction”

- These systems converse with a human user by taking actions that emit natural language utterances.Page 2, “Related Work”

- Our formulation is unique in how it represents natural language in the reinforcement learning framework.Page 5, “Reinforcement Learning”

See all papers in Proc. ACL 2009 that mention natural language.

See all papers in Proc. ACL that mention natural language.

Back to top.

log-linear

- We use a policy gradient algorithm to estimate the parameters of a log-linear model for action selection.Page 1, “Abstract”

- Our policy is modeled in a log-linear fashion, allowing us to incorporate features of both the instruction text and the environment.Page 2, “Introduction”

- Given a state 3 = (5, d, j, W), the space of possible next actions is defined by enumerating sub-spans of unused words in the current sentence (i.e., subspans of the jth sentence of d not in W), and the possible commands and parameters in environment state 5.4 We model the policy distribution p(a|s; 6) over this action space in a log-linear fashion (Della Pietra et al., 1997; Lafferty et al., 2001), giving us the flexibility to incorporate a diverse range of features.Page 3, “A Log-Linear Model for Actions”

- which is the derivative of a log-linear distribution.Page 4, “Reinforcement Learning”

See all papers in Proc. ACL 2009 that mention log-linear.

See all papers in Proc. ACL that mention log-linear.

Back to top.

edit distance

- edit distance between 7.0 E W and object labels in sPage 5, “Applying the Model”

- All features are binary, except for the normalized edit distance which is real-valued.Page 5, “Applying the Model”

- 6We assume that a word maps to an environment object if the edit distance between the word and the object’s name is below a threshold value.Page 6, “Applying the Model”

See all papers in Proc. ACL 2009 that mention edit distance.

See all papers in Proc. ACL that mention edit distance.

Back to top.

word alignment

- Additionally, we compute a word alignment score to investigate the extent to which the input text is used to construct correct analyses.Page 7, “Experimental Setup”

- The word alignment results from Table 2 indicate that the learners are mapping the correct words to actions for documents that are successfully completed.Page 8, “Results”

- For example, the models that perform best in the Windows domain achieve nearly perfect word alignment scores.Page 8, “Results”

See all papers in Proc. ACL 2009 that mention word alignment.

See all papers in Proc. ACL that mention word alignment.

Back to top.