Article Structure

Abstract

We present a new approach to learning a semantic parser (a system that maps natural language sentences into logical form).

Introduction

Semantic parsing is the task of mapping a natural language (NL) sentence into a completely formal meaning representation (MR) or logical form.

Background

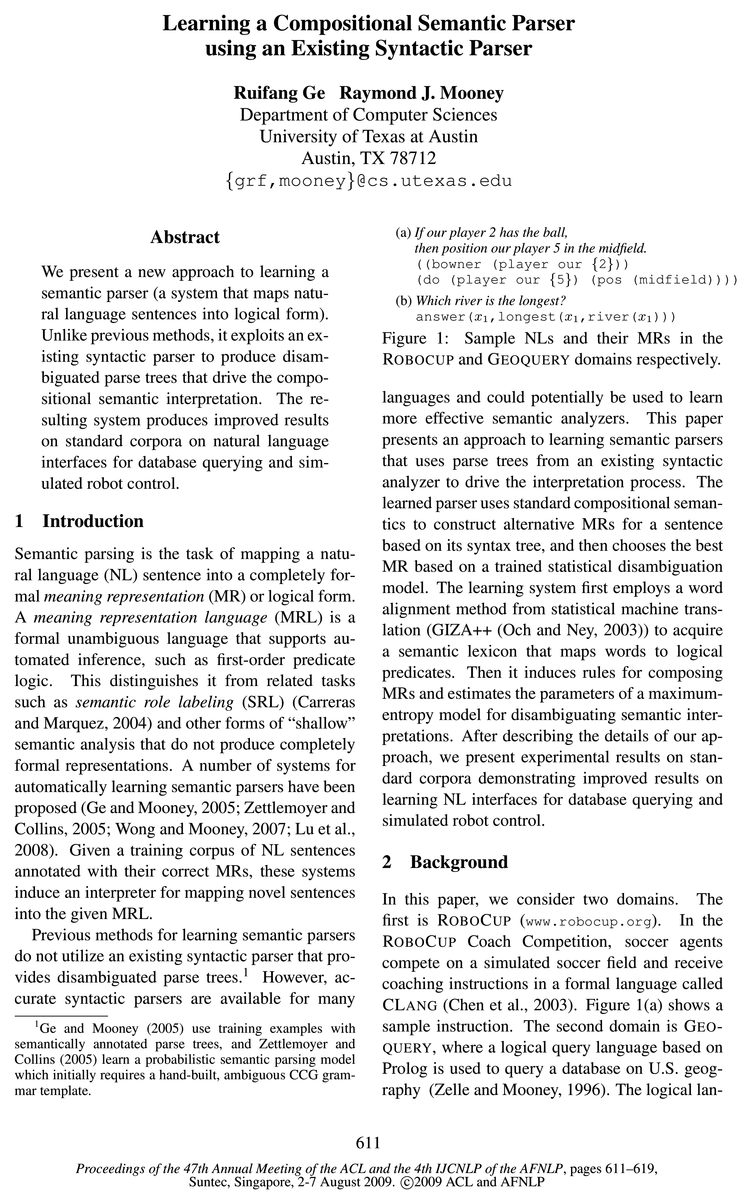

In this paper, we consider two domains.

Semantic Parsing Framework

This section describes our basic framework, which is based on a fairly standard approach to computational semantics (Blackburn and Bos, 2005).

Ensuring Meaning Composition

The basic compositional method in Sec.

Learning Semantic Knowledge

Learning semantic knowledge starts from learning the mapping from words to predicates.

Learning a Disambiguation Model

Usually, multiple possible semantic derivations for an NL sentence are warranted by the acquired semantic knowledge, thus disambiguation is needed.

Experimental Evaluation

We evaluated our approach on two standard corpora in CLANG and GEOQUERY.

Conclusion and Future work

We have presented a new approach to learning a semantic parser that utilizes an existing syntactic parser to drive compositional semantic interpretation.

Topics

syntactic parse

- Unlike previous methods, it exploits an existing syntactic parser to produce disambiguated parse trees that drive the compositional semantic interpretation.Page 1, “Abstract”

- Previous methods for learning semantic parsers do not utilize an existing syntactic parser that provides disambiguated parse trees.1 However, accurate syntactic parsers are available for manyPage 1, “Introduction”

- The framework is composed of three components: 1) an existing syntactic parser to produce parse trees for NL sentences; 2) learned semantic knowledgPage 2, “Semantic Parsing Framework”

- 5), including a semantic lexicon to assign possible predicates (meanings) to words, and a set of semantic composition rules to construct possible MRs for each internal node in a syntactic parse given its children’s MRs; and 3) a statistical disambiguation model (cf.Page 2, “Semantic Parsing Framework”

- First, the syntactic parser produces a parse tree for the NL sentence.Page 2, “Semantic Parsing Framework”

- Third, all possible MRs for the sentence are constructed compositionally in a recursive, bottom-up fashion following its syntactic parse using composition rules.Page 2, “Semantic Parsing Framework”

- 1(a) given its syntactic parse in Fig.Page 2, “Semantic Parsing Framework”

- A SAPT adds a semantic label to each non-leaf node in the syntactic parse tree.Page 2, “Semantic Parsing Framework”

- 3 only works if the syntactic parse tree strictly follows the predicate-argument structure of the MR, since meaning composition at each node is assumed to combine a predicate with one of its arguments.Page 3, “Ensuring Meaning Composition”

- 1(a) according to the syntactic parse in Fig.Page 3, “Ensuring Meaning Composition”

- Macro-predicates are introduced as needed during training in order to ensure that each MR in the training set can be composed using the syntactic parse of its corresponding NL given reasonable assignments of predicates to words.Page 4, “Ensuring Meaning Composition”
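The recursive, bottom-up construction of candidate MRs described in the excerpts above can be sketched roughly as follows. This is a toy illustration under stated assumptions, not the paper's implementation: the tuple encoding of meanings, the `compose` and `all_mrs` helpers, and the example lexicon are all hypothetical, and arguments are simply filled in the order they are encountered.

```python
from itertools import product

# Toy encoding (an assumption for illustration): a meaning is
# (predicate, filled_args, arity); None stands for a word with no meaning.

def is_complete(meaning):
    """A meaning is complete once all of its argument slots are filled."""
    return len(meaning[1]) == meaning[2]

def compose(a, b):
    """Combine two child meanings: a predicate takes the other child as an argument."""
    if a is None:
        return [b]
    if b is None:
        return [a]
    results = []
    if not is_complete(a) and is_complete(b):
        results.append((a[0], a[1] + (b,), a[2]))
    if not is_complete(b) and is_complete(a):
        results.append((b[0], b[1] + (a,), b[2]))
    return results

def all_mrs(node, lexicon):
    """Enumerate candidate meanings for a binary parse tree, bottom-up.

    A leaf is a word (str); an internal node is a (left, right) pair.
    """
    if isinstance(node, str):
        return list(lexicon.get(node, [None]))
    left, right = node
    results = []
    for a, b in product(all_mrs(left, lexicon), all_mrs(right, lexicon)):
        results.extend(compose(a, b))
    return results

# Hypothetical lexicon and binarized parse, for illustration only.
lexicon = {
    "has": [("owns", (), 2)],
    "player": [("player", (), 0)],
    "ball": [("ball", (), 0)],
}
tree = ("player", ("has", ("the", "ball")))
candidates = all_mrs(tree, lexicon)
```

When a word has several lexicon entries, `all_mrs` returns several candidates for the sentence, which is where a disambiguation model would be needed to choose among them.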

See all papers in Proc. ACL 2009 that mention syntactic parse.

semantic parser

- We present a new approach to learning a semantic parser (a system that maps natural language sentences into logical form).Page 1, “Abstract”

- Semantic parsing is the task of mapping a natural language (NL) sentence into a completely formal meaning representation (MR) or logical form.Page 1, “Introduction”

- A number of systems for automatically learning semantic parsers have been proposed (Ge and Mooney, 2005; Zettlemoyer and Collins, 2005; Wong and Mooney, 2007; Lu et al., 2008).Page 1, “Introduction”

- Previous methods for learning semantic parsers do not utilize an existing syntactic parser that provides disambiguated parse trees.1 However, accurate syntactic parsers are available for manyPage 1, “Introduction”

- 1Ge and Mooney (2005) use training examples with semantically annotated parse trees, and Zettlemoyer and Collins (2005) learn a probabilistic semantic parsing modelPage 1, “Introduction”

- This paper presents an approach to learning semantic parsers that uses parse trees from an existing syntactic analyzer to drive the interpretation process.Page 1, “Introduction”

- The process of generating the semantic parse for an NL sentence is as follows.Page 2, “Semantic Parsing Framework”

- Here, unique word alignments are not required, and alternative interpretations compete for the best semantic parse.Page 6, “Learning a Disambiguation Model”

- Second, a semantic parser was learned from the training set augmented with their syntactic parses.Page 7, “Experimental Evaluation”

- Finally, the learned semantic parser was used to generate the MRs for the test sentences using their syntactic parses.Page 7, “Experimental Evaluation”

- We measured the performance of semantic parsing using precision (percentage of returned MRs that were correct), recall (percentage of test examples with correct MRs returned), and F-measure (harmonic mean of precision and recall).Page 7, “Experimental Evaluation”
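The three metrics defined in the last excerpt can be computed from simple counts. The following is a minimal sketch; the function name and its parameters are assumptions chosen for illustration and do not come from the paper.

```python
def evaluate(num_test, num_returned, num_correct):
    """Return (precision, recall, F-measure) from raw counts.

    num_test:     number of test sentences
    num_returned: number of sentences for which the parser returned an MR
    num_correct:  number of returned MRs that were correct
    """
    precision = num_correct / num_returned if num_returned else 0.0
    recall = num_correct / num_test if num_test else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f_measure = 2 * precision * recall / (precision + recall)
    return precision, recall, f_measure

# Made-up counts: 100 test sentences, 80 MRs returned, 60 of them correct.
p, r, f = evaluate(100, 80, 60)
```

Precision and recall differ only in the denominator, so a parser that declines to answer some sentences can have high precision but lower recall.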

word alignment

- The learning system first employs a word alignment method from statistical machine translation (GIZA++ (Och and Ney, 2003)) to acquire a semantic lexicon that maps words to logical predicates.Page 1, “Introduction”

- We use an approach based on Wong and Mooney (2006), which constructs word alignments between NL sentences and their MRs.Page 4, “Learning Semantic Knowledge”

- Normally, word alignment is used in statistical machine translation to match words in one NL to words in another; here it is used to align words with predicates based on a “parallel corpus” of NL sentences and MRs. We assume that each word alignment defines a possible mapping from words to predicates for building a SAPT and semantic derivation which compose the correct MR. A semantic lexicon and composition rules are then extracted directly from thePage 4, “Learning Semantic Knowledge”

- Generation of word alignments for each training example proceeds as follows.Page 5, “Learning Semantic Knowledge”

- After predicates are assigned to words using word alignment, for each alignment of a training example and its syntactic parse, a SAPT is generated for composing the correct MR using the processes discussed in Sections 3 and 4.Page 5, “Learning Semantic Knowledge”

- Here, unique word alignments are not required, and alternative interpretations compete for the best semantic parse.Page 6, “Learning a Disambiguation Model”

- We also evaluated the impact of the word alignment component by replacing Giza++ by gold-standard word alignments manually annotated for the CLANG corpus.Page 8, “Experimental Evaluation”

- The results consistently showed that compared to using gold-standard word alignment, Giza++ produced lower semantic parsing accuracy when given very little training data, but similar or better results when given sufficient training data (> 160 examples).Page 8, “Experimental Evaluation”

- This suggests that, given sufficient data, Giza++ can produce effective word alignments, and that imperfect word alignments do not seriously impair our semantic parsers since the disambiguation model evaluates multiple possible interpretations of ambiguous words.Page 8, “Experimental Evaluation”

- Using multiple potential alignments from Giza++ sometimes performs even better than using a single gold-standard word alignment because it allows multiple interpretations to be evaluated by the global disambiguation model.Page 8, “Experimental Evaluation”

- The approach also exploits methods from statistical MT (word alignment) and therefore integrates techniques from statistical syntactic parsing, MT, and compositional semantics to produce an effective semantic parser.Page 8, “Conclusion and Future work”
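To make the idea of turning word alignments into a semantic lexicon concrete, here is a small sketch. It assumes the alignments have already been produced by some aligner and are given as (word, predicate) pairs; it does not call GIZA++, and the `build_lexicon` helper and the example predicates are hypothetical.

```python
from collections import defaultdict

def build_lexicon(alignments):
    """Collect, for every word, the predicates it was aligned to.

    alignments: one list of (word, predicate) pairs per training example,
                possibly several alternative alignments for one sentence.
    """
    lexicon = defaultdict(set)
    for example in alignments:
        for word, predicate in example:
            lexicon[word].add(predicate)
    # Sort for a deterministic result.
    return {word: sorted(preds) for word, preds in lexicon.items()}

# Made-up alignments for illustration only.
alignments = [
    [("has", "owns"), ("ball", "ball")],
    [("has", "holds")],
]
toy_lexicon = build_lexicon(alignments)
```

Keeping every aligned predicate for a word, rather than a single best one, mirrors the point made in the excerpts that unique alignments are not required and that ambiguity is left to the disambiguation model.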

parse tree

- Unlike previous methods, it exploits an existing syntactic parser to produce disambiguated parse trees that drive the compositional semantic interpretation.Page 1, “Abstract”

- 1Ge and Mooney (2005) use training examples with semantically annotated parse trees, and Zettlemoyer and Collins (2005) learn a probabilistic semantic parsing modelPage 1, “Introduction”

- This paper presents an approach to learning semantic parsers that uses parse trees from an existing syntactic analyzer to drive the interpretation process.Page 1, “Introduction”

- The framework is composed of three components: 1) an existing syntactic parser to produce parse trees for NL sentences; 2) learned semantic knowledgPage 2, “Semantic Parsing Framework”

- First, the syntactic parser produces a parse tree for the NL sentence.Page 2, “Semantic Parsing Framework”

- 3(a) shows one possible semantically-augmented parse tree (SAPT) (Ge and Mooney, 2005) for the condition part of the example in Fig.Page 2, “Semantic Parsing Framework”

- A SAPT adds a semantic label to each non-leaf node in the syntactic parse tree.Page 2, “Semantic Parsing Framework”

- The compositional process assumes a binary parse tree suitable for predicate-argument composition; parses in Penn-treebank style are binarized using Collins’ (1999) method.Page 2, “Semantic Parsing Framework”

- 3 only works if the syntactic parse tree strictly follows the predicate-argument structure of the MR, since meaning composition at each node is assumed to combine a predicate with one of its arguments.Page 3, “Ensuring Meaning Composition”

- Next, each resulting parse tree is linearized to produce a sequence of predicates by using a top-down, left-to-right traversal of the parse tree.Page 5, “Learning Semantic Knowledge”
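The linearization step in the last excerpt amounts to a pre-order traversal. A minimal sketch follows, assuming each tree node is a `(predicate, children)` pair; this representation, the `linearize` helper, and the example predicate names are illustrative assumptions rather than the paper's data structures.

```python
def linearize(tree):
    """Return the node labels in top-down, left-to-right (pre-order) order."""
    label, children = tree
    sequence = [label]
    for child in children:
        sequence.extend(linearize(child))
    return sequence

# Made-up tree: a root with two children, the first of which has one child.
example_tree = ("a", [("b", [("d", [])]), ("c", [])])
predicate_sequence = linearize(example_tree)
```

The resulting flat sequence is the kind of input a word aligner expects, since it pairs a sequence of words with a sequence of predicates.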

gold-standard

- Note that unlike SCISSOR (Ge and Mooney, 2005), training our method does not require gold-standard SAPTs.Page 4, “Ensuring Meaning Composition”

- For GEOQUERY, an MR was correct if it retrieved the same answer as the gold-standard query, thereby reflecting the quality of the final result returned to the user.Page 7, “Experimental Evaluation”

- Listed together with their PARSEVAL F-measures these are: gold-standard parses from the treebank (GoldSyn, 100%), a parser trained on WSJ plus a small number of in-domain training sentences required to achieve good performance, 20 for CLANG (Syn20, 88.21%) and 40 for GEOQUERY (Syn40, 91.46%), and a parser trained on no in-domain data (Syn0, 82.15% for CLANG and 76.44% for GEOQUERY).Page 7, “Experimental Evaluation”

- Note that some of these approaches require additional human supervision, knowledge, or engineered features that are unavailable to the other systems; namely, SCISSOR requires gold-standard SAPTs, Z&C requires hand-built template grammar rules, LU requires a reranking model using specially designed global features, and our approach requires an existing syntactic parser.Page 7, “Experimental Evaluation”

- We also evaluated the impact of the word alignment component by replacing Giza++ by gold-standard word alignments manually annotated for the CLANG corpus.Page 8, “Experimental Evaluation”

- The results consistently showed that compared to using gold-standard word alignment, Giza++ produced lower semantic parsing accuracy when given very little training data, but similar or better results when given sufficient training data (> 160 examples).Page 8, “Experimental Evaluation”

- Using multiple potential alignments from Giza++ sometimes performs even better than using a single gold-standard word alignment because it allows multiple interpretations to be evaluated by the global disambiguation model.Page 8, “Experimental Evaluation”

treebank

- Experiments on CLANG and GEOQUERY showed that the performance can be greatly improved by adding a small number of treebanked examples from the corresponding training set together with the WSJ corpus.Page 7, “Experimental Evaluation”

- Listed together with their PARSEVAL F-measures these are: gold-standard parses from the treebank (GoldSyn, 100%), a parser trained on WSJ plus a small number of in-domain training sentences required to achieve good performance, 20 for CLANG (Syn20, 88.21%) and 40 for GEOQUERY (Syn40, 91.46%), and a parser trained on no in-domain data (Syn0, 82.15% for CLANG and 76.44% for GEOQUERY).Page 7, “Experimental Evaluation”

- This demonstrates the advantage of utilizing existing syntactic parsers that are learned from large open domain treebanks instead of relying just on the training data.Page 8, “Experimental Evaluation”

- By exploiting an existing syntactic parser trained on a large treebank, our approach produces improved results on standard corpora, particularly when training data is limited or sentences are long.Page 8, “Conclusion and Future work”

in-domain

- Listed together with their PARSEVAL F-measures these are: gold-standard parses from the treebank (GoldSyn, 100%), a parser trained on WSJ plus a small number of in-domain training sentences required to achieve good performance, 20 for CLANG (Syn20, 88.21%) and 40 for GEOQUERY (Syn40, 91.46%), and a parser trained on no in-domain data (Syn0, 82.15% for CLANG and 76.44% for GEOQUERY).Page 7, “Experimental Evaluation”

- ones trained on more in-domain data) improved our approach.Page 7, “Experimental Evaluation”

- Table 5: Performance on GEO250 (20 in-domain sentences are used in SYN20 to train the syntactic parser).Page 8, “Experimental Evaluation”

meaning representation

- Semantic parsing is the task of mapping a natural language (NL) sentence into a completely formal meaning representation (MR) or logical form.Page 1, “Introduction”

- A meaning representation language (MRL) is a formal unambiguous language that supports automated inference, such as first-order predicate logic.Page 1, “Introduction”

- Note the results for SCISSOR, KRISP and LU on GEOQUERY are based on a different meaning representation language, FUNQL, which has been shown to produce lower results (Wong and Mooney, 2007).Page 8, “Experimental Evaluation”

natural language

- We present a new approach to learning a semantic parser (a system that maps natural language sentences into logical form).Page 1, “Abstract”

- The resulting system produces improved results on standard corpora on natural language interfaces for database querying and simulated robot control.Page 1, “Abstract”

- Semantic parsing is the task of mapping a natural language (NL) sentence into a completely formal meaning representation (MR) or logical form.Page 1, “Introduction”

parsing model

- 1Ge and Mooney (2005) use training examples with semantically annotated parse trees, and Zettlemoyer and Collins (2005) learn a probabilistic semantic parsing modelPage 1, “Introduction”

- Both subtasks require a training set of NLs paired with their MRs. Each NL sentence also requires a syntactic parse generated using Bikel’s (2004) implementation of Collins parsing model 2.Page 4, “Ensuring Meaning Composition”

- First Bikel’s implementation of Collins parsing model 2 was trained to generate syntactic parses.Page 7, “Experimental Evaluation”

reranking

- available): SCISSOR (Ge and Mooney, 2005), an integrated syntactic-semantic parser; KRISP (Kate and Mooney, 2006), an SVM-based parser using string kernels; WASP (Wong and Mooney, 2006; Wong and Mooney, 2007), a system based on synchronous grammars; Z&C (Zettlemoyer and Collins, 2007)3, a probabilistic parser based on relaxed CCG grammars; and LU (Lu et al., 2008), a generative model with discriminative reranking.Page 7, “Experimental Evaluation”

- Note that some of these approaches require additional human supervision, knowledge, or engineered features that are unavailable to the other systems; namely, SCISSOR requires gold-standard SAPTs, Z&C requires hand-built template grammar rules, LU requires a reranking model using specially designed global features, and our approach requires an existing syntactic parser.Page 7, “Experimental Evaluation”

- Reranking could also potentially improve the results (Ge and Mooney, 2006; Lu et al., 2008).Page 8, “Conclusion and Future work”
