Article Structure

Abstract

Can we automatically compose a large set of Wiktionaries and translation dictionaries to yield a massive, multilingual dictionary whose coverage is substantially greater than that of any of its constituent dictionaries?

Introduction and Motivation

In the era of globalization, interlingual communication is becoming increasingly important.

Building a Translation Graph

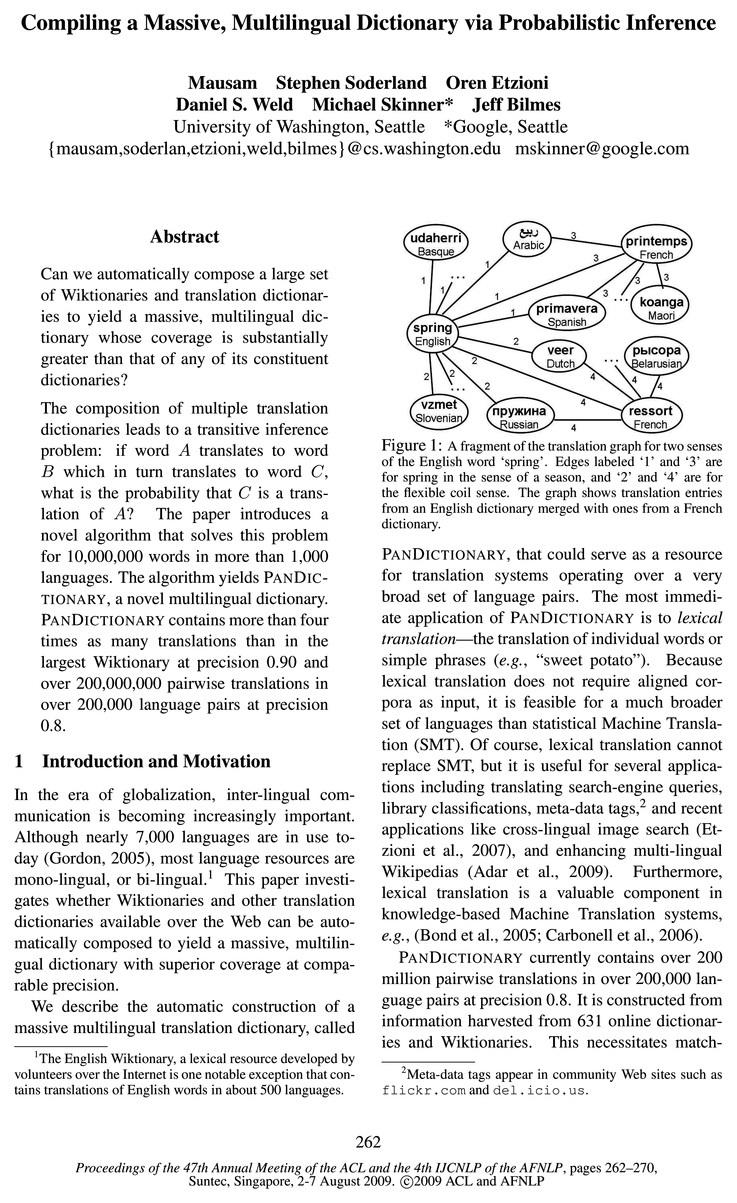

In previous work (Etzioni et al., 2007) we introduced an approach to sense matching that is based on translation graphs (see Figure 1 for an example).

Translation Inference Algorithms

In essence, inference over a translation graph amounts to transitive sense matching: if word A translates to word B, which translates in turn to word 0, what is the probability that C is a translation of A?

Empirical Evaluation

In our experiments we investigate three key questions: (1) which of the three algorithms (TG, uSP and SP) is superior for translation inference (Section 4.2)?

Related Work

Because we are considering a relatively new problem (automatically building a panlingual translation resource) there is little work that is directly related to our own.

Conclusions

We have described the automatic construction of a unique multilingual translation resource, called PANDICTIONARY, by performing probabilistic inference over the translation graph.

Topics

word sense

- ing word senses across multiple, independently-authored dictionaries.Page 2, “Introduction and Motivation”

- Undirected edges in the graph denote translations between words: an edge e E 5 between (ml, [1) and (mg, [2) represents the belief that ml and ’LU2 share at least one word sense .Page 2, “Building a Translation Graph”

- TRANS GRAPH searched for paths in the graph between two vertices and estimated the probability that the path maintains the same word sense along all edges in the path, even when the edges come from different dictionaries.Page 2, “Building a Translation Graph”

- One formula estimates the probability that two multilingual dictionary entries represent the same word sense , based on the proportion of overlapping translations for the two entries.Page 2, “Building a Translation Graph”

- In such cases, there is a high probability that all 3 nodes share a word sense .Page 3, “Building a Translation Graph”

- However, if A, B, and C are on a circuit that starts at A, passes through B and C and returns to A, there is a high probability that all nodes on that circuit share a common word sense , given certain restrictions that we enumerate later.Page 3, “Translation Inference Algorithms”

- Each clique in the graph represents a set of vertices that share a common word sense .Page 5, “Translation Inference Algorithms”

- When two cliques intersect in two or more vertices, the intersecting vertices share the word sense of both cliques.Page 5, “Translation Inference Algorithms”

- This may either mean that both cliques represent the same word sense , or that the intersecting vertices form an ambiguity set.Page 5, “Translation Inference Algorithms”

- All nodes of the clique V1, V2, A, B, C, D share a word sense, and all nodes of the clique B, C, E, F, G, H also share a word sense .Page 5, “Translation Inference Algorithms”

- Our goal is to automatically compile PANDICTIONARY, a sense-distinguished lexical translation resource, where each entry is a distinct word sense .Page 5, “Translation Inference Algorithms”

See all papers in Proc. ACL 2009 that mention word sense.

See all papers in Proc. ACL that mention word sense.

Back to top.

language pairs

- PANDICTIONARY contains more than four times as many translations than in the largest Wiktionary at precision 0.90 and over 200,000,000 pairwise translations in over 200,000 language pairs at precision 0.8.Page 1, “Abstract”

- PANDICTIONARY, that could serve as a resource for translation systems operating over a very broad set of language pairs .Page 1, “Introduction and Motivation”

- PANDICTIONARY currently contains over 200 million pairwise translations in over 200,000 language pairs at precision 0.8.Page 1, “Introduction and Motivation”

- We describe the design and construction of PAN DICTIONARY—a novel lexical resource that spans over 200 million pairwise translations in over 200,000 language pairs at 0.8 precision, a fourfold increase when compared to the union of its input translation dictionaries.Page 2, “Introduction and Motivation”

- Such people are hard to find and may not even exist for many language pairs (e. g., Basque and Maori).Page 6, “Empirical Evaluation”

- For this study we tagged 7 language pairs : Hindi-Hebrew,Page 7, “Empirical Evaluation”

- lingual corpora, which may scale to several language pairs in future (Haghighi et al., 2008).Page 8, “Related Work”

See all papers in Proc. ACL 2009 that mention language pairs.

See all papers in Proc. ACL that mention language pairs.

Back to top.

random sample

- Ideally, we would like to evaluate a random sample of the more than 1,000 languages represented in PANDICTIONARY.5 However, a high-quality evaluation of translation between two languages requires a person who is fluent in both languages.Page 6, “Empirical Evaluation”

- We provided our evaluators with a random sample of translations into their native language.Page 6, “Empirical Evaluation”

- To carry out this comparison, we randomly sampled 1,000 senses from English Wiktionary and ran the three algorithms over them.Page 6, “Empirical Evaluation”

- We randomly sampled 200 translations per language, which resulted in about 2,500 tags.Page 7, “Empirical Evaluation”

See all papers in Proc. ACL 2009 that mention random sample.

See all papers in Proc. ACL that mention random sample.

Back to top.