Article Structure

Abstract

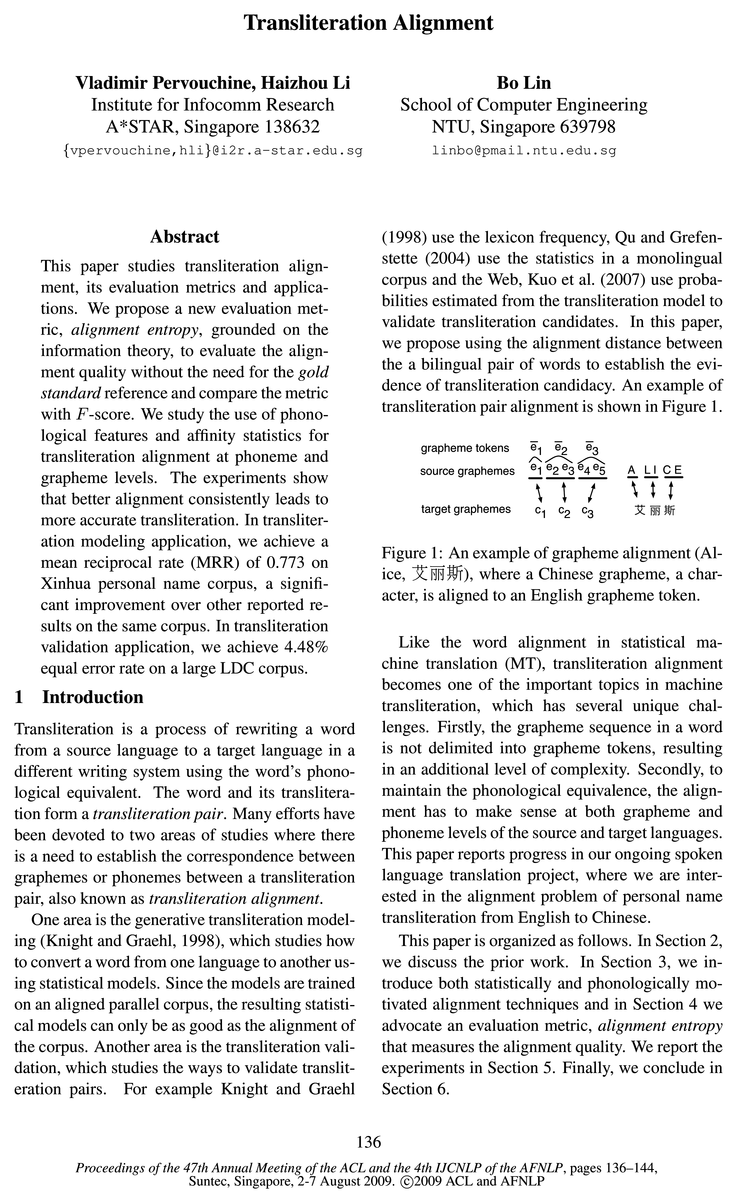

This paper studies transliteration alignment, its evaluation metrics and applications.

Introduction

Transliteration is a process of rewriting a word from a source language to a target language in a different writing system using the word’s phonological equivalent.

Related Work

A number of transliteration studies have touched on the alignment issue as a part of the transliteration modeling process, where alignment is needed at levels of graphemes and phonemes.

Transliteration alignment techniques

We assume in this paper that the source language is English and the target language is Chinese, although the technique is not restricted to English-Chinese alignment.

Transliteration alignment entropy

Having aligned the graphemes between two languages, we want to measure how good the alignment is.

Experiments

We use two transliteration corpora: Xinhua corpus (Xinhua News Agency, 1992) of 37,637 personal name pairs and LDC Chinese-English

Conclusions

We conclude that the alignment entropy is a reliable indicator of the alignment quality, as confirmed by our experiments on both Xinhua and LDC corpora.

Topics

F-score

- We propose a new evaluation metric, alignment entropy, grounded on the information theory, to evaluate the alignment quality without the need for the gold standard reference and compare the metric with F-score .Page 1, “Abstract”

- Denoting the number of cross-lingual mappings that are common in both A and Q as CA0, the number of cross-lingual mappings in A as CA and the number of cross-lingual mappings in Q as Cg, precision Pr is given as CAglCA, recall Be as GAO/CG and F-score as 2P7“ - Rc/(Pr + Re).Page 2, “Related Work”

- We expect and will show that this estimate is a good indicator of the alignment quality, and is as effective as the F-score , but without the need for a gold standard reference.Page 4, “Transliteration alignment entropy”

- Next we conduct three experiments to study 1) alignment entropy vs. F-score , 2) the impact of alignment quality on transliteration accuracy, and 3) how to validate transliteration using alignment metrics.Page 5, “Experiments”

- 5.1 Alignment entropy vs. F-scorePage 5, “Experiments”

- We have manually aligned a random set of 3,000 transliteration pairs from the Xinhua training set to serve as the gold standard, on which we calculate the precision, recall and F-score as well as alignment entropy for each alignment.Page 5, “Experiments”

- From the figures, we can observe a clear correlation between the alignment entropy and F-score , that validates the effectiveness of alignment entropy as an evaluation metric.Page 5, “Experiments”

- We also notice that the data points seem to form clusters inside which the value of F-score changes insignificantly as the alignment entropy changes.Page 5, “Experiments”

- F-score requires a large gold standard which is not always available.Page 5, “Experiments”

- F-score 0.94 0.92 0.90 0.88 0.86 0.84 0.82Page 6, “Experiments”

- F-score 0.94 0.92 0.90 0.88 0.86 0.84 0.82Page 6, “Experiments”

See all papers in Proc. ACL 2009 that mention F-score.

See all papers in Proc. ACL that mention F-score.

Back to top.

gold standard

- We propose a new evaluation metric, alignment entropy, grounded on the information theory, to evaluate the alignment quality without the need for the gold standard reference and compare the metric with F-score.Page 1, “Abstract”

- the gold standard , or the ground truth alignment Q, which is a manual alignment of the corpus or a part of it.Page 2, “Related Work”

- They indicate how close the alignment under investigation is to the gold standard alignment (Mihalcea and Pedersen, 2003).Page 2, “Related Work”

- Note that these metrics hinge on the availability of the gold standard , which is often not available.Page 2, “Related Work”

- One important property of this metric is that it does not require a gold standard alignment as a reference.Page 2, “Related Work”

- We expect and will show that this estimate is a good indicator of the alignment quality, and is as effective as the F-score, but without the need for a gold standard reference.Page 4, “Transliteration alignment entropy”

- We have manually aligned a random set of 3,000 transliteration pairs from the Xinhua training set to serve as the gold standard , on which we calculate the precision, recall and F-score as well as alignment entropy for each alignment.Page 5, “Experiments”

- Note that we don’t need the gold standard reference for reporting the alignment entropy.Page 5, “Experiments”

- Further investigation reveals that this could be due to the limited number of entries in the gold standard .Page 5, “Experiments”

- The 3,000 names in the gold standard are not enough to effectively reflect the change across different alignments.Page 5, “Experiments”

- F-score requires a large gold standard which is not always available.Page 5, “Experiments”

See all papers in Proc. ACL 2009 that mention gold standard.

See all papers in Proc. ACL that mention gold standard.

Back to top.

evaluation metric

- This paper studies transliteration alignment, its evaluation metrics and applications.Page 1, “Abstract”

- We propose a new evaluation metric , alignment entropy, grounded on the information theory, to evaluate the alignment quality without the need for the gold standard reference and compare the metric with F-score.Page 1, “Abstract”

- In Section 3, we introduce both statistically and phonologically motivated alignment techniques and in Section 4 we advocate an evaluation metric , alignment entropy that measures the alignment quality.Page 1, “Introduction”

- Although there are many studies of evaluation metrics of word alignment for MT (Lambert, 2008), there has been much less reported work on evaluation metrics of transliteration alignment.Page 2, “Related Work”

- Three evaluation metrics are used: precision, recall, and F -sc0re, the latter being a function of the former two.Page 2, “Related Work”

- In this paper we propose a novel evaluation metric for transliteration alignment grounded on the information theory.Page 2, “Related Work”

- From the figures, we can observe a clear correlation between the alignment entropy and F-score, that validates the effectiveness of alignment entropy as an evaluation metric .Page 5, “Experiments”

- This once again demonstrates the desired property of alignment entropy as an evaluation metric of alignment.Page 6, “Experiments”

See all papers in Proc. ACL 2009 that mention evaluation metric.

See all papers in Proc. ACL that mention evaluation metric.

Back to top.

feature vector

- Withgott and Chen (1993) define a feature vector of phonological descriptors for English sounds.Page 4, “Transliteration alignment techniques”

- We extend the idea by defining a 21-element binary feature vector for each English and Chinese phoneme.Page 4, “Transliteration alignment techniques”

- Each element of the feature vector represents presence or absence of a phonological descriptor that differentiates various kinds of phonemes, e.g.Page 4, “Transliteration alignment techniques”

- In this way, a phoneme is described by a feature vector .Page 4, “Transliteration alignment techniques”

- We express the similarity between two phonemes by the Hamming distance, also called the phonological distance, between the two feature vectors .Page 4, “Transliteration alignment techniques”

See all papers in Proc. ACL 2009 that mention feature vector.

See all papers in Proc. ACL that mention feature vector.

Back to top.

conditional probabilities

- From the affinity matrix conditional probabilities P(ei|cj) can be estimated asPage 3, “Transliteration alignment techniques”

- We estimate conditional probability of Chinese phoneme cpk, after observing English character 6, asPage 3, “Transliteration alignment techniques”

- The joint probability is expressed as a chain product of a series of conditional probabilities of token pairs P({ei}, {cj}) = P((ék,ck)|(ék_1,ck_1)), k = 1 .Page 6, “Experiments”

- The conditional probabilities for token pairs are estimated from the aligned training corpus.Page 6, “Experiments”

See all papers in Proc. ACL 2009 that mention conditional probabilities.

See all papers in Proc. ACL that mention conditional probabilities.

Back to top.

cross-lingual

- Denoting the number of cross-lingual mappings that are common in both A and Q as CA0, the number of cross-lingual mappings in A as CA and the number of cross-lingual mappings in Q as Cg, precision Pr is given as CAglCA, recall Be as GAO/CG and F-score as 2P7“ - Rc/(Pr + Re).Page 2, “Related Work”

- We expect a good alignment to have a sharp cross-lingual mapping with low alignment entropy.Page 4, “Transliteration alignment entropy”

- We believe that this property would be useful in transliteration extraction, cross-lingual information retrieval applications.Page 8, “Conclusions”

See all papers in Proc. ACL 2009 that mention cross-lingual.

See all papers in Proc. ACL that mention cross-lingual.

Back to top.

models trained

- Although the direct orthographic mapping approach advocates a direct transfer of grapheme at runtime, we still need to establish the grapheme correspondence at the model training stage, when phoneme level alignment can help.Page 2, “Related Work”

- Figure 6: Mean reciprocal ratio on Xinhua test set vs. alignment entropy and F-score for models trained with different affinity alignments.Page 7, “Experiments”

- Figure 7: Mean reciprocal ratio on Xinhua test set vs. alignment entropy and F-score for models trained with different phonological alignments.Page 7, “Experiments”

See all papers in Proc. ACL 2009 that mention models trained.

See all papers in Proc. ACL that mention models trained.

Back to top.

parallel corpus

- Since the models are trained on an aligned parallel corpus , the resulting statistical models can only be as good as the alignment of the corpus.Page 1, “Introduction”

- The alignment can be performed via the Expectation-Maximization (EM) by starting with a random initial alignment and calculating the afi‘inity matrix count(ei, cj) over the whole parallel corpus , where element (2', j) is the number of times character 6, was aligned to 03-.Page 3, “Transliteration alignment techniques”

- In contrast, because the alignment entropy doesn’t depend on the gold standard, one can easily report the alignment performance on any unaligned parallel corpus .Page 5, “Experiments”

See all papers in Proc. ACL 2009 that mention parallel corpus.

See all papers in Proc. ACL that mention parallel corpus.

Back to top.