Article Structure

Abstract

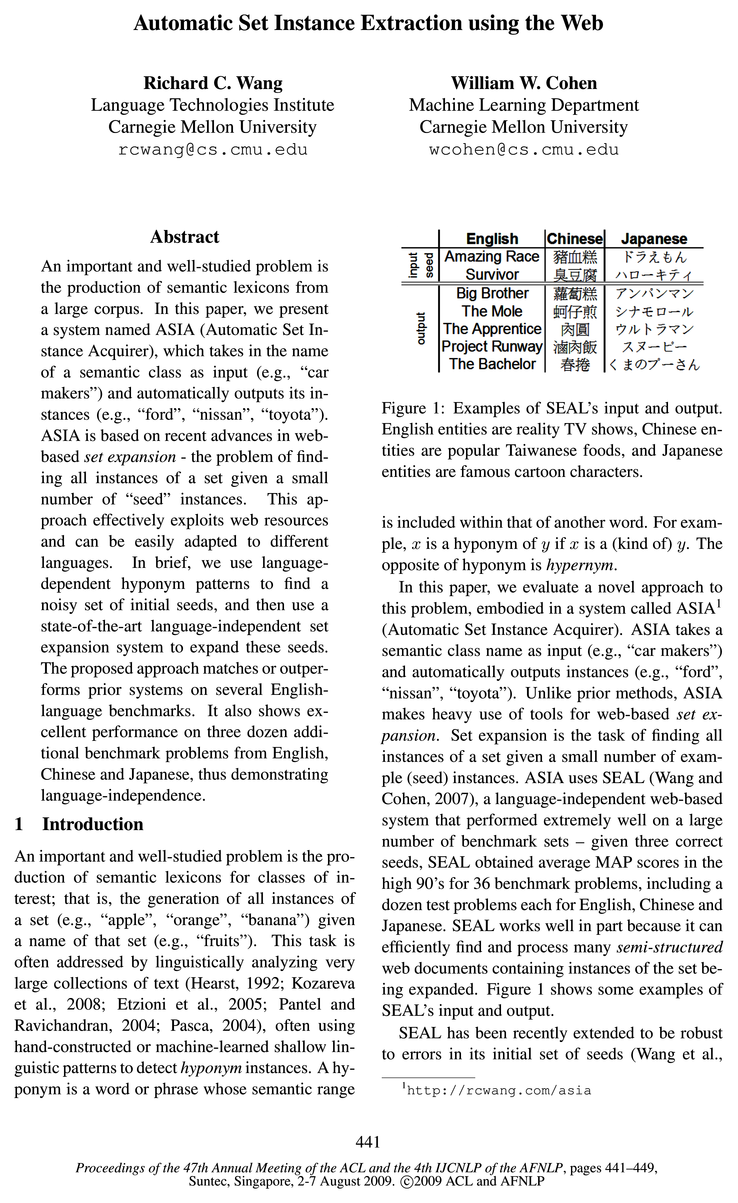

An important and well-studied problem is the production of semantic lexicons from a large corpus.

Introduction

An important and well-studied problem is the production of semantic lexicons for classes of interest; that is, the generation of all instances of a set (e.g., “apple”, “orange”, “banana”) given a name of that set (e.g., “fruits”).

Related Work

There has been a significant amount of research done in the area of semantic class learning (aka lexical acquisition, lexicon induction, hyponym extraction, or open-domain information extraction).

Proposed Approach

ASIA is composed of three main components: the Noisy Instance Provider, the Noisy Instance Expander, and the Bootstrapper.

Experiments

4.1 Datasets

Comparison to Prior Work

We compare ASIA’s performance to the results of three previously published works.

Conclusions

In this paper, we have shown that ASIA, a SEAL-based system, extracts set instances with high precision and recall in multiple languages given only the set name.

Acknowledgments

This work was supported by the Google Research Awards program.

Topics

WordNet

- We also compare ASIA on twelve additional benchmarks to the extended WordNet 2.1 produced by Snow et al. (2006), and show that for these twelve sets, ASIA produces more than five times as many set instances with much higher precision (98% versus 70%). Page 2, “Introduction”

- Snow et al. (2006) extended WordNet 2.1 by adding thousands of entries (synsets) at a relatively high precision. Page 7, “Comparison to Prior Work”

- They have made several versions of extended WordNet available. Page 7, “Comparison to Prior Work”

- For the experimental comparison, we focused on leaf semantic classes from the extended WordNet that have many hypernyms, so that a meaningful comparison could be made: specifically, we selected nouns that have at least three hypernyms, such that the hypernyms are the leaf nodes in the hypernym hierarchy of WordNet. Page 7, “Comparison to Prior Work”

- Preliminary experiments showed that (as in the experiments with Pasca’s classes above) ASIA did not always converge to the intended meaning; to avoid this problem, we instituted a second filter, and discarded ASIA’s results if the intersection of hypernyms from ASIA and WordNet constituted less than 50% of those in WordNet. Page 7, “Comparison to Prior Work”

- When we evaluated Snow’s extended WordNet, we assumed all instances that were in the original WordNet are correct. Pages 7-8, “Comparison to Prior Work”
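
The second filter quoted in the excerpts above (discard ASIA’s results when the items shared with WordNet make up less than 50% of the WordNet items) can be sketched in a few lines. This is only an illustrative sketch, not the authors’ implementation: the function name `passes_overlap_filter`, the `normalize` helper, and the case-insensitive matching are assumptions introduced here.

```python
def normalize(term: str) -> str:
    """Assumed normalization: trim whitespace and lowercase (not from the paper)."""
    return term.strip().lower()


def passes_overlap_filter(asia_items, wordnet_items, threshold=0.5):
    """Return True if ASIA's output should be kept.

    The result is kept when the items found by both ASIA and WordNet
    account for at least `threshold` of the WordNet items.
    """
    asia = {normalize(t) for t in asia_items}
    wordnet = {normalize(t) for t in wordnet_items}
    if not wordnet:
        # No reference items to compare against, so nothing can be validated.
        return False
    overlap = len(asia & wordnet) / len(wordnet)
    return overlap >= threshold


if __name__ == "__main__":
    # Hypothetical toy data, for illustration only.
    print(passes_overlap_filter(["Apple", "orange", "kiwi"],
                                ["apple", "orange", "banana"]))
```

The ratio is taken over the WordNet side only, matching the wording “less than 50% of those in WordNet”; extra items that ASIA finds beyond WordNet do not count against it.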

See all papers in Proc. ACL 2009 that mention WordNet.


hypernyms

- The opposite of hyponym is hypernym. Page 1, “Introduction”

- We addressed this problem by simply rerunning ASIA with a more specific class name (i.e., the first one returned); however, the result suggests that future work is needed to support automatic construction of hypernym hierarchy using semistructured web … Page 7, “Comparison to Prior Work”

- For the experimental comparison, we focused on leaf semantic classes from the extended WordNet that have many hypernyms, so that a meaningful comparison could be made: specifically, we selected nouns that have at least three hypernyms, such that the hypernyms are the leaf nodes in the hypernym hierarchy of WordNet. Page 7, “Comparison to Prior Work”

- Preliminary experiments showed that (as in the experiments with Pasca’s classes above) ASIA did not always converge to the intended meaning; to avoid this problem, we instituted a second filter, and discarded ASIA’s results if the intersection of hypernyms from ASIA and WordNet constituted less than 50% of those in WordNet. Page 7, “Comparison to Prior Work”
