Article Structure

Abstract

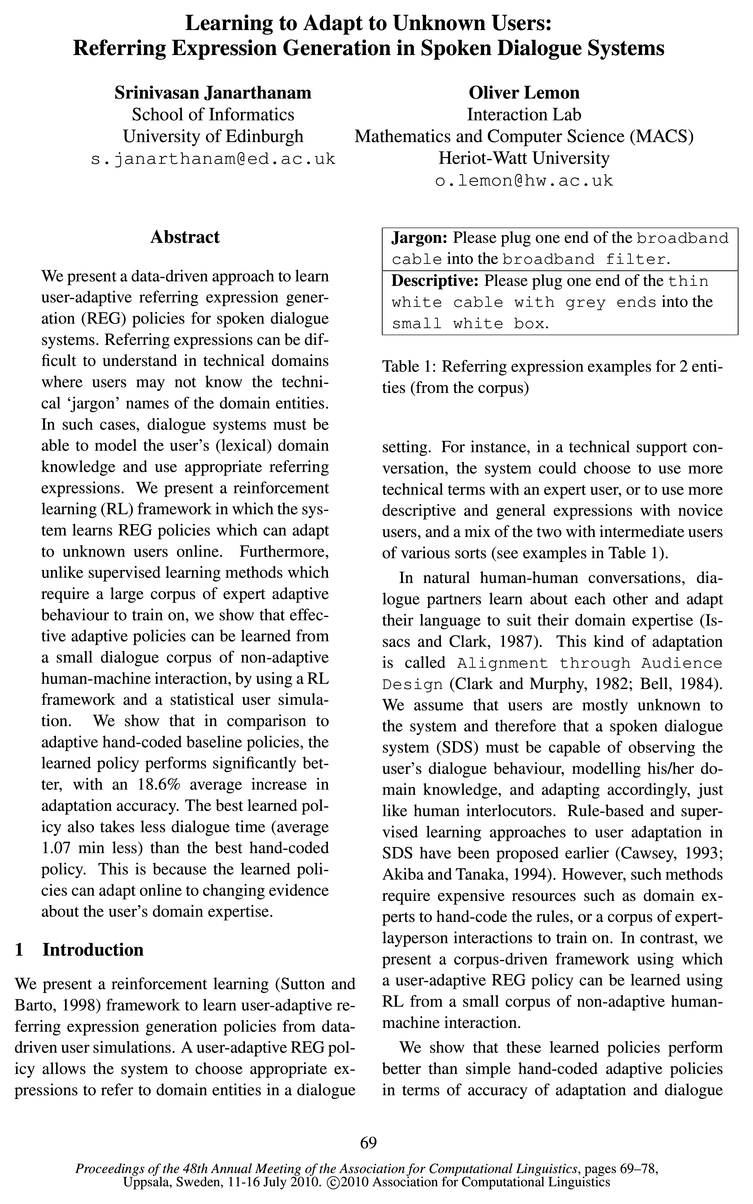

We present a data-driven approach to learn user-adaptive referring expression generation (REG) policies for spoken dialogue systems.

Introduction

We present a reinforcement learning (Sutton and Barto, 1998) framework to learn user-adaptive referring expression generation policies from data-driven user simulations.

Related work

There are several ways in which natural language generation (NLG) systems adapt to users.

The Wizard-of-Oz Corpus

We use a corpus of technical support dialogues collected from real human users using a Wizard-of-Oz method (Janarthanam and Lemon, 2009b).

The Dialogue System

In this section, we describe the different modules of the dialogue system.

User Simulations

In this section, we present user simulation models that simulate the dialogue behaviour of a real human user.

Training

The REG module was trained (operated in leam-ing mode) using the above simulations to learn REG policies that select referring expressions based on the user expertise in the domain.

Evaluation

In this section, we present the evaluation metrics used, the baseline policies that were hand-coded for comparison, and the results of evaluation.

Conclusion

In this study, we have shown that user-adaptive REG policies can be learned from a small corpus of nonadaptive dialogues between a dialogue system and users with different domain knowledge levels.

Topics

rule-based

- Rule-based and superVised learning approaches to user adaptation in SDS have been proposed earlier (Cawsey, 1993; Akiba and Tanaka, 1994).Page 1, “Introduction”

- We also compared the performance of policies learned using a hand-coded rule-based simulation and a data-driven statistical simulation and show that data-driven simulations produce better policies than rule-based ones.Page 2, “Introduction”

- Rule-based and supervised learning approaches have been proposed to learn and adapt during the conversation dynamically.Page 2, “Related work”

- It is also not clear how supervised and rule-based approaches choose between when to seek more information and when to adapt.Page 2, “Related work”

- Earlier, we reported a proof-of-concept work using a hand-coded rule-based user simulation (J anarthanam and Lemon, 2009c).Page 2, “Related work”

- We used two kinds of action selection models: corpus-driven statistical model and hand-coded rule-based model.Page 5, “User Simulations”

- 5.2 Rule-based action selection modelPage 5, “User Simulations”

- We also built a rule-based simulation using the above models but where some of the parameters were set manually instead of estimated from the data.Page 5, “User Simulations”

- The purpose of this simulation is to investigate how learning with a data-driven statistical simulation compares to learning with a simple hand-coded rule-based simulation.Page 5, “User Simulations”

- In order to compare the performance of the learned policy with hand-coded REG policies, three simple rule-based policies were built.Page 8, “Evaluation”

- The results show that using our RL framework, REG policies can be learned using data-driven simulations, and that such a policy can predict and adapt to a user’s knowledge pattern more accurately than policies trained using hand-coded rule-based simulations and hand-coded baseline policies.Page 9, “Evaluation”

See all papers in Proc. ACL 2010 that mention rule-based.

See all papers in Proc. ACL that mention rule-based.

Back to top.