Article Structure

Abstract

Tree-to-string translation rules are widely used in linguistically syntax-based statistical machine translation systems.

Introduction

Tree-to-string translation rules are generic and applicable to numerous linguistically syntax-based Statistical Machine Translation (SMT) systems, such as string-to-tree translation (Galley et al., 2004; Galley et al., 2006; Chiang et al., 2009), tree-to-string translation (Liu et al., 2006; Huang et al., 2006), and forest-to-string translation (Mi et al., 2008; Mi and Huang, 2008).

Related Work

2.1 Tree-to-string and string-to-tree translations

Fine-grained rule extraction

We now introduce the deep syntactic information generated by an HPSG parser and then describe our approaches for fine-grained tree-to-string rule extraction.

Experiments

4.1 Translation models

Conclusion

We have proposed approaches of using deep syntactic information for extracting fine-grained tree-to-string translation rules from aligned HPSG forest-string pairs.

Topics

fine-grained

- In this paper, we propose to use deep syntactic information for obtaining fine-grained translation rules.Page 1, “Abstract”

- A head-driven phrase structure grammar (HPSG) parser is used to obtain the deep syntactic information, which includes a fine-grained description of the syntactic property and a semantic representation of a sentence.Page 1, “Abstract”

- We extract fine-grained rules from aligned HPSG tree/forest-string pairs and use them in our tree-to-string and string-to-tree systems.Page 1, “Abstract”

- But, “VBN(killed)” is indeed separable into two fine-grained tree fragments of “VBN(killedzactive)” and “VBN(killed:passive)”1.Page 1, “Introduction”

- This motivates our proposal of using deep syntactic information to obtain a fine-grained translation rule set.Page 1, “Introduction”

- deep syntactic information of an English sentence, which includes a fine-grained description of the syntactic property and a semantic representation of the sentence.Page 2, “Introduction”

- We extract fine-grained translation rules from aligned HPSG tree/forest-string pairs.Page 2, “Introduction”

- The HPSG grammar and our proposal of fine-grained rule extraction algorithms are described in Section 3.Page 2, “Introduction”

- Section 4 gives the experiments for applying fine-grained translation rules to large-scale J apanese-English translation tasks.Page 2, “Introduction”

- Before describing our approaches of applying deep syntactic information yielded by an HPSG parser for fine-grained rule extraction, we would like to briefly review what kinds of deep syntactic information have been employed for SMT.Page 4, “Related Work”

- fine-grained tree-to-string rule extraction, rather than string-to-string translation (Hassan et al., 2007; Birch et al., 2007).Page 4, “Related Work”

See all papers in Proc. ACL 2010 that mention fine-grained.

See all papers in Proc. ACL that mention fine-grained.

Back to top.

parse tree

- Dealing with the parse error problem and rule sparseness problem, Mi and Huang (2008) replaced the l-best parse tree with a packed forest which compactly encodes exponentially many parses for tree-to-string rule extraction.Page 1, “Introduction”

- fi] is a sentence of a foreign language other than English, E5 is a l-best parse tree of an English sentence E = e{, and A = {(j, is an alignment between the words in F and E.Page 2, “Related Work”

- Considering the parse error problem in the l-best or k-best parse trees , Mi and Huang (2008) extracted tree-to-string translation rules from aligned packed forest-string pairs.Page 3, “Related Work”

- In an HPSG parse tree , these lexical syntactic descriptions are included in the LEXENTRY feature (refer to Table 2) of a lexical node (Matsuzaki et al., 2007).Page 4, “Related Work”

- Considering that a parse tree is a trivial packed forest, we only use the term forest to expand our discussion, hereafter.Page 5, “Fine-grained rule extraction”

See all papers in Proc. ACL 2010 that mention parse tree.

See all papers in Proc. ACL that mention parse tree.

Back to top.

semantic representation

- A head-driven phrase structure grammar (HPSG) parser is used to obtain the deep syntactic information, which includes a fine-grained description of the syntactic property and a semantic representation of a sentence.Page 1, “Abstract”

- deep syntactic information of an English sentence, which includes a fine-grained description of the syntactic property and a semantic representation of the sentence.Page 2, “Introduction”

- The Logon project2 (Oepen et al., 2007) for Norwegian-English translation integrates in-depth grammatical analysis of Norwegian (using lexical functional grammar, similar to (Riezler and Maxwell, 2006)) with semantic representations in the minimal recursion semantics framework, and fully grammar-based generation for English using HPSG.Page 4, “Related Work”

- The semantic representation of the new phrase is calculated at the same time.Page 4, “Fine-grained rule extraction”

- Second, we can identify sub-trees in a parse tree/forest that correspond to basic units of the semantics, namely sub-trees covering a predicate and its arguments, by using the semantic representation given in the signs.Page 5, “Fine-grained rule extraction”

See all papers in Proc. ACL 2010 that mention semantic representation.

See all papers in Proc. ACL that mention semantic representation.

Back to top.

significant improvements

- By introducing supertags into the target language side, i.e., the target language model and the target side of the phrase table, significant improvement was achieved for Arabic-to-English translation.Page 4, “Related Work”

- (2007) also reported a significant improvement for Dutch-English translation by applying CCG supertags at a word level to a factorized SMT system (Koehn et al., 2007).Page 4, “Related Work”

- Comparing the BLEU-4 scores of PTT+C'3;g and PTT+03, we gained 0.56 (t2s) and 0.57 (s2t) BLEU-4 points which are significant improvements (p < 0.05).Page 8, “Experiments”

- Furthermore, we gained 0.50 (t2s) and 0.62 (s2t) BLEU-4 points from PTT+FS to PTT+F, which are also significant improvements (p < 0.05).Page 8, “Experiments”

- Extensive experiments on large-scale bidirectional Japanese-English translations testified the significant improvements on BLEU score.Page 9, “Conclusion”

See all papers in Proc. ACL 2010 that mention significant improvements.

See all papers in Proc. ACL that mention significant improvements.

Back to top.

translation probabilities

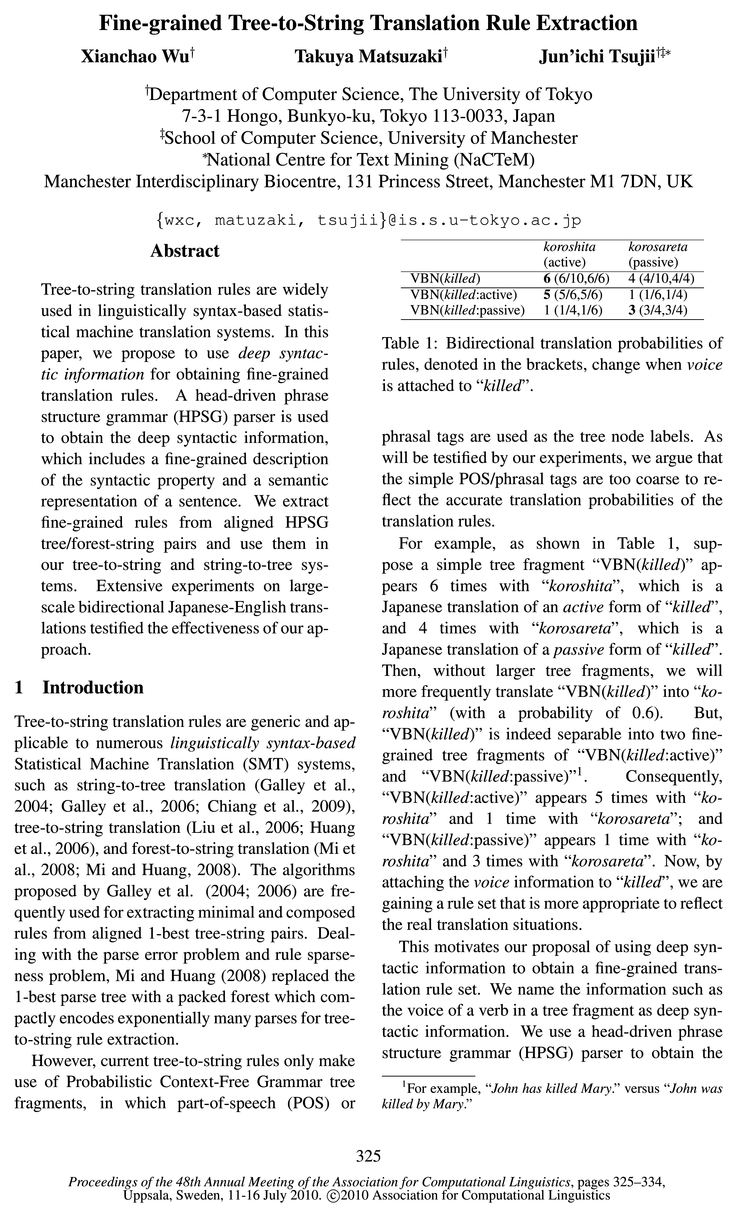

- Table l: Bidirectional translation probabilities of rules, denoted in the brackets, change when voice is attached to “killed”.Page 1, “Introduction”

- As will be testified by our experiments, we argue that the simple POS/phrasal tags are too coarse to reflect the accurate translation probabilities of the translation rules.Page 1, “Introduction”

- (2006) constructed a derivation-forest, in which composed rules were generated, unaligned words of foreign language were consistently attached, and the translation probabilities of rules were estimated by using Expectation-Maximization (EM) (Dempster et al., 1977) training.Page 3, “Related Work”

- Maximum likelihood estimation is used to calculate the translation probabilities of each rule.Page 6, “Fine-grained rule extraction”

- Here, s/t represent the source/target part of a rule in PTT, TRS, or PRS; and are translation probabilities and lexical weights of rules from PTT, TRS, and PRS.Page 7, “Experiments”

See all papers in Proc. ACL 2010 that mention translation probabilities.

See all papers in Proc. ACL that mention translation probabilities.

Back to top.

language model

- By introducing supertags into the target language side, i.e., the target language model and the target side of the phrase table, significant improvement was achieved for Arabic-to-English translation.Page 4, “Related Work”

- Here, the first item is the language model (LM) probability where 7'(d) is the target string of derivation d; the second item is the translation length penalty; and the third item is the translation score, which is decomposed into a product of feature values of rules:Page 7, “Experiments”

- SRI Language Modeling Toolkit (Stolcke, 2002) was employed to train 5-gram English and Japanese LMs on the training set.Page 8, “Experiments”

See all papers in Proc. ACL 2010 that mention language model.

See all papers in Proc. ACL that mention language model.

Back to top.

Lexicalized

- Two kinds of supertags, from Lexicalized Tree-Adjoining Grammar and Combinatory Categorial Grammar (CCG), have been used as lexical syntactic descriptions (Hassan et al., 2007) for phrase-based SMT (Koehn et al., 2007).Page 4, “Related Work”

- Head-driven phrase structure grammar (HPSG) is a lexicalist grammar framework.Page 4, “Fine-grained rule extraction”

- Based on TC, we can easily build a tree-to-string translation rule by further completing the right-hand-side string by sorting the spans of Tc’s leaf nodes, lexicalizing the terminal node’s span(s), and assigning a variable to each nonterminal node’s span.Page 6, “Fine-grained rule extraction”

See all papers in Proc. ACL 2010 that mention Lexicalized.

See all papers in Proc. ACL that mention Lexicalized.

Back to top.

translation models

- 4.1 Translation modelsPage 6, “Experiments”

- In our translation models , we have made use of three kinds of translation rule sets which are trained separately.Page 7, “Experiments”

- These tree-to-tree rules are applicable for forest-to-tree translation models (Liu et al., 2009a).Page 9, “Conclusion”

See all papers in Proc. ACL 2010 that mention translation models.

See all papers in Proc. ACL that mention translation models.

Back to top.