Article Structure

Abstract

Comprehending action preconditions and effects is an essential step in modeling the dynamics of the world.

Introduction

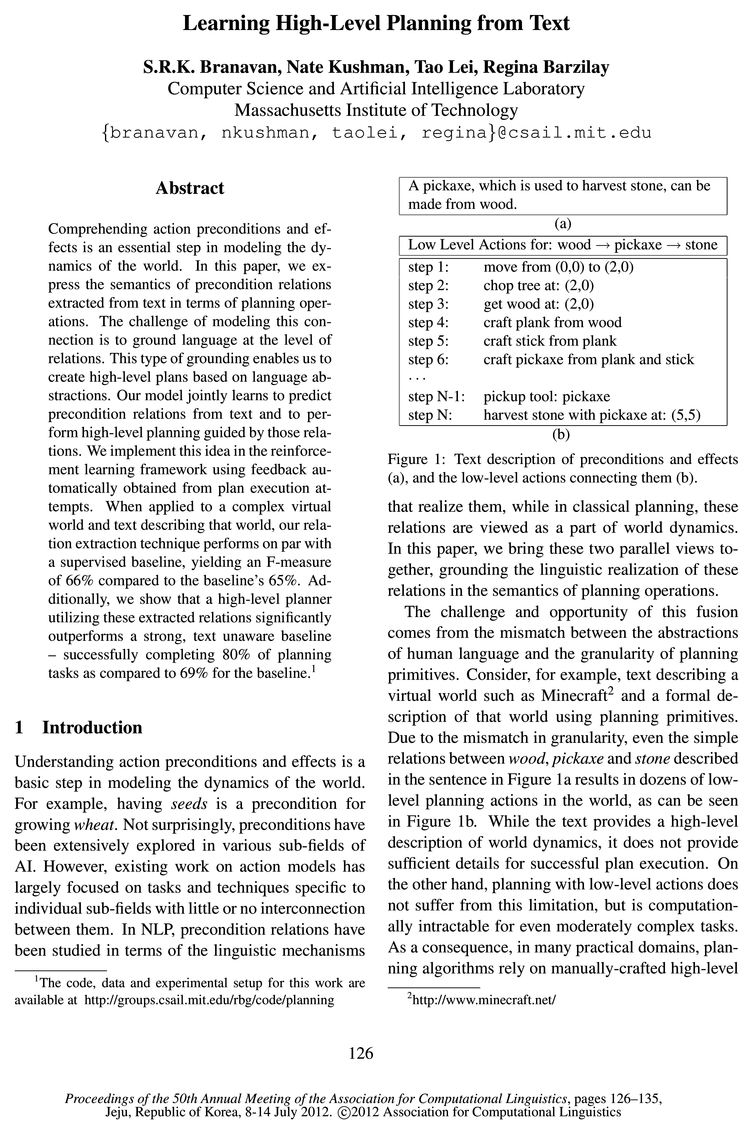

Understanding action preconditions and effects is a basic step in modeling the dynamics of the world.

Related Work

Extracting Event Semantics from Text The task of extracting preconditions and effects has previously been addressed in the context of lexical semantics (Sil et al., 2010; Sil and Yates, 2011).

Problem Formulation

Our task is twofold.

Model

The key idea behind our model is to leverage textual descriptions of preconditions and effects to guide the construction of high level plans.

In our dataset only 11% of Candidate Relations are valid.

Input: A document d, Set of planning tasks G, Set of candidate precondition relations Call, Reward function 7“(), Number of iterations T

Experimental Setup

Datasets As the text description of our virtual world, we use documents from the Minecraft Wiki,7 the most popular information source about the game.

Results

Relation Extraction Figure 5 shows the performance of our method on identifying preconditions in text.

Conclusions

In this paper, we presented a novel technique for inducing precondition relations from text by grounding them in the semantics of planning operations.

Topics

relation extraction

- In this paper, we express the semantics of precondition relations extracted from text in terms of planning operations.Page 1, “Abstract”

- When applied to a complex virtual world and text describing that world, our relation extraction technique performs on par with a supervised baseline, yielding an F-measure of 66% compared to the baseline’s 65%.Page 1, “Abstract”

- The central idea of our work is to express the semantics of precondition relations extracted from text in terms of planning operations.Page 2, “Introduction”

- We build on the intuition that the validity of precondition relations extracted from text can be informed by the execution of a low-level planner.3 This feedback can enable us to learn these relations without annotations.Page 2, “Introduction”

- Our results demonstrate the strength of our relation extraction technique — while using planning feedback as its only source of supervision, it achieves a precondition relation extraction accuracy on par with that of a supervised SVM baseline.Page 2, “Introduction”

- Evaluation Metrics We use our manual annotations to evaluate the type-level accuracy of relation extraction .Page 6, “Experimental Setup”

- Baselines To evaluate the performance of our relation extraction , we compare against an SVM classifier8 trained on the Gold Relations.Page 6, “Experimental Setup”

- Relation Extraction Figure 5 shows the performance of our method on identifying preconditions in text.Page 7, “Results”

- While using planning feedback as its only source of supervision, our method for relation extraction achieves a performance on par with that of a supervised baseline.Page 8, “Conclusions”

See all papers in Proc. ACL 2012 that mention relation extraction.

See all papers in Proc. ACL that mention relation extraction.

Back to top.

SVM

- Our results demonstrate the strength of our relation extraction technique — while using planning feedback as its only source of supervision, it achieves a precondition relation extraction accuracy on par with that of a supervised SVM baseline.Page 2, “Introduction”

- Baselines To evaluate the performance of our relation extraction, we compare against an SVM classifier8 trained on the Gold Relations.Page 6, “Experimental Setup”

- We test the SVM baseline in a leave-one-out fashion.Page 6, “Experimental Setup”

- Model F-score 0.4 _ ---- -- SVM F-score ---------- -- All-text F-scorePage 7, “Experimental Setup”

- Figure 5: The performance of our model and a supervised SVM baseline on the precondition prediction task.Page 7, “Experimental Setup”

- We also show the performance of the supervised SVM baseline.Page 7, “Results”

- Feature Analysis Figure 7 shows the top five positive features for our model and the SVM baseline.Page 8, “Results”

- Figure 7: The top five positive features on words and dependency types learned by our model (above) and by SVM (below) for precondition prediction.Page 8, “Results”

See all papers in Proc. ACL 2012 that mention SVM.

See all papers in Proc. ACL that mention SVM.

Back to top.

model parameters

- Update the model parameters , using the low-level planner’s success or failure as the source of supervision.Page 4, “Model”

- where 6C is the vector of model parameters .Page 4, “Model”

- Initialization: Model parameters 0,, = 0 and 00 = 0.Page 4, “In our dataset only 11% of Candidate Relations are valid.”

- As before, 633 is the vector of model parameters , and gbx is the feature function.Page 5, “In our dataset only 11% of Candidate Relations are valid.”

- Therefore, during learning, we need to find the model parameters that maximize expected future reward (Sutton and Barto, 1998).Page 5, “In our dataset only 11% of Candidate Relations are valid.”

See all papers in Proc. ACL 2012 that mention model parameters.

See all papers in Proc. ACL that mention model parameters.

Back to top.

dependency parse

- grounding, the sentence 13k, and a given dependency parse qk of the sentence.Page 4, “In our dataset only 11% of Candidate Relations are valid.”

- The text component features gbc are computed over sentences and their dependency parses .Page 6, “In our dataset only 11% of Candidate Relations are valid.”

- The Stanford parser (de Marneffe et al., 2006) was used to generate the dependency parse information for each sentence.Page 6, “In our dataset only 11% of Candidate Relations are valid.”

See all papers in Proc. ACL 2012 that mention dependency parse.

See all papers in Proc. ACL that mention dependency parse.

Back to top.

F-score

- Specifically, it yields an F-score of 66% compared to the 65% of the baseline.Page 2, “Introduction”

- Model F-score 0.4 _ ---- -- SVM F-score ---------- -- All-text F-scorePage 7, “Experimental Setup”

- Precondition prediction F-scorePage 7, “Experimental Setup”

See all papers in Proc. ACL 2012 that mention F-score.

See all papers in Proc. ACL that mention F-score.

Back to top.

manual annotations

- We also manually annotated the relations expressed in the text, identifying 94 of the Candidate Relations as valid.Page 6, “Experimental Setup”

- Evaluation Metrics We use our manual annotations to evaluate the type-level accuracy of relation extraction.Page 6, “Experimental Setup”

- The first, Manual Text, is a variant of our model which directly uses the links derived from manual annotations of preconditions in text.Page 7, “Experimental Setup”

See all papers in Proc. ACL 2012 that mention manual annotations.

See all papers in Proc. ACL that mention manual annotations.

Back to top.

significantly outperforms

- Additionally, we show that a high—level planner utilizing these extracted relations significantly outperforms a strong, text unaware baseline — successfully completing 80% of planning tasks as compared to 69% for the baseline.1Page 1, “Abstract”

- Our results show that our text-driven high-level planner significantly outperforms all baselines in terms of completed planning tasks — it successfully solves 80% as compared to 41% for the Metric-FF planner and 69% for the text unaware variant of our model.Page 2, “Introduction”

- We show that building high-level plans in this manner significantly outperforms traditional techniques in terms of task completion.Page 8, “Conclusions”

See all papers in Proc. ACL 2012 that mention significantly outperforms.

See all papers in Proc. ACL that mention significantly outperforms.

Back to top.