Article Structure

Abstract

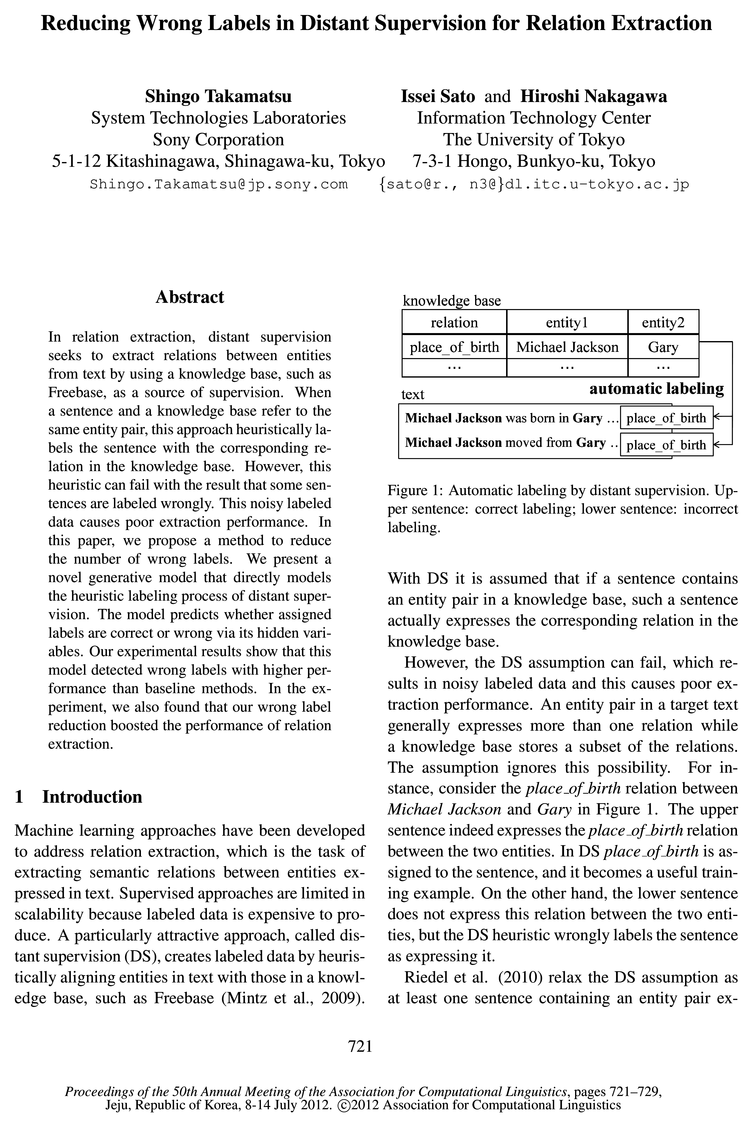

In relation extraction, distant supervision seeks to extract relations between entities from text by using a knowledge base, such as Freebase, as a source of supervision.

Introduction

Machine learning approaches have been developed to address relation extraction, which is the task of extracting semantic relations between entities expressed in text.

Related Work

The increasingly popular approach, called distant supervision (DS), or weak supervision, utilizes a knowledge base to heuristically label a corpus (Wu and Weld, 2007; Bellare and McCallum, 2007; Pal

Knowledge-based Distant Supervision

In this section, we describe DS for relation extraction.

Wrong Label Reduction

We define a pattern as the entity types of an entity pair² as well as the sequence of words on the path of the dependency parse tree from the first entity to the second one.

Generative Model

We now describe our generative model, which predicts whether a pattern expresses relation r or not via hidden variables.

Learning

We learn parameters a_r, θ_r, and d, and infer the hidden variables Z by maximizing the log likelihood given X_r.

Experiments

We performed two sets of experiments.

Conclusion

We proposed a method that reduces the number of wrong labels created with the DS assumption, which is widely applied.

Topics

knowledge base

- In relation extraction, distant supervision seeks to extract relations between entities from text by using a knowledge base, such as Freebase, as a source of supervision. (Page 1, “Abstract”)

- When a sentence and a knowledge base refer to the same entity pair, this approach heuristically labels the sentence with the corresponding relation in the knowledge base. (Page 1, “Abstract”)

- A particularly attractive approach, called distant supervision (DS), creates labeled data by heuristically aligning entities in text with those in a knowledge base, such as Freebase (Mintz et al., 2009). (Page 1, “Introduction”)

- With DS it is assumed that if a sentence contains an entity pair in a knowledge base, the sentence actually expresses the corresponding relation in the knowledge base. (Page 1, “Introduction”)

- An entity pair in a target text generally expresses more than one relation, while a knowledge base stores only a subset of those relations. (Page 1, “Introduction”)

- … expressing the corresponding relation in the knowledge base. (Page 2, “Introduction”)

- We applied our method to Wikipedia articles using Freebase as a knowledge base and found that (i) our model identified patterns expressing a given relation more accurately than baseline methods and (ii) our method led to better extraction performance than the original DS (Mintz et al., 2009) and MultiR (Hoffmann et al., 2011), a state-of-the-art multi-instance learning system for relation extraction (see Section 7). (Page 2, “Introduction”)

- The increasingly popular approach, called distant supervision (DS), or weak supervision, utilizes a knowledge base to heuristically label a corpus (Wu and Weld, 2007; Bellare and McCallum, 2007; Pal… (Page 2, “Related Work”)

- … (2009), who used Freebase as a knowledge base under the DS assumption and trained relation extractors on Wikipedia. (Page 2, “Related Work”)

- DS uses a knowledge base to create labeled data for relation extraction by heuristically matching entity pairs. (Page 3, “Knowledge-based Distant Supervision”)
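The DS labeling heuristic described in these excerpts can be sketched in Python as follows. This is a minimal illustration under our own assumptions, not the authors' implementation: `RelationInstance`, `index_kb`, and `ds_label` are hypothetical names, and each sentence is assumed to arrive already paired with its two entity mentions.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set, Tuple


@dataclass(frozen=True)
class RelationInstance:
    """A relation name plus an ordered entity pair, e.g. place_of_birth(Michael Jackson, Gary)."""
    relation: str
    e1: str
    e2: str


def index_kb(kb: Iterable[RelationInstance]) -> Dict[Tuple[str, str], Set[str]]:
    """Map each entity pair to every relation the knowledge base stores for it."""
    index: Dict[Tuple[str, str], Set[str]] = {}
    for inst in kb:
        index.setdefault((inst.e1, inst.e2), set()).add(inst.relation)
    return index


def ds_label(
    sentences: Iterable[Tuple[str, str, str]], kb: Iterable[RelationInstance]
) -> List[Tuple[str, Tuple[str, str], str]]:
    """Apply the DS assumption: a sentence mentioning a KB entity pair is stored as a
    positive example for every relation the KB holds for that pair."""
    index = index_kb(kb)
    positives = []
    for sentence, e1, e2 in sentences:
        for relation in sorted(index.get((e1, e2), set())):
            positives.append((relation, (e1, e2), sentence))
    return positives
```

With a knowledge base that holds only place_of_birth(Michael Jackson, Gary), both a (hypothetical) sentence stating the birthplace and one that merely mentions the two entities receive the place_of_birth label. The second label is exactly the kind of wrong label the DS assumption produces.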

See all papers in Proc. ACL 2012 that mention knowledge base.


relation extraction

- In relation extraction, distant supervision seeks to extract relations between entities from text by using a knowledge base, such as Freebase, as a source of supervision. (Page 1, “Abstract”)

- In the experiment, we also found that our wrong label reduction boosted the performance of relation extraction. (Page 1, “Abstract”)

- Machine learning approaches have been developed to address relation extraction, which is the task of extracting semantic relations between entities expressed in text. (Page 1, “Introduction”)

- We applied our method to Wikipedia articles using Freebase as a knowledge base and found that (i) our model identified patterns expressing a given relation more accurately than baseline methods and (ii) our method led to better extraction performance than the original DS (Mintz et al., 2009) and MultiR (Hoffmann et al., 2011), a state-of-the-art multi-instance learning system for relation extraction (see Section 7). (Page 2, “Introduction”)

- … (2009), who used Freebase as a knowledge base under the DS assumption and trained relation extractors on Wikipedia. (Page 2, “Related Work”)

- Bootstrapping for relation extraction (Riloff and Jones, 1999; Pantel and Pennacchiotti, 2006; Carlson et al., 2010) is related to our method. (Page 2, “Related Work”)

- In this section, we describe DS for relation extraction. (Page 2, “Knowledge-based Distant Supervision”)

- Relation extraction seeks to extract relation instances from text. (Page 2, “Knowledge-based Distant Supervision”)

- DS uses a knowledge base to create labeled data for relation extraction by heuristically matching entity pairs. (Page 3, “Knowledge-based Distant Supervision”)

- For relation extraction, we train a classifier for entity pairs using the resulting labeled data. (Page 3, “Wrong Label Reduction”)

- Experiment 2 aimed to evaluate how much our wrong label reduction (Section 4) improved the performance of relation extraction. (Page 6, “Experiments”)


generative model

- We present a novel generative model that directly models the heuristic labeling process of distant supervision. (Page 1, “Abstract”)

- To make the pattern prediction, we propose a generative model that directly models the process of automatic labeling in DS. (Page 2, “Introduction”)

- Our variational inference for the generative model lets us automatically calibrate, for each relation, the parameters to which performance is sensitive (see Section 6). (Page 2, “Introduction”)

- In our approach, parameters are calibrated for each relation by maximizing the likelihood of our generative model. (Page 2, “Related Work”)

- In the first step, we introduce the novel generative model that directly models DS’s labeling process and make the prediction (see Section 5). (Page 3, “Wrong Label Reduction”)

- We now describe our generative model, which predicts whether a pattern expresses relation r or not via hidden variables. (Page 3, “Generative Model”)

- Experiment 1 aimed to evaluate the performance of our generative model itself, which predicts whether a pattern expresses a relation, given a labeled corpus created with the DS assumption. (Page 6, “Experiments”)

- In our method, we trained a classifier with a labeled corpus cleaned by Algorithm 1 using the negative pattern list predicted by the generative model. (Page 6, “Experiments”)

- While our generative model does not treat unlabeled examples as negative ones when detecting wrong labels, classifier-based approaches, including MultiR, do, and therefore suffer from false negatives. (Page 8, “Experiments”)

- Our generative model directly models the labeling process of DS and predicts patterns that are wrongly labeled with a relation. (Page 8, “Conclusion”)
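Algorithm 1 is not reproduced on this page, so the following is only a hedged sketch of the cleaning step as the excerpts describe it: labels whose (relation, pattern) pair appears on the negative pattern list predicted by the generative model are dropped before the classifier is trained. The tuple layout, the string form of patterns, and the name `remove_wrong_labels` are all hypothetical.

```python
from typing import Iterable, List, Set, Tuple

# Hypothetical layout of one DS-labeled example: (relation, pattern, entity pair, sentence).
Example = Tuple[str, str, Tuple[str, str], str]


def remove_wrong_labels(
    labeled: Iterable[Example], negative_patterns: Set[Tuple[str, str]]
) -> List[Example]:
    """Keep only the labels whose (relation, pattern) pair is not predicted as negative."""
    return [ex for ex in labeled if (ex[0], ex[1]) not in negative_patterns]
```

Keying the negative list by (relation, pattern) rather than by pattern alone reflects that the model works per relation r: a pattern judged not to express one relation may still be a good indicator of another.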


relation instances

- A relation instance is a tuple consisting of two entities and relation r. (Page 2, “Knowledge-based Distant Supervision”)

- For example, place_of_birth(Michael Jackson, Gary) in Figure 1 is a relation instance. (Page 2, “Knowledge-based Distant Supervision”)

- Relation extraction seeks to extract relation instances from text. (Page 2, “Knowledge-based Distant Supervision”)

- We extract a relation instance from a… (Page 2, “Knowledge-based Distant Supervision”)

- A knowledge base is a set of relation instances about predefined relations. (Page 3, “Knowledge-based Distant Supervision”)

- Then, for each entity pair, we try to retrieve the relation instances about the entity pair from the knowledge base. (Page 3, “Knowledge-based Distant Supervision”)

- If such a relation instance is found, the triple of its relation, the entity pair, and the sentence is stored as a positive example. (Page 3, “Knowledge-based Distant Supervision”)

- In the held-out evaluation, relation instances discovered from test articles were automatically compared with those in Freebase. (Page 8, “Experiments”)

- This let us calculate the precision of each method for the best n relation instances. (Page 8, “Experiments”)

- For manual evaluation, we picked the 50 top-ranked relation instances for each of the 15 most frequent relations. (Page 8, “Experiments”)
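The held-out metric mentioned above, precision over the best n relation instances, can be sketched as below. This assumes, hypothetically, that extracted instances are already sorted by classifier confidence and that the held-out Freebase facts are available as a set of (relation, entity1, entity2) tuples; `precision_at_n` is an illustrative name.

```python
from typing import List, Set, Tuple

Instance = Tuple[str, str, str]  # (relation, entity1, entity2)


def precision_at_n(ranked: List[Instance], gold: Set[Instance], n: int) -> float:
    """Precision of the n highest-ranked extracted instances, counting an instance
    as correct only if the held-out knowledge base contains it."""
    top = ranked[:n]
    if not top:
        return 0.0
    hits = sum(1 for inst in top if inst in gold)
    return hits / len(top)
```

Because the knowledge base is incomplete, a true instance that happens to be missing from it is scored as wrong, so held-out precision tends to underestimate true precision.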


entity types

- … (2011) applied a rule-based method to the problem by using popular entity types and keywords for each relation. (Page 2, “Related Work”)

- We define a pattern as the entity types of an entity pair² as well as the sequence of words on the path of the dependency parse tree from the first entity to the second one. (Page 3, “Wrong Label Reduction”)

- Footnote 2: If we use a standard named entity tagger, the entity types are Person, Location, and Organization. (Page 3, “Wrong Label Reduction”)

- Documents: 1,303,000; entity pairs: 2,017,000 (matched to Freebase: 129,000; with entity types: 913,000); frequent patterns: 3,084; relations: 24. (Page 6, “Experiments”)

- In Experiment 1, since we needed entity types for patterns, we restricted ourselves to entities matched with Freebase, which also provides entity types for entities. (Page 6, “Experiments”)

- Entity types are omitted in patterns. (Page 7, “Experiments”)

- (Several entities in the examples were matched to Freebase and had Freebase entity types.) (Page 7, “Experiments”)

- … used not only entity pairs matched to Freebase but also ones not matched to Freebase (i.e., entity pairs that do not have entity types). (Page 8, “Experiments”)

- We used syntactic features (i.e., features obtained from the dependency parse tree of a sentence), lexical features, and entity types, which essentially correspond to those developed by Mintz et al. (Page 8, “Experiments”)
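The pattern definition above (entity types of the pair plus the words on the dependency path between them) can be represented as a hashable key, which also makes counting frequent patterns straightforward. This is a sketch under assumptions: the tuple representation, the function names, and the `min_count` cutoff are hypothetical, since the frequency threshold behind the 3,084 frequent patterns is not given on this page.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple

# A pattern: (type of first entity, words on the dependency path, type of second entity).
Pattern = Tuple[str, Tuple[str, ...], str]


def make_pattern(type1: str, path_words: List[str], type2: str) -> Pattern:
    """Combine the pair's entity types with the dependency-path words into a hashable key."""
    return (type1, tuple(path_words), type2)


def frequent_patterns(patterns: Iterable[Pattern], min_count: int) -> Set[Pattern]:
    """Keep patterns seen at least min_count times (the cutoff is a free parameter here)."""
    counts = Counter(patterns)
    return {p for p, c in counts.items() if c >= min_count}
```

Using a tuple instead of a joined string keeps the entity types and path words separable, so a variant that omits entity types (as in some experiments above) only needs to drop the first and last fields.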


labeled data

- This noisy labeled data causes poor extraction performance. (Page 1, “Abstract”)

- Supervised approaches are limited in scalability because labeled data is expensive to produce. (Page 1, “Introduction”)

- A particularly attractive approach, called distant supervision (DS), creates labeled data by heuristically aligning entities in text with those in a knowledge base, such as Freebase (Mintz et al., 2009). (Page 1, “Introduction”)

- However, the DS assumption can fail, producing noisy labeled data that causes poor extraction performance. (Page 1, “Introduction”)

- Previous work (Hoffmann et al., 2010; Yao et al., 2010) has pointed out that the DS assumption generates noisy labeled data, but did not directly address the problem. (Page 2, “Related Work”)

- DS uses a knowledge base to create labeled data for relation extraction by heuristically matching entity pairs. (Page 3, “Knowledge-based Distant Supervision”)

- Labeled data generated by DS: LD (Page 3, “Wrong Label Reduction”)

- For relation extraction, we train a classifier for entity pairs using the resulting labeled data. (Page 3, “Wrong Label Reduction”)

- We compared the following methods: logistic regression with the labeled data cleaned by the proposed method (PROP), logistic regression with the standard DS labeled data (LR), and MultiR (Hoffmann et al., 2011), a state-of-the-art multi-instance learning system.⁷ For logistic regression, when more than one relation is assigned to a sentence, we simply copied the feature vector and created a training example for each relation. (Page 8, “Experiments”)
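The multi-label handling described in the last excerpt (copy the feature vector and create one training example per assigned relation) can be sketched as follows. Feature vectors are modeled as plain dicts purely for illustration; the feature names and the function `expand_multilabel` are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Features = Dict[str, float]


def expand_multilabel(
    examples: Sequence[Tuple[Features, Sequence[str]]]
) -> List[Tuple[Features, str]]:
    """Turn each (features, relations) pair into one single-label example per relation,
    giving every copy its own feature dict."""
    expanded: List[Tuple[Features, str]] = []
    for features, relations in examples:
        for relation in relations:
            expanded.append((dict(features), relation))
    return expanded
```

The expanded list can then be fed to any standard multiclass logistic regression trainer, since every example now carries exactly one label.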


hyperparameter

- In this section, we consider relation r, since parameters are conditionally independent once relation r and the hyperparameter are given. (Page 3, “Generative Model”)

- A is the hyperparameter and m_st is a constant. (Page 4, “Generative Model”)

- where 0 ≤ A ≤ 1 is the hyperparameter that controls how strongly b_rs is affected by the main labeling process explained in the previous subsection. (Page 5, “Generative Model”)

- The averages of the hyperparameters of PROP were 0.84 ± 0.05 for A and 0.85 ± 0.10 for the threshold. (Page 7, “Experiments”)

- Proposed Model (PROP): Using the training data, we determined the two hyperparameters, A and the threshold for rounding gbrs to 1 or 0, so that they maximized the F value. (Page 7, “Experiments”)

- On the other hand, our model learns its parameters for each relation, so the hyperparameter of our model does not directly affect its performance. (Page 7, “Experiments”)
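Choosing the rounding threshold so that it maximizes the F value, as described for PROP, amounts to a small grid search. The sketch below is hedged: `scores` stands in for the per-pattern model outputs that get rounded to 1 or 0, treating 1 ("pattern expresses r") as the positive class is our assumption, and a full search would also loop over A by rerunning inference for each value, which is omitted here.

```python
from typing import Sequence, Tuple


def f_value(pred: Sequence[int], gold: Sequence[int]) -> float:
    """F1 of binary predictions against binary gold labels (1 = pattern expresses r)."""
    tp = sum(1 for p, g in zip(pred, gold) if p == 1 and g == 1)
    fp = sum(1 for p, g in zip(pred, gold) if p == 1 and g == 0)
    fn = sum(1 for p, g in zip(pred, gold) if p == 0 and g == 1)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def best_threshold(
    scores: Sequence[float], gold: Sequence[int], candidates: Sequence[float]
) -> Tuple[float, float]:
    """Round each score to 1 or 0 at every candidate threshold and keep the best F value."""
    best_t, best_f = candidates[0], -1.0
    for t in candidates:
        pred = [1 if s >= t else 0 for s in scores]
        f = f_value(pred, gold)
        if f > best_f:
            best_t, best_f = t, f
    return best_t, best_f
```

For scores [0.9, 0.8, 0.4, 0.2] with gold labels [1, 1, 0, 0], the candidate 0.5 separates the classes perfectly and is selected over 0.1 and 0.85.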


distant supervision

- In relation extraction, distant supervision seeks to extract relations between entities from text by using a knowledge base, such as Freebase, as a source of supervision. (Page 1, “Abstract”)

- We present a novel generative model that directly models the heuristic labeling process of distant supervision. (Page 1, “Abstract”)

- A particularly attractive approach, called distant supervision (DS), creates labeled data by heuristically aligning entities in text with those in a knowledge base, such as Freebase (Mintz et al., 2009). (Page 1, “Introduction”)

- Figure 1: Automatic labeling by distant supervision. (Page 1, “Introduction”)

- The increasingly popular approach, called distant supervision (DS), or weak supervision, utilizes a knowledge base to heuristically label a corpus (Wu and Weld, 2007; Bellare and McCallum, 2007; Pal… (Page 2, “Related Work”)


named entity

- An entity is mentioned as a named entity in text. (Page 2, “Knowledge-based Distant Supervision”)

- Since two entities mentioned in a sentence do not always have a relation, we select entity pairs from a corpus only when (i) the path of the dependency parse tree between the two corresponding named entities in the sentence is no longer than 4 and (ii) the path does not contain a sentence-like boundary, such as a relative clause¹ (Banko et al., 2007; Banko and Etzioni, 2008). (Page 3, “Knowledge-based Distant Supervision”)

- Footnote 2: If we use a standard named entity tagger, the entity types are Person, Location, and Organization. (Page 3, “Wrong Label Reduction”)

- In Wikipedia articles, named entities were identified by anchor text linking to another article and starting with a capital letter (Yan et al., 2009). (Page 6, “Experiments”)

- … than one named entity. (Page 6, “Experiments”)
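The Wikipedia heuristic above (a named entity is anchor text that links to another article and starts with a capital letter) can be approximated over MediaWiki markup with a regular expression. This is a rough sketch under assumptions: it handles only `[[Target]]` and `[[Target|anchor]]` links, skips any target containing a colon as a crude namespace filter (which would also drop some legitimate titles), and the names `LINK` and `named_entities` are hypothetical.

```python
import re
from typing import List, Tuple

# Matches [[Target]] or [[Target|anchor text]] in MediaWiki markup.
LINK = re.compile(r"\[\[([^\[\]|]+)(?:\|([^\[\]]+))?\]\]")


def named_entities(wikitext: str) -> List[Tuple[str, str]]:
    """Return (anchor text, linked article) for links whose anchor starts with a capital."""
    entities = []
    for match in LINK.finditer(wikitext):
        target = match.group(1).strip()
        anchor = (match.group(2) or match.group(1)).strip()
        if ":" in target:  # crude filter for File:, Category:, and similar namespaces
            continue
        if anchor[:1].isupper():
            entities.append((anchor, target))
    return entities
```

Keeping the linked article alongside the anchor text means the entity can later be matched to a knowledge base entry by article title rather than by surface string.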


manual evaluation

- … (2009), we performed an automatic held-out evaluation and a manual evaluation. (Page 7, “Experiments”)

- 7.3.3 Manual Evaluation (Page 8, “Experiments”)

- For manual evaluation, we picked the 50 top-ranked relation instances for each of the 15 most frequent relations. (Page 8, “Experiments”)

- The manually evaluated precisions averaged over the 15 relations are shown in Table 4. (Page 8, “Experiments”)


dependency parse

- Since two entities mentioned in a sentence do not always have a relation, we select entity pairs from a corpus only when (i) the path of the dependency parse tree between the two corresponding named entities in the sentence is no longer than 4 and (ii) the path does not contain a sentence-like boundary, such as a relative clause¹ (Banko et al., 2007; Banko and Etzioni, 2008). (Page 3, “Knowledge-based Distant Supervision”)

- We define a pattern as the entity types of an entity pair² as well as the sequence of words on the path of the dependency parse tree from the first entity to the second one. (Page 3, “Wrong Label Reduction”)

- We used syntactic features (i.e., features obtained from the dependency parse tree of a sentence), lexical features, and entity types, which essentially correspond to those developed by Mintz et al. (Page 8, “Experiments”)
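The entity-pair selection rule above (dependency path no longer than 4, no sentence-like boundary on the path) can be sketched as a simple filter over the labels of the path's edges. Assumptions to note: the path is given as a list of dependency labels, "length" is counted in edges, and relative clauses are detected only through the `rcmod` (Stanford dependencies) and `acl:relcl` (Universal Dependencies) labels; the page does not specify how the boundary check was actually implemented.

```python
from typing import Sequence

# Hypothetical set of dependency labels treated as sentence-like boundaries.
CLAUSE_BOUNDARY_LABELS = {"rcmod", "acl:relcl"}


def keep_entity_pair(path_labels: Sequence[str], max_len: int = 4) -> bool:
    """Keep a pair only if its dependency path is short and crosses no relative clause."""
    if len(path_labels) > max_len:
        return False
    return not any(label in CLAUSE_BOUNDARY_LABELS for label in path_labels)
```

A fuller boundary check would also cover other clause-introducing relations, but relative clauses are the example the excerpt names.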


parse tree

- Since two entities mentioned in a sentence do not always have a relation, we select entity pairs from a corpus only when (i) the path of the dependency parse tree between the two corresponding named entities in the sentence is no longer than 4 and (ii) the path does not contain a sentence-like boundary, such as a relative clause¹ (Banko et al., 2007; Banko and Etzioni, 2008). (Page 3, “Knowledge-based Distant Supervision”)

- We define a pattern as the entity types of an entity pair² as well as the sequence of words on the path of the dependency parse tree from the first entity to the second one. (Page 3, “Wrong Label Reduction”)

- We used syntactic features (i.e., features obtained from the dependency parse tree of a sentence), lexical features, and entity types, which essentially correspond to those developed by Mintz et al. (Page 8, “Experiments”)
