Article Structure

Abstract

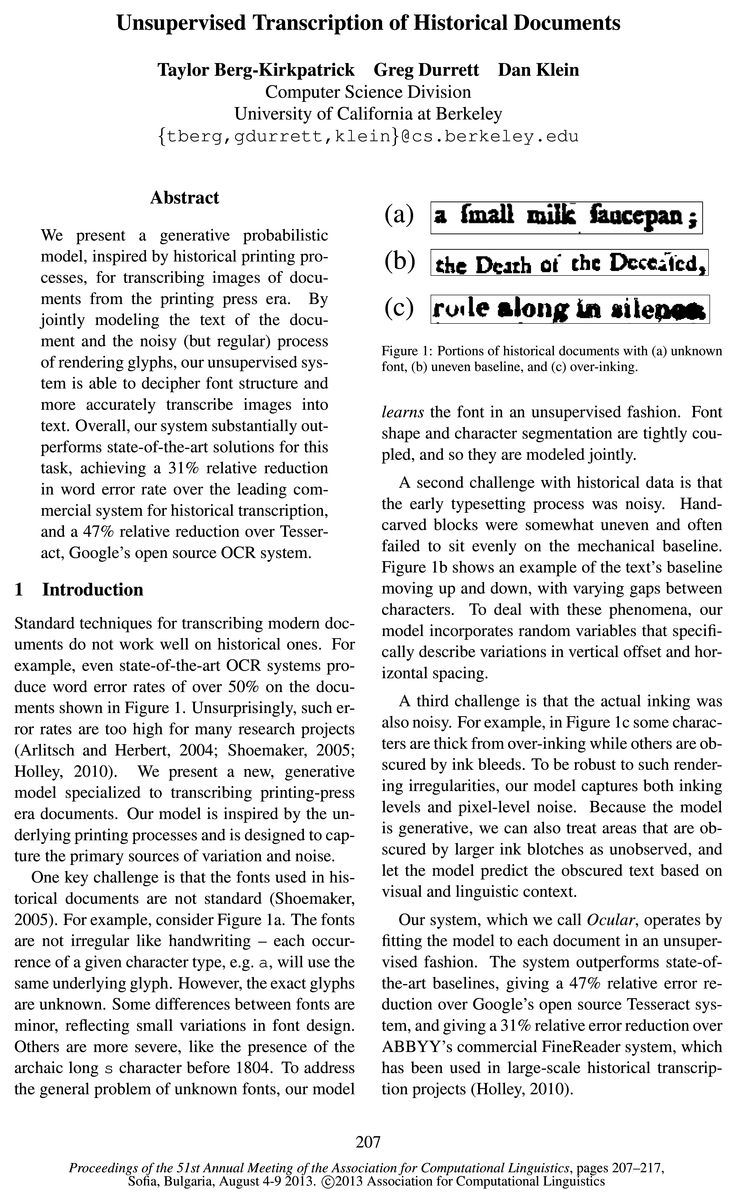

We present a generative probabilistic model, inspired by historical printing processes, for transcribing images of documents from the printing press era.

Introduction

Standard techniques for transcribing modern documents do not work well on historical ones.

Related Work

Relatively little prior work has built models specifically for transcribing historical documents.

Model

Most historical documents have unknown fonts, noisy typesetting layouts, and inconsistent ink levels, usually simultaneously.

Learning

We use the EM algorithm (Dempster et al., 1977) to find the maximum-likelihood font parameters: gbc, 6gp“), QELYPH, and QCRPAD.

Data

We perform experiments on two historical datasets consisting of images of documents printed between 1700 and 1900 in England and Australia.

Experiments

We evaluate our system by comparing our text recognition accuracy to that of two state-of-the-art systems.

Results and Analysis

The results of our experiments are summarized in Table 1.

Conclusion

We have demonstrated a model, based on the historical typesetting process, that effectively learns font structure in an unsupervised fashion to improve transcription of historical documents into text.

Topics

language model

- Work that has directly addressed historical documents has done so using a pipelined approach, and without fully integrating a strong language model (Vamvakas et al., 2008; Kluzner et al., 2009; Kae et al., 2010; Kluzner et al., 2011).Page 2, “Related Work”

- They integrated typesetting models with language models , but did not model noise.Page 2, “Related Work”

- Our approach is also similar in that we use a strong language model (in conjunction with the constraint that the correspondence be regular) to learn the correct mapping.Page 2, “Related Work”

- P(E, T, R, X) = P(E) [ Language model ] - P(T|E) [Typesetting model] - P(R) [Inking model] - P (X |E, T, R) [Noise model]Page 2, “Model”

- 3.1 Language Model P(E)Page 2, “Model”

- Our language model , P(E), is a Kneser-Ney smoothed character n-gram model (Kneser and Ney, 1995).Page 2, “Model”

- The number of states in the dynamic programming lattice grows exponentially with the order of the language model (Jelinek, 1998; Koehn, 2004).Page 5, “Learning”

- As a result, inference can become slow when the language model order n is large.Page 5, “Learning”

- On each iteration of EM, we perform two passes: a coarse pass using a low-order language model, and a fine pass using a high-order language model (Petrov et al., 2008; Zhang and Gildea, 2008).Page 5, “Learning”

- Then, Tesseract uses a classifier, aided by a word-unigram language model , to recognize whole words.Page 7, “Experiments”

- 6.3 Language ModelPage 7, “Experiments”

See all papers in Proc. ACL 2013 that mention language model.

See all papers in Proc. ACL that mention language model.

Back to top.

baseline systems

- We evaluate the output of our system and the baseline systems using two metrics: character error rate (CER) and word error rate (WER).Page 7, “Experiments”

- We compare with two baseline systems : Google’s open source OCR system, Tessearact, and a state-of-the-art commercial system, ABBYY FineReader.Page 7, “Experiments”

- This represents a substantial error reduction compared to both baseline systems .Page 8, “Results and Analysis”

- The baseline systems do not have special provisions for the long 3 glyph.Page 8, “Results and Analysis”

- Our system achieves state-of-the-art results, significantly outperforming two state-of-the-art baseline systems .Page 9, “Conclusion”

See all papers in Proc. ACL 2013 that mention baseline systems.

See all papers in Proc. ACL that mention baseline systems.

Back to top.

error rate

- Overall, our system substantially outperforms state-of-the-art solutions for this task, achieving a 31% relative reduction in word error rate over the leading commercial system for historical transcription, and a 47% relative reduction over Tesseract, Google’s open source OCR system.Page 1, “Abstract”

- For example, even state-of-the-art OCR systems produce word error rates of over 50% on the documents shown in Figure l. Unsurprisingly, such error rates are too high for many research projects (Arlitsch and Herbert, 2004; Shoemaker, 2005; Holley, 2010).Page 1, “Introduction”

- On document (a), which exhibits noisy typesetting, our system achieves a word error rate (WER) of 25.2.Page 6, “Learning”

- We evaluate the output of our system and the baseline systems using two metrics: character error rate (CER) and word error rate (WER).Page 7, “Experiments”

- Table 1: We evaluate the predicted transcriptions in terms of both character error rate (CER) and word error rate (WER), and report macro-averages across documents.Page 7, “Experiments”

See all papers in Proc. ACL 2013 that mention error rate.

See all papers in Proc. ACL that mention error rate.

Back to top.

generative model

- We present a new, generative model specialized to transcribing printing-press era documents.Page 1, “Introduction”

- In the NLP community, generative models have been developed specifically for correcting outputs of OCR systems (Kolak et al., 2003), but these do not deal directly with images.Page 2, “Related Work”

- We take a generative modeling approach inspired by the overall structure of the historical printing process.Page 2, “Model”

- Our generative model , which is depicted in Figure 3, reflects this process.Page 3, “Model”

- As noted earlier, one strength of our generative model is that we can make the values of certain pixels unobserved in the model, and let inference fill them in.Page 9, “Results and Analysis”

See all papers in Proc. ACL 2013 that mention generative model.

See all papers in Proc. ACL that mention generative model.

Back to top.

development set

- We used as a development set ten additional documents from the Old Bailey proceedings and five additional documents from Trove that were not part of our test set.Page 7, “Experiments”

- This slightly improves performance on our development set and can be thought of as placing a prior on the glyph shape parameters.Page 7, “Results and Analysis”

- We performed error analysis on our development set by randomly choosing 100 word errors from the WER alignment and manually annotating them with relevant features.Page 9, “Results and Analysis”

See all papers in Proc. ACL 2013 that mention development set.

See all papers in Proc. ACL that mention development set.

Back to top.

edit distance

- Both these metrics are based on edit distance .Page 7, “Experiments”

- CER is the edit distance between the predicted and gold transcriptions of the document, divided by the number of characters in the gold transcription.Page 7, “Experiments”

- WER is the word-level edit distance (words, instead of characters, are treated as tokens) between predicted and gold transcriptions, divided by the number of words in the gold transcription.Page 7, “Experiments”

See all papers in Proc. ACL 2013 that mention edit distance.

See all papers in Proc. ACL that mention edit distance.

Back to top.

log-linear

- logistic( Z [M917 kw - Wedgie/D k’ :1 The fact that the parameterization is log-linear will ensure that, during the unsupervised learning process, updating the shape parameters gbc is simple and feasible.Page 5, “Model”

- The noise model that gbc parameterizes is a local log-linear model, so we follow the approach of Berg-Kirkpatrick et al.Page 5, “Learning”

- (2010), we use a regularization term in the optimization of the log-linear model parameters (15¢ during the M-step.Page 7, “Results and Analysis”

See all papers in Proc. ACL 2013 that mention log-linear.

See all papers in Proc. ACL that mention log-linear.

Back to top.

manual annotations

- To investigate the effects of using an in domain language model, we created a corpus composed of the manual annotations of all the documents in the Old Bailey proceedings, excluding those used in our test set.Page 7, “Experiments”

- We manually annotated the ink blotches (shown in red), and made them unobserved in the model.Page 9, “Results and Analysis”

- We performed error analysis on our development set by randomly choosing 100 word errors from the WER alignment and manually annotating them with relevant features.Page 9, “Results and Analysis”

See all papers in Proc. ACL 2013 that mention manual annotations.

See all papers in Proc. ACL that mention manual annotations.

Back to top.

model parameters

- The Bernoulli parameter of a pixel inside a glyph bounding box depends on the pixel’s location inside the box (as well as on di and 21,-, but for simplicity of exposition, we temporarily suppress this dependence) and on the model parameters governing glyph shape (for each character type c, the parameter matrix gbc specifies the shape of the character’s glyph.)Page 4, “Model”

- (2010), we use a regularization term in the optimization of the log-linear model parameters (15¢ during the M-step.Page 7, “Results and Analysis”

- Figure 8: The central glyph is a representation of the initial model parameters for the glyph shape for g, and surrounding this are the learned parameters for documents from various years.Page 8, “Results and Analysis”

See all papers in Proc. ACL 2013 that mention model parameters.

See all papers in Proc. ACL that mention model parameters.

Back to top.