Article Structure

Abstract

Supervised training procedures for semantic parsers produce high-quality semantic parsers, but they have difficulty scaling to large databases because of the sheer number of logical constants for which they must see labeled training data.

Introduction

Semantic parsing is the task of translating natural language utterances to a formal meaning representation language (Chen et al., 2010; Liang et al., 2009; Clarke et al., 2010; Liang et al., 2011; Artzi and Zettlemoyer, 2011).

Previous Work

Two existing systems translate between natural language questions and database queries over large-scale databases.

Textual Schema Matching

3.1 Problem Formulation

Extending a Semantic Parser Using a Schema Alignment

An alignment between textual relations and database relations has many possible uses: for example, it might be used to allow queries over a database to be answered using additional information stored in an extracted relation store, or it might be used to deduce clusters of synonymous relation words in English.

Experiments

We conducted experiments to test the ability of MATCHER and LEXTENDER to produce a semantic parser for Freebase.

Conclusion

Scaling semantic parsing to large databases requires an engineering effort to handle large datasets, but also novel algorithms to extend se-

Topics

semantic parser

- Supervised training procedures for semantic parsers produce high-quality semantic parsers , but they have difficulty scaling to large databases because of the sheer number of logical constants for which they must see labeled training data.Page 1, “Abstract”

- We present a technique for developing semantic parsers for large databases based on a reduction to standard supervised training algorithms, schema matching, and pattern learning.Page 1, “Abstract”

- Leveraging techniques from each of these areas, we develop a semantic parser for Freebase that is capable of parsing questions with an F1 that improves by 0.42 over a purely-supervised learning algorithm.Page 1, “Abstract”

- Semantic parsing is the task of translating natural language utterances to a formal meaning representation language (Chen et al., 2010; Liang et al., 2009; Clarke et al., 2010; Liang et al., 2011; Artzi and Zettlemoyer, 2011).Page 1, “Introduction”

- There has been recent interest in producing such semantic parsers for large, heterogeneous databases like Freebase (Krishnamurthy and Mitchell, 2012; Cai and Yates, 2013) and Yago2 (Yahya et al., 2012), which has driven the development of semi-supervised and distantly-supervised training methods for semantic parsing .Page 1, “Introduction”

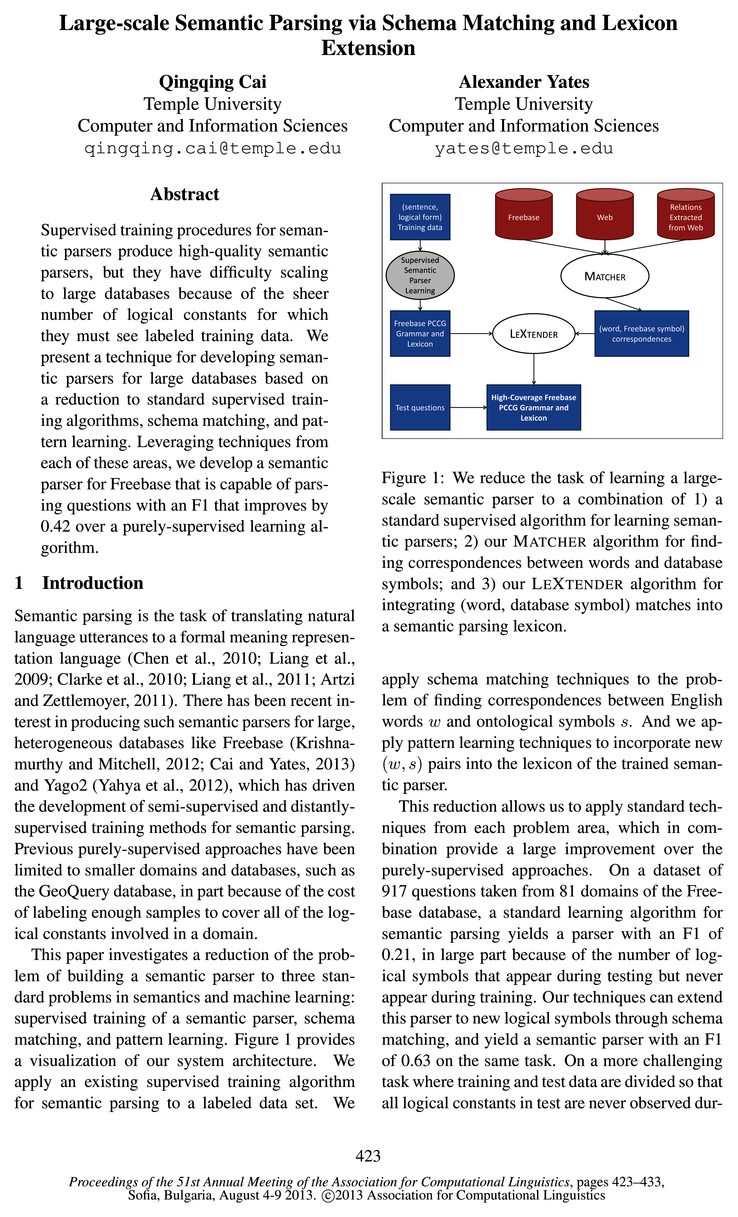

- This paper investigates a reduction of the problem of building a semantic parser to three standard problems in semantics and machine learning: supervised training of a semantic parser , schema matching, and pattern learning.Page 1, “Introduction”

- We apply an existing supervised training algorithm for semantic parsing to a labeled data set.Page 1, “Introduction”

- Supervised Semantic Parser LearningPage 1, “Introduction”

- Figure 1: We reduce the task of learning a large-scale semantic parser to a combination of l) a standard supervised algorithm for learning semantic parsers; 2) our MATCHER algorithm for finding correspondences between words and database symbols; and 3) our LEXTENDER algorithm for integrating (word, database symbol) matches into a semantic parsing lexicon.Page 1, “Introduction”

- And we apply pattern learning techniques to incorporate new (w, 3) pairs into the lexicon of the trained semantic parser .Page 1, “Introduction”

- On a dataset of 917 questions taken from 81 domains of the Freebase database, a standard learning algorithm for semantic parsing yields a parser with an F1 of 0.21, in large part because of the number of logical symbols that appear during testing but never appear during training.Page 1, “Introduction”

See all papers in Proc. ACL 2013 that mention semantic parser.

See all papers in Proc. ACL that mention semantic parser.

Back to top.

logical form

- (sentence, Relations logical form ) Freebase ExtractedPage 1, “Introduction”

- Using A, UBL selects a logical form 2 for a sentence 8 by selecting the z with the most likely parse derivations y: h(S) = arg maxz 2y p(y, z|$; 6, A).Page 6, “Extending a Semantic Parser Using a Schema Alignment”

- The probabilistic model is a log-linear model with features for lexical entries used in the parse, as well as indicator features for relation-argument pairs in the logical form , to capture selectional preferences.Page 6, “Extending a Semantic Parser Using a Schema Alignment”

- Inference (parsing) and parameter estimation are driven by standard dynamic programming algorithms (Clark and Curran, 2007), while lexicon induction is based on a novel search procedure through the space of possible higher-order logic unification operations that yield the desired logical form for a training sentence.Page 6, “Extending a Semantic Parser Using a Schema Alignment”

- Figure 2: Example questions with their logical forms .Page 7, “Experiments”

- The logical forms make use of Freebase symbols as logical constants, as well as a few additional symbols such as count and argmin, to allow for aggregation queries.Page 7, “Experiments”

- We also created a dataset of alignments from these annotated questions by creating an alignment for each Freebase relation mentioned in the logical form for a question, paired with a manually-selected word from the question.Page 7, “Experiments”

- Figure 4 shows the F1 scores for these semantic parsers, judged by exact match between the top-scoring logical form from the parser and the manually-produced logical form .Page 8, “Experiments”

- Exact-match tests are overly-strict, in the sense that the system may be judged incorrect even when the logical form that is produced is logically equivalent to the correct logical form .Page 8, “Experiments”

See all papers in Proc. ACL 2013 that mention logical form.

See all papers in Proc. ACL that mention logical form.

Back to top.

natural language

- Semantic parsing is the task of translating natural language utterances to a formal meaning representation language (Chen et al., 2010; Liang et al., 2009; Clarke et al., 2010; Liang et al., 2011; Artzi and Zettlemoyer, 2011).Page 1, “Introduction”

- Two existing systems translate between natural language questions and database queries over large-scale databases.Page 2, “Previous Work”

- (2012) report on a system for translating natural language queries to SPARQL queries over the Yago2 (Hoffart et al., 2013) database.Page 2, “Previous Work”

- The manual extraction patterns predefine a link between natural language terms and Yago2 relations.Page 2, “Previous Work”

- The textual schema matching task is to identify natural language words and phrases that correspond with each relation and entity in a fixed schema for a relational database.Page 3, “Textual Schema Matching”

- The problem would be greatly simplified if M were a 1-1 function, but in practice most database relations can be referred to in many ways by natural language users: for instance, f i lm_actor can be referenced by the English verbs “played,” “acted,” and “starred,” along with morphological variants of them.Page 3, “Textual Schema Matching”

- In particular, more research is needed to handle more complex matches between database and textual relations, and to handle more complex natural language queries.Page 9, “Conclusion”

See all papers in Proc. ACL 2013 that mention natural language.

See all papers in Proc. ACL that mention natural language.

Back to top.

regression model

- 3.5 Regression models for scoring candidatesPage 5, “Textual Schema Matching”

- MATCHER uses a regression model to combine these various statistics into a score for (77,719).Page 5, “Textual Schema Matching”

- The regression model is a linear regression with least-squares parameter estimation; we experimented with support vector regression models with nonlinear kernels, with no significant improvements in accuracy.Page 5, “Textual Schema Matching”

- For W, we use a linear regression model whose features are the score from MATCHER, the probabilities from the Syn and Sem NBC models, and the average weight of all lexical entries in UBL with matching syntax and semantics.Page 7, “Extending a Semantic Parser Using a Schema Alignment”

- Figure 3 shows a Precision-Recall (PR) curve for MATCHER and three baselines: a “Frequency” model that ranks candidate matches for TD by their frequency during the candidate identification step; a “Pattern” model that uses MATCHER’s linear regression model for ranking, but is restricted to only the pattern-based features; and an “Extractions” model that similarly restricts the ranking model to ReVerb features.Page 8, “Experiments”

- All regression models for learning alignments outperform the Frequency ranking by a wide margin.Page 8, “Experiments”

See all papers in Proc. ACL 2013 that mention regression model.

See all papers in Proc. ACL that mention regression model.

Back to top.

CCG

- technique does require manual specification of rules that construct CCG lexical entries from dependency parses.Page 2, “Previous Work”

- In comparison, we fully automate the process of constructing CCG lexical entries for the semantic parser by making it a prediction task.Page 2, “Previous Work”

- Using a fixed CCG grammar and a procedure based on unification in second-order logic, UBL learns a lexicon A from the training data which includes entries like:Page 6, “Extending a Semantic Parser Using a Schema Alignment”

- Example CCG Grammar RulesPage 6, “Extending a Semantic Parser Using a Schema Alignment”

See all papers in Proc. ACL 2013 that mention CCG.

See all papers in Proc. ACL that mention CCG.

Back to top.

learning algorithm

- Leveraging techniques from each of these areas, we develop a semantic parser for Freebase that is capable of parsing questions with an F1 that improves by 0.42 over a purely-supervised learning algorithm .Page 1, “Abstract”

- On a dataset of 917 questions taken from 81 domains of the Freebase database, a standard learning algorithm for semantic parsing yields a parser with an F1 of 0.21, in large part because of the number of logical symbols that appear during testing but never appear during training.Page 1, “Introduction”

- Owing to the complexity of the general case, researchers have resorted to defining standard similarity metrics between relations and attributes, as well as machine learning algorithms for learning and predicting matches between relations (Doan et al., 2004; Wick et al., 2008b; Wick et al., 2008a; Nottelmann and Straccia, 2007; Berlin and Motro, 2006).Page 2, “Previous Work”

See all papers in Proc. ACL 2013 that mention learning algorithm.

See all papers in Proc. ACL that mention learning algorithm.

Back to top.

linear regression

- The regression model is a linear regression with least-squares parameter estimation; we experimented with support vector regression models with nonlinear kernels, with no significant improvements in accuracy.Page 5, “Textual Schema Matching”

- For W, we use a linear regression model whose features are the score from MATCHER, the probabilities from the Syn and Sem NBC models, and the average weight of all lexical entries in UBL with matching syntax and semantics.Page 7, “Extending a Semantic Parser Using a Schema Alignment”

- Figure 3 shows a Precision-Recall (PR) curve for MATCHER and three baselines: a “Frequency” model that ranks candidate matches for TD by their frequency during the candidate identification step; a “Pattern” model that uses MATCHER’s linear regression model for ranking, but is restricted to only the pattern-based features; and an “Extractions” model that similarly restricts the ranking model to ReVerb features.Page 8, “Experiments”

See all papers in Proc. ACL 2013 that mention linear regression.

See all papers in Proc. ACL that mention linear regression.

Back to top.

relations extracted

- We also leverage synonym-matching techniques for comparing relations extracted from text with Freebase relations.Page 2, “Previous Work”

- Our techniques for comparing relations fit into this line of work, but they are novel in their application of these techniques to the task of comparing database relations and relations extracted from text.Page 2, “Previous Work”

- Schema matching in the database sense often considers complex matches between relations (Dhamanka et al., 2004), whereas as our techniques are currently restricted to matches involving one database relation and one relation extracted from text.Page 2, “Previous Work”

See all papers in Proc. ACL 2013 that mention relations extracted.

See all papers in Proc. ACL that mention relations extracted.

Back to top.