Article Structure

Abstract

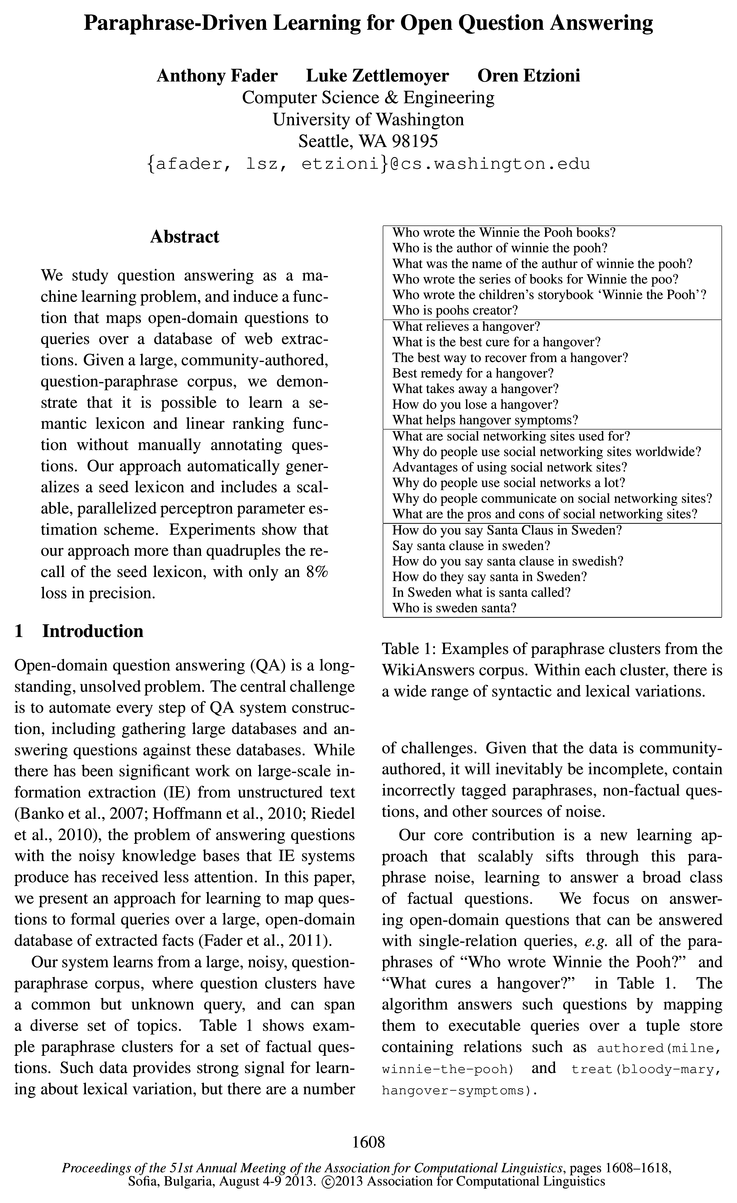

We study question answering as a machine learning problem, and induce a function that maps open-domain questions to queries over a database of web extractions.

Introduction

Open-domain question answering (QA) is a longstanding, unsolved problem.

Related Work

Our work builds upon two major threads of research in natural language processing: information extraction (IE), and natural language interfaces to databases (NLIDB).

Overview of the Approach

In this section, we give a high-level overview of the rest of the paper.

Question Answering Model

To answer questions, we must find the best query for a given natural language question.

Learning

PARALEX uses a two-part learning algorithm; it first induces an overly general lexicon (Section 5.1) and then learns to score derivations to increase accuracy (Section 5.2).

Data

For our database D, we use the publicly available set of 15 million REVERB extractions (Fader et al., 2011).3 The database consists of a set of triples 7“(€1,€2) over a vocabulary of approximately 600K relations and 2M entities, extracted from the ClueWeb()9 corpus.4 The REVERB database contains a large cross-section of general world-knowledge, and thus is a good testbed for developing an open-domain QA system.

Experimental Setup

We compare the following systems:

Results

Table 4 shows the performance of PARALEX on the test questions.

Error Analysis

To understand how close we are to the goal of open-domain QA, we ran PARALEX on an unrestricted sample of questions from WikiAnswers.

Conclusion

We introduced a new learning approach that induces a complete question-answering system from a large corpus of noisy question-paraphrases.

Topics

learning algorithm

- 0 We describe scalable learning algorithms that induce general question templates and lexical variants of entities and relations.Page 2, “Introduction”

- The learning algorithms presented in this paper are similar to algorithms used for paraphrase extraction from sentence-aligned corpora (Barzilay and McKeown, 2001; Barzilay and Lee, 2003; Quirk et al., 2004; Bannard and Callison-Burch, 2005; Callison-Burch, 2008; Marton et al., 2009).Page 2, “Related Work”

- Learning The learning algorithm induces a lexicon L and estimates the parameters 6 of the linear ranking function.Page 3, “Overview of the Approach”

- The final result is a scalable learning algorithm that requires no manual annotation of questions.Page 3, “Overview of the Approach”

- PARALEX uses a two-part learning algorithm ; it first induces an overly general lexicon (Section 5.1) and then learns to score derivations to increase accuracy (Section 5.2).Page 4, “Learning”

- The lexical learning algorithm constructs a lexicon L from a corpus of question paraphrases C =Page 4, “Learning”

- Figure 1: Our lexicon learning algorithm .Page 5, “Learning”

- Figure 1 shows the complete lexical learning algorithm .Page 5, “Learning”

- Figure 2 shows the parameter learning algorithm .Page 5, “Learning”

- The parameter learning algorithm operates in two stages.Page 5, “Learning”

- First, we use the initial lexicon L0 to automatically generate (question :5, query 2) training examples from the paraphrase corpus C. Then we feed the training examples into the learning algorithm , which estimates parameters for the learned lexicon L.Page 5, “Learning”

See all papers in Proc. ACL 2013 that mention learning algorithm.

See all papers in Proc. ACL that mention learning algorithm.

Back to top.

word alignment

- The algorithm uses learned word alignments to aggressively generalize the seeds, producing a large set of possible lexical equivalences.Page 2, “Introduction”

- We call this procedure InduceLex(:c, :c’, y, A), which takes a paraphrase pair (at, :c’ ), a derivation y of at, and a word alignment A, and returns a new set of lexical entries.Page 4, “Learning”

- , A word alignment A between at and :c’ is a subset of x [n’ A phrase alignment is a pair of index sets (1,1’) where I g and I’ g A phrase alignment (1,1’) is consistent with a word alignment A if for all (i, z") E A, i E I if and only if z" E I ’ .Page 4, “Learning”

- In other words, a phrase alignment is consistent with a word alignment if the words in the phrases are aligned only with each other, and not with any outside words.Page 4, “Learning”

- - A word alignment function WordAlign(a:,a:’ (Section 6)Page 5, “Learning”

- - A function InduceLeX(:1:, :13’, y, A) that induces new lexical items from the paraphrases (:13, :13’ ) using their word alignment A and a derivation y of :13.Page 5, “Learning”

- 2- The phrase pairs (pg, pg), (pmpfia), (196,192) are consistent with the word alignment A.Page 5, “Learning”

- We use an existing statistical word alignment algorithm for WordAlign (see Section 6).Page 5, “Learning”

- Parameter learning is necessary for filtering out derivations that use incorrect lexical entries like new mexico 2 mexico, which arise from noise in the paraphrases and noise in the word alignment .Page 5, “Learning”

See all papers in Proc. ACL 2013 that mention word alignment.

See all papers in Proc. ACL that mention word alignment.

Back to top.

perceptron

- Our approach automatically generalizes a seed lexicon and includes a scalable, parallelized perceptron parameter estimation scheme.Page 1, “Abstract”

- We use the hidden variable structured perceptron algorithm to learn 6 from a list of (question :5, query 2) training examples.Page 5, “Learning”

- We adopt the iterative parameter mixing variation of the perceptron (McDonald et al., 2010) to scale to a large number of training examples.Page 5, “Learning”

- Because the number of training examples is large, we adopt a parallel perceptron approach.Page 5, “Learning”

- We then perform perceptron learning on each partition in parallel.Page 5, “Learning”

- We use a hidden variable version of the perceptron algorithm (Collins, 2002), where the model parameters are updated using the highest scoring derivation y* that will generate the correct query 2 using the learned lexicon L.Page 5, “Learning”

- Number of perceptron epochs T.Page 6, “Data”

- A function PerceptronEpoch(T, 0, L) that runs a single epoch of the hidden-variable structured perceptron algorithm on training set T with initial parameters 0, returning a new parameter vector 0’ .Page 6, “Data”

See all papers in Proc. ACL 2013 that mention perceptron.

See all papers in Proc. ACL that mention perceptron.

Back to top.

question answering

- We study question answering as a machine learning problem, and induce a function that maps open-domain questions to queries over a database of web extractions.Page 1, “Abstract”

- Open-domain question answering (QA) is a longstanding, unsolved problem.Page 1, “Introduction”

- 0 We introduce PARALEX, an end-to-end open-domain question answering system.Page 2, “Introduction”

- More recently, researchers have created systems that use machine learning techniques to automatically construct question answering systems from data (Zelle and Mooney, 1996; Popescu et al., 2004; Zettlemoyer and Collins, 2005 ; Clarke et al., 2010; Liang et al., 2011).Page 2, “Related Work”

- These systems have the ability to handle questions with complex semantics on small domain-specific databases like GeoQuery (Tang and Mooney, 2001) or subsets of Freebase (Cai and Yates, 2013), but have yet to scale to the task of general, open-domain question answering .Page 2, “Related Work”

- Model The question answering model includes a lexicon and a linear ranking function.Page 3, “Overview of the Approach”

- Evaluation In Section 8, we evaluate our system against various baselines on the end-task of question answering against a large database of facts extracted from the web.Page 3, “Overview of the Approach”

- proximately 6% of the questions answered at precision 0.4.Page 8, “Error Analysis”

See all papers in Proc. ACL 2013 that mention question answering.

See all papers in Proc. ACL that mention question answering.

Back to top.

natural language

- Our work builds upon two major threads of research in natural language processing: information extraction (IE), and natural language interfaces to databases (NLIDB).Page 2, “Related Work”

- While much progress has been made in converting text into structured knowledge, there has been little work on answering natural language questions over these databases.Page 2, “Related Work”

- However, we use a paraphrase corpus for extracting lexical items relating natural language patterns to database concepts, as opposed to relationships between pairs of natural language utterances.Page 2, “Related Work”

- Problem Our goal is to learn a function that will map a natural language question cc to a query 2 over a database D. The database D is a collection of assertions in the form r(el, 62) where 7“ is a bi-Page 2, “Overview of the Approach”

- The lexicon L associates natural language patterns to database concepts, thereby defining the space of queries that can be derived from the input question (see Table 2).Page 3, “Overview of the Approach”

- To answer questions, we must find the best query for a given natural language question.Page 3, “Question Answering Model”

- To define the space of possible queries, PARALEX uses a lexicon L that encodes mappings from natural language to database concepts (entities, relations, and queries).Page 3, “Question Answering Model”

See all papers in Proc. ACL 2013 that mention natural language.

See all papers in Proc. ACL that mention natural language.

Back to top.

baseline systems

- On known-answerable questions, the approach achieved 42% recall, with 77% precision, more than quadrupling the recall over a baseline system .Page 2, “Introduction”

- 0 We evaluate PARALEX on the end-task of answering questions from WikiAnswers using a database of web extractions, and show that it outperforms baseline systems .Page 2, “Introduction”

- PARALEX outperforms the baseline systems in terms of both F1 and MAP.Page 7, “Results”

See all papers in Proc. ACL 2013 that mention baseline systems.

See all papers in Proc. ACL that mention baseline systems.

Back to top.

end-to-end

- We performed an end-to-end evaluation against a database of 15 million facts automatically extracted from general web text (Fader et al., 2011).Page 2, “Introduction”

- 0 We introduce PARALEX, an end-to-end open-domain question answering system.Page 2, “Introduction”

- For the end-to-end QA task, we return a ranked list of answers from the k highest scoring queries.Page 4, “Question Answering Model”

See all papers in Proc. ACL 2013 that mention end-to-end.

See all papers in Proc. ACL that mention end-to-end.

Back to top.

highest scoring

- For the end-to-end QA task, we return a ranked list of answers from the k highest scoring queries.Page 4, “Question Answering Model”

- We score an answer a with the highest score of all derivations that generate a query with answer a.Page 4, “Question Answering Model”

- We use a hidden variable version of the perceptron algorithm (Collins, 2002), where the model parameters are updated using the highest scoring derivation y* that will generate the correct query 2 using the learned lexicon L.Page 5, “Learning”

See all papers in Proc. ACL 2013 that mention highest scoring.

See all papers in Proc. ACL that mention highest scoring.

Back to top.

manual annotation

- Given a large, community-authored, question-paraphrase corpus, we demonstrate that it is possible to learn a semantic lexicon and linear ranking function without manually annotating questions.Page 1, “Abstract”

- These algorithms require no manual annotation and can be applied to large, noisy databases of relational triples.Page 2, “Introduction”

- The final result is a scalable learning algorithm that requires no manual annotation of questions.Page 3, “Overview of the Approach”

See all papers in Proc. ACL 2013 that mention manual annotation.

See all papers in Proc. ACL that mention manual annotation.

Back to top.