Article Structure

Abstract

Semantic parsing is a domain-dependent process by nature, as its output is defined over a set of domain symbols.

Introduction

Natural Language (NL) understanding can be intuitively understood as a general capacity, mapping words to entities and their relationships.

Semantic Interpretation Model

Our model consists of both domain-dependent (mapping between text and a closed set of symbols) and domain-independent (abstract predicate-argument structures) information.

Experimental Settings

Situated Language: This dataset, introduced in (Bordes et al., 2010), describes situations in a simulated world.

Knowledge Transfer Experiments

We begin by studying the role of domain-independent information when very little domain information is available.

Conclusions

In this paper, we took a first step towards a new kind of generalization in semantic parsing: constructing a model that is able to generalize to a new domain defined over a different set of symbols.

Acknowledgement

The authors would like to thank Julia Hockenmaier, Gerald DeJong, Raymond Mooney and the anonymous reviewers for their efforts and insightful comments.

Topics

semantic parsing

- Semantic parsing is a domain-dependent process by nature, as its output is defined over a set of domain symbols. (Page 1, “Abstract”)

- However, current work on automated NL understanding (typically referred to as semantic parsing (Zettlemoyer and Collins, 2005; Wong and Mooney, 2007; Chen and Mooney, 2008; Kwiatkowski et al., 2010; Börschinger et al., 2011)) is restricted to a given output domain (or task) consisting of a closed set of meaning representation symbols, describing domains such as robotic soccer, database queries and flight ordering systems. (Page 1, “Introduction”)

- In order to understand this difficulty, a closer look at semantic parsing is required. (Page 1, “Introduction”)

- …: Information in Semantic Parsing (Page 1, “Introduction”)

- In this work, we attempt to develop a domain-independent approach to semantic parsing. (Page 1, “Introduction”)

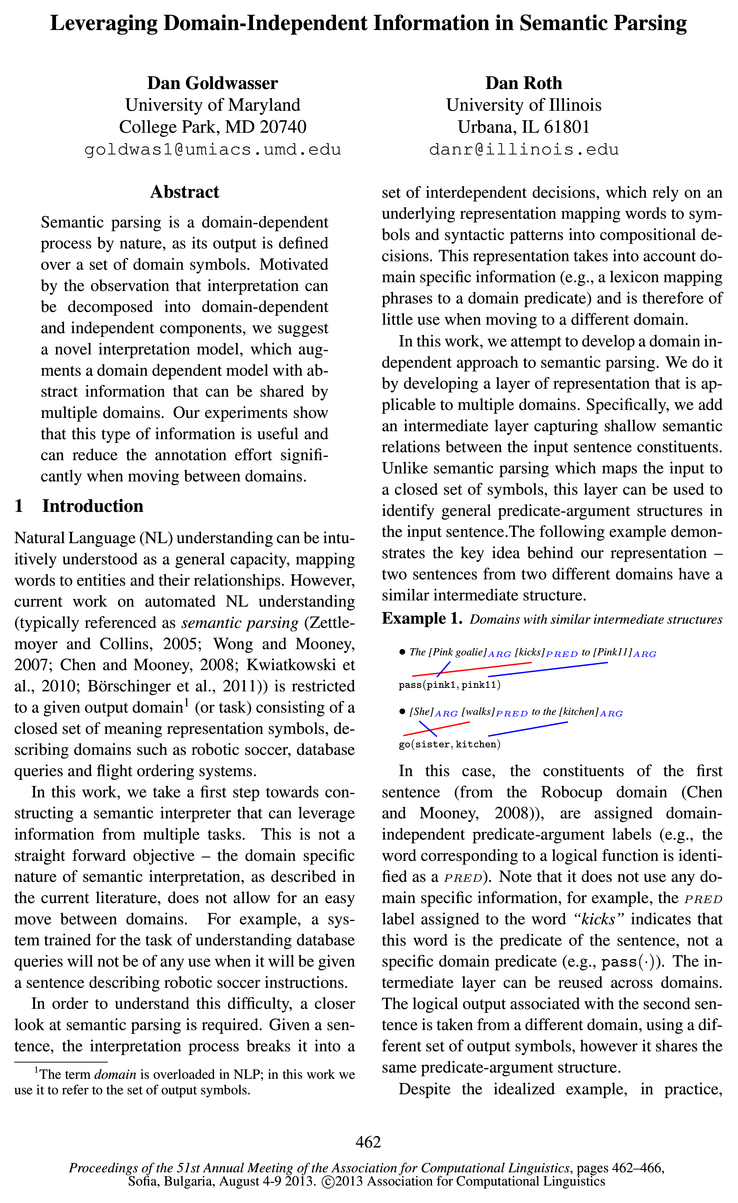

- Unlike semantic parsing, which maps the input to a closed set of symbols, this layer can be used to identify general predicate-argument structures in the input sentence. The following example demonstrates the key idea behind our representation: two sentences from two different domains have a similar intermediate structure. (Page 1, “Introduction”)

- The dataset was collected for the purpose of constructing semantic parsers from ambiguous supervision and consists of both “noisy” and gold labeled data. (Page 3, “Experimental Settings”)

- Semantic Interpretation Tasks: We consider two of the tasks described in (Chen and Mooney, 2008): (1) Semantic Parsing, which requires generating the correct logical form given an input sentence. (Page 4, “Experimental Settings”)

- In this paper, we took a first step towards a new kind of generalization in semantic parsing: constructing a model that is able to generalize to a new domain defined over a different set of symbols. (Page 5, “Conclusions”)

ILP

- We follow (Goldwasser et al., 2011; Clarke et al., 2010) and formalize semantic inference as an Integer Linear Program (ILP). (Page 2, “Semantic Interpretation Model”)

- We then proceed to augment this model with domain-independent information, and connect the two models by constraining the ILP model. (Page 2, “Semantic Interpretation Model”)

- We take advantage of the flexible ILP framework and encode these restrictions as global constraints. (Page 2, “Semantic Interpretation Model”)
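The ILP view of inference can be illustrated with a toy 0-1 program. This is a minimal sketch under stated assumptions, not the model from the paper: the words, symbols, scores, the two-role predicate/argument inventory and the single linking constraint are all hypothetical. Domain-dependent alignment decisions and domain-independent role decisions are separate binary variables, and a global constraint ties them together. The program is solved by brute-force enumeration over all binary assignments rather than with an ILP solver, which is only feasible at toy sizes.

```python
from itertools import product

# Hypothetical toy instance (not from the paper): two words, three domain symbols.
WORDS = ["pink3", "passes"]
SYMBOLS = ["pink3", "pass", "NULL"]   # NULL = word not aligned to any symbol
ROLES = ["PRED", "ARG"]               # hypothetical domain-independent roles

# Hypothetical domain-dependent scores: word w aligned to symbol s.
ALIGN_SCORE = {
    ("pink3", "pink3"): 2.0, ("pink3", "pass"): 0.1, ("pink3", "NULL"): 0.0,
    ("passes", "pink3"): 0.2, ("passes", "pass"): 1.5, ("passes", "NULL"): 0.0,
}
# Hypothetical domain-independent scores: word w labelled with role k.
ROLE_SCORE = {
    ("pink3", "PRED"): 0.3, ("pink3", "ARG"): 0.8,
    ("passes", "PRED"): 0.9, ("passes", "ARG"): 0.1,
}
# Role each non-NULL symbol requires (used by the linking constraint).
SYMBOL_ROLE = {"pink3": "ARG", "pass": "PRED"}

ALIGN_VARS = [(w, s) for w in WORDS for s in SYMBOLS]
ROLE_VARS = [(w, k) for w in WORDS for k in ROLES]


def feasible(a, r):
    """Check the linear constraints on binary assignments a (alignment) and r (role)."""
    for w in WORDS:
        if sum(a[(w, s)] for s in SYMBOLS) != 1:   # exactly one symbol per word
            return False
        if sum(r[(w, k)] for k in ROLES) != 1:     # exactly one role per word
            return False
        for s, k in SYMBOL_ROLE.items():           # linking: a[w,s] <= r[w,role(s)]
            if a[(w, s)] > r[(w, k)]:
                return False
    return True


def objective(a, r):
    """Linear objective: domain-dependent plus domain-independent scores."""
    return (sum(ALIGN_SCORE[v] * a[v] for v in ALIGN_VARS)
            + sum(ROLE_SCORE[v] * r[v] for v in ROLE_VARS))


def solve():
    """Exhaustively search all 0-1 assignments (2**10 here) for the best feasible one."""
    best, best_score = None, float("-inf")
    n = len(ALIGN_VARS)
    for bits in product((0, 1), repeat=n + len(ROLE_VARS)):
        a = dict(zip(ALIGN_VARS, bits[:n]))
        r = dict(zip(ROLE_VARS, bits[n:]))
        if feasible(a, r):
            score = objective(a, r)
            if score > best_score:
                best, best_score = (a, r), score
    a, r = best
    alignment = {w: s for (w, s), x in a.items() if x}
    roles = {w: k for (w, k), x in r.items() if x}
    return alignment, roles, best_score


alignment, roles, score = solve()
print(alignment, roles, round(score, 2))
```

A real system would pass the same objective and constraints to an ILP solver; enumeration is used here only to keep the example dependency-free and easy to check by hand.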

labeled data

- Moreover, since learning this layer is a byproduct of the learning process (as it does not use any labeled data), forcing the connection between the decisions is the mechanism that drives learning this model. (Page 3, “Semantic Interpretation Model”)

- The dataset was collected for the purpose of constructing semantic parsers from ambiguous supervision and consists of both “noisy” and gold labeled data. (Page 3, “Experimental Settings”)

- The gold labeled data consists of pairs (X, y). (Page 4, “Experimental Settings”)
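The difference between gold and noisy supervision can be made concrete with two record types. This is a hypothetical sketch: the class and function names and the logical-form strings are illustrative only. It assumes, following the ambiguous-supervision setting of Chen and Mooney (2008), that a noisy sentence comes with a set of candidate logical forms, among which the correct one may (or may not) appear, whereas a gold pair (X, y) fixes a single y.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class GoldExample:
    """A gold pair (X, y): a sentence and its single correct logical form."""
    sentence: str
    logical_form: str


@dataclass(frozen=True)
class NoisyExample:
    """Ambiguous supervision: the correct logical form, if any, is among the candidates."""
    sentence: str
    candidates: FrozenSet[str]


def gold_as_noisy(example: GoldExample) -> NoisyExample:
    """A gold pair is the special case of a noisy pair with exactly one candidate."""
    return NoisyExample(example.sentence, frozenset({example.logical_form}))


def ambiguity(example: NoisyExample) -> int:
    """Number of candidate meanings a learner must choose between."""
    return len(example.candidates)


# Hypothetical examples.
gold = GoldExample("pink3 passes to pink5", "pass(pink3,pink5)")
noisy = NoisyExample("pink3 passes to pink5",
                     frozenset({"pass(pink3,pink5)", "kick(pink3)"}))
print(ambiguity(gold_as_noisy(gold)), ambiguity(noisy))
```

Treating gold data as the one-candidate case of noisy data lets a single learner consume both kinds of supervision.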

probabilistic model

- DOM-INIT W1: Noisy probabilistic model, described below. (Page 4, “Experimental Settings”)

- We used the noisy Robocup dataset to initialize DOM-INIT, a noisy probabilistic model, constructed by taking statistics over the noisy Robocup data and computing p(y|X). (Page 4, “Experimental Settings”)

- Domain-independent information is learned from the situated domain, and the domain-specific information available (Robocup) is the simple probabilistic model (DOM-INIT). (Page 4, “Knowledge Transfer Experiments”)
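The text only states that DOM-INIT is built by taking statistics over the noisy data and computing p(y|X). The sketch below shows one simple way such statistics could be gathered; it is an assumption, not the paper's estimator. Each candidate logical form receives an equal share of a sentence's evidence, only head predicates are counted, and a candidate's score is the sum of p(predicate | word) over the sentence's words, normalized over the candidate set. The function names and the noisy pairs are hypothetical.

```python
from collections import defaultdict

# Hypothetical noisy pairs: each sentence comes with several candidate logical forms.
NOISY_PAIRS = [
    ("pink3 passes to pink5", ["pass(pink3,pink5)", "kick(pink3)"]),
    ("pink1 passes to pink2", ["pass(pink1,pink2)", "turnover(pink1,purple4)"]),
    ("pink2 kicks", ["kick(pink2)"]),
]


def predicate(logical_form):
    """Return the head predicate of a logical form such as 'pass(pink3,pink5)'."""
    return logical_form.split("(", 1)[0]


def estimate_word_predicate_probs(noisy_pairs):
    """Estimate p(predicate | word), splitting credit 1/k over the k candidates,
    since noisy supervision does not say which candidate is correct."""
    counts = defaultdict(lambda: defaultdict(float))
    for sentence, candidates in noisy_pairs:
        weight = 1.0 / len(candidates)
        for word in sentence.split():
            for lf in candidates:
                counts[word][predicate(lf)] += weight
    probs = {}
    for word, pred_counts in counts.items():
        total = sum(pred_counts.values())
        probs[word] = {p: c / total for p, c in pred_counts.items()}
    return probs


def p_y_given_x(sentence, candidates, probs):
    """Normalized distribution over the candidate logical forms of one sentence."""
    scores = []
    for lf in candidates:
        pred = predicate(lf)
        scores.append(sum(probs.get(w, {}).get(pred, 0.0) for w in sentence.split()))
    total = sum(scores)
    if total == 0.0:  # no evidence at all: fall back to a uniform distribution
        return {lf: 1.0 / len(candidates) for lf in candidates}
    return {lf: s / total for lf, s in zip(candidates, scores)}


probs = estimate_word_predicate_probs(NOISY_PAIRS)
dist = p_y_given_x("pink3 passes to pink5", ["pass(pink3,pink5)", "kick(pink3)"], probs)
print(dist)
```

Because "passes" co-occurs with a `pass` candidate in both of its sentences but with different distractors, the pass reading ends up preferred even though no pair identifies it as correct.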
