Article Structure

Abstract

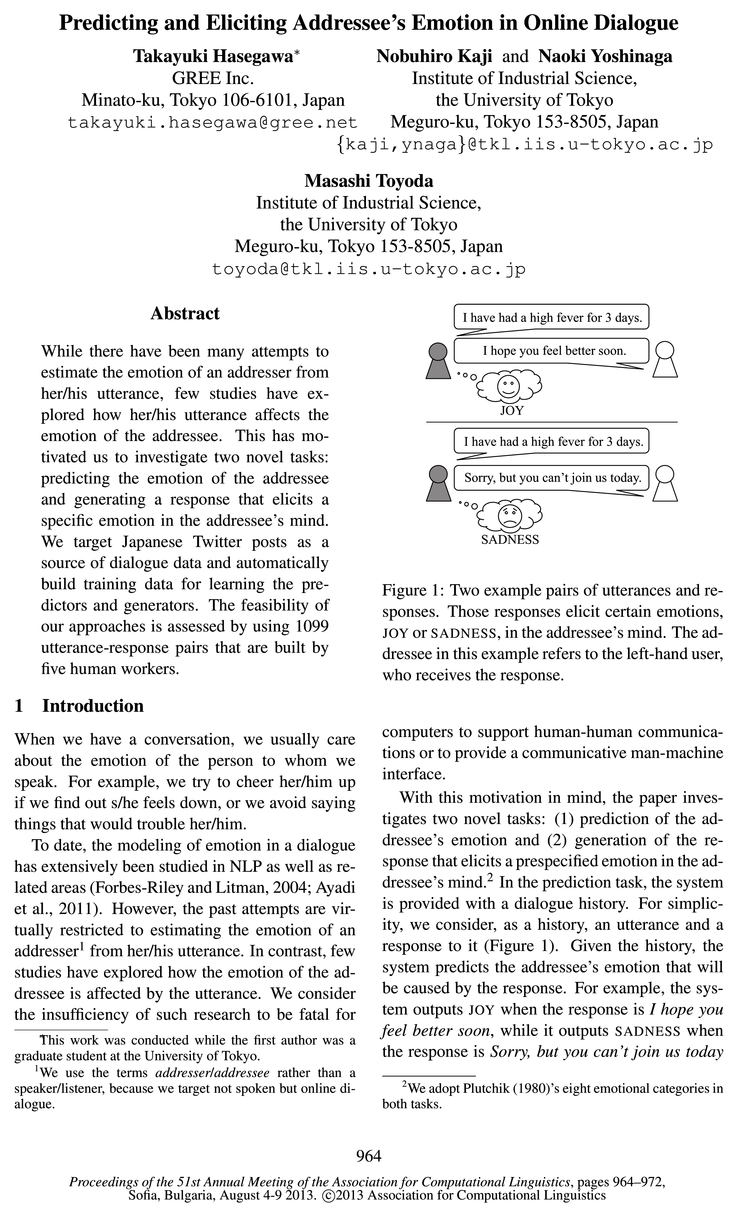

While there have been many attempts to estimate the emotion of an addresser from her/his utterance, few studies have explored how her/his utterance affects the emotion of the addressee.

Introduction

When we have a conversation, we usually care about the emotion of the person to whom we speak.

Emotion-tagged Dialogue Corpus

The key in making a supervised approach to predicting and eliciting addressee’s emotion successful is to obtain large-scale, reliable training data effectually.

Predicting Addressee’s Emotion

This section describes a method for predicting emotion elicited in an addressee when s/he receives a response to her/his utterance.

Eliciting Addressee’s Emotion

This section presents a method for generating a response that elicits the goal emotion, which is one of the emotional categories of Plutchik (1980), in the addressee.

Experiments

5.1 Test data

Related Work

There have been a tremendous amount of studies on predicting the emotion from text or speech data (Ayadi et al., 2011; Bandyopadhyay and Oku-mura, 2011; Balahur et al., 2011; Balahur et al., 2012).

Conclusion and Future Work

In this paper, we have explored predicting and eliciting the emotion of an addressee by using a large amount of dialogue data obtained from mi-croblog posts.

Topics

BLEU

- Each utterance in the test data has more than one responses that elicit the same goal emotion, because they are used to compute BLEU score (see section 5.3).Page 5, “Experiments”

- We first use BLEU score (Papineni et al., 2002) to perform automatic evaluation (Ritter et al., 2011).Page 7, “Experiments”

- In this evaluation, the system is provided with the utterance and the goal emotion in the test data and the generated responses are evaluated through BLEU score.Page 7, “Experiments”

- We tried 04 and [3 in {0.0,0.2,0.4,0.6,0.8, 1.0} and selected the weights that achieved the best BLEU score.Page 7, “Experiments”

- Table 10 compares BLEU scores of three methods including the proposed one.Page 7, “Experiments”

- System BLEUPage 7, “Experiments”

- Table 10: Comparison of BLEU scores.Page 7, “Experiments”

- The second row represents our method, while the last row represents the result of our method when the weights are set as optimal, i.e., those achieving the best BLEU on the test data.Page 7, “Experiments”

- This result can be considered as an upper bound on BLEU score.Page 7, “Experiments”

- We can clearly observe the improvement in the BLEU from 0.64 to 1.05.Page 7, “Experiments”

See all papers in Proc. ACL 2013 that mention BLEU.

See all papers in Proc. ACL that mention BLEU.

Back to top.

translation model

- Following (Ritter et al., 2011), we apply the statistical machine translation model for generating a response to a given utterance.Page 4, “Eliciting Addressee’s Emotion”

- We use GIZA++8 and SRILM9 for learning translation model and 5-gram language model, re-Page 4, “Eliciting Addressee’s Emotion”

- We use the emotion-tagged dialogue corpus to learn eight translation models and language models, each of which is specialized in generating the response that elicits one of the eight emotions (Plutchik, 1980).Page 5, “Eliciting Addressee’s Emotion”

- In this case, the first two utterances are used to learn the translation model , while only the second utterance is used to learn the language model.Page 5, “Eliciting Addressee’s Emotion”

- For all the four features (i.e., two phrase translation probabilities and two lexical weights) derived from translation model , the weights of the adapted model are equally set as 04 (0 S 04 g 1.0).Page 5, “Eliciting Addressee’s Emotion”

- Table 6: The number of utterance pairs used for training classifiers in emotion prediction and learning the translation models and language models in response generation.Page 6, “Experiments”

- We use the utterance pairs summarized in Table 6 to learn the translation models and language models for eliciting each emotional category.Page 7, “Experiments”

- However, for learning the general translation models , we currently use 4 millions of utterance pairs sampled from the 640 millions of pairs due to the computational limitation.Page 7, “Experiments”

See all papers in Proc. ACL 2013 that mention translation model.

See all papers in Proc. ACL that mention translation model.

Back to top.

BLEU score

- Each utterance in the test data has more than one responses that elicit the same goal emotion, because they are used to compute BLEU score (see section 5.3).Page 5, “Experiments”

- We first use BLEU score (Papineni et al., 2002) to perform automatic evaluation (Ritter et al., 2011).Page 7, “Experiments”

- In this evaluation, the system is provided with the utterance and the goal emotion in the test data and the generated responses are evaluated through BLEU score .Page 7, “Experiments”

- We tried 04 and [3 in {0.0,0.2,0.4,0.6,0.8, 1.0} and selected the weights that achieved the best BLEU score .Page 7, “Experiments”

- Table 10 compares BLEU scores of three methods including the proposed one.Page 7, “Experiments”

- Table 10: Comparison of BLEU scores .Page 7, “Experiments”

- This result can be considered as an upper bound on BLEU score .Page 7, “Experiments”

See all papers in Proc. ACL 2013 that mention BLEU score.

See all papers in Proc. ACL that mention BLEU score.

Back to top.

language models

- We use GIZA++8 and SRILM9 for learning translation model and 5-gram language model , re-Page 4, “Eliciting Addressee’s Emotion”

- We use the emotion-tagged dialogue corpus to learn eight translation models and language models , each of which is specialized in generating the response that elicits one of the eight emotions (Plutchik, 1980).Page 5, “Eliciting Addressee’s Emotion”

- In this case, the first two utterances are used to learn the translation model, while only the second utterance is used to learn the language model .Page 5, “Eliciting Addressee’s Emotion”

- On the other hand, we use SRILM for the interpolation of language models .Page 5, “Eliciting Addressee’s Emotion”

- Table 6: The number of utterance pairs used for training classifiers in emotion prediction and learning the translation models and language models in response generation.Page 6, “Experiments”

- We use the utterance pairs summarized in Table 6 to learn the translation models and language models for eliciting each emotional category.Page 7, “Experiments”

- The linear interpolation of translation and/or language models is a widely-used technique for adapting machine translation systems to new domains (Sennrich, 2012).Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention language models.

See all papers in Proc. ACL that mention language models.

Back to top.

n-grams

- We extract all the n-grams (n g 3) in the response to induce (binary) n-gram features.Page 4, “Predicting Addressee’s Emotion”

- The extracted n-grams could indicate a certain action that elicits a specific emotion (e. g., ‘have a fever’ in Table 2), or a style or tone of speaking (e. g., ‘Sorry’).Page 4, “Predicting Addressee’s Emotion”

- Likewise, we extract word n-grams from the addressee’s utterance.Page 4, “Predicting Addressee’s Emotion”

- The extracted n-grams activate another set of binary n-gram features.Page 4, “Predicting Addressee’s Emotion”

- Because word n-grams themselves are likely to be sparse, we estimate the addressers’ emotions from their utterances and exploit them to induce emotion features.Page 4, “Predicting Addressee’s Emotion”

- The training data for these classifiers are the emotion-tagged utterances obtained in Section 2, while the features are n-grams (n g 3)7 in the utterance.Page 4, “Predicting Addressee’s Emotion”

- 7We have excluded n-grams that matched the emotional expressions used in Section 2 to avoid overfitting.Page 4, “Eliciting Addressee’s Emotion”

See all papers in Proc. ACL 2013 that mention n-grams.

See all papers in Proc. ACL that mention n-grams.

Back to top.

binary classifiers

- Although a response could elicit multiple emotions in the addressee, in this paper we focus on predicting the most salient emotion elicited in the addressee and cast the prediction as a single-label multi-class classification problem.5 We then construct a one-versus-the-rest classifier6 by combining eight binary classifiers , each of which predicts whether the response elicits each emotional category.Page 4, “Predicting Addressee’s Emotion”

- We use online passive-aggressive algorithm to train the eight binary classifiers .Page 4, “Predicting Addressee’s Emotion”

- Since the rule-based approach annotates utterances with emotions only when they contain emotional expressions, we independently train for each emotional category a binary classifier that estimates the addresser’s emotion from her/his utterance and apply it to the unlabeled utterances.Page 4, “Predicting Addressee’s Emotion”

- Table 6 lists the number of utterance-response pairs used to train eight binary classifiers for individual emotional categories, which form a one-versus-the rest classifier for the prediction task.Page 6, “Experiments”

See all papers in Proc. ACL 2013 that mention binary classifiers.

See all papers in Proc. ACL that mention binary classifiers.

Back to top.

machine translation

- Following (Ritter et al., 2011), we apply the statistical machine translation model for generating a response to a given utterance.Page 4, “Eliciting Addressee’s Emotion”

- Similar to ordinary machine translation systems, the model is learned from pairs of an utterance and a response by using off-the-shelf tools for machine translation .Page 4, “Eliciting Addressee’s Emotion”

- Unlike machine translation , we do not use reordering models, because the positions of phrases are not considered to correlate strongly with the appropriateness of responses (Ritter et al., 2011).Page 5, “Eliciting Addressee’s Emotion”

- The linear interpolation of translation and/or language models is a widely-used technique for adapting machine translation systems to new domains (Sennrich, 2012).Page 8, “Related Work”

See all papers in Proc. ACL 2013 that mention machine translation.

See all papers in Proc. ACL that mention machine translation.

Back to top.

n-gram

- We extract all the n-grams (n g 3) in the response to induce (binary) n-gram features.Page 4, “Predicting Addressee’s Emotion”

- The extracted n-grams activate another set of binary n-gram features.Page 4, “Predicting Addressee’s Emotion”

- RESPONSE The n-gram and emotion features induced from the response.Page 6, “Experiments”

- The n-gram and emotion features induced from the response and the addressee’s utterance.Page 6, “Experiments”

See all papers in Proc. ACL 2013 that mention n-gram.

See all papers in Proc. ACL that mention n-gram.

Back to top.