Article Structure

Abstract

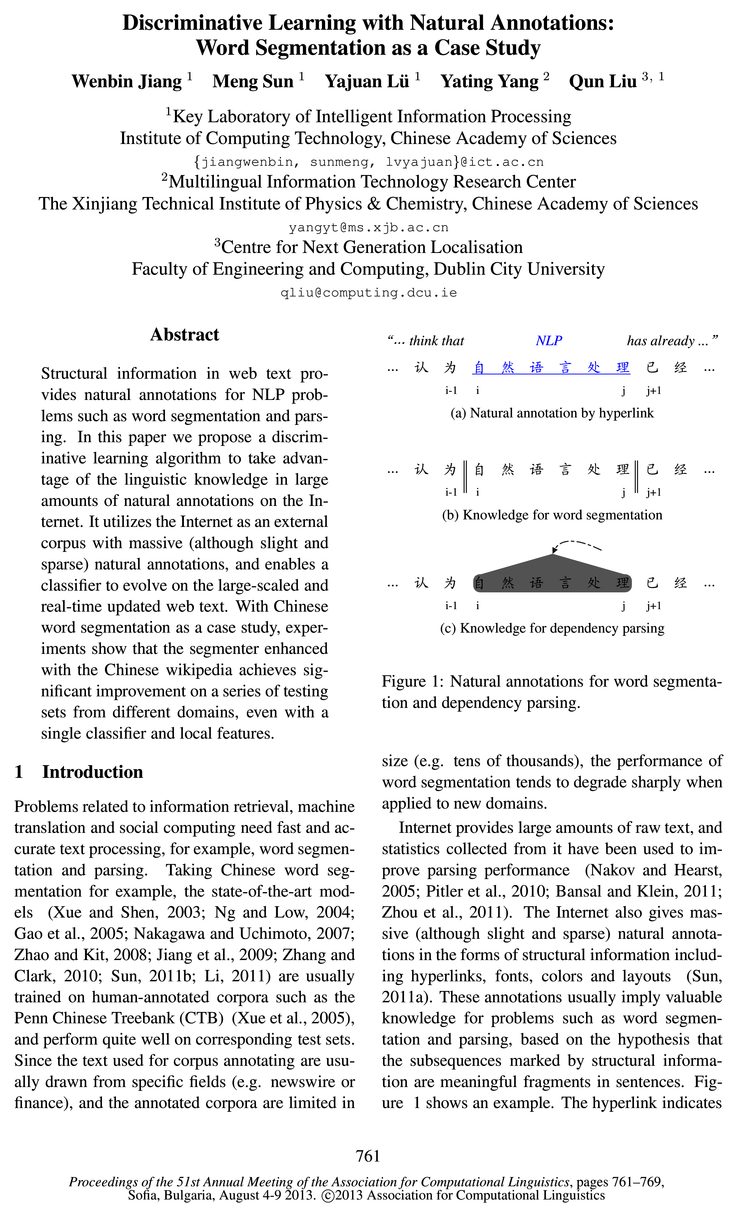

Structural information in web text provides natural annotations for NLP problems such as word segmentation and parsing.

Introduction

Problems related to information retrieval, machine translation and social computing need fast and accurate text processing, for example, word segmentation and parsing.

Character Classification Model

Character classification models for word segmentation factorize the whole prediction into atomic predictions on single characters (Xue and Shen, 2003; Ng and Low, 2004).

Knowledge in Natural Annotations

Web text gives massive natural annotations in the form of structural informations, including hyperlinks, fonts, colors and layouts (Sun, 2011a).

Learning with Natural Annotations

Different from the dense and accurate annotations in human-annotated corpora, natural annotations are sparse and slight, which makes direct training of NLP models impracticable.

Experiments

We use the Penn Chinese Treebank 5.0 (CTB) (Xue et al., 2005) as the existing annotated corpus for Chinese word segmentation.

Related Work

Li and Sun (2009) extracted character classification instances from raw text for Chinese word segmentation, resorting to the indication of punctuation marks between characters.

Conclusion and Future Work

This work presents a novel discriminative learning algorithm to utilize the knowledge in the massive natural annotations on the Internet.

Topics

word segmentation

- Structural information in web text provides natural annotations for NLP problems such as word segmentation and parsing.Page 1, “Abstract”

- With Chinese word segmentation as a case study, experiments show that the segmenter enhanced with the Chinese wikipedia achieves significant improvement on a series of testing sets from different domains, even with a single classifier and local features.Page 1, “Abstract”

- Problems related to information retrieval, machine translation and social computing need fast and accurate text processing, for example, word segmentation and parsing.Page 1, “Introduction”

- Taking Chinese word segmentation for example, the state-of-the-art models (Xue and Shen, 2003; Ng and Low, 2004; Gao et al., 2005; Nakagawa and Uchimoto, 2007; Zhao and Kit, 2008; J iang et al., 2009; Zhang and Clark, 2010; Sun, 2011b; Li, 2011) are usually trained on human-annotated corpora such as the Penn Chinese Treebank (CTB) (Xue et al., 2005), and perform quite well on corresponding test sets.Page 1, “Introduction”

- (b) Knowledge for word segmentationPage 1, “Introduction”

- Figure 1: Natural annotations for word segmentation and dependency parsing.Page 1, “Introduction”

- tens of thousands), the performance of word segmentation tends to degrade sharply when applied to new domains.Page 1, “Introduction”

- These annotations usually imply valuable knowledge for problems such as word segmentation and parsing, based on the hypothesis that the subsequences marked by structural information are meaningful fragments in sentences.Page 1, “Introduction”

- In this work we take for example a most important problem, word segmentation , and propose a novel discriminative learning algorithm to leverage the knowledge in massive natural annotations of web text.Page 2, “Introduction”

- Character classification models for word segmentation usually factorize the whole prediction into atomic predictions on characters (Xue and Shen, 2003; Ng and Low, 2004).Page 2, “Introduction”

- In the rest of the paper, we first briefly introduce the problems of Chinese word segmentation and the character classification model in sectionPage 2, “Introduction”

See all papers in Proc. ACL 2013 that mention word segmentation.

See all papers in Proc. ACL that mention word segmentation.

Back to top.

Chinese word

- With Chinese word segmentation as a case study, experiments show that the segmenter enhanced with the Chinese wikipedia achieves significant improvement on a series of testing sets from different domains, even with a single classifier and local features.Page 1, “Abstract”

- Taking Chinese word segmentation for example, the state-of-the-art models (Xue and Shen, 2003; Ng and Low, 2004; Gao et al., 2005; Nakagawa and Uchimoto, 2007; Zhao and Kit, 2008; J iang et al., 2009; Zhang and Clark, 2010; Sun, 2011b; Li, 2011) are usually trained on human-annotated corpora such as the Penn Chinese Treebank (CTB) (Xue et al., 2005), and perform quite well on corresponding test sets.Page 1, “Introduction”

- In the rest of the paper, we first briefly introduce the problems of Chinese word segmentation and the character classification model in sectionPage 2, “Introduction”

- We use the Penn Chinese Treebank 5.0 (CTB) (Xue et al., 2005) as the existing annotated corpus for Chinese word segmentation.Page 5, “Experiments”

- Table 4: Comparison with state-of-the-art work in Chinese word segmentation.Page 6, “Experiments”

- Table 4 shows the comparison with other work in Chinese word segmentation.Page 6, “Experiments”

- However, we believe this work shows another interesting way to improve Chinese word segmentation, it focuses on the utilization of fuzzy and sparse knowledge on the Internet rather than making full use of a specific human-annotated corpus.Page 6, “Experiments”

- Li and Sun (2009) extracted character classification instances from raw text for Chinese word segmentation, resorting to the indication of punctuation marks between characters.Page 7, “Related Work”

- Sun and Xu (Sun and Xu, 2011) utilized the features derived from large-scaled unlabeled text to improve Chinese word segmentation.Page 7, “Related Work”

- Experiments on Chinese word segmentation show that, the enhanced word segmenter achieves significant improvement on testing sets of different domains, although using a single classifier with only local features.Page 7, “Conclusion and Future Work”

See all papers in Proc. ACL 2013 that mention Chinese word.

See all papers in Proc. ACL that mention Chinese word.

Back to top.

Chinese word segmentation

- With Chinese word segmentation as a case study, experiments show that the segmenter enhanced with the Chinese wikipedia achieves significant improvement on a series of testing sets from different domains, even with a single classifier and local features.Page 1, “Abstract”

- Taking Chinese word segmentation for example, the state-of-the-art models (Xue and Shen, 2003; Ng and Low, 2004; Gao et al., 2005; Nakagawa and Uchimoto, 2007; Zhao and Kit, 2008; J iang et al., 2009; Zhang and Clark, 2010; Sun, 2011b; Li, 2011) are usually trained on human-annotated corpora such as the Penn Chinese Treebank (CTB) (Xue et al., 2005), and perform quite well on corresponding test sets.Page 1, “Introduction”

- In the rest of the paper, we first briefly introduce the problems of Chinese word segmentation and the character classification model in sectionPage 2, “Introduction”

- We use the Penn Chinese Treebank 5.0 (CTB) (Xue et al., 2005) as the existing annotated corpus for Chinese word segmentation .Page 5, “Experiments”

- Table 4: Comparison with state-of-the-art work in Chinese word segmentation .Page 6, “Experiments”

- Table 4 shows the comparison with other work in Chinese word segmentation .Page 6, “Experiments”

- However, we believe this work shows another interesting way to improve Chinese word segmentation , it focuses on the utilization of fuzzy and sparse knowledge on the Internet rather than making full use of a specific human-annotated corpus.Page 6, “Experiments”

- Li and Sun (2009) extracted character classification instances from raw text for Chinese word segmentation , resorting to the indication of punctuation marks between characters.Page 7, “Related Work”

- Sun and Xu (Sun and Xu, 2011) utilized the features derived from large-scaled unlabeled text to improve Chinese word segmentation .Page 7, “Related Work”

- Experiments on Chinese word segmentation show that, the enhanced word segmenter achieves significant improvement on testing sets of different domains, although using a single classifier with only local features.Page 7, “Conclusion and Future Work”

See all papers in Proc. ACL 2013 that mention Chinese word segmentation.

See all papers in Proc. ACL that mention Chinese word segmentation.

Back to top.

perceptron

- Algorithm 1 Perceptron training algorithm.Page 3, “Character Classification Model”

- The classifier can be trained with online learning algorithms such as perceptron , or offline learning models such as support vector machines.Page 3, “Character Classification Model”

- We choose the perceptron algorithm (Collins, 2002) to train the classifier for the character classification-based word segmentation model.Page 3, “Character Classification Model”

- Algorithm 1 shows the perceptron algorithm for tuning the parameter 07.Page 3, “Character Classification Model”

- Algorithm 2 Perceptron learning with natural annotations.Page 4, “Knowledge in Natural Annotations”

- Algorithm 3 Online version of perceptron learning with natural annotations.Page 4, “Learning with Natural Annotations”

- Considering the online characteristic of the perceptron algorithm, if we are able to leverage much more (than the Chinese wikipedia) data with natural annotations, an online version of learning procedure shown in Algorithm 3 would be a better choice.Page 4, “Learning with Natural Annotations”

- Figure 3: Learning curve of the averaged perceptron classifier on the CTB developing set.Page 5, “Experiments”

- We train the baseline perceptron classifier for word segmentation on the training set of CTB 5.0, using the developing set to determine the best training iterations.Page 5, “Experiments”

- Figure 3 shows the learning curve of the averaged perceptron on the developing set.Page 5, “Experiments”

See all papers in Proc. ACL 2013 that mention perceptron.

See all papers in Proc. ACL that mention perceptron.

Back to top.

dependency parsing

- i-1 i j j+1 (c) Knowledge for dependency parsingPage 1, “Introduction”

- Figure 1: Natural annotations for word segmentation and dependency parsing .Page 1, “Introduction”

- a Chinese phrase (meaning NLP), and it probably corresponds to a connected subgraph for dependency parsing .Page 2, “Introduction”

- For dependency parsing , the subsequence P tends to form a connected dependency graph if it contains more than one word.Page 3, “Knowledge in Natural Annotations”

- When enriching the related work during writing, we found a work on dependency parsing (Spitkovsky et al., 2010) who utilized parsing constraints derived from hypertext annotations to improve the unsupervised dependency grammar induction.Page 7, “Related Work”

See all papers in Proc. ACL 2013 that mention dependency parsing.

See all papers in Proc. ACL that mention dependency parsing.

Back to top.

developing set

- Figure 3: Learning curve of the averaged perceptron classifier on the CTB developing set .Page 5, “Experiments”

- We train the baseline perceptron classifier for word segmentation on the training set of CTB 5.0, using the developing set to determine the best training iterations.Page 5, “Experiments”

- Figure 3 shows the learning curve of the averaged perceptron on the developing set .Page 5, “Experiments”

- Figure 4 shows the performance curve of the enhanced classifiers on the developing set of CTB.Page 6, “Experiments”

See all papers in Proc. ACL 2013 that mention developing set.

See all papers in Proc. ACL that mention developing set.

Back to top.

domain adaptation

- It probably provides a simple and effective domain adaptation strategy for already trained models.Page 5, “Learning with Natural Annotations”

- The classifier performs much worse on the domains of chemistry, physics and machinery, it indicates the importance of domain adaptation for word segmentation (Gao et al., 2004; Ma and Way, 2009; Gao et al., 2010).Page 5, “Experiments”

- What is more, since the text on Internet is wide-coveraged and real-time updated, our strategy also helps a word segmenter be more domain adaptive and up to date.Page 7, “Experiments”

- Our strategy, therefore, enables us to build a classifier more domain adaptive and up to date.Page 8, “Conclusion and Future Work”

See all papers in Proc. ACL 2013 that mention domain adaptation.

See all papers in Proc. ACL that mention domain adaptation.

Back to top.

learning algorithm

- In this paper we propose a discriminative learning algorithm to take advantage of the linguistic knowledge in large amounts of natural annotations on the Internet.Page 1, “Abstract”

- In this work we take for example a most important problem, word segmentation, and propose a novel discriminative learning algorithm to leverage the knowledge in massive natural annotations of web text.Page 2, “Introduction”

- The classifier can be trained with online learning algorithms such as perceptron, or offline learning models such as support vector machines.Page 3, “Character Classification Model”

- This work presents a novel discriminative learning algorithm to utilize the knowledge in the massive natural annotations on the Internet.Page 7, “Conclusion and Future Work”

See all papers in Proc. ACL 2013 that mention learning algorithm.

See all papers in Proc. ACL that mention learning algorithm.

Back to top.

segmentation model

- Table 1: Feature templates and instances for character classification-based word segmentation model .Page 2, “Introduction”

- Although natural annotations in web text do not directly support the discriminative training of segmentation models , they do get rid of the implausible candidates for predictions of related characters.Page 2, “Character Classification Model”

- We choose the perceptron algorithm (Collins, 2002) to train the classifier for the character classification-based word segmentation model .Page 3, “Character Classification Model”

- Figure 2: Shrink of searching space for the character classification-based word segmentation model .Page 3, “Character Classification Model”

See all papers in Proc. ACL 2013 that mention segmentation model.

See all papers in Proc. ACL that mention segmentation model.

Back to top.

semi-supervised

- resorting to complicated features, system combination and other semi-supervised technologies.Page 7, “Experiments”

- Lots of efforts have been devoted to semi-supervised methods in sequence labeling and word segmentation (Xu et al., 2008; Suzuki and Isozaki, 2008; Haffari and Sarkar, 2008; Tomanek and Hahn, 2009; Wang et al., 2011).Page 7, “Related Work”

- A semi-supervised method tries to find an optimal hyperplane of both annotated data and raw data, thus to result in a model with better coverage and higher accuracy.Page 7, “Related Work”

- It is fundamentally different from semi-supervised and unsupervised methods in that we aimed to excavate a totally different kind of knowledge, the natural annotations implied by the structural information in web text.Page 7, “Related Work”

See all papers in Proc. ACL 2013 that mention semi-supervised.

See all papers in Proc. ACL that mention semi-supervised.

Back to top.

F-measure

- Experimental results show that, the knowledge implied in the natural annotations can significantly improve the performance of a baseline segmenter trained on CTB 5.0, an F-measure increment of 0.93 points on CTB test set, and an average increment of 1.53 points on 7 other domains.Page 2, “Introduction”

- The performance measurement for word segmentation is balanced F-measure , F = 2PR/ (P + R), a function of precision P and recall R, where P is the percentage of words in segmentation results that are segmented correctly, and R is the percentage of correctly segmented words in the gold standard words.Page 5, “Experiments”

- wikipedia brings an F-measure increment of 0.93 points.Page 6, “Experiments”

See all papers in Proc. ACL 2013 that mention F-measure.

See all papers in Proc. ACL that mention F-measure.

Back to top.

significant improvement

- With Chinese word segmentation as a case study, experiments show that the segmenter enhanced with the Chinese wikipedia achieves significant improvement on a series of testing sets from different domains, even with a single classifier and local features.Page 1, “Abstract”

- Experimental results show that, the knowledge implied in the natural annotations can significantly improve the performance of a baseline segmenter trained on CTB 5.0, an F-measure increment of 0.93 points on CTB test set, and an average increment of 1.53 points on 7 other domains.Page 2, “Introduction”

- Experiments on Chinese word segmentation show that, the enhanced word segmenter achieves significant improvement on testing sets of different domains, although using a single classifier with only local features.Page 7, “Conclusion and Future Work”

See all papers in Proc. ACL 2013 that mention significant improvement.

See all papers in Proc. ACL that mention significant improvement.

Back to top.

Treebank

- Taking Chinese word segmentation for example, the state-of-the-art models (Xue and Shen, 2003; Ng and Low, 2004; Gao et al., 2005; Nakagawa and Uchimoto, 2007; Zhao and Kit, 2008; J iang et al., 2009; Zhang and Clark, 2010; Sun, 2011b; Li, 2011) are usually trained on human-annotated corpora such as the Penn Chinese Treebank (CTB) (Xue et al., 2005), and perform quite well on corresponding test sets.Page 1, “Introduction”

- We use the Penn Chinese Treebank 5.0 (CTB) (Xue et al., 2005) as the existing annotated corpus for Chinese word segmentation.Page 5, “Experiments”

- In parsing, Pereira and Schabes (1992) proposed an extended inside-outside algorithm that infers the parameters of a stochastic CFG from a partially parsed treebank .Page 7, “Related Work”

See all papers in Proc. ACL 2013 that mention Treebank.

See all papers in Proc. ACL that mention Treebank.

Back to top.