Article Structure

Abstract

We present a method for automatically generating input parsers from English specifications of input file formats.

Introduction

The general problem of translating natural language specifications into executable code has been around since the field of computer science was founded.

Related Work

Learning Meaning Representation from Text Mapping sentences into structural meaning representations is an active and extensively studied task in NLP.

Problem Formulation

The task of translating text specifications to input parsers consists of two steps, as shown in Figure 3.

Model

We use two kinds of information to bias our model: (1) the quality of the generated code as measured by its ability to read the given input examples and (2) the features over the observed text 212i and the hidden specification tree ti (this is standard in traditional parsing problems).

Experimental Setup

Datasets: Our dataset consists of problem descriptions from ACM International Collegiate Programming Contests.6 We collected 106 problems from ACM-ICPC training websites.7 From each problem description, we extracted the portion that provides input specifications.

Experimental Results

Comparison with Baselines Table 3 presents the performance of various models in predicting correct specification trees.

Conclusion

It is standard practice to write English language specifications for input formats.

Topics

natural language

- We use a Bayesian generative model to capture relevant natural language phenomena and translate the English specification into a specification tree, which is then translated into a C++ input parser.Page 1, “Abstract”

- The general problem of translating natural language specifications into executable code has been around since the field of computer science was founded.Page 1, “Introduction”

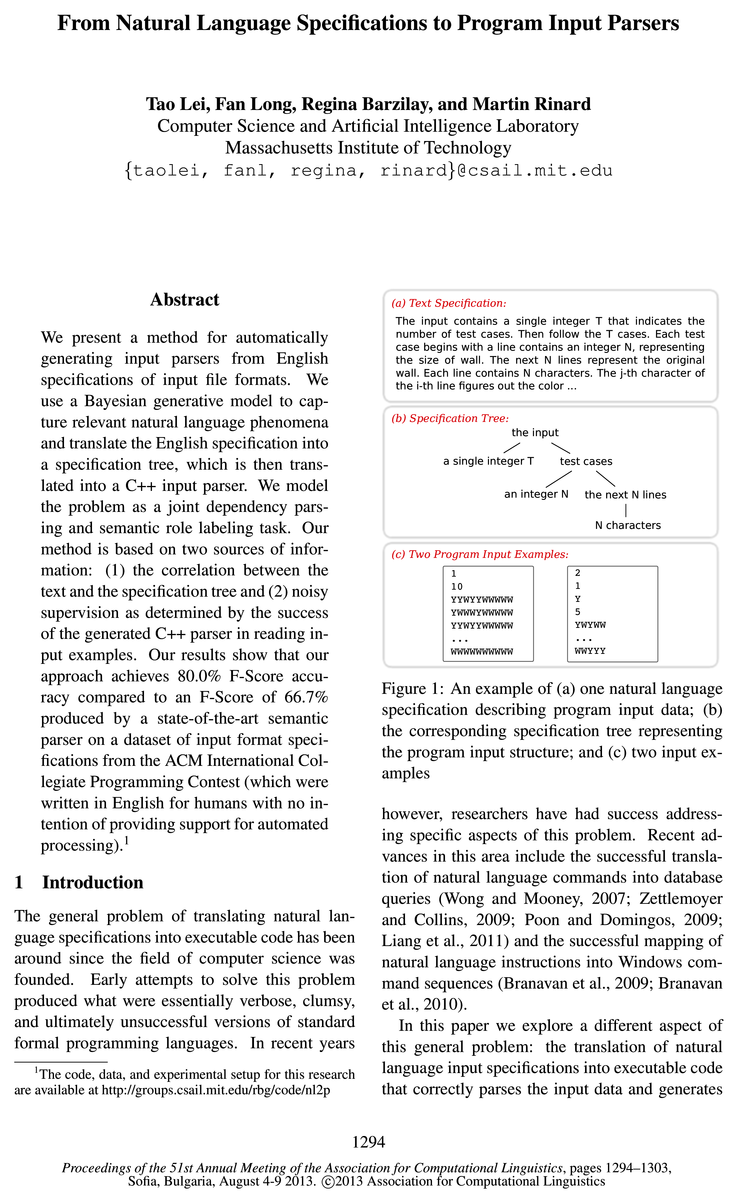

- Figure 1: An example of (a) one natural language specification describing program input data; (b) the corresponding specification tree representing the program input structure; and (c) two input examplesPage 1, “Introduction”

- Recent advances in this area include the successful translation of natural language commands into database queries (Wong and Mooney, 2007; Zettlemoyer and Collins, 2009; Poon and Domingos, 2009; Liang et al., 2011) and the successful mapping of natural language instructions into Windows command sequences (Branavan et al., 2009; Branavan et al., 2010).Page 1, “Introduction”

- In this paper we explore a different aspect of this general problem: the translation of natural language input specifications into executable code that correctly parses the input data and generatesPage 1, “Introduction”

- The need to automate this task arises because input format specifications are almost always described in natural languages , with these specifications then manually translated by a programmer into the code for reading the program inputs.Page 2, “Introduction”

- NLP in Software Engineering Researchers have recently developed a number of approaches that apply natural language processing techniques to software engineering problems.Page 3, “Related Work”

- This research analyzes natural language in documentation or comments to better understand existing application programs.Page 3, “Related Work”

- Our mechanism, in contrast, automatically generates parser programs from natural language input format descriptions.Page 3, “Related Work”

- Finally, it generates natural language feature observations conditioned on the hidden specification trees.Page 4, “Model”

- We define a range of features that capture the correspondence between the input format and its description in natural language .Page 5, “Model”

See all papers in Proc. ACL 2013 that mention natural language.

See all papers in Proc. ACL that mention natural language.

Back to top.

F-Score

- Our results show that our approach achieves 80.0% F-Score accuracy compared to an F-Score of 66.7% produced by a state-of-the-art semantic parser on a dataset of input format specifications from the ACM International Collegiate Programming Contest (which were written in English for humans with no intention of providing support for automated processing).1Page 1, “Abstract”

- However, when trained using the noisy supervision, our method achieves substantially more accurate translations than a state-of-the-art semantic parser (Clarke et al., 2010) (specifically, 80.0% in F—Score compared to an F-Score of 66.7%).Page 2, “Introduction”

- The strength of our model in the face of such weak supervision is also highlighted by the fact that it retains an F-Score of 77% even when only one input example is provided for each inputPage 2, “Introduction”

- Model | Recall ‘ Precision | F-Score ‘Page 7, “Experimental Setup”

- The two versions achieve very close performance (80% vs 84% in F-Score ), even though Full Model is trained with noisy feedback.Page 8, “Experimental Results”

See all papers in Proc. ACL 2013 that mention F-Score.

See all papers in Proc. ACL that mention F-Score.

Back to top.

noun phrases

- As input, we are given a set of text specifications w = {2121, - - - ,wN}, where each w is a text specification represented as a sequence of noun phrases We use UIUC shallow parser to preprocess each text specificaton into a sequence of the noun phrases.4 In addition, we are given a set of input examples for each wi.Page 3, “Problem Formulation”

- Our model predicts specification trees 1: = {751, - - - ,tN } for the text specifications, where each specification tree ti is a dependency tree over noun phrases In general many program input formats are nested tree structures, in which the tree root denotes the entire chunk of program input data and each chunk (tree node) can be further divided into sub-chunks or primitive fields that appear in the program input (see Figure 3).Page 4, “Problem Formulation”

- 0 Generating Specification Tree: For each text specification, draw a specification tree 75 from all possible trees over the sequence of noun phrases in this specification.Page 4, “Model”

- For example, at the unigram level we aim to capture that noun phrases containing specific words such as “cases” and “lines” may be key phrases (correspond to data chunks appear in the input), and that verbs such as “contain” may indicate that the next noun phrase is a key phrase.Page 5, “Model”

- Total # of words 7330 Total # of noun phrases 1829 Vocabulary size 781 Avg.Page 6, “Model”

See all papers in Proc. ACL 2013 that mention noun phrases.

See all papers in Proc. ACL that mention noun phrases.

Back to top.

generative model

- We use a Bayesian generative model to capture relevant natural language phenomena and translate the English specification into a specification tree, which is then translated into a C++ input parser.Page 1, “Abstract”

- We combine these two kinds of information into a Bayesian generative model in which the code quality of the specification tree is captured by the prior probability P (t) and the feature observations are encoded in the likelihood probability P (w|t).Page 4, “Model”

- We assume the generative model operates by first generating the model parameters from a set of Dirichlet distributions.Page 4, “Model”

- 0 Generating Model Parameters: For every pair of feature type f and phrase tag 2, draw a multinomial distribution parameter 63 from a Dirichlet prior P(6§;).Page 4, “Model”

See all papers in Proc. ACL 2013 that mention generative model.

See all papers in Proc. ACL that mention generative model.

Back to top.

generative process

- We model our problem as a joint dependency parsing and role labeling task, assuming a Bayesian generative process .Page 2, “Introduction”

- While previous approaches rely on the feedback to train a discriminative prediction model, our approach models a generative process to guide structure predictions when the feedback is noisy or unavailable.Page 3, “Related Work”

- Modeling the Generative Process .Page 4, “Model”

- The generative process is described formally as follows:Page 4, “Model”

See all papers in Proc. ACL 2013 that mention generative process.

See all papers in Proc. ACL that mention generative process.

Back to top.

model parameters

- We assume the generative model operates by first generating the model parameters from a set of Dirichlet distributions.Page 4, “Model”

- 0 Generating Model Parameters : For every pair of feature type f and phrase tag 2, draw a multinomial distribution parameter 63 from a Dirichlet prior P(6§;).Page 4, “Model”

- Learning the Model During inference, we want to estimate the hidden specification trees 1: given the observed natural language specifications w, after integrating the model parameters out, i.e.Page 5, “Model”

- Estimate model parameters : 0’ = E [0/‘W,t_i]Page 6, “Model”

See all papers in Proc. ACL 2013 that mention model parameters.

See all papers in Proc. ACL that mention model parameters.

Back to top.

role labeling

- We model the problem as a joint dependency parsing and semantic role labeling task.Page 1, “Abstract”

- We model our problem as a joint dependency parsing and role labeling task, assuming a Bayesian generative process.Page 2, “Introduction”

- We formalize the learning problem as a dependency parsing and role labeling problem.Page 4, “Problem Formulation”

- In addition, the role labeling problem is to assign a tag to each noun phrase in a specification tree, indicating whether the phrase is a key phrase or a background phrase.Page 4, “Problem Formulation”

See all papers in Proc. ACL 2013 that mention role labeling.

See all papers in Proc. ACL that mention role labeling.

Back to top.

dependency parsing

- We model the problem as a joint dependency parsing and semantic role labeling task.Page 1, “Abstract”

- We model our problem as a joint dependency parsing and role labeling task, assuming a Bayesian generative process.Page 2, “Introduction”

- We formalize the learning problem as a dependency parsing and role labeling problem.Page 4, “Problem Formulation”

See all papers in Proc. ACL 2013 that mention dependency parsing.

See all papers in Proc. ACL that mention dependency parsing.

Back to top.

semantic parser

- Our results show that our approach achieves 80.0% F-Score accuracy compared to an F-Score of 66.7% produced by a state-of-the-art semantic parser on a dataset of input format specifications from the ACM International Collegiate Programming Contest (which were written in English for humans with no intention of providing support for automated processing).1Page 1, “Abstract”

- However, when trained using the noisy supervision, our method achieves substantially more accurate translations than a state-of-the-art semantic parser (Clarke et al., 2010) (specifically, 80.0% in F—Score compared to an F-Score of 66.7%).Page 2, “Introduction”

- The second baseline Aggressive is a state-of-the-art semantic parsing framework (Clarke et al., 2010).8 The framework repeatedly predicts hidden structures (specification trees in our case) using a structure learner, and trains the structure learner based on the execution feedback of its predictions.Page 7, “Experimental Setup”

See all papers in Proc. ACL 2013 that mention semantic parser.

See all papers in Proc. ACL that mention semantic parser.

Back to top.