Article Structure

Abstract

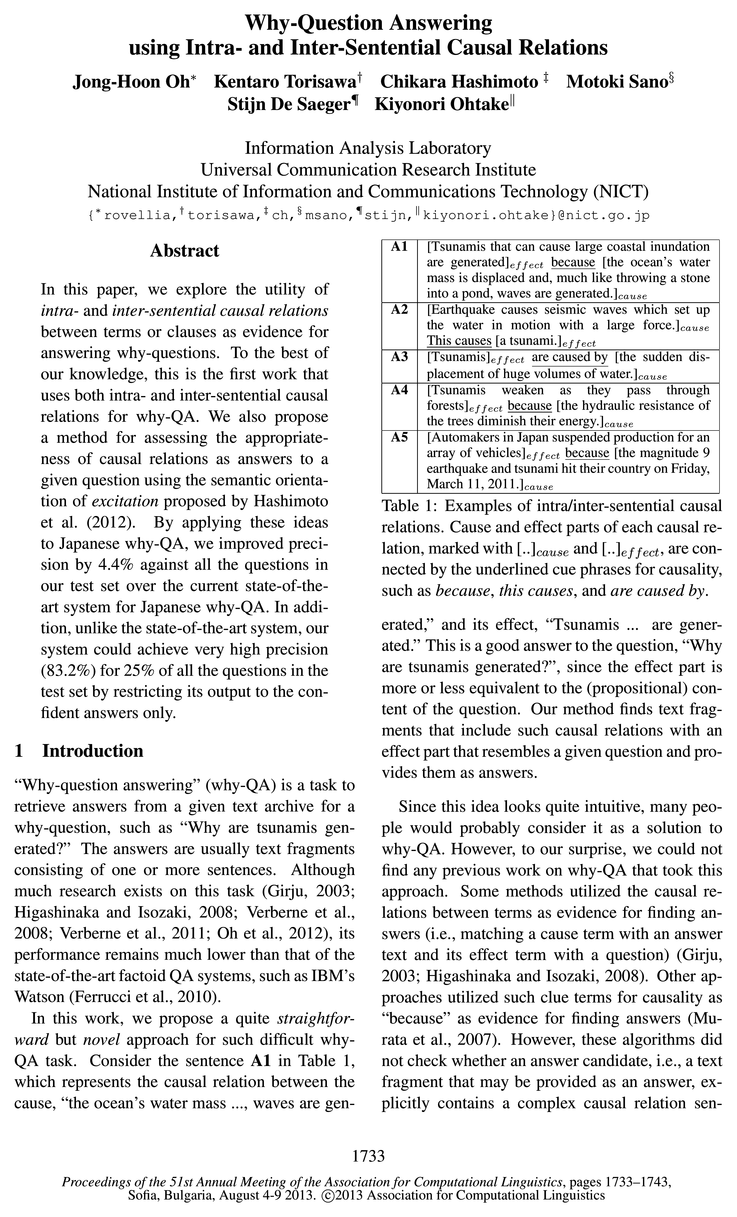

In this paper, we explore the utility of intra- and inter-sentential causal relations between terms or clauses as evidence for answering why-questions.

Introduction

“Why-question answering” (why-QA) is a task to retrieve answers from a given text archive for a why-question, such as “Why are tsunamis generated?” The answers are usually text fragments consisting of one or more sentences.

Related Work

Although there were many previous works on the acquisition of intra- and inter-sentential causal relations from texts (Khoo et al., 2000; Girju, 2003; Inui and Okumura, 2005; Chang and Choi, 2006; Torisawa, 2006; Blanco et al., 2008; De Saeger et al., 2009; De Saeger et al., 2011; Riaz and Girju, 2010; Do et al., 2011; Radinsky et al., 2012), their application to why-QA was limited to causal relations between terms (Girju, 2003; Higashinaka and Isozaki, 2008).

System Architecture

We first describe the system architecture of our QA system before describing our proposed method.

Causal Relations for Why-QA

We describe causal relation recognition in Section 4.1 and describe the features (of our re-ranker) generated from causal relations in Section 4.2.

Experiments

We experimented with causal relation recognition and why-QA with our causal relation features.

Conclusion

In this paper, we explored the utility of intra- and inter-sentential causal relations for ranking answer candidates to why-questions.

Topics

content word

- We retrieved documents from Japanese web texts using Boolean AND and OR queries generated from the content words in why-questions. Page 3, “System Architecture”

- A bunsetsu is a syntactic constituent composed of a content word and several function words such as postpositions and case markers. Page 4, “Causal Relations for Why-QA”

- Our term matching method judges that a causal relation is a candidate of an appropriate causal relation if its effect part contains at least one content word (nouns, verbs, and adjectives) in the question. Page 5, “Causal Relations for Why-QA”

- The n-grams of tf1 and tf2 are restricted to those containing at least one content word in a question. Page 5, “Causal Relations for Why-QA”

- Our partial tree matching method judges a causal relation as a candidate of an appropriate causal relation if its effect part contains at least one partial tree in a question, where the partial tree covers more than one content word. Page 6, “Causal Relations for Why-QA”

- The syntactic dependency n-grams in pf1 and pf2 are restricted to those that contain at least one content word in a question. Page 6, “Causal Relations for Why-QA”

- We distinguish this matched content word from the other content words in the n-gram by converting it to QW, which represents a content word in the question. Page 6, “Causal Relations for Why-QA”
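
The term matching test quoted above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `matches_by_term` is hypothetical, tokens are assumed to be already lemmatized, and the question's content words are assumed to be given as a set. The paper works on Japanese text and its actual implementation is not shown in these excerpts.

```python
def matches_by_term(effect_tokens, question_content_words):
    """Return True if the effect part shares at least one content word with the question.

    Hypothetical helper: both arguments are assumed to hold lemmatized tokens.
    """
    return any(token in question_content_words for token in effect_tokens)


# Toy data for "Why is a tsunami generated?" (content words chosen by hand).
question_content_words = {"tsunami", "generate"}
print(matches_by_term(["this", "cause", "tsunami"], question_content_words))
print(matches_by_term(["earthquake", "occur"], question_content_words))
```

A relation that passes this test is only a candidate; per the excerpts, the final judgment is left to the re-ranker.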

See all papers in Proc. ACL 2013 that mention content word.

n-grams

- These previous studies took basically bag-of-words approaches and used the semantic knowledge to identify certain semantic associations using terms and n-grams. Page 2, “Related Work”

- employed three types of features for training the re-ranker: morphosyntactic features (n-grams of morphemes and syntactic dependency chains), semantic word class features (semantic word classes obtained by automatic word clustering (Kazama and Torisawa, 2008)) and sentiment polarity features (word and phrase polarities). Page 3, “System Architecture”

- Table 4: Causal relation features: n in n-grams is n = {2, 3} and n-grams in an effect part are distinguished from those in a cause part. Page 5, “Causal Relations for Why-QA”

- The n-grams of tf1 and tf2 are restricted to those containing at least one content word in a question. Page 5, “Causal Relations for Why-QA”

- For example, word 3-gram “this/cause/QW” is extracted from This causes tsunamis in A2 for “Why is a tsunami generated?” Further, we create a word class version of word n-grams by converting the words in these word n-grams into their corresponding word class using the semantic word classes (500 classes for 5.5 million nouns) from our previous work (Oh et al., 2012). Page 5, “Causal Relations for Why-QA”

- The syntactic dependency n-grams in pf1 and pf2 are restricted to those that contain at least one content word in a question. Page 6, “Causal Relations for Why-QA”
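
The “this/cause/QW” example can be reproduced with a small sketch of the word n-gram features. It assumes lemmatized tokens and a hand-picked set of question content words; the function name `qw_ngrams` is hypothetical. As a simplification, every token that matches a question content word is rewritten to QW, and the split between cause-part and effect-part n-grams is left out.

```python
def qw_ngrams(tokens, question_content_words, sizes=(2, 3)):
    """Build word n-grams (n = 2, 3) that contain at least one question content word.

    Matched content words are replaced by the placeholder "QW" (simplified sketch).
    """
    marked = ["QW" if t in question_content_words else t for t in tokens]
    features = []
    for n in sizes:
        for start in range(len(marked) - n + 1):
            gram = marked[start:start + n]
            if "QW" in gram:  # keep only n-grams tied to the question
                features.append("/".join(gram))
    return features


# "This causes tsunamis" against "Why is a tsunami generated?"
print(qw_ngrams(["this", "cause", "tsunami"], {"tsunami", "generate"}))
# → ['cause/QW', 'this/cause/QW']
```

The word class version described in the excerpts would apply the same procedure after mapping each word to its semantic class, which needs the clustering resource and is not sketched here.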

sequence labeling

- To meet this challenge, we developed a sequence labeling method that identifies not only intra-sentential causal relations, i.e., the causal relations between two terms/phrases/clauses expressed in a single sentence (e.g., A1 in Table 1), but also the inter-sentential causal relations, which are the causal relations between two terms/phrases/clauses expressed in two adjacent sentences (e.g., A2) in a given text fragment. Page 2, “Introduction”

- We regard this task as a sequence labeling problem and use Conditional Random Fields (CRFs) (Lafferty et al., 2001) as a machine learning framework. Page 4, “Causal Relations for Why-QA”

- Fig 2 shows an example of such sequence labeling. Page 4, “Causal Relations for Why-QA”

- Although this example is about sequential labeling shown on English sentences for ease of explanation, it was actually done on Japanese sentences. Page 4, “Causal Relations for Why-QA”

- Figure 2: Recognizing causal relations by sequence labeling: Underlined text This causes represents a c-marker, and EOS and EOA represent end-of-sentence and end-of-answer candidates. Page 4, “Causal Relations for Why-QA”

CRFs

- We regard this task as a sequence labeling problem and use Conditional Random Fields (CRFs) (Lafferty et al., 2001) as a machine learning framework. Page 4, “Causal Relations for Why-QA”

- In our task, CRFs take three sentences of a causal relation candidate as input and generate their cause-effect annotations with a set of possible cause-effect IOB labels, including Begin-Cause (BC), Inside-Cause (IC), Begin-Effect (BE), Inside-Effect (IE), and Outside (O). Page 4, “Causal Relations for Why-QA”

- We used the three types of feature sets in Table 3 for training the CRFs, where j is in the range of $i - 4 \le j \le i + 4$ for current position i in a causal relation candidate. Page 4, “Causal Relations for Why-QA”

- Table 3: Features for training CRFs, where Page 4, “Causal Relations for Why-QA”
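
To make the IOB label set concrete, the sketch below turns a label sequence into cause and effect spans. It covers only the decoding step and assumes that labels have already been predicted by some sequence labeler. The function name `decode_spans` and the toy English tokens are hypothetical; the paper labels Japanese text.

```python
# Map each cause-effect IOB label to the span type it belongs to ("O" is absent).
KIND = {"BC": "cause", "IC": "cause", "BE": "effect", "IE": "effect"}


def decode_spans(tokens, labels):
    """Group tokens into cause and effect spans according to BC/IC/BE/IE/O labels."""
    spans = {"cause": [], "effect": []}
    kind, words = None, []
    for token, label in zip(tokens, labels):
        new_kind = KIND.get(label)  # None for the Outside label "O"
        # Close the open span when a new one begins or the span type changes.
        if words and (label.startswith("B") or new_kind != kind):
            spans[kind].append(" ".join(words))
            words = []
        if new_kind is not None:
            words.append(token)
        kind = new_kind
    if words:
        spans[kind].append(" ".join(words))
    return spans


tokens = ["the", "seabed", "moves", "This", "causes", "tsunamis"]
labels = ["BC", "IC", "IC", "O", "O", "BE"]
print(decode_spans(tokens, labels))
# → {'cause': ['the seabed moves'], 'effect': ['tsunamis']}
```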

feature sets

- We used the three types of feature sets in Table 3 for training the CRFs, where j is in the range of $i - 4 \le j \le i + 4$ for current position i in a causal relation candidate. Page 4, “Causal Relations for Why-QA”

- More detailed information concerning the configurations of all the nouns in all the candidates of an appropriate causal relation (including their cause parts) and the question are encoded into our feature set ef1 to ef4 in Table 4 and the final judgment is done by our re-ranker. Page 7, “Causal Relations for Why-QA”

- We evaluated the performance when we removed one of the three types of features (ALL-“MORPH”, ALL-“SYNTACTIC” and ALL-“C-MARKER”) and compared the results in these settings with the one when all the feature sets were used (ALL). Page 8, “Experiments”

- We confirmed that all the feature sets improved the performance, and we got the best performance when using all of them. Page 8, “Experiments”
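
The context window in the first excerpt, positions j within four tokens on either side of the current position i, can be sketched as follows. This is a simplified illustration: it emits only surface tokens keyed by relative offset, whereas the feature sets in Table 3 also draw on syntactic and c-marker information that these excerpts do not detail. The name `window_features` and the key format are hypothetical.

```python
def window_features(tokens, i, width=4):
    """Collect tokens at positions j with i - width <= j <= i + width.

    Positions that fall outside the sequence are skipped.
    """
    features = {}
    for j in range(i - width, i + width + 1):
        if 0 <= j < len(tokens):
            features[f"w[{j - i:+d}]"] = tokens[j]  # key is the offset from i
    return features


tokens = list("abcdefghij")
print(window_features(tokens, 5))
```

In a CRF toolkit, a dictionary like this would typically be produced once per token position and passed in as that position's observation features.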

baseline system

- the result for our baseline system that recognizes a causal relation by simply taking the two phrases adjacent to a c-marker (i.e., before and after) as cause and effect parts of the causal relation. Page 8, “Experiments”

- From these results, we confirmed that our method recognized both intra- and inter-sentential causal relations with over 80% precision, and it significantly outperformed our baseline system in both precision and recall rates. Page 8, “Experiments”

- In this experiment, we compared five systems: four baseline systems (MURATA, OURCF, OH and OH+PREVCF) and our proposed method (PROPOSED). Page 8, “Experiments”
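
The adjacency baseline for causal relation recognition in the first excerpt is simple enough to sketch directly. The sketch assumes the text has already been split into phrases and that the index of the c-marker phrase is known. It also assumes the phrase before the marker is the cause and the phrase after it is the effect; the excerpt does not spell out that ordering. The function name `baseline_relation` is hypothetical.

```python
def baseline_relation(phrases, marker_index):
    """Take the phrases right before and right after a c-marker as cause and effect.

    Returns None when the marker has no neighbor on one side.
    Assumption: before = cause, after = effect.
    """
    if marker_index <= 0 or marker_index >= len(phrases) - 1:
        return None
    return {
        "cause": phrases[marker_index - 1],
        "effect": phrases[marker_index + 1],
    }


phrases = ["the seabed moves suddenly", "because of this", "a tsunami is generated"]
print(baseline_relation(phrases, 1))
```

Because it looks only one phrase to each side, such a baseline cannot recover causes or effects that span several phrases or sentences, which is the gap the sequence labeling method targets.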

Regular expressions

- We identify cue phrases for causality in answer candidates using the regular expressions in Table 2. Page 3, “Causal Relations for Why-QA”

- Regular expressions | Examples. Page 4, “Causal Relations for Why-QA”

- Table 2: Regular expressions for identifying cue phrases for causality. Page 4, “Causal Relations for Why-QA”
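
Cue phrase detection with regular expressions might look like the sketch below. The patterns are hypothetical English stand-ins chosen for illustration; the expressions in Table 2 target Japanese cue phrases and are not reproduced in these excerpts. The names `CUE_PATTERNS` and `find_c_markers` are likewise assumptions.

```python
import re

# Hypothetical English cue phrases; NOT the patterns from Table 2.
CUE_PATTERNS = [
    re.compile(r"\bbecause(?: of)?\b", re.IGNORECASE),
    re.compile(r"\bthis causes?\b", re.IGNORECASE),
    re.compile(r"\bas a result\b", re.IGNORECASE),
]


def find_c_markers(text):
    """Return (start offset, matched cue phrase) pairs sorted by position."""
    hits = []
    for pattern in CUE_PATTERNS:
        for match in pattern.finditer(text):
            hits.append((match.start(), match.group(0)))
    return sorted(hits)


print(find_c_markers("The seabed moves. This causes tsunamis."))
```

Each match marks a position around which a causal relation candidate can then be examined.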
