Article Structure

Abstract

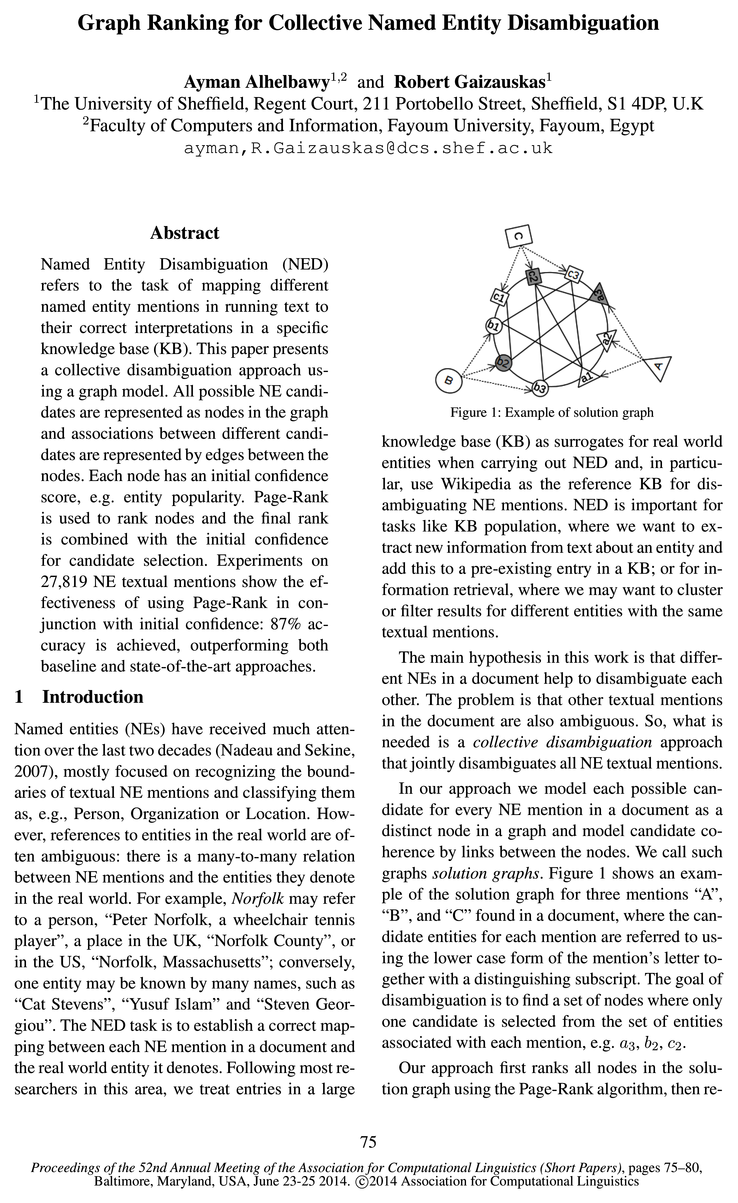

Named Entity Disambiguation (NED) refers to the task of mapping different named entity mentions in running text to their correct interpretations in a specific knowledge base (KB).

Introduction

Named entities (NEs) have received much attention over the last two decades (Nadeau and Sekine, 2007), mostly focused on recognizing the boundaries of textual NE mentions and classifying them as, e.g., Person, Organization or Location.

Related Work

In 2009, NIST proposed the shared task challenge of Entity Linking (EL) (McNamee and Dang, 2009).

Solution Graph

In this section we discuss the construction of a graph representation that we call the solution 1Graph models are also widely used in Word Sense Dis-

Topics

named entity

- Named Entity Disambiguation (NED) refers to the task of mapping different named entity mentions in running text to their correct interpretations in a specific knowledge base (KB).Page 1, “Abstract”

- Named entities (NEs) have received much attention over the last two decades (Nadeau and Sekine, 2007), mostly focused on recognizing the boundaries of textual NE mentions and classifying them as, e.g., Person, Organization or Location.Page 1, “Introduction”

- Named Entity DisambiguationPage 1, “Introduction”

- The second line of approach is collective named entity disambiguation (CNED), where all mentions of entities in the document are disambiguated jointly.Page 2, “Related Work”

- cos: The cosine similarity between the named entity textual mention and the KB entry title.Page 3, “Solution Graph”

- Named Entity Selection: The simplest approach is to select the highest ranked entity in the list for each mention mi according to equation 5, where R could refer to Rm or R5.Page 4, “Solution Graph”

- Our results show that Page-Rank in conjunction with re-ranking by initial confidence score can be used as an effective approach to collectively disambiguate named entity textual mentions in a document.Page 5, “Solution Graph”

See all papers in Proc. ACL 2014 that mention named entity.

See all papers in Proc. ACL that mention named entity.

Back to top.

confidence score

- Each node has an initial confidence score , e.g.Page 1, “Abstract”

- Each candidate has associated with it an initial confidence score , also detailed below.Page 3, “Solution Graph”

- Initial confidence scores of all candidates for a single NE mention are normalized to sum to l.Page 3, “Solution Graph”

- One is a setup where a ranking based solely on different initial confidence scores is usedPage 4, “Solution Graph”

- We used the best initial confidence score (Freebase) for re-ranking.Page 5, “Solution Graph”

- Our results show that Page-Rank in conjunction with re-ranking by initial confidence score can be used as an effective approach to collectively disambiguate named entity textual mentions in a document.Page 5, “Solution Graph”

See all papers in Proc. ACL 2014 that mention confidence score.

See all papers in Proc. ACL that mention confidence score.

Back to top.

edge weights

- initial node and edge weights set to 1, edges being created wherever REF or J Prob are not zero).Page 5, “Solution Graph”

- In the first experiment, referred to as PR1, initial confidence is used as an initial node rank for PR and edge weights are uniform, edges, as in the PR baseline, being created wherever REF or J Prob are not zero.Page 5, “Solution Graph”

- In our second experiment, PRC, entity coherence features are tested by setting the edge weights to the coherence score and using uniform initial node weights.Page 5, “Solution Graph”

- edge weighting approaches, where for each approach edges were created only where the coherence score according to the approach was nonzero.Page 5, “Solution Graph”

- We also investigated a variant, called J Prob + Ref, in which the Ref edge weights are normalized to sum to 1 over the whole graph and then added to the J Prob edge weights (here edges result wherever J Prob or Ref scores are nonzero).Page 5, “Solution Graph”

See all papers in Proc. ACL 2014 that mention edge weights.

See all papers in Proc. ACL that mention edge weights.

Back to top.

cosine similarity

- cos: The cosine similarity between the named entity textual mention and the KB entry title.Page 3, “Solution Graph”

- ijim: While the cosine similarity between a textual mention in the document and the candidatePage 3, “Solution Graph”

- The cosine similarity between “Essex” and “Danbury, Essex” is higher than that between “Essex” and “Essex County Cricket Club”, which is not helpful in the NED setting.Page 3, “Solution Graph”

- ctxt: The cosine similarity between the sentence containing the NE mention in the query document and the textual description of the candidate NE in the KB (we use the first section of the Wikipedia article as the candidate entity description).Page 3, “Solution Graph”

See all papers in Proc. ACL 2014 that mention cosine similarity.

See all papers in Proc. ACL that mention cosine similarity.

Back to top.