Article Structure

Abstract

We present a structured learning approach to inducing hypernym taxonomies using a probabilistic graphical model formulation.

Introduction

Many tasks in natural language understanding, such as question answering, information extraction, and textual entailment, benefit from lexical semantic information in the form of types and hy-pernyms.

Structured Taxonomy Induction

Given an input term set a: = {$1,302, .

Features

While spanning trees are familiar from non-projective dependency parsing, features based on the linear order of the words or on lexical identi-

Related Work

In our work, we assume a known term set and do not address the problem of extracting related terms from text.

Experiments

5.1 Data and Experimental Regime

Analysis

Table 3 shows some of the hypernymy and siblinghood features given highest weight by our model (in general-setup development experiments).

Conclusion

Our approach to taxonomy induction allows heterogeneous information sources to be combined and balanced in an error-driven way.

Topics

WordNet

- To train the system, we extract substructures of WordNet and dis-criminatively learn to reproduce them, using adaptive subgradient stochastic optimization.Page 1, “Abstract”

- On the task of reproducing sub-hierarchies of WordNet , our approach achieves a 51% error reduction over a chance baseline, including a 15% error reduction due to the non-hypernym—factored sibling features.Page 1, “Abstract”

- However, currently available taxonomies such as WordNet are incomplete in coverage (Pennacchiotti and Pantel, 2006; Hovy et al., 2009), unavailable in many domains and languages, andPage 1, “Introduction”

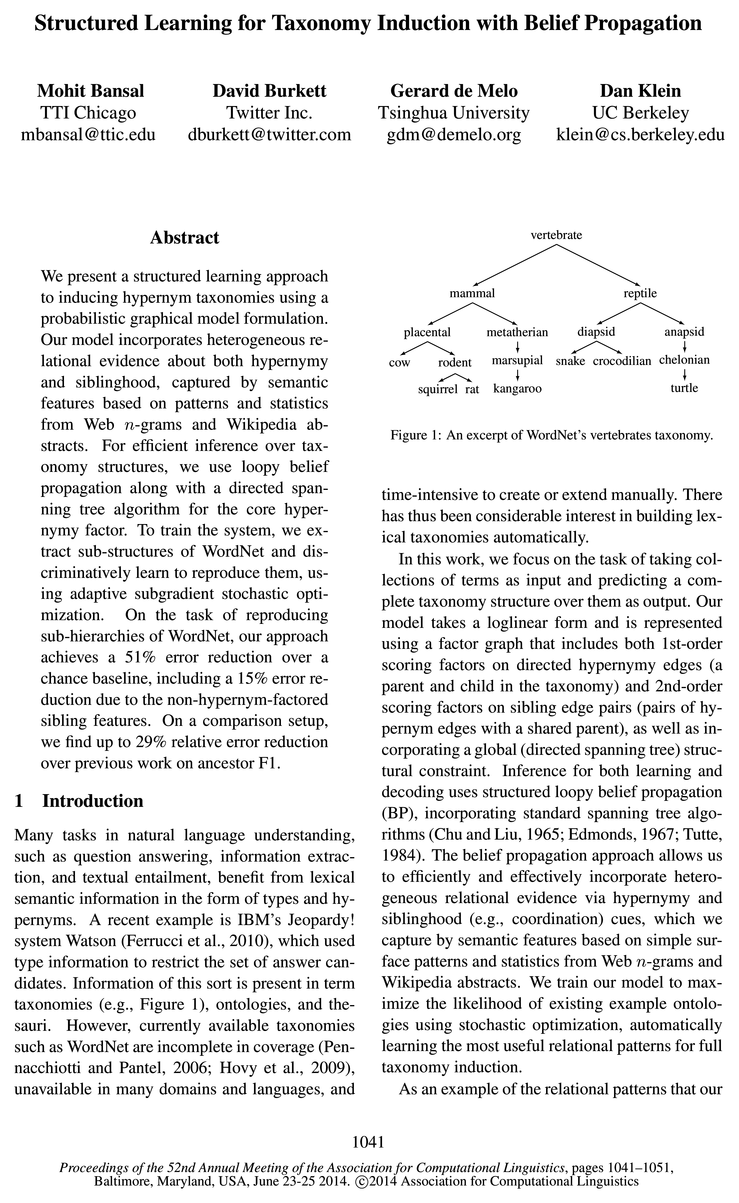

- Figure 1: An excerpt of WordNet’s vertebrates taxonomy.Page 1, “Introduction”

- First, on the task of recreating fragments of WordNet , we achieve a 51% error reduction on ancestor-based F1 over a chance baseline, including a 15% error reduction due to the non-hypernym-factored sibling features.Page 2, “Introduction”

- Second, we also compare to the results of Kozareva and Hovy (2010) by predicting the large animal subtree of WordNet .Page 2, “Introduction”

- We considered two distinct experimental setups, one that illustrates the general performance of our model by reproducing various medium-sized WordNet domains, and another that facilitates comparison to previous work by reproducing the much larger animal subtree provided by Kozareva and Hovy (2010).Page 7, “Experiments”

- General setup: In order to test the accuracy of structured prediction on medium-sized full-domain taxonomies, we extracted from WordNet 3.0 all bottomed-out full subtrees which had a tree-height of 3 (i.e., 4 nodes from root to leaf), and contained (10, 50] terms.11 This gives us 761 non-overlapping trees, which we partition intoPage 7, “Experiments”

- To project WordNet synsets to terms, we used the first (most frequent) term in each synset.Page 7, “Experiments”

- A few WordNet synsets have multiple parents so we only keep the first of each such pair of overlapping trees.Page 7, “Experiments”

- Comparison setup: We also compare our method (as closely as possible) with related previous work by testing on the much larger animal subtree made available by Kozareva and Hovy (2010), who created this dataset by selecting a set of ‘harvested’ terms and retrieving all the WordNet hypernyms between each input term and the root (i.e., animal), resulting in N700 terms and ~4,300 isa ancestor-child links.12 Our training set for this animal test case was generated from WordNet using the following process: First, we strictly remove the full animal subtree from WordNet in order to avoid any possible overlap with the test data.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention WordNet.

See all papers in Proc. ACL that mention WordNet.

Back to top.

spanning tree

- For efficient inference over taxonomy structures, we use loopy belief propagation along with a directed spanning tree algorithm for the core hypernymy factor.Page 1, “Abstract”

- Our model takes a loglinear form and is represented using a factor graph that includes both lst—order scoring factors on directed hypernymy edges (a parent and child in the taxonomy) and 2nd-order scoring factors on sibling edge pairs (pairs of hypernym edges with a shared parent), as well as incorporating a global (directed spanning tree ) structural constraint.Page 1, “Introduction”

- Inference for both learning and decoding uses structured loopy belief propagation (BP), incorporating standard spanning tree algorithms (Chu and Liu, 1965; Edmonds, 1967; Tutte, 1984).Page 1, “Introduction”

- (2006), the longest path approach of Kozareva and Hovy (2010), and the maximum spanning tree (MST) approach of Navigli et al.Page 2, “Introduction”

- Of course, not all variable assignments y form legal taxonomy trees (i.e., directed spanning trees ).Page 3, “Structured Taxonomy Induction”

- Note that finding taxonomy trees is a structurally identical problem to directed spanning trees (and thereby non-proj ective dependency parsing), for which belief propagation has previously been worked out in depth (Smith and Eisner, 2008).Page 3, “Structured Taxonomy Induction”

- ever, due to the structure of that particular factor, all of its outgoing messages can be computed simultaneously in 0(n3) time via an efficient adaptation of Kirchhoff ’s Matrix Tree Theorem (MTT) (Tutte, 1984) which computes partition functions and marginals for directed spanning trees .Page 4, “Structured Taxonomy Induction”

- While spanning trees are familiar from non-projective dependency parsing, features based on the linear order of the words or on lexical identi-Page 4, “Features”

- Our work differs from the two systems above in that ours is the first discriminatively trained, structured probabilistic model over the full space of taxonomy trees that uses structured inference via spanning tree algorithms (MST and MTT) through both the learning and decoding phases.Page 7, “Related Work”

- We present a development set ablation study where we start with the edges-only model (Figure 2a) and its random tree baseline (which chooses any arbitrary spanning tree for the term set).Page 8, “Experiments”

See all papers in Proc. ACL 2014 that mention spanning tree.

See all papers in Proc. ACL that mention spanning tree.

Back to top.

n-grams

- Our model incorporates heterogeneous relational evidence about both hypernymy and siblinghood, captured by semantic features based on patterns and statistics from Web n-grams and Wikipedia abstracts.Page 1, “Abstract”

- The belief propagation approach allows us to efficiently and effectively incorporate heterogeneous relational evidence via hypernymy and siblinghood (e.g., coordination) cues, which we capture by semantic features based on simple surface patterns and statistics from Web n-grams and Wikipedia abstracts.Page 1, “Introduction”

- The Web n-grams corpus has broad coverage but is limited to up to 5-grams, so it may not contain pattem-based evidence for various longer multi-word terms and pairs.Page 5, “Features”

- Similar to the Web n-grams case, we also fire Wikipedia-based pattern order features.Page 6, “Features”

- 8All the patterns and counts for our Web and Wikipedia edge and sibling features described above are extracted after stemming the words in the terms, the n-grams , and the abstracts (using the Porter stemmer).Page 6, “Related Work”

- Feature sources: The n-gram semantic features are extracted from the Google n-grams corpus (Brants and Franz, 2006), a large collection of English n-grams (for n = 1 to 5) and their frequencies computed from almost 1 trillion tokens (95 billion sentences) of Web text.Page 7, “Experiments”

- For this, we use a hash-trie on term pairs (similar to that of Bansal and Klein (2011)), and scan once through the 71- gram (or abstract) set, skipping many n-grams (or abstracts) based on fast checks of missing unigrams, exceeding length, suffix mismatches, etc.Page 7, “Experiments”

- Here, our Web 71- grams dataset (which only contains frequent n-grams ) and Wikipedia abstracts do not suffice and we would need to add richer Web data for such world knowledge to be reflected in the features.Page 9, “Analysis”

See all papers in Proc. ACL 2014 that mention n-grams.

See all papers in Proc. ACL that mention n-grams.

Back to top.

precision and recall

- We note that our approach falls at a different point in the space of performance tradeoffs from past work — by producing complete, highly articulated trees, we naturally see a more even balance between precision and recall , while past work generally focused on precision.1 ToPage 2, “Introduction”

- 1While different applications will value precision and recall differently, and past work was often intentionally precision-focused, it is certainly the case that an ideal solution would maximize both.Page 2, “Introduction”

- We note that previous work achieves higher ancestor precision, while our approach achieves a more even balance between precision and recall .Page 8, “Experiments”

- Of course, precision and recall should both ideally be high, even if some applications weigh one over the other.Page 8, “Experiments”

- We also present results on the precision and recall tradeoffs inherent in this task.Page 9, “Conclusion”

See all papers in Proc. ACL 2014 that mention precision and recall.

See all papers in Proc. ACL that mention precision and recall.

Back to top.

scoring function

- Each factor F has an associated scoring function W, with the probability of a total assignment determined by the product of all these scores:Page 2, “Structured Taxonomy Induction”

- We score each edge by extracting a set of features f (55¢, :33) and weighting them by the (learned) weight vector w. So, the factor scoring function is:Page 2, “Structured Taxonomy Induction”

- The scoring function is similar to the one above:Page 3, “Structured Taxonomy Induction”

- We encode this in our factor graph setting using a single global factor T (shown as a large red square in Figure 2) with the following scoring function:Page 3, “Structured Taxonomy Induction”

- Note that by substituting our model’s factor scoring functions into Equation 1, we get:Page 3, “Structured Taxonomy Induction”

See all papers in Proc. ACL 2014 that mention scoring function.

See all papers in Proc. ACL that mention scoring function.

Back to top.

co-occurrence

- Our model is also the first to directly learn relational patterns as part of the process of training an end-to-end taxonomic induction system, rather than using patterns that were hand-selected or learned via pairwise classifiers on manually annotated co-occurrence patterns.Page 2, “Introduction”

- Presence and distance: For each potential edge (stump, we mine patterns from all abstracts in which the two terms co-occur in either order, allowing a maximum term distance of 20 (because beyond that, co-occurrence may not imply a relation).Page 5, “Features”

- Both of these systems use a process that starts by finding basic level terms (leaves of the final taxonomy tree, typically) and then using relational patterns (hand-selected ones in the case of Kozareva and Hovy (2010), and ones learned separately by a pairwise classifier on manually annotated co-occurrence patterns for Navigli and Velardi (2010), Navigli et al.Page 6, “Related Work”

- Our model also automatically learns relational patterns as a part of the taxonomic training phase, instead of relying on handpicked rules or pairwise classifiers on manually annotated co-occurrence patterns, and it is the first end-to-end (i.e., non-incremental) system to include heterogeneous relational information via sibling (e.g., coordination) patterns.Page 7, “Related Work”

See all papers in Proc. ACL 2014 that mention co-occurrence.

See all papers in Proc. ACL that mention co-occurrence.

Back to top.

hypernym

- We present a structured learning approach to inducing hypernym taxonomies using a probabilistic graphical model formulation.Page 1, “Abstract”

- Our model takes a loglinear form and is represented using a factor graph that includes both lst—order scoring factors on directed hypernymy edges (a parent and child in the taxonomy) and 2nd-order scoring factors on sibling edge pairs (pairs of hypernym edges with a shared parent), as well as incorporating a global (directed spanning tree) structural constraint.Page 1, “Introduction”

- Comparison setup: We also compare our method (as closely as possible) with related previous work by testing on the much larger animal subtree made available by Kozareva and Hovy (2010), who created this dataset by selecting a set of ‘harvested’ terms and retrieving all the WordNet hypernyms between each input term and the root (i.e., animal), resulting in N700 terms and ~4,300 isa ancestor-child links.12 Our training set for this animal test case was generated from WordNet using the following process: First, we strictly remove the full animal subtree from WordNet in order to avoid any possible overlap with the test data.Page 7, “Experiments”

- (2011) and see a small gain in F1, but regardless, we should note that their results are incomparable (denoted by *‘k in Table 2) because they have a different ground-truth data condition: their definition and hypernym extraction phase involves using the Google ole fine keyword, which often returns WordNet glosses itself.Page 8, “Experiments”

See all papers in Proc. ACL 2014 that mention hypernym.

See all papers in Proc. ACL that mention hypernym.

Back to top.

dependency parsing

- Note that finding taxonomy trees is a structurally identical problem to directed spanning trees (and thereby non-proj ective dependency parsing ), for which belief propagation has previously been worked out in depth (Smith and Eisner, 2008).Page 3, “Structured Taxonomy Induction”

- While spanning trees are familiar from non-projective dependency parsing , features based on the linear order of the words or on lexical identi-Page 4, “Features”

- ties or syntactic word classes, which are primary drivers for dependency parsing , are mostly uninformative for taxonomy induction.Page 5, “Features”

See all papers in Proc. ACL 2014 that mention dependency parsing.

See all papers in Proc. ACL that mention dependency parsing.

Back to top.

end-to-end

- Our model is also the first to directly learn relational patterns as part of the process of training an end-to-end taxonomic induction system, rather than using patterns that were hand-selected or learned via pairwise classifiers on manually annotated co-occurrence patterns.Page 2, “Introduction”

- Finally, it is the first end-to-end (i.e., non-incremental) system to include sibling (e.g., coordination) patterns at all.Page 2, “Introduction”

- Our model also automatically learns relational patterns as a part of the taxonomic training phase, instead of relying on handpicked rules or pairwise classifiers on manually annotated co-occurrence patterns, and it is the first end-to-end (i.e., non-incremental) system to include heterogeneous relational information via sibling (e.g., coordination) patterns.Page 7, “Related Work”

See all papers in Proc. ACL 2014 that mention end-to-end.

See all papers in Proc. ACL that mention end-to-end.

Back to top.

manually annotated

- Our model is also the first to directly learn relational patterns as part of the process of training an end-to-end taxonomic induction system, rather than using patterns that were hand-selected or learned via pairwise classifiers on manually annotated co-occurrence patterns.Page 2, “Introduction”

- Both of these systems use a process that starts by finding basic level terms (leaves of the final taxonomy tree, typically) and then using relational patterns (hand-selected ones in the case of Kozareva and Hovy (2010), and ones learned separately by a pairwise classifier on manually annotated co-occurrence patterns for Navigli and Velardi (2010), Navigli et al.Page 6, “Related Work”

- Our model also automatically learns relational patterns as a part of the taxonomic training phase, instead of relying on handpicked rules or pairwise classifiers on manually annotated co-occurrence patterns, and it is the first end-to-end (i.e., non-incremental) system to include heterogeneous relational information via sibling (e.g., coordination) patterns.Page 7, “Related Work”

See all papers in Proc. ACL 2014 that mention manually annotated.

See all papers in Proc. ACL that mention manually annotated.

Back to top.

n-gram

- 3.2 Semantic Features 3.2.1 Web n-gram Features Patterns and counts: Hypernymy for a term pairPage 5, “Features”

- Hence, we fire Web n-gram pattern features and Wikipedia presence, distance, and pattern features, similar to those described above, on each potential sibling term pair.7 The main difference here from the edge factors is that the sibling factors are symmetric (in the sense that Sig-k, is redundant to Sim) and hence the patterns are undirected.Page 6, “Features”

- Feature sources: The n-gram semantic features are extracted from the Google n-grams corpus (Brants and Franz, 2006), a large collection of English n-grams (for n = 1 to 5) and their frequencies computed from almost 1 trillion tokens (95 billion sentences) of Web text.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention n-gram.

See all papers in Proc. ACL that mention n-gram.

Back to top.

subtrees

- General setup: In order to test the accuracy of structured prediction on medium-sized full-domain taxonomies, we extracted from WordNet 3.0 all bottomed-out full subtrees which had a tree-height of 3 (i.e., 4 nodes from root to leaf), and contained (10, 50] terms.11 This gives us 761 non-overlapping trees, which we partition intoPage 7, “Experiments”

- 13We tried this training regimen as different from that of the general setup (which contains only bottomed-out subtrees ), so as to match the animal test tree, which is of depth 12 and has intermediate nodes from higher up in WordNet.Page 7, “Experiments”

- For scaling the 2nd order sibling model, one can use approximations, e. g., pruning the set of sibling factors based on lst order link marginals, or a hierarchical coarse-to-fine approach based on taxonomy induction on subtrees , or a greedy approach of adding a few sibling factors at a time.Page 8, “Experiments”

See all papers in Proc. ACL 2014 that mention subtrees.

See all papers in Proc. ACL that mention subtrees.

Back to top.

synsets

- To project WordNet synsets to terms, we used the first (most frequent) term in each synset .Page 7, “Experiments”

- A few WordNet synsets have multiple parents so we only keep the first of each such pair of overlapping trees.Page 7, “Experiments”

- We also discard a few trees with duplicate terms because this is mostly due to the projection of different synsets to the same term, and theoretically makes the tree a graph.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention synsets.

See all papers in Proc. ACL that mention synsets.

Back to top.