Article Structure

Abstract

We investigate different ways of learning structured perceptron models for coreference resolution when using nonlocal features and beam search.

Introduction

This paper studies and extends previous work using the structured perceptron (Collins, 2002) for complex NLP tasks.

Background

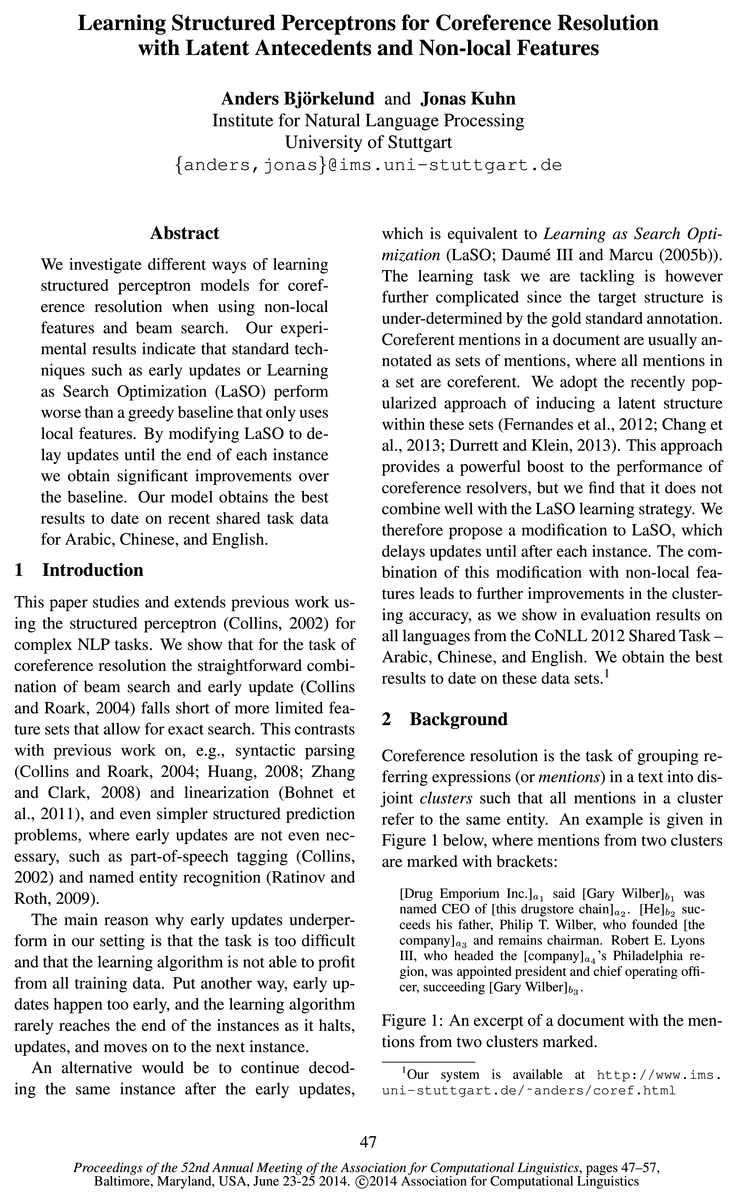

Coreference resolution is the task of grouping referring expressions (or mentions) in a text into disjoint clusters such that all mentions in a cluster refer to the same entity.

Representation and Learning

Let $M = \{m_0, m_1, \ldots, m_n\}$ denote the set of mentions in a document, including the artificial root mention (denoted by $m_0$).

Incremental Search

We now show that the search problem in (2) can equivalently be solved by the more intuitive best-first decoder (Ng and Cardie, 2002), rather than using the CLE decoder.
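
The left-to-right pass of the best-first decoder can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `best_first_decode`, the convention that index 0 is the artificial root, and the `score(a, j)` callback standing in for the arc score are all assumptions made for the example.

```python
from typing import Callable, List


def best_first_decode(n_mentions: int,
                      score: Callable[[int, int], float]) -> List[int]:
    """Pick, for every mention, the highest scoring antecedent to its left.

    Index 0 is the artificial root; mentions are numbered 1..n_mentions.
    score(a, j) is a hypothetical arc scorer for antecedent a and mention j.
    Returns a list where entry j holds the chosen antecedent of mention j
    (entry 0 is -1 because the root has no antecedent).
    """
    antecedents = [-1] * (n_mentions + 1)
    for j in range(1, n_mentions + 1):
        # Candidates are the root (0) and all mentions preceding j.
        antecedents[j] = max(range(j), key=lambda a: score(a, j))
    return antecedents


# Toy arc scores, invented purely for illustration.
toy_scores = {(0, 1): 1.0,
              (0, 2): 0.2, (1, 2): 0.9,
              (0, 3): 0.5, (1, 3): 0.1, (2, 3): 0.4}
tree = best_first_decode(3, lambda a, j: toy_scores[(a, j)])
```

Because every arc points from a mention to an earlier one, the result is always a tree rooted at index 0, which is why no cycle handling is needed in this sketch.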

Introducing Nonlocal Features

Since the best-first decoder makes a left-to-right pass, it is possible to extract features on the partial structure on the left.

Features

In this section we briefly outline the type of features we use.

Experimental Setup

We apply our model to the CoNLL 2012 Shared Task data, which includes a training, development, and test set split for three languages: Arabic, Chinese and English.

Results

Learning strategies.

Related Work

On the machine learning side Collins and Roark’s (2004) work on the early update constitutes our starting point.

Conclusion

We presented experiments with a coreference resolver that leverages nonlocal features to improve its performance.

Topics

coreference

- We investigate different ways of learning structured perceptron models for coreference resolution when using nonlocal features and beam search.Page 1, “Abstract”

- We show that for the task of coreference resolution the straightforward combination of beam search and early update (Collins and Roark, 2004) falls short of more limited feature sets that allow for exact search.Page 1, “Introduction”

- Coreferent mentions in a document are usually annotated as sets of mentions, where all mentions in a set are coreferent.Page 1, “Introduction”

- This approach provides a powerful boost to the performance of coreference resolvers, but we find that it does not combine well with the LaSO learning strategy.Page 1, “Introduction”

- Coreference resolution is the task of grouping referring expressions (or mentions) in a text into disjoint clusters such that all mentions in a cluster refer to the same entity.Page 1, “Background”

- In recent years much work on coreference resolution has been devoted to increasing the expressivity of the classical mention-pair model, in which each coreference classification decision is limited to information about two mentions that make up a pair.Page 2, “Background”

- This shortcoming has been addressed by entity-mention models, which relate a candidate mention to the full cluster of mentions predicted to be coreferent so far (for more discussion on the model types, see, e.g., (Ng, 2010)).Page 2, “Background”

- Nevertheless, the two best systems in the latest CoNLL Shared Task on coreference resolution (Pradhan et al., 2012) were both variants of the mention-pair model.Page 2, “Background”

- (2012) construes the representation of coreference clusters as a rooted tree.Page 2, “Background”

- Every mention corresponds to a node in the tree, and arcs between mentions indicate that they are coreferent.Page 2, “Background”

- Every subtree under the root node corresponds to a cluster of coreferent mentions.Page 2, “Background”
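
The tree representation described in the last three items can be turned back into clusters with a short routine. The sketch below assumes a hypothetical encoding in which `antecedents[j]` holds the parent of mention `j`, index 0 is the root, and every arc points to an earlier mention; none of these names come from the paper.

```python
from collections import defaultdict
from typing import Dict, List


def clusters_from_tree(antecedents: List[int]) -> List[List[int]]:
    """Group mentions by the subtree under the root that contains them.

    antecedents[j] is the parent of mention j (0 is the root, whose own
    entry is ignored). Assumes antecedents[j] < j for every mention j.
    """
    top: Dict[int, int] = {}  # mention -> child of the root heading its subtree
    clusters: Dict[int, List[int]] = defaultdict(list)
    for j in range(1, len(antecedents)):
        parent = antecedents[j]
        # A mention attached to the root starts a new subtree; otherwise it
        # inherits the subtree of its parent, which was processed earlier.
        top[j] = j if parent == 0 else top[parent]
        clusters[top[j]].append(j)
    return list(clusters.values())


example_clusters = clusters_from_tree([-1, 0, 1, 0, 3, 1])
```

Mentions attached directly to the root and without children come out as singleton clusters in this encoding.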

See all papers in Proc. ACL 2014 that mention coreference.


CoNLL

- The combination of this modification with nonlocal features leads to further improvements in the clustering accuracy, as we show in evaluation results on all languages from the CoNLL 2012 Shared Task: Arabic, Chinese, and English.Page 1, “Introduction”

- Nevertheless, the two best systems in the latest CoNLL Shared Task on coreference resolution (Pradhan et al., 2012) were both variants of the mention-pair model.Page 2, “Background”

- As a baseline we use the features from Björkelund and Farkas (2012), whose system ranked second in the 2012 CoNLL shared task and is publicly available.Page 6, “Features”

- Feature templates were incrementally added or removed in order to optimize the mean of MUC, B3, and CEAFe (i.e., the CoNLL average).Page 6, “Features”

- We apply our model to the CoNLL 2012 Shared Task data, which includes a training, development, and test set split for three languages: Arabic, Chinese and English.Page 7, “Experimental Setup”

- We evaluate our system using the CoNLL 2012 scorer, which computes several coreference metrics: MUC (Vilain et al., 1995), B3 (Bagga and Baldwin, 1998), and CEAFe and CEAFm (Luo, 2005).Page 7, “Experimental Setup”

- We also report the CoNLL average (also known as MELA; Denis and Baldridge (2009)), i.e., the arithmetic mean of MUC, B3, and CEAFe.Page 7, “Experimental Setup”

- We use the most recent version of the CoNLL scorer (version 7), which implements the original definitions of these metrics.8Page 7, “Experimental Setup”

- Figure 3 shows the CoNLL average onPage 7, “Results”

- 8Available at http: //conll .Page 7, “Results”

- Table 1 displays the differences in F-measures and CoNLL average between the local and nonlocal systems when applied to the development sets for each language.Page 7, “Results”
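
The CoNLL average mentioned in the items above is simply the arithmetic mean of three F-measures. A minimal sketch follows; the function name and the input values are invented for illustration and are not results from the paper.

```python
def conll_average(muc_f1: float, b3_f1: float, ceafe_f1: float) -> float:
    """Arithmetic mean of the MUC, B3, and CEAFe F-measures (the MELA score)."""
    return (muc_f1 + b3_f1 + ceafe_f1) / 3.0


# Made-up F-measures, used only to show the call.
example_average = conll_average(60.0, 50.0, 40.0)
```

CEAFm is reported by the scorer as well, but it does not enter this average.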


weight vector

- The score of an arc $(a_i, m_i)$ is defined as the scalar product between a weight vector $w$ and a feature vector $\Phi((a_i, m_i))$, where $\Phi$ is a feature extraction function over an arc (thus extracting features from the antecedent and the anaphor).Page 2, “Representation and Learning”

- We find the weight vector $w$ by online learning using a variant of the structured perceptron (Collins, 2002).Page 3, “Representation and Learning”

- For each instance it uses the current weight vector $w$ to make a prediction $\hat{y}_i$ given the input $x_i$.Page 3, “Representation and Learning”

- If the prediction is incorrect, the weight vector is updated in favor of the correct structure.Page 3, “Representation and Learning”

- Otherwise the weight vector is left untouched.Page 3, “Representation and Learning”

- In order to have a tree structure to update against, we use the current weight vector and apply the decoder to a constrained antecedent set and obtain a latent tree over the mentions in a document, where each mention is assigned a single correct antecedent (Fernandes et al., 2012).Page 3, “Representation and Learning”

- for updates is then defined to be the optimal tree over $\mathcal{Y}(\tilde{A})$, subject to the current weight vector:Page 3, “Representation and Learning”

- The intuition behind the latent tree is that during online learning, the weight vector will start favoring latent trees that are easier to learn (such as the one in Figure 2).Page 3, “Representation and Learning”

- Algorithm 1: PA algorithm with latent trees. Input: training data $D$, number of iterations $T$. Output: weight vector $w$. $w = \vec{0}$; for $t \in 1..T$ doPage 3, “Representation and Learning”

- We now outline three different ways of learning the weight vector $w$ with nonlocal features.Page 4, “Introducing Nonlocal Features”

- In other words, it is unlikely that we can devise a feature set that is informative enough to allow the weight vector to converge towards a solution that lets the learning algorithm see the entire documents during training, at least in the situation when no external knowledge sources are used.Page 4, “Introducing Nonlocal Features”


coreference resolution

- We investigate different ways of learning structured perceptron models for coreference resolution when using nonlocal features and beam search.Page 1, “Abstract”

- We show that for the task of coreference resolution the straightforward combination of beam search and early update (Collins and Roark, 2004) falls short of more limited feature sets that allow for exact search.Page 1, “Introduction”

- This approach provides a powerful boost to the performance of coreference resolvers, but we find that it does not combine well with the LaSO learning strategy.Page 1, “Introduction”

- Coreference resolution is the task of grouping referring expressions (or mentions) in a text into disjoint clusters such that all mentions in a cluster refer to the same entity.Page 1, “Background”

- In recent years much work on coreference resolution has been devoted to increasing the expressivity of the classical mention-pair model, in which each coreference classification decision is limited to information about two mentions that make up a pair.Page 2, “Background”

- Nevertheless, the two best systems in the latest CoNLL Shared Task on coreference resolution (Pradhan et al., 2012) were both variants of the mention-pair model.Page 2, “Background”

- (2012) proposed the use of latent trees that are induced during the training phase of a coreference resolver.Page 2, “Background”

- Comparing the two alternative antecedents of Gary Wilberb3, the tree in Figure 2 provides a more reliable basis for training a coreference resolver, as the two mentions of Gary Wilber are both proper names and have an exact string match.Page 2, “Background”

- While beam search and early updates have been successfully applied to other NLP applications, our task differs in two important aspects: First, coreference resolution is a much more difficult task, which relies on more (world) knowledge than what is available in the training data.Page 4, “Introducing Nonlocal Features”

- For English we also compare it to the Berkeley system (Durrett and Klein, 2013), which, to our knowledge, is the best publicly available system for English coreference resolution (denoted D&K).Page 8, “Results”

- The perceptron has previously been used to train coreference resolvers either by casting the problem as a binary classification problem that considers pairs of mentions in isolation (Bengtson and Roth, 2008; Stoyanov et al., 2009; Chang et al., 2012, inter alia) or in the structured manner, where a clustering for an entire document is predicted in one go (Fernandes et al., 2012).Page 8, “Related Work”


learning algorithm

- The main reason why early updates underperform in our setting is that the task is too difficult and that the learning algorithm is not able to profit from all training data.Page 1, “Introduction”

- Put another way, early updates happen too early, and the learning algorithm rarely reaches the end of the instances as it halts, updates, and moves on to the next instance.Page 1, “Introduction”

- Algorithm 1 shows pseudocode for the learning algorithm, which we will refer to as the baseline learning algorithm.Page 3, “Representation and Learning”

- In other words, it is unlikely that we can devise a feature set that is informative enough to allow the weight vector to converge towards a solution that lets the learning algorithm see the entire documents during training, at least in the situation when no external knowledge sources are used.Page 4, “Introducing Nonlocal Features”

- Thus the learning algorithm always reaches the end of a document, avoiding the problem that early updates discard parts of the training data.Page 5, “Introducing Nonlocal Features”

- When we applied LaSO, we noticed that it performed worse than the baseline learning algorithm when only using local features.Page 5, “Introducing Nonlocal Features”

- When only local features are used, this update is equivalent to the updates in the baseline learning algorithm.Page 6, “Introducing Nonlocal Features”

- Algorithm 3 shows the pseudocode for the delayed LaSO learning algorithm.Page 6, “Introducing Nonlocal Features”

- Since early updates do not always make use of the complete documents during training, it can be expected that it will require either a very wide beam or more iterations to get up to par with the baseline learning algorithm.Page 7, “Results”

- Recall that with only local features, delayed LaSO is equivalent to the baseline learning algorithm.Page 7, “Results”

- From these results we conclude that we are better off when the learning algorithm handles one document at a time, instead of getting feedback within documents.Page 7, “Results”


feature sets

- We show that for the task of coreference resolution the straightforward combination of beam search and early update (Collins and Roark, 2004) falls short of more limited feature sets that allow for exact search.Page 1, “Introduction”

- In other words, it is unlikely that we can devise a feature set that is informative enough to allow the weight vector to converge towards a solution that lets the learning algorithm see the entire documents during training, at least in the situation when no external knowledge sources are used.Page 4, “Introducing Nonlocal Features”

- The feature sets are customized for each language.Page 6, “Features”

- The exact definitions and feature sets that we use are available as part of the download package of our system.Page 6, “Features”

- nonlocal features were selected with the same greedy forward strategy as the local features, starting from the optimized local feature sets.Page 7, “Features”

- We experiment with two feature sets for each language: the optimized local feature sets (denoted local), and the optimized local feature sets extended with nonlocal features (denoted nonlocal).Page 7, “Experimental Setup”

- the English development set as a function of number of training iterations with two different beam sizes, 20 and 100, over the local and nonlocal feature sets.Page 7, “Results”

- The left half uses the local feature set, and the right the extended nonlocal feature set.Page 7, “Results”

- Local vs. Nonlocal feature sets.Page 7, “Results”

- All metrics improve when more informative nonlocal features are added to the local feature set.Page 7, “Results”

- Table 1: Comparison of local and nonlocal feature sets on the development sets.Page 8, “Results”


perceptron

- We investigate different ways of learning structured perceptron models for coreference resolution when using nonlocal features and beam search.Page 1, “Abstract”

- This paper studies and extends previous work using the structured perceptron (Collins, 2002) for complex NLP tasks.Page 1, “Introduction”

- We find the weight vector $w$ by online learning using a variant of the structured perceptron (Collins, 2002).Page 3, “Representation and Learning”

- The structured perceptron iterates over training instances $(x_i, y_i)$, where $x_i$ are inputs and $y_i$ are outputs.Page 3, “Representation and Learning”

- If $\tau$ is set to 1, the update reduces to the standard structured perceptron update.Page 3, “Representation and Learning”

- Unless otherwise stated we use 25 iterations of perceptron training and a beam size of 20.Page 7, “Experimental Setup”

- Perceptrons for coreference.Page 8, “Related Work”

- The perceptron has previously been used to train coreference resolvers either by casting the problem as a binary classification problem that considers pairs of mentions in isolation (Bengtson and Roth, 2008; Stoyanov et al., 2009; Chang et al., 2012, inter alia) or in the structured manner, where a clustering for an entire document is predicted in one go (Fernandes et al., 2012).Page 8, “Related Work”

- Stoyanov and Eisner (2012) train an Easy-First coreference system with the perceptron to learn a sequence of join operations between arbitrary mentions in a document and access nonlocal features through previous merge operations in later stages.Page 8, “Related Work”

- We evaluated standard perceptron learning techniques for this setting both using early updates and LaSO.Page 9, “Conclusion”

- In the special case where only local features are used, this method coincides with standard structured perceptron learning that uses exact search.Page 9, “Conclusion”


beam search

- We investigate different ways of learning structured perceptron models for coreference resolution when using nonlocal features and beam search.Page 1, “Abstract”

- We show that for the task of coreference resolution the straightforward combination of beam search and early update (Collins and Roark, 2004) falls short of more limited feature sets that allow for exact search.Page 1, “Introduction”

- In order to keep some options around during search, we extend the best-first decoder with beam search.Page 4, “Introducing Nonlocal Features”

- Beam search works incrementally by keeping an agenda of state items.Page 4, “Introducing Nonlocal Features”

- The beam search decoder can be plugged into the training algorithm, replacing the calls to arg max.Page 4, “Introducing Nonlocal Features”

- While beam search and early updates have been successfully applied to other NLP applications, our task differs in two important aspects: First, coreference resolution is a much more difficult task, which relies on more (world) knowledge than what is available in the training data.Page 4, “Introducing Nonlocal Features”

- We therefore also apply beam search to find the latent tree to have a partial gold structure for every mention in a document.Page 4, “Introducing Nonlocal Features”

- Algorithm 2 shows pseudocode for the beam search and early update training procedure.Page 4, “Introducing Nonlocal Features”

- Algorithm 2: Beam search and early update. Input: data set $D$, epochs $T$, beam size $k$. Output: weight vector $w$. $w = \vec{0}$; for $t \in 1..T$ doPage 5, “Introducing Nonlocal Features”

- (2004) also apply beam search at test time, but use a static assignment of antecedents and learn a log-linear model using batch learning.Page 9, “Related Work”
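
The agenda-based beam search described in the items above can be sketched as follows. This is an illustrative reading of the description, not the authors' code: the names `beam_decode` and `State`, and the `score(a, j, partial)` callback (which receives the antecedents chosen so far, so that nonlocal features could inspect the partial structure), are assumptions.

```python
from typing import Callable, List, Tuple

# A state item: (accumulated score, antecedents chosen for mentions 1..j).
State = Tuple[float, Tuple[int, ...]]


def beam_decode(n_mentions: int,
                score: Callable[[int, int, Tuple[int, ...]], float],
                k: int) -> List[int]:
    """Left-to-right beam search over antecedent assignments.

    score(a, j, partial) is a hypothetical scorer for attaching mention j to
    antecedent a (0 is the root) given the partial structure to the left.
    Returns the antecedents of mentions 1..n_mentions from the best item.
    """
    agenda: List[State] = [(0.0, ())]
    for j in range(1, n_mentions + 1):
        expansions: List[State] = []
        for total, partial in agenda:
            for a in range(j):  # root plus all mentions to the left
                expansions.append((total + score(a, j, partial),
                                   partial + (a,)))
        # Retain only the k highest scoring expansions for the next step.
        expansions.sort(key=lambda item: item[0], reverse=True)
        agenda = expansions[:k]
    return list(agenda[0][1])


# Toy local scores, invented for illustration; the partial structure is ignored.
toy = {(0, 1): 1.0, (0, 2): 0.2, (1, 2): 0.9,
       (0, 3): 0.5, (1, 3): 0.1, (2, 3): 0.4}
greedy = beam_decode(3, lambda a, j, partial: toy[(a, j)], k=1)
wide = beam_decode(3, lambda a, j, partial: toy[(a, j)], k=5)
```

With purely local scores the beam width makes no difference to the best item, which matches the observation that greedy search suffices in that case; the beam only matters once `score` depends on `partial`.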


Shared Task

- Our model obtains the best results to date on recent shared task data for Arabic, Chinese, and English.Page 1, “Abstract”

- The combination of this modification with nonlocal features leads to further improvements in the clustering accuracy, as we show in evaluation results on all languages from the CoNLL 2012 Shared Task: Arabic, Chinese, and English.Page 1, “Introduction”

- Nevertheless, the two best systems in the latest CoNLL Shared Task on coreference resolution (Pradhan et al., 2012) were both variants of the mention-pair model.Page 2, “Background”

- As a baseline we use the features from Björkelund and Farkas (2012), whose system ranked second in the 2012 CoNLL shared task and is publicly available.Page 6, “Features”

- We apply our model to the CoNLL 2012 Shared Task data, which includes a training, development, and test set split for three languages: Arabic, Chinese and English.Page 7, “Experimental Setup”

- As a general baseline, we also include Björkelund and Farkas’ (2012) system (denoted B&F), which was the second best system in the shared task.Page 8, “Results”

- Latent antecedents have recently gained popularity and were used by two systems in the CoNLL 2012 Shared Task, including the winning system (Fernandes et al., 2012; Chang et al., 2012).Page 9, “Related Work”

- We evaluated our system on all three languages from the CoNLL 2012 Shared Task and present the best results to date on these data sets.Page 9, “Conclusion”


highest scoring

- works incrementally by making a left-to-right pass over the mentions, selecting for each mention the highest scoring antecedent.Page 4, “Incremental Search”

- We sketch a proof that this decoder also returns the highest scoring tree.Page 4, “Incremental Search”

- Second, this tree is the highest scoring tree.Page 4, “Incremental Search”

- This contradicts the fact that the best-first decoder selects the highest scoring antecedent for each mention.5Page 4, “Incremental Search”

- When only local features are used, greedy search (either with CLE or the best-first decoder) suffices to find the highest scoring tree.Page 4, “Introducing Nonlocal Features”

- The subset of size k (the beam size) of the highest scoring expansions are retained and put back into the agenda for the next step.Page 4, “Introducing Nonlocal Features”


beam size

- The subset of size k (the beam size) of the highest scoring expansions are retained and put back into the agenda for the next step.Page 4, “Introducing Nonlocal Features”

- Algorithm 2: Beam search and early update. Input: data set $D$, epochs $T$, beam size $k$. Output: weight vector $w$. $w = \vec{0}$; for $t \in 1..T$ doPage 5, “Introducing Nonlocal Features”

- Input: data set D, iterations T, beam size k. Output: weight vector w.
      w = 0
      for t ∈ 1..T do
        for ⟨Mi, Ai, Ãi⟩ ∈ D do
          AgendaG = AgendaP = Δacc = ∅
          lossacc = 0
          for j ∈ 1..n do
            AgendaG = EXPAND(AgendaG, Ãj, mj, k)
            AgendaP = EXPAND(AgendaP, Aj, mj, k)
            if ¬CONTAINSCORRECT(AgendaP) then
              ỹ = EXTRACTBEST(AgendaG)
              ŷ = EXTRACTBEST(AgendaP)
              Δacc = Δacc + Φ(ỹ) − Φ(ŷ)
              lossacc = lossacc + LOSS(ŷ)
              AgendaP = AgendaG
          ŷ = EXTRACTBEST(AgendaP)
          if ¬CORRECT(ŷ) then
            ỹ = EXTRACTBEST(AgendaG)
            Δacc = Δacc + Φ(ỹ) − Φ(ŷ)
            lossacc = lossacc + LOSS(ŷ)
          if Δacc ≠ ∅ then
            update w.r.t.
  Page 6, “Introducing Nonlocal Features”

- Unless otherwise stated we use 25 iterations of perceptron training and a beam size of 20.Page 7, “Experimental Setup”

- the English development set as a function of number of training iterations with two different beam sizes, 20 and 100, over the local and nonlocal feature sets.Page 7, “Results”


development set

- the English development set as a function of number of training iterations with two different beam sizes, 20 and 100, over the local and nonlocal feature sets.Page 7, “Results”

- In Figure 4 we compare early update with LaSO and delayed LaSO on the English development set.Page 7, “Results”

- Table 1 displays the differences in F-measures and CoNLL average between the local and nonlocal systems when applied to the development sets for each language.Page 7, “Results”

- Figure 4: Comparison of learning algorithms evaluated on the English development set.Page 8, “Results”

- Table 1: Comparison of local and nonlocal feature sets on the development sets.Page 8, “Results”


best results

- Our model obtains the best results to date on recent shared task data for Arabic, Chinese, and English.Page 1, “Abstract”

- We obtain the best results to date on these data sets.1Page 1, “Introduction”

- In Table 2 we compare the results of the nonlocal system (This paper) to the best results from the CoNLL 2012 Shared Task.10 Specifically, this includes Fernandes et al.’s (2012) system for Arabic and English (denoted Fernandes), and Chen and Ng’s (2012) system for Chinese (denoted C&N).Page 8, “Results”

- We evaluated our system on all three languages from the CoNLL 2012 Shared Task and present the best results to date on these data sets.Page 9, “Conclusion”


loss function

- A loss function LOSS that quantifies the error in the prediction is used to compute a scalar 7' that controls how much the weights are moved in each update.Page 3, “Representation and Learning”

- The loss function can be an arbitrarily complex function that returns a numerical value of how bad the prediction is.Page 3, “Representation and Learning”

- Durrett and Klein (2013) present a coreference resolver with latent antecedents that predicts clusterings over entire documents and fit a log-linear model with a custom task-specific loss function using AdaGrad (Duchi et al., 2011).Page 9, “Related Work”
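
The role of the scalar $\tau$ in a loss-scaled update can be illustrated with a small sketch. The step size below follows the common PA-I rule (hinge loss divided by the squared norm of the feature difference, capped by an aggressiveness constant `C`); that rule, the sparse dictionary representation, and all names are assumptions for illustration, and the paper's exact formula may differ.

```python
from typing import Dict


def pa_update(w: Dict[str, float],
              phi_gold: Dict[str, float],
              phi_pred: Dict[str, float],
              loss: float,
              C: float = 1.0) -> float:
    """Move w towards the gold structure by a loss-dependent step tau.

    phi_gold and phi_pred are sparse feature vectors of the gold and the
    predicted structure. Updates w in place and returns the tau that was used.
    Assumed step size: tau = min(C, max(0, loss - w.delta) / ||delta||^2).
    """
    keys = set(phi_gold) | set(phi_pred)
    delta = {f: phi_gold.get(f, 0.0) - phi_pred.get(f, 0.0) for f in keys}
    sq_norm = sum(v * v for v in delta.values())
    if sq_norm == 0.0:
        return 0.0  # identical feature vectors: nothing to update
    margin = sum(w.get(f, 0.0) * v for f, v in delta.items())
    tau = min(C, max(0.0, loss - margin) / sq_norm)
    for f, v in delta.items():
        w[f] = w.get(f, 0.0) + tau * v
    return tau


weights: Dict[str, float] = {}
first_tau = pa_update(weights, {"a": 1.0}, {"b": 1.0}, loss=1.0, C=10.0)
second_tau = pa_update(weights, {"a": 1.0}, {"b": 1.0}, loss=1.0, C=10.0)
```

Replacing the computed step with a constant `tau = 1` gives the standard structured perceptron update, as noted in the source.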


maximum spanning

- Edmonds, 1967), which is a maximum spanning tree algorithm that finds the optimal tree over a connected directed graph.Page 3, “Representation and Learning”

- 5 In case there are multiple maximum spanning trees, the best-first decoder will return one of them.Page 4, “Introducing Nonlocal Features”

- With proper definitions, the proof can be constructed to show that both search algorithms return trees belonging to the set of maximum spanning trees over a graph.Page 4, “Introducing Nonlocal Features”


spanning trees

- Edmonds, 1967), which is a maximum spanning tree algorithm that finds the optimal tree over a connected directed graph.Page 3, “Representation and Learning”

- 5 In case there are multiple maximum spanning trees, the best-first decoder will return one of them.Page 4, “Introducing Nonlocal Features”

- With proper definitions, the proof can be constructed to show that both search algorithms return trees belonging to the set of maximum spanning trees over a graph.Page 4, “Introducing Nonlocal Features”
