Article Structure

Abstract

Image description is a new natural language generation task, where the aim is to generate a human-like description of an image.

Introduction

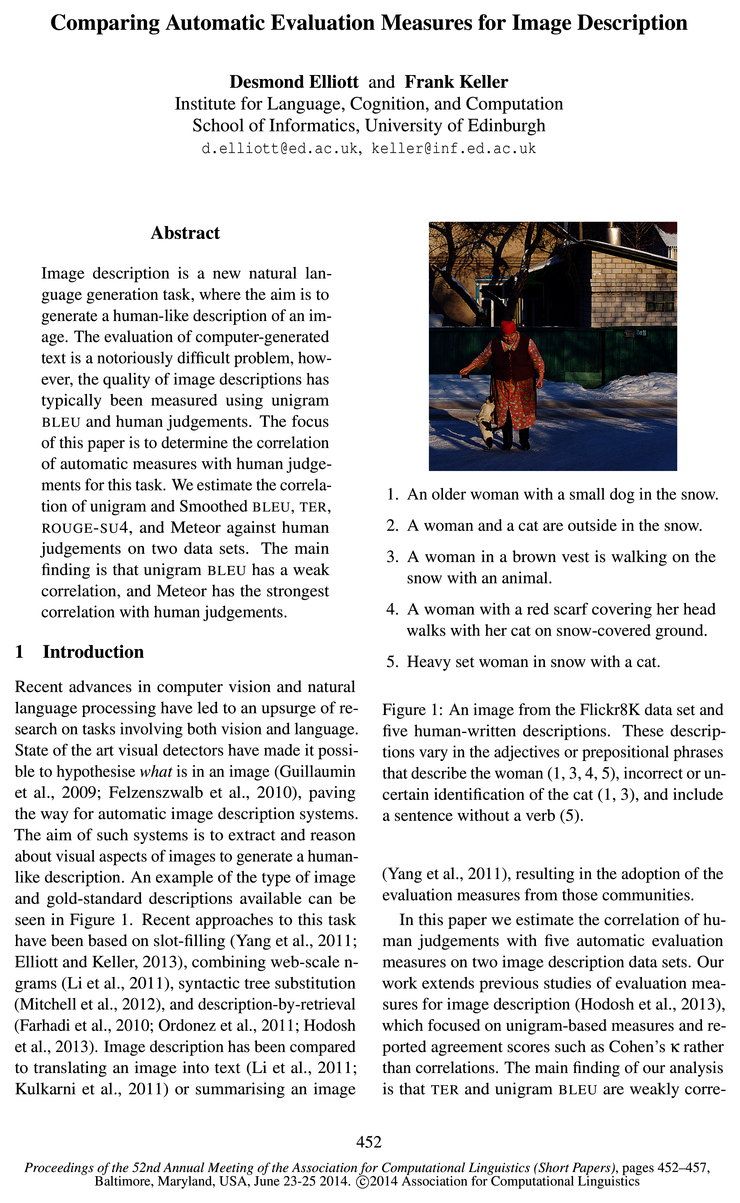

Recent advances in computer vision and natural language processing have led to an upsurge of research on tasks involving both vision and language.

Methodology

We estimate Spearman’s p for five different automatic evaluation measures against human judgements for the automatic image description task.

Results

Table 1 shows the correlation coefficients between automatic measures and human judgements and Figures 2(a) and (b) show the distribution of scores for each measure against human judgements.

Discussion

There are several differences between our analysis and that of Hodosh et a1.

Conclusions

In this paper we performed a sentence-level correlation analysis of automatic evaluation measures against expert human judgements for the automatic image description task.

Topics

human judgements

- The evaluation of computer-generated text is a notoriously difficult problem, however, the quality of image descriptions has typically been measured using unigram BLEU and human judgements .Page 1, “Abstract”

- The focus of this paper is to determine the correlation of automatic measures with human judgements for this task.Page 1, “Abstract”

- We estimate the correlation of unigram and Smoothed BLEU, TER, ROUGE-SU4, and Meteor against human judgements on two data sets.Page 1, “Abstract”

- The main finding is that unigram BLEU has a weak correlation, and Meteor has the strongest correlation with human judgements .Page 1, “Abstract”

- In this paper we estimate the correlation of human judgements with five automatic evaluation measures on two image description data sets.Page 1, “Introduction”

- lated against human judgements , ROUGE-SU4 and Smoothed BLEU are moderately correlated, and the strongest correlation is found with Meteor.Page 2, “Introduction”

- We estimate Spearman’s p for five different automatic evaluation measures against human judgements for the automatic image description task.Page 2, “Methodology”

- The automatic measures are calculated on the sentence level and correlated against human judgements of semantic correctness.Page 2, “Methodology”

- The images were retrieved from Flickr, the reference descriptions were collected from Mechanical Turk, and the human judgements were collected from expert annotators as follows: each image in the test data was paired with the highest scoring sentence(s) retrieved from all possible test sentences by the TRIS SEM model in Hodosh et al.Page 2, “Methodology”

- Each image—description pairing in the test data was judged for semantic correctness by three expert human judges on a scale of 1—4.Page 2, “Methodology”

- Elliott and Keller (2013) generated two-sentence descriptions for each of the test images using four variants of a slot-filling model, and collected five human judgements of the semantic correctness and grammatical correctness of the description on a scale of 1—5 for each image—description pair, resulting in a total of 2,042 human judgement—description pairings.Page 2, “Methodology”

See all papers in Proc. ACL 2014 that mention human judgements.

See all papers in Proc. ACL that mention human judgements.

Back to top.

BLEU

- The evaluation of computer-generated text is a notoriously difficult problem, however, the quality of image descriptions has typically been measured using unigram BLEU and human judgements.Page 1, “Abstract”

- We estimate the correlation of unigram and Smoothed BLEU , TER, ROUGE-SU4, and Meteor against human judgements on two data sets.Page 1, “Abstract”

- The main finding is that unigram BLEU has a weak correlation, and Meteor has the strongest correlation with human judgements.Page 1, “Abstract”

- The main finding of our analysis is that TER and unigram BLEU are weakly corre-Page 1, “Introduction”

- lated against human judgements, ROUGE-SU4 and Smoothed BLEU are moderately correlated, and the strongest correlation is found with Meteor.Page 2, “Introduction”

- BLEU measures the effective overlap between a reference sentence X and a candidate sentence Y.Page 2, “Methodology”

- N BLEU = BP-exp < wn logpn> n=1Page 2, “Methodology”

- Unigram BLEU without a brevity penalty has been reported by Kulkarni et a1.Page 2, “Methodology”

- (2012); to the best of our knowledge, the only image description work to use higher-order n-grams with BLEU is Elliott and Keller (2013).Page 2, “Methodology”

- In this paper we use the smoothed BLEU implementation of Clark et a1.Page 2, “Methodology”

- (2011) to perform a sentence-level analysis, setting n = 1 and no brevity penalty to get the unigram BLEU measure, or n = 4 with the brevity penalty to get the Smoothed BLEU measure.Page 2, “Methodology”

See all papers in Proc. ACL 2014 that mention BLEU.

See all papers in Proc. ACL that mention BLEU.

Back to top.

unigram

- The evaluation of computer-generated text is a notoriously difficult problem, however, the quality of image descriptions has typically been measured using unigram BLEU and human judgements.Page 1, “Abstract”

- We estimate the correlation of unigram and Smoothed BLEU, TER, ROUGE-SU4, and Meteor against human judgements on two data sets.Page 1, “Abstract”

- The main finding is that unigram BLEU has a weak correlation, and Meteor has the strongest correlation with human judgements.Page 1, “Abstract”

- The main finding of our analysis is that TER and unigram BLEU are weakly corre-Page 1, “Introduction”

- Unigram BLEU without a brevity penalty has been reported by Kulkarni et a1.Page 2, “Methodology”

- (2011) to perform a sentence-level analysis, setting n = 1 and no brevity penalty to get the unigram BLEU measure, or n = 4 with the brevity penalty to get the Smoothed BLEU measure.Page 2, “Methodology”

- We set dskip = 4 and award partial credit for unigram only matches, otherwise known as ROUGE-SU4.Page 3, “Methodology”

- Meteor is the harmonic mean of unigram precision and recall that allows for exact, synonym, and paraphrase matchings between candidates and references.Page 3, “Methodology”

- We can calculate precision, recall, and F-measure, where m is the number of aligned unigrams between candidate and reference.Page 3, “Methodology”

- : |unigrams in candidate| lmlPage 3, “Methodology”

- |unigrams in reference|Page 3, “Methodology”

See all papers in Proc. ACL 2014 that mention unigram.

See all papers in Proc. ACL that mention unigram.

Back to top.

TER

- We estimate the correlation of unigram and Smoothed BLEU, TER , ROUGE-SU4, and Meteor against human judgements on two data sets.Page 1, “Abstract”

- The main finding of our analysis is that TER and unigram BLEU are weakly corre-Page 1, “Introduction”

- TER measures the number of modifications a human would need to make to transform a candidate Y into a reference X.Page 3, “Methodology”

- TER is expressed as the percentage of the sentence that needs to be changed, and can be greater than 100 if the candidate is longer than the reference.Page 3, “Methodology”

- TER = —|reference tokens|Page 3, “Methodology”

- TER has not yet been used to evaluate image description models.Page 3, “Methodology”

- We use v.0.8.0 of the TER evaluation tool, and a lower TER is better.Page 3, “Methodology”

- METEOR 0.524 0.233 ROUGE SU-4 0.435 0.188 Smoothed BLEU 0.429 0.177 Unigram BLEU 0.345 0.097 TER -0.279 -0.044Page 3, “Methodology”

- We collected the BLEU, TER , and Meteor scores using MultEval (Clark et al., 2011), and the ROUGE-SU4 scores using the RELEASE-1.5.5.p1 script.Page 3, “Methodology”

- TER is only weakly correlated with human judgements but could prove useful in comparing the types of differences between models.Page 3, “Results”

- An analysis of the distribution of TER scores in Figure 2(a) shows that differences in candidate and reference length are prevalent in the image description task.Page 3, “Results”

See all papers in Proc. ACL 2014 that mention TER.

See all papers in Proc. ACL that mention TER.

Back to top.

sentence-level

- (2011) to perform a sentence-level analysis, setting n = 1 and no brevity penalty to get the unigram BLEU measure, or n = 4 with the brevity penalty to get the Smoothed BLEU measure.Page 2, “Methodology”

- The sentence-level evaluation measures were calculated for each image—description—reference tuple.Page 3, “Methodology”

- The evaluation measure scores were then compared with the human judgements using Spearman’s correlation estimated at the sentence-level .Page 3, “Methodology”

- Sentence-level automated measure scorePage 4, “Results”

- Sentence-level automated measure scorePage 4, “Results”

- In this paper we performed a sentence-level correlation analysis of automatic evaluation measures against expert human judgements for the automatic image description task.Page 5, “Conclusions”

- We found that sentence-level unigram BLEU is only weakly correlated with human judgements, even though it has extensively reported in the literature for this task.Page 5, “Conclusions”

See all papers in Proc. ACL 2014 that mention sentence-level.

See all papers in Proc. ACL that mention sentence-level.

Back to top.

n-grams

- Recent approaches to this task have been based on slot-filling (Yang et al., 2011; Elliott and Keller, 2013), combining web-scale n-grams (Li et al., 2011), syntactic tree substitution (Mitchell et al., 2012), and description-by-retrieval (Farhadi et al., 2010; Ordonez et al., 2011; Hodosh et al., 2013).Page 1, “Introduction”

- pn measures the effective overlap by calculating the proportion of the maximum number of n-grams co-occurring between a candidate and a reference and the total number of n-grams in the candidate text.Page 2, “Methodology”

- (2012); to the best of our knowledge, the only image description work to use higher-order n-grams with BLEU is Elliott and Keller (2013).Page 2, “Methodology”

See all papers in Proc. ACL 2014 that mention n-grams.

See all papers in Proc. ACL that mention n-grams.

Back to top.