Article Structure

Abstract

Semantic hierarchy construction aims to build structures of concepts linked by hypernym—hyponym (“isa”) relations.

Introduction

Semantic hierarchies are natural ways to organize knowledge.

Background

As main components of ontologies, semantic hierarchies have been studied by many researchers.

Method

In this section, we first define the task formally.

Experimental Setup

4.1 Experimental Data

Results and Analysis 5.1 Varying the Amount of Clusters

We first evaluate the effect of different number of clusters based on the development data.

Related Work

In addition to the works mentioned in Section 2, we introduce another set of related studies in this section.

Conclusion and Future Work

This paper proposes a novel method for semantic hierarchy construction based on word embeddings, which are trained using a large-scale corpus.

Topics

hypernyms

- We identify whether a candidate word pair has hypernym—hyponym relation by using the word-embedding-based semantic projections between words and their hypernyms .Page 1, “Abstract”

- Here, “canine” is called a hypernym of “dog.” Conversely, “dog” is a hyponym of “canine.” As key sources of knowledge, semantic thesauri and ontologies can support many natural language processing applications.Page 1, “Introduction”

- (2013) propose a distant supervision method to extract hypernyms for entities from multiple sources.Page 1, “Introduction”

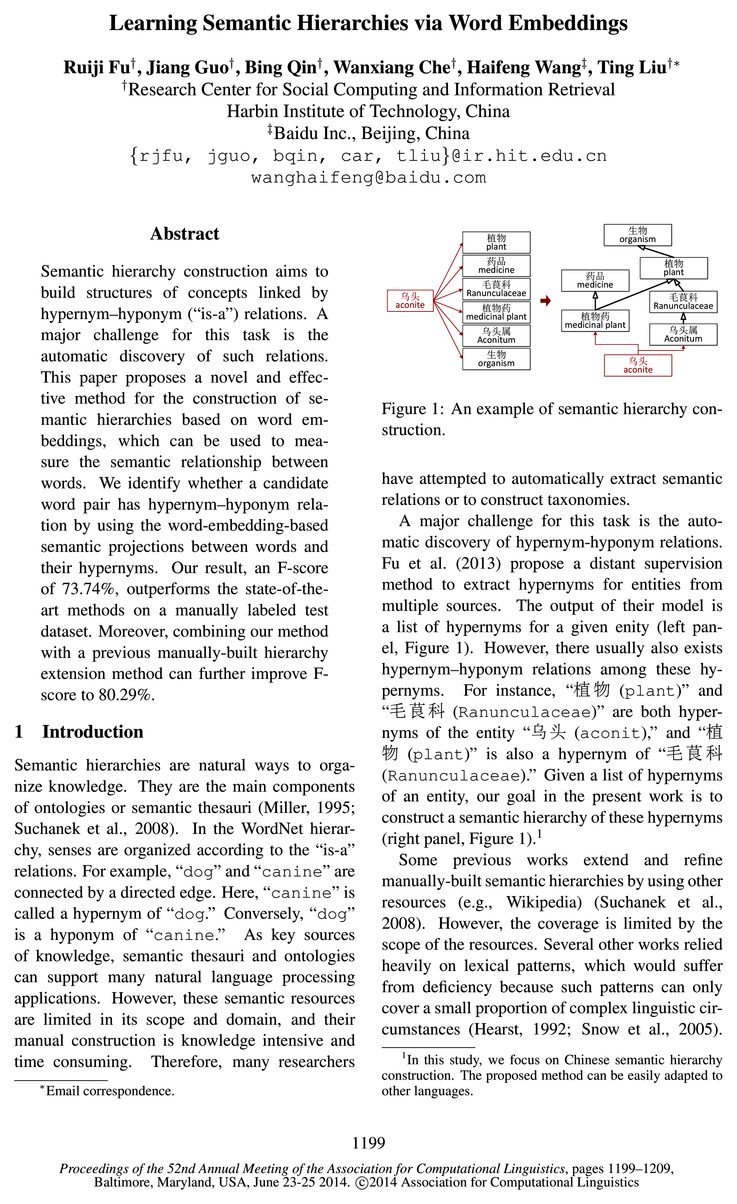

- The output of their model is a list of hypernyms for a given enity (left panel, Figure 1).Page 1, “Introduction”

- However, there usually also exists hypernym—hyponym relations among these hypernyms .Page 1, “Introduction”

- For instance, “FEW (plant)” and “iégfil (Ranunculaceae)” are both hypernyms of the entity “91% (aconit),” and “7E 5% (plant)” is also a hypernym of “ingfl (Ranunculaceae).” Given a list of hypernyms of an entity, our goal in the present work is to construct a semantic hierarchy of these hypernyms (right panel, Figure 1).1Page 1, “Introduction”

- Our previous method based on web mining (Fu et al., 2013) works well for hypernym extraction of entity names, but it is unsuitable for semantic hierarchy construction which involves many words with broad semantics.Page 2, “Introduction”

- To address this challenge, we propose a more sophisticated and general method — learning a linear projection which maps words to their hypernyms (Section 3.3.1).Page 2, “Introduction”

- For evaluation, we manually annotate a dataset containing 418 Chinese entities and their hypernym hierarchies, which is the first dataset for this task as far as we know.Page 2, “Introduction”

- The pioneer work by Hearst (1992) has found out that linking two noun phrases (NPs) via certain lexical constructions often implies hypernym relations.Page 2, “Background”

- For example, NP1 is a hypernym of NP2 in the lexical pattern “such NP1 as NP2 Snow et al.Page 2, “Background”

See all papers in Proc. ACL 2014 that mention hypernyms.

See all papers in Proc. ACL that mention hypernyms.

Back to top.

word embeddings

- This paper proposes a novel and effective method for the construction of semantic hierarchies based on word embeddings , which can be used to measure the semantic relationship between words.Page 1, “Abstract”

- This paper proposes a novel approach for semantic hierarchy construction based on word embeddings .Page 2, “Introduction”

- Word embeddings , also known as distributed word representations, typically represent words with dense, low-dimensional and real-valued vectors.Page 2, “Introduction”

- Word embeddings have been empirically shown to preserve linguistic regularities, such as the semantic relationship between words (Mikolov et al., 2013b).Page 2, “Introduction”

- To the best of our knowledge, we are the first to apply word embeddings to this task.Page 2, “Introduction”

- In this paper, we aim to identify hypemym—hyponym relations using word embeddings , which have been shown to preserve good properties for capturing semantic relationship between words.Page 3, “Background”

- Then we elaborate on our proposed method composed of three major steps, namely, word embedding training, projection learning, and hypernym—hyponym relation identification.Page 3, “Method”

- 3.2 Word Embedding TrainingPage 3, “Method”

- Various models for learning word embeddings have been proposed, including neural net language models (Bengio et al., 2003; Mnih and Hinton, 2008; Mikolov et al., 2013b) and spectral models (Dhillon et al., 2011).Page 3, “Method”

- (2013a) propose two log-linear models, namely the Skip-gram and CBOW model, to efficiently induce word embeddings .Page 3, “Method”

- Therefore, we employ the Skip- gram model for estimating word embeddings in this study.Page 3, “Method”

See all papers in Proc. ACL 2014 that mention word embeddings.

See all papers in Proc. ACL that mention word embeddings.

Back to top.

embeddings

- This paper proposes a novel and effective method for the construction of semantic hierarchies based on word embeddings , which can be used to measure the semantic relationship between words.Page 1, “Abstract”

- This paper proposes a novel approach for semantic hierarchy construction based on word embeddings .Page 2, “Introduction”

- Word embeddings , also known as distributed word representations, typically represent words with dense, low-dimensional and real-valued vectors.Page 2, “Introduction”

- Word embeddings have been empirically shown to preserve linguistic regularities, such as the semantic relationship between words (Mikolov et al., 2013b).Page 2, “Introduction”

- To the best of our knowledge, we are the first to apply word embeddings to this task.Page 2, “Introduction”

- In this paper, we aim to identify hypemym—hyponym relations using word embeddings , which have been shown to preserve good properties for capturing semantic relationship between words.Page 3, “Background”

- Various models for learning word embeddings have been proposed, including neural net language models (Bengio et al., 2003; Mnih and Hinton, 2008; Mikolov et al., 2013b) and spectral models (Dhillon et al., 2011).Page 3, “Method”

- (2013a) propose two log-linear models, namely the Skip-gram and CBOW model, to efficiently induce word embeddings .Page 3, “Method”

- Therefore, we employ the Skip- gram model for estimating word embeddings in this study.Page 3, “Method”

- After maximizing the log-likelihood over the entire dataset using stochastic gradient descent (SGD), the embeddings are learned.Page 3, “Method”

- (2013b) observe that word embeddings preserve interesting linguistic regularities, capturing a considerable amount of syntac-tic/semantic relations.Page 3, “Method”

See all papers in Proc. ACL 2014 that mention embeddings.

See all papers in Proc. ACL that mention embeddings.

Back to top.

word pairs

- We identify whether a candidate word pair has hypernym—hyponym relation by using the word-embedding-based semantic projections between words and their hypernyms.Page 1, “Abstract”

- Subsequently, we identify whether an unknown word pair is a hypernym—hyponym relation using the projections (Section 3.4).Page 2, “Introduction”

- Table l: Embedding offsets on a sample of hypernym—hyponym word pairs .Page 3, “Method”

- Looking at the well-known example: v(king) — v(queen) % v(man) —v(woman), it indicates that the embedding offsets indeed represent the shared semantic relation between the two word pairs .Page 3, “Method”

- As a preliminary experiment, we compute the embedding offsets between some randomly sampled hypernym—hyponym word pairs and measure their similarities.Page 3, “Method”

- hyponym word pairs in our training data and visualize them.2 Figure 2 shows that the relations are adequately distributed in the clusters, which implies that hypernym—hyponym relations indeed can be decomposed into more fine-grained relations.Page 4, “Method”

- where N is the number of (ac, y) word pairs in the training data.Page 4, “Method”

- A uniform linear projection may still be under-representative for fitting all of the hypernym—hyponym word pairs , because the relations are rather diverse, as shown in Figure 2.Page 4, “Method”

- That is, all word pairs (cc, y) in the training data are first clustered into several groups, where word pairs in each group are expected to exhibit similar hypernym—hyponym relations.Page 4, “Method”

- Each word pair (cc, y) is represented with their vector offsets: y — cc for clustering.Page 4, “Method”

- (2) The vector offsets distribute in clusters well, and the word pairs which are close indeed represent similar relations, as shown in Figure 2.Page 4, “Method”

See all papers in Proc. ACL 2014 that mention word pairs.

See all papers in Proc. ACL that mention word pairs.

Back to top.

F-score

- Our result, an F-score of 73.74%, outperforms the state-of-the-art methods on a manually labeled test dataset.Page 1, “Abstract”

- Moreover, combining our method with a previous manually-built hierarchy extension method can further improve F-score to 80.29%.Page 1, “Abstract”

- The experimental results show that our method achieves an F-score of 73.74% which significantly outperforms the previous state-of-the-art methods.Page 2, “Introduction”

- (2008) can further improve F-score to 80.29%.Page 2, “Introduction”

- We use precision, recall, and F-score as our metrics to evaluate the performances of the methods.Page 6, “Experimental Setup”

- Table 3 shows that the proposed method achieves a better recall and F-score than all of the previous methods do.Page 7, “Results and Analysis 5.1 Varying the Amount of Clusters”

- It can significantly (p < 0.01) improve the F-score over the state-of-the-art method MWikHCilmE.Page 7, “Results and Analysis 5.1 Varying the Amount of Clusters”

- The F-score is further improved from 73.74% to 76.29%.Page 7, “Results and Analysis 5.1 Varying the Amount of Clusters”

- Combining M Emb with MWikHCilmE achieves a 7% F-score improvement over the best baseline MWikHCilmE.Page 8, “Results and Analysis 5.1 Varying the Amount of Clusters”

- By contrast, our method can discover more hypemym—hyponym relations with some loss of precision, thereby achieving a more than 29% F-score improvement.Page 8, “Results and Analysis 5.1 Varying the Amount of Clusters”

- The combination of these two methods achieves a further 4.5% F-score improvement over M Emma-gm E. Generally speaking, the proposed method greatly improves the recall but damages the precision.Page 8, “Results and Analysis 5.1 Varying the Amount of Clusters”

See all papers in Proc. ACL 2014 that mention F-score.

See all papers in Proc. ACL that mention F-score.

Back to top.

semantic relationship

- This paper proposes a novel and effective method for the construction of semantic hierarchies based on word embeddings, which can be used to measure the semantic relationship between words.Page 1, “Abstract”

- have attempted to automatically extract semantic relations or to construct taxonomies.Page 1, “Introduction”

- Word embeddings have been empirically shown to preserve linguistic regularities, such as the semantic relationship between words (Mikolov et al., 2013b).Page 2, “Introduction”

- In this paper, we aim to identify hypemym—hyponym relations using word embeddings, which have been shown to preserve good properties for capturing semantic relationship between words.Page 3, “Background”

- Additionally, their experiment results have shown that the Skip-gram model performs best in identifying semantic relationship among words.Page 3, “Method”

- Looking at the well-known example: v(king) — v(queen) % v(man) —v(woman), it indicates that the embedding offsets indeed represent the shared semantic relation between the two word pairs.Page 3, “Method”

- The reasons are twofold: (l) Mikolov’s work has shown that the vector offsets imply a certain level of semantic relationship .Page 4, “Method”

- (2013b) further observe that the semantic relationship of words can be induced by performing simple algebraic operations with word vectors.Page 9, “Related Work”

See all papers in Proc. ACL 2014 that mention semantic relationship.

See all papers in Proc. ACL that mention semantic relationship.

Back to top.

WordNet

- In the WordNet hierarchy, senses are organized according to the “isa” relations.Page 1, “Introduction”

- Some have established concept hierarchies based on manually-built semantic resources such as WordNet (Miller, 1995).Page 2, “Background”

- Such hierarchies have good structures and high accuracy, but their coverage is limited to fine-grained concepts (e.g., “Ranunculaceae” is not included in WordNet .).Page 2, “Background”

- (2008) link the categories in Wikipedia onto WordNet .Page 2, “Background”

- (2006) provides a global optimization scheme for extending WordNet , which is different from the above-mentioned pairwise relationships identification methods.Page 9, “Related Work”

See all papers in Proc. ACL 2014 that mention WordNet.

See all papers in Proc. ACL that mention WordNet.

Back to top.

fine-grained

- Such hierarchies have good structures and high accuracy, but their coverage is limited to fine-grained concepts (e.g., “Ranunculaceae” is not included in WordNet.).Page 2, “Background”

- hyponym word pairs in our training data and visualize them.2 Figure 2 shows that the relations are adequately distributed in the clusters, which implies that hypernym—hyponym relations indeed can be decomposed into more fine-grained relations.Page 4, “Method”

- Some fine-grained relations exist in Wikipedia, but the coverage is limited.Page 8, “Results and Analysis 5.1 Varying the Amount of Clusters”

- Further improvements are made using a cluster-based approach in order to model the more fine-grained relations.Page 9, “Conclusion and Future Work”

See all papers in Proc. ACL 2014 that mention fine-grained.

See all papers in Proc. ACL that mention fine-grained.

Back to top.

distributional similarity

- Besides, distributional similarity methods (Kotlerman et al., 2010; Lenci and Benotto, 2012) are based on the assumption that a term can only be used in contexts where its hypemyms can be used and that a term might be used in any contexts where its hyponyms are used.Page 2, “Introduction”

- For distributional similarity computing, each word is represented as a semantic vector composed of the pointwise mutual information (PMI) with its contexts.Page 2, “Background”

- (2010) and Lenci and Benotto (2012), other researchers also propose directional distributional similarity methods (Weeds et al., 2004; Geffet and Dagan, 2005; Bhagat et al., 2007; Szpektor et al., 2007; Clarke, 2009).Page 9, “Related Work”

See all papers in Proc. ACL 2014 that mention distributional similarity.

See all papers in Proc. ACL that mention distributional similarity.

Back to top.

log-linear

- (2013a) propose two log-linear models, namely the Skip-gram and CBOW model, to efficiently induce word embeddings.Page 3, “Method”

- The Skip-gram model adopts log-linear classifiers to predict context words given the current word w(t) as input.Page 3, “Method”

- Then, log-linear classifiers are employed, taking the embedding as input and predict w(t)’s context words within a certain range, e.g.Page 3, “Method”

See all papers in Proc. ACL 2014 that mention log-linear.

See all papers in Proc. ACL that mention log-linear.

Back to top.

named entity

- It works well for named entities .Page 3, “Background”

- The red paths refer to the relations between the named entity and its hypernyms extracted using the web mining method (Fu et al., 2013).Page 8, “Results and Analysis 5.1 Varying the Amount of Clusters”

- Word embeddings have been successfully applied in many applications, such as in sentiment analysis (Socher et al., 2011b), paraphrase detection (Socher et al., 2011a), chunking, and named entity recognition (Turian et al., 2010; Collobert et al., 2011).Page 9, “Related Work”

See all papers in Proc. ACL 2014 that mention named entity.

See all papers in Proc. ACL that mention named entity.

Back to top.