Article Structure

Abstract

Text-level discourse parsing is notoriously difficult, as distinctions between discourse relations require subtle semantic judgments that are not easily captured using standard features.

Introduction

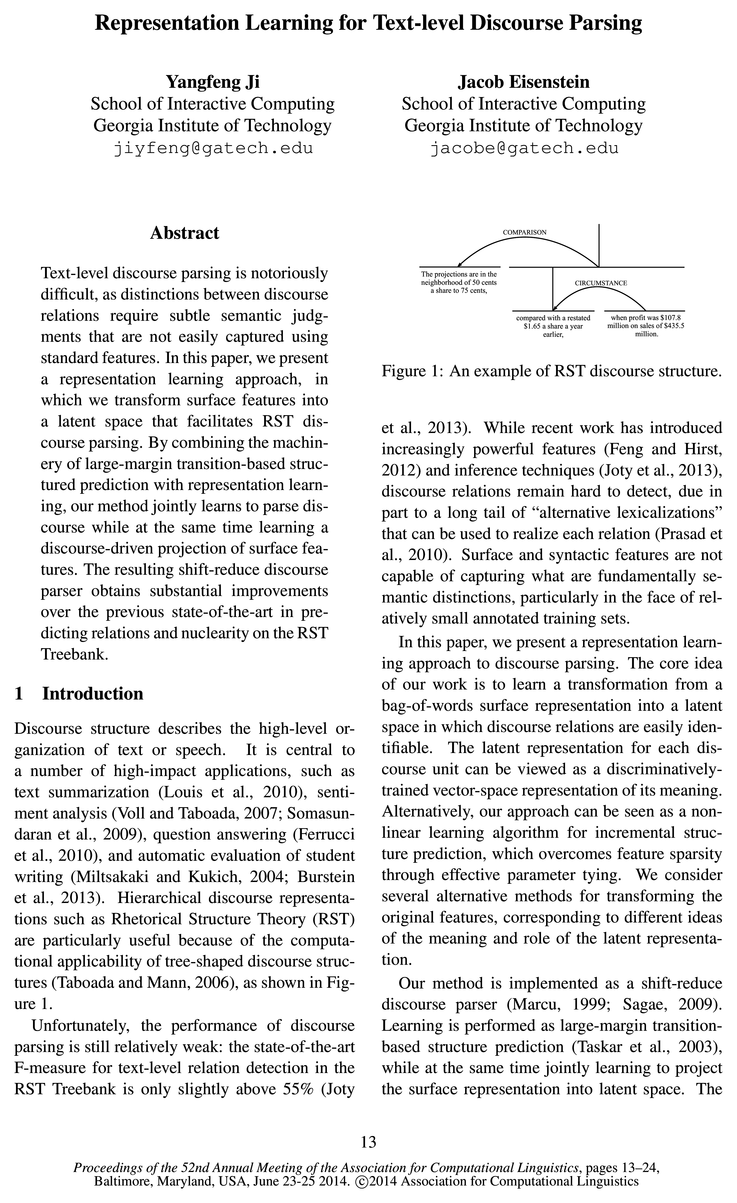

Discourse structure describes the high-level organization of text or speech.

Model

The core idea of this paper is to project lexical features into a latent space that facilitates discourse parsing.

Large-Margin Learning Framework

We apply a large margin structure prediction approach to train the model.

Implementation

The learning algorithm is applied in a shift-reduce parser, where the training data consists of the (unique) list of shift and reduce operations required to produce the gold RST parses.

Experiments

We evaluate DPLP on the RST Discourse Treebank (Carlson et al., 2001), comparing against state-of-the-art results.

Related Work

Early work on document-level discourse parsing applied handcrafted rules and heuristics to build trees in the framework of Rhetorical Structure Theory (Sumita et al., 1992; Corston-Oliver, 1998; Marcu, 2000a).

Conclusion

We have presented a framework to perform discourse parsing while jointly learning to project to a low-dimensional representation of the discourse

Topics

discourse parsing

- Text-level discourse parsing is notoriously difficult, as distinctions between discourse relations require subtle semantic judgments that are not easily captured using standard features.Page 1, “Abstract”

- In this paper, we present a representation learning approach, in which we transform surface features into a latent space that facilitates RST discourse parsing .Page 1, “Abstract”

- The resulting shift-reduce discourse parser obtains substantial improvements over the previous state-of-the-art in predicting relations and nuclearity on the RST Treebank.Page 1, “Abstract”

- Unfortunately, the performance of discourse parsing is still relatively weak: the state-of-the-art F—measure for text-level relation detection in the RST Treebank is only slightly above 55% (JotyPage 1, “Introduction”

- In this paper, we present a representation leam-ing approach to discourse parsing .Page 1, “Introduction”

- Our method is implemented as a shift-reduce discourse parser (Marcu, 1999; Sagae, 2009).Page 1, “Introduction”

- The core idea of this paper is to project lexical features into a latent space that facilitates discourse parsing .Page 2, “Model”

- Thus, we name the approach DPLP: Discourse Parsing from Linear Projection.Page 2, “Model”

- We apply transition-based (incremental) structured prediction to obtain a discourse parse , training a predictor to make the correct incremental moves to match the annotations of training data in the RST Treebank.Page 2, “Model”

- 2.1 Shift-reduce discourse parsingPage 2, “Model”

- 2.2 Discourse parsing with projected featuresPage 2, “Model”

See all papers in Proc. ACL 2014 that mention discourse parsing.

See all papers in Proc. ACL that mention discourse parsing.

Back to top.

shift-reduce

- The resulting shift-reduce discourse parser obtains substantial improvements over the previous state-of-the-art in predicting relations and nuclearity on the RST Treebank.Page 1, “Abstract”

- Our method is implemented as a shift-reduce discourse parser (Marcu, 1999; Sagae, 2009).Page 1, “Introduction”

- 2.1 Shift-reduce discourse parsingPage 2, “Model”

- We construct RST Trees using shift-reduce parsing, as first proposed by Marcu (1999).Page 2, “Model”

- C Total number of classes, which correspond to possible shift-reduce operationsPage 2, “Model”

- Shift-reduce parsing can be learned as a classification task, where the classifier uses features of the elements in the stack and queue to decide what move to take.Page 2, “Model”

- During shift-reduce parsing, we consider features of three EDUs:2 the top two elements onPage 2, “Model”

- In general, we can formulate the decision function for the multi-class shift-reduce classifier asPage 3, “Model”

- The score for class m (in our case, the value of taking the m-th shift-reduce operation) is computed by the inner product w;f(v; A).Page 3, “Model”

- The specific shift-reduce operation is chosen by maximizing the decision value in Equation 1.Page 3, “Model”

- If there are 0 total classes (possible shift-reduce operations), then a linear classifier must learn 3V0 parameters, while DPLP must learn (3V + C)K parameters, which will be smaller under the assumption that K < 0 < V. This can be seen as a form of parameter tying on the linear weights v'vm, which allows statistical strength to be shared across training instances.Page 3, “Model”

See all papers in Proc. ACL 2014 that mention shift-reduce.

See all papers in Proc. ACL that mention shift-reduce.

Back to top.

EDUs

- 2After applying a reduce operation, the stack will include a span that contains multiple EDUs .Page 2, “Model”

- where A 6 1Rwa is projects the surface representation v of three EDUs into a latent space of size K < V.Page 3, “Model”

- In this form, we transform the representation of each EDU separately, but do not attempt to represent interrelationships between the EDUs in the latent space.Page 3, “Model”

- In the difference form, we explicitly represent the difi‘erences between adjacent EDUs , by constructing A as a block difference matrix,Page 3, “Model”

- The result of this projection is that the latent representation has the form [C(v1 —v2); C (v1 — V3)] , representing the difference between the top two EDUs on the stack, and between the top EDU on the stack and the first EDU in the queue.Page 3, “Model”

- This is intended to capture semantic similarity, so that reductions between related EDUs will be preferred.Page 3, “Model”

- These templates are applied to individual EDUs, as well as pairs of EDUs: (1) the two EDUs on top of the stack, and (2) the EDU on top of the stack and the EDU in front of the queue.Page 5, “Implementation”

- Distance between EDUsPage 6, “Experiments”

- This suggests that using the projection matrix to model interrelationships between EDUs does not substantially improve performance, and the simpler concatenation construction may be preferred.Page 7, “Experiments”

- Using the vector-space representation of EDUs , our shift-reduce parsing system substantially outperforms existing systems on nuclearity detection and discourse relation identification.Page 9, “Conclusion”

See all papers in Proc. ACL 2014 that mention EDUs.

See all papers in Proc. ACL that mention EDUs.

Back to top.

SVM

- As we will see, it is possible to learn {Wm} using standard support vector machine ( SVM ) training (holding A fixed), and then make a simple gradient-based update to A (holding {Wm} fixed).Page 3, “Large-Margin Learning Framework”

- As is standard in the multi-class linear SVM (Crammer and Singer, 2001), we can solve the problem defined in Equation 6 via Lagrangian optimization:Page 4, “Large-Margin Learning Framework”

- If A is fixed, then the optimization problem is equivalent to a standard multi-class SVM , in the transformed feature space f (vi; A).Page 4, “Large-Margin Learning Framework”

- We can obtain the weights {who} and dual variables {7711,10} from a standard dual-form SVM solver.Page 4, “Large-Margin Learning Framework”

- This iterative procedure is similar to the latent variable structural SVM (Yu and J oachims, 2009), although the specific detailsPage 4, “Large-Margin Learning Framework”

- Randomly choose a subset of training samples 1),; from D Train SVM with At_1 to obtain {W52} and {77%} Update At using Equation 11 with at = % if W < 5 then Return end if end while Retrain SVM with D and the final A Output: Projection matrix A, SVM classifier with weights wPage 5, “Large-Margin Learning Framework”

- Solving the quadratic programming defined by the dual form of the SVM is time-consuming, especially on a large-scale dataset.Page 5, “Large-Margin Learning Framework”

- This idea is similar to the mini-batch learning, which has been used in large-scale SVM problem (Nelakanti et al., 2013) and deep learning models (Le et al., 2011).Page 5, “Large-Margin Learning Framework”

- In iteration t, we choose at = After convergence, we obtain the weights w by applying the SVM over the entire dataset, using the final A.Page 5, “Large-Margin Learning Framework”

- While minibatch learning requires more iterations, the SVM training is much faster in each batch, and the overall algorithm is several times faster than using the entire training set for each update.Page 5, “Large-Margin Learning Framework”

See all papers in Proc. ACL 2014 that mention SVM.

See all papers in Proc. ACL that mention SVM.

Back to top.

Treebank

- The resulting shift-reduce discourse parser obtains substantial improvements over the previous state-of-the-art in predicting relations and nuclearity on the RST Treebank .Page 1, “Abstract”

- Unfortunately, the performance of discourse parsing is still relatively weak: the state-of-the-art F—measure for text-level relation detection in the RST Treebank is only slightly above 55% (JotyPage 1, “Introduction”

- In addition, we show that the latent representation coheres well with the characterization of discourse connectives in the Penn Discourse Treebank (Prasad et al., 2008).Page 2, “Introduction”

- (2010) show that there is a long tail of alternative lexicalizations for discourse relations in the Penn Discourse Treebank , posing obvious challenges for approaches based on directly matching lexical features observed in the training data.Page 2, “Model”

- We apply transition-based (incremental) structured prediction to obtain a discourse parse, training a predictor to make the correct incremental moves to match the annotations of training data in the RST Treebank .Page 2, “Model”

- We consider the values K E {30,60,90, 150}, A E {1,10,50, 100} and 7' E {1.0, 0.1, 0.01, 0.001}, and search over this space using a development set of thirty document randomly selected from within the RST Treebank training data.Page 5, “Implementation”

- We evaluate DPLP on the RST Discourse Treebank (Carlson et al., 2001), comparing against state-of-the-art results.Page 5, “Experiments”

- Dataset The RST Discourse Treebank (RST-DT) consists of 385 documents, with 347 for train-Page 5, “Experiments”

- (2009) in the context of the Penn Discourse Treebank (Prasad et al., 2008).Page 8, “Related Work”

See all papers in Proc. ACL 2014 that mention Treebank.

See all papers in Proc. ACL that mention Treebank.

Back to top.

discourse structure

- Discourse structure describes the high-level organization of text or speech.Page 1, “Introduction”

- Figure 1: An example of RST discourse structure .Page 1, “Introduction”

- Based on this observation, our goal is to learn a function that transforms lexical features into a much lower-dimensional latent representation, while simultaneously learning to predict discourse structure based on this latent representation.Page 2, “Model”

- We cannot compare with the results of Feng and Hirst (2012), because they do not evaluate on the overall discourse structure , but rather treat each relation as an indiVidual classification problem.Page 6, “Experiments”

- Prior learning-based work has largely focused on lexical, syntactic, and structural features, but the close relationship between discourse structure and semantics (Forbes-Riley et al., 2006) suggests that shallow feature sets may struggle to capture the long tail of alternative lexicalizations that can be used to realize discourse relations (Prasad et al., 2010; Marcu and Echihabi, 2002).Page 8, “Related Work”

- In this work, we show how discourse structure annotations can function as a superVision signal to discriminatively learn a transformation from lexical features to a latent space that is well-suited for discourse parsing.Page 9, “Related Work”

See all papers in Proc. ACL 2014 that mention discourse structure.

See all papers in Proc. ACL that mention discourse structure.

Back to top.

word embeddings

- Another way to construct B is to use neural word embeddings (Collobert and Weston, 2008).Page 6, “Experiments”

- In this case, we can view the product Bv as a composition of the word embeddings , using the simple additive composition model proposed by MitchellPage 6, “Experiments”

- We used the word embeddings from Collobert and Weston (2008) with dimension {25, 50, 100}.Page 6, “Experiments”

- Grid search over heldout training data was used to select the optimum latent dimension for both the NMF and word embedding baselines.Page 6, “Experiments”

- Word embeddings 6.Page 7, “Experiments”

- Lines 5 and 6 show that discriminative learning of the projection matrix is crucial, as fixed projections obtained from NMF or neural word embeddings perform substantially worse.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention word embeddings.

See all papers in Proc. ACL that mention word embeddings.

Back to top.

embeddings

- Another way to construct B is to use neural word embeddings (Collobert and Weston, 2008).Page 6, “Experiments”

- In this case, we can view the product Bv as a composition of the word embeddings , using the simple additive composition model proposed by MitchellPage 6, “Experiments”

- We used the word embeddings from Collobert and Weston (2008) with dimension {25, 50, 100}.Page 6, “Experiments”

- Word embeddings 6.Page 7, “Experiments”

- Lines 5 and 6 show that discriminative learning of the projection matrix is crucial, as fixed projections obtained from NMF or neural word embeddings perform substantially worse.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention embeddings.

See all papers in Proc. ACL that mention embeddings.

Back to top.

learning algorithm

- Alternatively, our approach can be seen as a nonlinear learning algorithm for incremental structure prediction, which overcomes feature sparsity through effective parameter tying.Page 1, “Introduction”

- of our learning algorithm are different.Page 4, “Large-Margin Learning Framework”

- Algorithm 1 Mini-batch learning algorithm Input: Training set D, Regularization parameters A and 7', Number of iteration T, Initialization matrix A0, and Threshold 5 whilet = l,...,TdoPage 5, “Large-Margin Learning Framework”

- The learning algorithm is applied in a shift-reduce parser, where the training data consists of the (unique) list of shift and reduce operations required to produce the gold RST parses.Page 5, “Implementation”

See all papers in Proc. ACL 2014 that mention learning algorithm.

See all papers in Proc. ACL that mention learning algorithm.

Back to top.

support vector

- As we will see, it is possible to learn {Wm} using standard support vector machine (SVM) training (holding A fixed), and then make a simple gradient-based update to A (holding {Wm} fixed).Page 3, “Large-Margin Learning Framework”

- One property of the dual variables is that f (vi; A) is a support vector only if the dual variable nwi < 1.Page 4, “Large-Margin Learning Framework”

- In other words, the contribution from non support vectors to the projection matrix A is 0.Page 4, “Large-Margin Learning Framework”

- This is computationally advantageous since many instances are not support vectors , and it shows that the discriminatively-trained projection matrix only incorporates information from each instance to the extent that the correct classification receives low confidence.Page 4, “Large-Margin Learning Framework”

See all papers in Proc. ACL 2014 that mention support vector.

See all papers in Proc. ACL that mention support vector.

Back to top.

Deep Learning

- This idea is similar to the mini-batch learning, which has been used in large-scale SVM problem (Nelakanti et al., 2013) and deep learning models (Le et al., 2011).Page 5, “Large-Margin Learning Framework”

- Also called Deep Learning , such approaches have recently been applied in a number of NLP tasks (Collobert et al., 2011; Socher et al., 2012).Page 9, “Related Work”

- Deep learning approaches typically apply a nonlinear transformation such as the sigmoid function (Bengio et al., 2013).Page 9, “Conclusion”

See all papers in Proc. ACL 2014 that mention Deep Learning.

See all papers in Proc. ACL that mention Deep Learning.

Back to top.

lexicalizations

- While recent work has introduced increasingly powerful features (Feng and Hirst, 2012) and inference techniques (J oty et al., 2013), discourse relations remain hard to detect, due in part to a long tail of “alternative lexicalizations” that can be used to realize each relation (Prasad et al., 2010).Page 1, “Introduction”

- (2010) show that there is a long tail of alternative lexicalizations for discourse relations in the Penn Discourse Treebank, posing obvious challenges for approaches based on directly matching lexical features observed in the training data.Page 2, “Model”

- Prior learning-based work has largely focused on lexical, syntactic, and structural features, but the close relationship between discourse structure and semantics (Forbes-Riley et al., 2006) suggests that shallow feature sets may struggle to capture the long tail of alternative lexicalizations that can be used to realize discourse relations (Prasad et al., 2010; Marcu and Echihabi, 2002).Page 8, “Related Work”

See all papers in Proc. ACL 2014 that mention lexicalizations.

See all papers in Proc. ACL that mention lexicalizations.

Back to top.

POS tag

- While such feature learning approaches have proven to increase robustness for parsing, POS tagging , and NER (Miller et al., 2004; Koo et al., 2008; Turian et al., 2010), they would seem to have an especially promising role for discourse, where training data is relatively sparse and ambiguity is considerable.Page 2, “Model”

- The dependency structure and POS tags are obtained from MALT-Parser (Nivre et al., 2007).Page 5, “Implementation”

- POS tag at beginning and end of the EDUPage 6, “Experiments”

See all papers in Proc. ACL 2014 that mention POS tag.

See all papers in Proc. ACL that mention POS tag.

Back to top.

unigrams

- The vocabulary V includes all unigrams after down-casing.Page 6, “Experiments”

- In total, there are 16250 unique unigrams in V.Page 6, “Experiments”

- fication for visualization is we consider only the top 1000 frequent unigrams in the RST—DT training set.Page 8, “Experiments”

See all papers in Proc. ACL 2014 that mention unigrams.

See all papers in Proc. ACL that mention unigrams.

Back to top.