Article Structure

Abstract

The ability to accurately represent sentences is central to language understanding.

Introduction

The aim of a sentence model is to analyse and represent the semantic content of a sentence for purposes of classification or generation.

Background

The layers of the DCNN are formed by a convolution operation followed by a pooling operation.

Convolutional Neural Networks with Dynamic k-Max Pooling

We model sentences using a convolutional architecture that alternates wide convolutional layers

Properties of the Sentence Model

We describe some of the properties of the sentence model based on the DCNN.

Experiments

We test the network on four different experiments.

Conclusion

We have described a dynamic convolutional neural network that uses the dynamic k-max pooling operator as a nonlinear subsampling function.

Topics

Neural Network

- We describe a convolutional architecture dubbed the Dynamic Convolutional Neural Network (DCNN) that we adopt for the semantic modelling of sentences.Page 1, “Abstract”

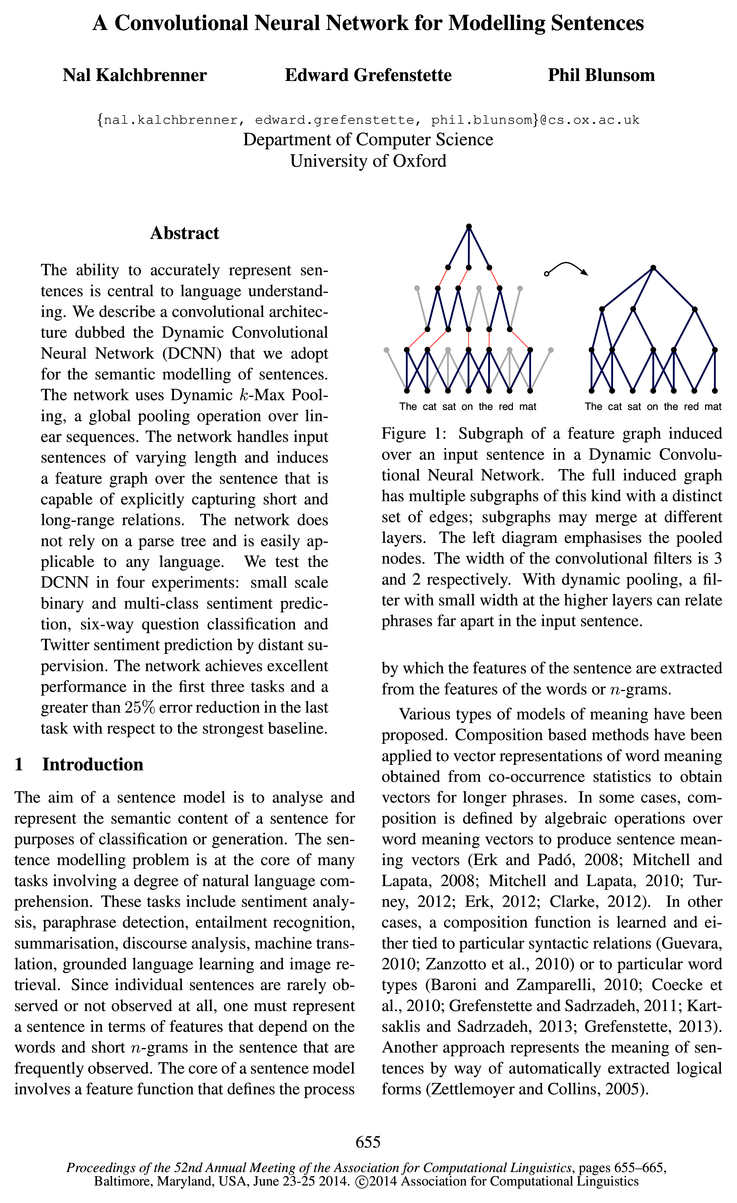

- Figure 1: Subgraph of a feature graph induced over an input sentence in a Dynamic Convolutional Neural Network .Page 1, “Introduction”

- A central class of models are those based on neural networks .Page 2, “Introduction”

- These range from basic neural bag-of-words or bag-of-n-grams models to the more structured recursive neural networks and to time-delay neural networks based on convolutional operations (Collobert and Weston, 2008; Socher et al., 2011; Kalchbrenner and Blunsom, 2013b).Page 2, “Introduction”

- We define a convolutional neural network architecture and apply it to the semantic modelling of sentences.Page 2, “Introduction”

- The resulting architecture is dubbed a Dynamic Convolutional Neural Network .Page 2, “Introduction”

- The structure is not tied to purely syntactic relations and is internal to the neural network .Page 2, “Introduction”

- Then we describe the operation of one-dimensional convolution and the classical Time-Delay Neural Network (TDNN) (Hinton, 1989; Waibel et al., 1990).Page 2, “Background”

- A model that adopts a more general structure provided by an external parse tree is the Recursive Neural Network (RecNN) (Pollack, 1990; Kiichler and Goller, 1996; Socher et al., 2011; Hermann and Blunsom, 2013).Page 3, “Background”

- The Recurrent Neural Network (RNN) is a special case of the recursive network where the structure that is followed is a simple linear chain (Gers and Schmidhuber, 2001; Mikolov et al., 2011).Page 3, “Background”

- 2.3 Time-Delay Neural NetworksPage 3, “Background”

See all papers in Proc. ACL 2014 that mention Neural Network.

See all papers in Proc. ACL that mention Neural Network.

Back to top.

n-grams

- Since individual sentences are rarely observed or not observed at all, one must represent a sentence in terms of features that depend on the words and short n-grams in the sentence that are frequently observed.Page 1, “Introduction”

- by which the features of the sentence are extracted from the features of the words or n-grams .Page 1, “Introduction”

- These generally consist of a projection layer that maps words, sub-word units or n-grams to high dimensional embeddings; the latter are then combined component-wise with an operation such as summation.Page 3, “Background”

- The trained weights in the filter m correspond to a linguistic feature detector that learns to recognise a specific class of n-grams .Page 3, “Background”

- These n-grams have size n g m, where m is the width of the filter.Page 3, “Background”

- The filters m of the wide convolution in the first layer can learn to recognise specific n-grams that have size less or equal to the filter width m; as we see in the experiments, m in the first layer is often set to a relatively large valuePage 6, “Properties of the Sentence Model”

- The subsequence of n-grams extracted by the generalised pooling operation induces in-variance to absolute positions, but maintains their order and relative positions.Page 6, “Properties of the Sentence Model”

- This gives the RNN excellent performance at language modelling, but it is suboptimal for remembering at once the n-grams further back in the input sentence.Page 6, “Properties of the Sentence Model”

- tures based on long n-grams and to hierarchically combine these features is highly beneficial.Page 9, “Experiments”

- In the first layer, the sequence is a continuous n-gram from the input sentence; in higher layers, sequences can be made of multiple separate n-grams .Page 9, “Experiments”

- The feature detectors learn to recognise not just single n-grams, but patterns within n-grams that have syntactic, semantic or structural significance.Page 9, “Experiments”

See all papers in Proc. ACL 2014 that mention n-grams.

See all papers in Proc. ACL that mention n-grams.

Back to top.

unigram

- On the hand-labelled test set, the network achieves a greater than 25% reduction in the prediction error with respect to the strongest unigram and bigram baseline reported in Go et al.Page 2, “Introduction”

- The baselines NB and BINB are Naive Bayes classifiers with, respectively, unigram features and unigram and bigram features.Page 7, “Experiments”

- SVM is a support vector machine with unigram and bigram features.Page 7, “Experiments”

- unigram , POS, head chunks 91.0Page 8, “Experiments”

- unigram , bigram, trigram 92.6 MAXENT POS, chunks, NE, supertagsPage 8, “Experiments”

- unigram , bigram, trigram 93.6 MAxENT POS, wh-word, head wordPage 8, “Experiments”

- unigram , POS, wh-word 95.0Page 8, “Experiments”

- The three non-neural classifiers are based on unigram and bigram features; the results are reported from (Go et al., 2009).Page 8, “Experiments”

- (2006) present a Maximum Entropy model that relies on 26 sets of syntactic and semantic features including unigrams , bigrams, trigrams, POS tags, named entity tags, structural relations from a CCG parse and WordNet synsets.Page 8, “Experiments”

See all papers in Proc. ACL 2014 that mention unigram.

See all papers in Proc. ACL that mention unigram.

Back to top.

n-gram

- Convolving the same filter with the n-gram at every position in the sentence allows the features to be extracted independently of their position in the sentence.Page 2, “Introduction”

- 4.1 Word and n-Gram OrderPage 6, “Properties of the Sentence Model”

- For most applications and in order to learn fine-grained feature detectors, it is beneficial for a model to be able to discriminate whether a specific n-gram occurs in the input.Page 6, “Properties of the Sentence Model”

- 2.3, the Max-TDNN is sensitive to word order, but max pooling only picks out a single n-gram feature in each row of the sentence matrix.Page 6, “Properties of the Sentence Model”

- The NBoW performs similarly to the non-neural n-gram based classifiers.Page 8, “Experiments”

- Besides the RecNN that uses an external parser to produce structural features for the model, the other models use n-gram based or neural features that do not require external resources or additional annotations.Page 8, “Experiments”

- We see a significant increase in the performance of the DCNN with respect to the non-neural n-gram based classifiers; in the presence of large amounts of training data these classifiers constitute particularly strong baselines.Page 9, “Experiments”

- In the first layer, the sequence is a continuous n-gram from the input sentence; in higher layers, sequences can be made of multiple separate n-grams.Page 9, “Experiments”

See all papers in Proc. ACL 2014 that mention n-gram.

See all papers in Proc. ACL that mention n-gram.

Back to top.

parse tree

- The network does not rely on a parse tree and is easily applicable to any language.Page 1, “Abstract”

- The feature graph induces a hierarchical structure somewhat akin to that in a syntactic parse tree .Page 2, “Introduction”

- A model that adopts a more general structure provided by an external parse tree is the Recursive Neural Network (RecNN) (Pollack, 1990; Kiichler and Goller, 1996; Socher et al., 2011; Hermann and Blunsom, 2013).Page 3, “Background”

- It is sensitive to the order of the words in the sentence and it does not depend on external language-specific features such as dependency or constituency parse trees .Page 4, “Background”

- The recursive neural network follows the structure of an external parse tree .Page 7, “Properties of the Sentence Model”

- Likewise, the induced graph structure in a DCNN is more general than a parse tree in that it is not limited to syntactically dictated phrases; the graph structure can capture short or long-range semantic relations between words that do not necessarily correspond to the syntactic relations in a parse tree .Page 7, “Properties of the Sentence Model”

- The DCNN has internal input-dependent structure and does not rely on externally provided parse trees , which makes the DCNN directly applicable to hard-to-parse sentences such as tweets and to sentences from any language.Page 7, “Properties of the Sentence Model”

- RECNTN is a recursive neural network with a tensor-based feature function, which relies on external structural features given by a parse tree and performs best among the RecNNs.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention parse tree.

See all papers in Proc. ACL that mention parse tree.

Back to top.

bigram

- On the hand-labelled test set, the network achieves a greater than 25% reduction in the prediction error with respect to the strongest unigram and bigram baseline reported in Go et al.Page 2, “Introduction”

- The baselines NB and BINB are Naive Bayes classifiers with, respectively, unigram features and unigram and bigram features.Page 7, “Experiments”

- SVM is a support vector machine with unigram and bigram features.Page 7, “Experiments”

- unigram, bigram , trigram 92.6 MAXENT POS, chunks, NE, supertagsPage 8, “Experiments”

- unigram, bigram , trigram 93.6 MAxENT POS, wh-word, head wordPage 8, “Experiments”

- The three non-neural classifiers are based on unigram and bigram features; the results are reported from (Go et al., 2009).Page 8, “Experiments”

- (2006) present a Maximum Entropy model that relies on 26 sets of syntactic and semantic features including unigrams, bigrams , trigrams, POS tags, named entity tags, structural relations from a CCG parse and WordNet synsets.Page 8, “Experiments”

See all papers in Proc. ACL 2014 that mention bigram.

See all papers in Proc. ACL that mention bigram.

Back to top.

embeddings

- These generally consist of a projection layer that maps words, sub-word units or n-grams to high dimensional embeddings ; the latter are then combined component-wise with an operation such as summation.Page 3, “Background”

- Word embeddings have size d = 4.Page 4, “Convolutional Neural Networks with Dynamic k-Max Pooling”

- The values in the embeddings wi are parameters that are op-timised during training.Page 4, “Convolutional Neural Networks with Dynamic k-Max Pooling”

- The set of parameters comprises the word embeddings , the filter weights and the weights from the fully connected layers.Page 7, “Experiments”

- As the dataset is rather small, we use lower-dimensional word vectors with d = 32 that are initialised with embeddings trained in an unsupervised way to predict contexts of occurrence (Turian et al., 2010).Page 9, “Experiments”

- The randomly initialised word embeddings are increased in length to a dimension of d = 60.Page 9, “Experiments”

See all papers in Proc. ACL 2014 that mention embeddings.

See all papers in Proc. ACL that mention embeddings.

Back to top.

recursive

- These range from basic neural bag-of-words or bag-of-n-grams models to the more structured recursive neural networks and to time-delay neural networks based on convolutional operations (Collobert and Weston, 2008; Socher et al., 2011; Kalchbrenner and Blunsom, 2013b).Page 2, “Introduction”

- A model that adopts a more general structure provided by an external parse tree is the Recursive Neural Network (RecNN) (Pollack, 1990; Kiichler and Goller, 1996; Socher et al., 2011; Hermann and Blunsom, 2013).Page 3, “Background”

- The Recurrent Neural Network (RNN) is a special case of the recursive network where the structure that is followed is a simple linear chain (Gers and Schmidhuber, 2001; Mikolov et al., 2011).Page 3, “Background”

- Similarly, a recursive neural network is sensitive to word order but has a bias towards the topmost nodes in the tree; shallower trees mitigate this effect to some extent (Socher et al., 2013a).Page 6, “Properties of the Sentence Model”

- The recursive neural network follows the structure of an external parse tree.Page 7, “Properties of the Sentence Model”

- RECNTN is a recursive neural network with a tensor-based feature function, which relies on external structural features given by a parse tree and performs best among the RecNNs.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention recursive.

See all papers in Proc. ACL that mention recursive.

Back to top.

recursive neural

- These range from basic neural bag-of-words or bag-of-n-grams models to the more structured recursive neural networks and to time-delay neural networks based on convolutional operations (Collobert and Weston, 2008; Socher et al., 2011; Kalchbrenner and Blunsom, 2013b).Page 2, “Introduction”

- A model that adopts a more general structure provided by an external parse tree is the Recursive Neural Network (RecNN) (Pollack, 1990; Kiichler and Goller, 1996; Socher et al., 2011; Hermann and Blunsom, 2013).Page 3, “Background”

- Similarly, a recursive neural network is sensitive to word order but has a bias towards the topmost nodes in the tree; shallower trees mitigate this effect to some extent (Socher et al., 2013a).Page 6, “Properties of the Sentence Model”

- The recursive neural network follows the structure of an external parse tree.Page 7, “Properties of the Sentence Model”

- RECNTN is a recursive neural network with a tensor-based feature function, which relies on external structural features given by a parse tree and performs best among the RecNNs.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention recursive neural.

See all papers in Proc. ACL that mention recursive neural.

Back to top.

recursive neural network

- These range from basic neural bag-of-words or bag-of-n-grams models to the more structured recursive neural networks and to time-delay neural networks based on convolutional operations (Collobert and Weston, 2008; Socher et al., 2011; Kalchbrenner and Blunsom, 2013b).Page 2, “Introduction”

- A model that adopts a more general structure provided by an external parse tree is the Recursive Neural Network (RecNN) (Pollack, 1990; Kiichler and Goller, 1996; Socher et al., 2011; Hermann and Blunsom, 2013).Page 3, “Background”

- Similarly, a recursive neural network is sensitive to word order but has a bias towards the topmost nodes in the tree; shallower trees mitigate this effect to some extent (Socher et al., 2013a).Page 6, “Properties of the Sentence Model”

- The recursive neural network follows the structure of an external parse tree.Page 7, “Properties of the Sentence Model”

- RECNTN is a recursive neural network with a tensor-based feature function, which relies on external structural features given by a parse tree and performs best among the RecNNs.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention recursive neural network.

See all papers in Proc. ACL that mention recursive neural network.

Back to top.

WordNet

- CCG parser, WordNetPage 8, “Experiments”

- hypernyms, WordNetPage 8, “Experiments”

- head word, parser SVM hypernyms, WordNetPage 8, “Experiments”

- (2006) present a Maximum Entropy model that relies on 26 sets of syntactic and semantic features including unigrams, bigrams, trigrams, POS tags, named entity tags, structural relations from a CCG parse and WordNet synsets.Page 8, “Experiments”

See all papers in Proc. ACL 2014 that mention WordNet.

See all papers in Proc. ACL that mention WordNet.

Back to top.

word order

- As regards the other neural sentence models, the class of NBoW models is by definition insensitive to word order .Page 6, “Properties of the Sentence Model”

- A sentence model based on a recurrent neural network is sensitive to word order , but it has a bias towards the latest words that it takes as input (Mikolov et al., 2011).Page 6, “Properties of the Sentence Model”

- Similarly, a recursive neural network is sensitive to word order but has a bias towards the topmost nodes in the tree; shallower trees mitigate this effect to some extent (Socher et al., 2013a).Page 6, “Properties of the Sentence Model”

- 2.3, the Max-TDNN is sensitive to word order , but max pooling only picks out a single n-gram feature in each row of the sentence matrix.Page 6, “Properties of the Sentence Model”

See all papers in Proc. ACL 2014 that mention word order.

See all papers in Proc. ACL that mention word order.

Back to top.

SVM

- NB 41.0 81.8 BINB 41.9 83.1 SVM 40.7 79.4 REcNTN 45.7 85.4 MAX-TDNN 37.4 77.1 NBOW 42.4 80.5 DCNN 48.5 86.8Page 7, “Experiments”

- SVM is a support vector machine with unigram and bigram features.Page 7, “Experiments”

- head word, parser SVM hypernyms, WordNetPage 8, “Experiments”

- SVM 81.6 BINB 82.7 MAXENT 83.0 MAX-TDNN 78.8 NBOW 80.9 DCNN 87.4Page 8, “Experiments”

See all papers in Proc. ACL 2014 that mention SVM.

See all papers in Proc. ACL that mention SVM.

Back to top.

fine-grained

- For most applications and in order to learn fine-grained feature detectors, it is beneficial for a model to be able to discriminate whether a specific n-gram occurs in the input.Page 6, “Properties of the Sentence Model”

- Classifier Fine-grained (%) Binary (%)Page 7, “Experiments”

- Likewise, in the fine-grained case, we use the standard 8544/1101/2210 splits.Page 7, “Experiments”

- The DCNN for the fine-grained result has the same architecture, but the filters have size 10 and 7, the top pooling parameter k is 5 and the number of maps is, respectively, 6 and 12.Page 8, “Experiments”

See all papers in Proc. ACL 2014 that mention fine-grained.

See all papers in Proc. ACL that mention fine-grained.

Back to top.

semantic relations

- Small filters at higher layers can capture syntactic or semantic relations between noncontinuous phrases that are far apart in the input sentence.Page 2, “Introduction”

- Likewise, the induced graph structure in a DCNN is more general than a parse tree in that it is not limited to syntactically dictated phrases; the graph structure can capture short or long-range semantic relations between words that do not necessarily correspond to the syntactic relations in a parse tree.Page 7, “Properties of the Sentence Model”

- HIER NE, semantic relationsPage 8, “Experiments”

See all papers in Proc. ACL 2014 that mention semantic relations.

See all papers in Proc. ACL that mention semantic relations.

Back to top.

MAXENT

- unigram, bigram, trigram 92.6 MAXENT POS, chunks, NE, supertagsPage 8, “Experiments”

- unigram, bigram, trigram 93.6 MAxENT POS, wh-word, head wordPage 8, “Experiments”

- SVM 81.6 BINB 82.7 MAXENT 83.0 MAX-TDNN 78.8 NBOW 80.9 DCNN 87.4Page 8, “Experiments”

See all papers in Proc. ACL 2014 that mention MAXENT.

See all papers in Proc. ACL that mention MAXENT.

Back to top.

language model

- Besides comprising powerful classifiers as part of their architecture, neural sentence models can be used to condition a neural language model to generate sentences word by word (Schwenk, 2012; Mikolov and Zweig, 2012; Kalchbrenner and Blunsom, 2013a).Page 2, “Introduction”

- The RNN is primarily used as a language model , but may also be viewed as a sentence model with a linear structure.Page 3, “Background”

- This gives the RNN excellent performance at language modelling , but it is suboptimal for remembering at once the n-grams further back in the input sentence.Page 6, “Properties of the Sentence Model”

See all papers in Proc. ACL 2014 that mention language model.

See all papers in Proc. ACL that mention language model.

Back to top.

word embeddings

- Word embeddings have size d = 4.Page 4, “Convolutional Neural Networks with Dynamic k-Max Pooling”

- The set of parameters comprises the word embeddings , the filter weights and the weights from the fully connected layers.Page 7, “Experiments”

- The randomly initialised word embeddings are increased in length to a dimension of d = 60.Page 9, “Experiments”

See all papers in Proc. ACL 2014 that mention word embeddings.

See all papers in Proc. ACL that mention word embeddings.

Back to top.

distant supervision

- We test the DCNN in four experiments: small scale binary and multi-class sentiment prediction, six-way question classification and Twitter sentiment prediction by distant supervision .Page 1, “Abstract”

- The fourth experiment involves predicting the sentiment of Twitter posts using distant supervision (Go et al., 2009).Page 2, “Introduction”

- 5.4 TWitter Sentiment Prediction with Distant SupervisionPage 9, “Experiments”

See all papers in Proc. ACL 2014 that mention distant supervision.

See all papers in Proc. ACL that mention distant supervision.

Back to top.