Article Structure

Abstract

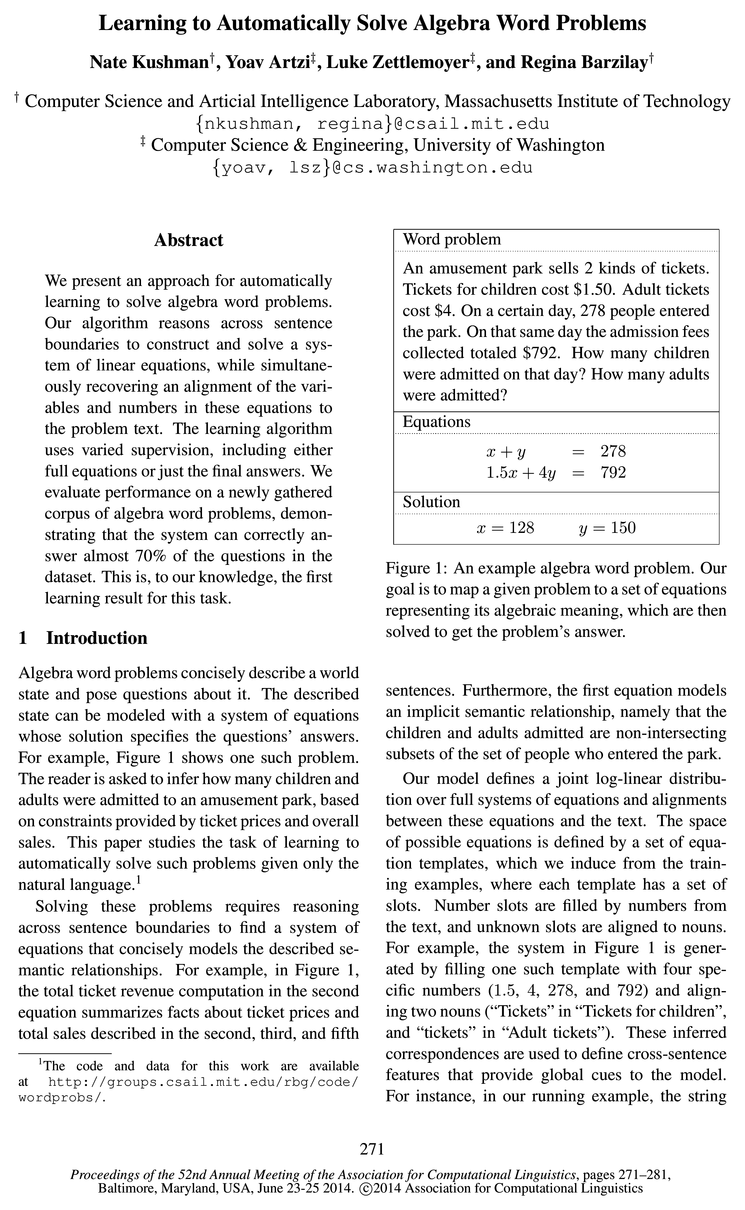

We present an approach for automatically learning to solve algebra word problems.

Introduction

Algebra word problems concisely describe a world state and pose questions about it.

Related Work

Our work is related to three main areas of research: situated semantic interpretation, information extraction, and automatic word problem solvers.

Mapping Word Problems to Equations

We define a two-step process to map word problems to equations.
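The excerpt does not spell out the two steps. The sketch below assumes, hypothetically, that one step instantiates a system template by filling its number slots with numbers taken from the problem text, and the other solves the resulting linear system. The template, the slot names `a` to `d`, and both helper functions are illustrative and are not the paper's code.

```python
from fractions import Fraction

# Hypothetical template: a*x + b*y = c ; x + y = d  (number slots a, b, c, d).
# Each equation is (coefficients over the unknowns, right-hand side).
TEMPLATE = [
    ({"x": "a", "y": "b"}, "c"),
    ({"x": 1, "y": 1}, "d"),
]

def instantiate(template, slot_values):
    """Replace slot names with numbers aligned from the text (sketch)."""
    def fill(v):
        # Strings are slot names; anything else is a fixed coefficient.
        return Fraction(slot_values[v]) if isinstance(v, str) else Fraction(v)
    return [({var: fill(c) for var, c in coeffs.items()}, fill(rhs))
            for coeffs, rhs in template]

def solve_2x2(system):
    """Solve a 2x2 linear system exactly with Cramer's rule."""
    (c1, r1), (c2, r2) = system
    a1, b1 = c1["x"], c1["y"]
    a2, b2 = c2["x"], c2["y"]
    det = a1 * b2 - a2 * b1
    if det == 0:
        raise ValueError("system has no unique solution")
    return {"x": (r1 * b2 - r2 * b1) / det, "y": (a1 * r2 - a2 * r1) / det}
```

For a problem such as "adult tickets cost 5 dollars, child tickets cost 3 dollars, and 10 tickets sold for 38 dollars", filling `a=5, b=3, c=38, d=10` gives 4 adult and 6 child tickets. Exact fractions avoid floating-point noise when comparing solutions with reference answers.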

Learning

To learn our model, we need to induce the structure of system templates in 𝒯 and estimate the model parameters θ.
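One of the excerpts below says labeled equations are "abstracted to provide the model's equation templates." A minimal sketch of that abstraction, assuming equations are plain strings and that every numeric literal becomes a slot, could look like this. The slot naming scheme `n1, n2, ...` and both function names are hypothetical.

```python
import re

# A number not preceded by a letter, underscore, or digit (so "x12" is left alone).
NUMBER = re.compile(r"(?<![A-Za-z_\d])\d+(?:\.\d+)?")

def abstract_system(equations):
    """Replace every numeric literal with a slot name n1, n2, ... (sketch)."""
    counter = 0
    slots = {}

    def to_slot(match):
        nonlocal counter
        counter += 1
        name = f"n{counter}"
        slots[name] = float(match.group())
        return name

    template = tuple(NUMBER.sub(to_slot, eq) for eq in equations)
    return template, slots

def induce_templates(labeled_systems):
    """Map each distinct template in a labeled corpus to an integer id."""
    seen = {}
    for system in labeled_systems:
        template, _ = abstract_system(system)
        seen.setdefault(template, len(seen))
    return seen
```

Because this matches templates purely by string, systems that differ only in term or equation order would still yield distinct templates; that gap is what template canonicalization is meant to close.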

Inference

Computing the normalization constant for Equation 1 requires summing over all templates and all possible ways to instantiate them.
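To see why this sum is expensive, assume (the excerpt does not show Equation 1) that it is a log-linear distribution, so its normalization constant is Z(x) = Σ over derivations y of exp(θ · φ(x, y)). The sketch below computes log Z exactly by enumerating every template and every way to fill its number slots, where each extracted number fills at most one slot. The encoding of templates as slot counts and the `score` callback are hypothetical simplifications.

```python
import itertools
import math

def log_partition(templates, numbers, score):
    """Exact log Z over all templates and all slot fillings (sketch).

    templates: dict template_id -> number of number slots (hypothetical encoding)
    numbers:   numbers extracted from the problem text
    score:     function (template_id, filling) -> real score, e.g. theta . phi
    """
    scores = [
        score(tid, filling)
        for tid, n_slots in templates.items()
        # each number fills at most one slot: ordered selections without reuse
        for filling in itertools.permutations(numbers, n_slots)
    ]
    if not scores:
        raise ValueError("no template can be filled from these numbers")
    m = max(scores)
    # log-sum-exp: subtract the maximum before exponentiating for stability
    return m + math.log(sum(math.exp(s - m) for s in scores))
```

With 4 numbers, a 2-slot template already has 12 fillings and a 3-slot template has 24; aligning nouns as well multiplies these counts further, which is why an approximate search is used instead.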

Model Details

Template Canonicalization. There are many syntactically different but semantically equivalent ways to express a given system of equations.
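As a simplified illustration of the idea, and not the paper's procedure (which works on templates with slot coefficients), the sketch below canonicalizes linear equations with numeric coefficients. It moves all terms to one side, drops zero terms, sorts by variable, scales so the first coefficient is 1, and then sorts the equations, so reordered, flipped, or rescaled systems map to the same key. The term encoding and function names are hypothetical.

```python
from fractions import Fraction

def canonical_equation(lhs, rhs):
    """Canonical form of sum(lhs) = sum(rhs) for linear terms (sketch).

    lhs, rhs: lists of (coefficient, variable) pairs; variable None is a constant.
    Returns sorted (variable, coefficient) pairs of sum(c_v * v) + c_0 = 0,
    scaled so that the first coefficient equals 1.
    """
    coeffs = {}
    for sign, side in ((1, lhs), (-1, rhs)):
        for coef, var in side:
            key = "" if var is None else var  # "" (constant) sorts first
            coeffs[key] = coeffs.get(key, Fraction(0)) + sign * Fraction(coef)
    items = sorted((k, c) for k, c in coeffs.items() if c != 0)
    if not items:
        raise ValueError("equation reduces to 0 = 0")
    lead = items[0][1]
    return tuple((k, c / lead) for k, c in items)

def canonical_system(equations):
    """Order-independent canonical form of a list of (lhs, rhs) equations."""
    return tuple(sorted(canonical_equation(l, r) for l, r in equations))
```

For example, `5x + 3y = 38, x + y = 10` and `y + x = 10, 38 = 3y + 5x` produce the same canonical key, as does replacing `x + y = 10` with `2x + 2y = 20`.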

Experimental Setup

Dataset. We collected a new dataset of algebra word problems from Algebra.com, a crowd-sourced tutoring website.

Results

8.1 Impact of Supervision

Conclusion

We presented an approach for automatically learning to solve algebra word problems.

Topics

natural language

- Situated Semantic Interpretation. There is a large body of research on learning to map natural language to formal meaning representations, given varied forms of supervision. (Page 2, “Related Work”)

- This allows for a tighter mapping between the natural language and the system template, where the words aligned to the first equation in the template come from the first two sentences, and the words aligned to the second equation come from the third. (Page 3, “Mapping Word Problems to Equations”)

- Document-level features. Often the natural language in x will contain words or phrases which are indicative of a certain template but are not associated with any of the words aligned to slots in the template. (Page 6, “Model Details”)

- Single Slot Features. The natural language x always contains one or more questions or commands indicating the queried quantities. (Page 7, “Model Details”)

- As the questions are posted to a web forum, the posts often contain additional comments that are not part of the word problems, and the solutions are embedded in long free-form natural language descriptions. (Page 7, “Experimental Setup”)

- Eventually, we hope to extend the techniques to synthesize even more complex structures, such as computer programs, from natural language. (Page 9, “Conclusion”)
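The document-level and single-slot feature descriptions above can be illustrated with two toy feature functions. This is a hypothetical sketch, not the paper's feature set: document features conjoin each word with the candidate template id, and the single-slot feature fires when the noun aligned to an unknown appears in a question or command sentence. The cue list `QUESTION_CUES` is an assumption.

```python
import re
from collections import Counter

# Hypothetical cues that start a command or question sentence.
QUESTION_CUES = ("how many", "how much", "what", "find")

def document_features(text, template_id):
    """Document-level features: each word conjoined with the candidate template."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(f"doc:{w}|tmpl:{template_id}" for w in words)

def query_slot_feature(text, slot_noun):
    """Single-slot feature: does the noun aligned to an unknown appear in a
    question or command sentence?"""
    sentences = re.split(r"(?<=[.?!])\s+", text.lower())
    asks = [s for s in sentences if s.endswith("?") or s.startswith(QUESTION_CUES)]
    return any(slot_noun.lower() in s for s in asks)
```

Conjoining words with the template id lets a linear model learn, for example, that "total" tends to co-occur with a particular template even when "total" is not aligned to any slot.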

See all papers in Proc. ACL 2014 that mention natural language.

beam search

- We use a beam search inference procedure to approximately compute Equation 1, as described in Section 5. (Page 5, “Mapping Word Problems to Equations”)

- Section 5 describes how we approximate the two terms of the gradient using beam search. (Page 5, “Learning”)

- Therefore, we approximate this computation using beam search. (Page 5, “Inference”)

- During learning, we compute the second term in the gradient (Equation 2) using our beam search approximation. (Page 6, “Inference”)

- Parameters and Solver. In our experiments we set k in our beam search algorithm (Section 5) to 200, and l to 20. (Page 8, “Experimental Setup”)
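A generic beam search keeps only the k highest-scoring partial derivations after each decision instead of enumerating all of them. The sketch below uses a default of k = 200, the value reported above; it does not model the separate parameter l, whose role is not described in these excerpts. The `expand` and `score` callbacks are hypothetical stand-ins for extending and scoring partial derivations.

```python
import heapq

def beam_search(n_steps, expand, score, k=200):
    """Generic beam search over sequences of decisions (sketch).

    n_steps: number of decisions in a complete derivation (e.g. slots to fill)
    expand:  function partial -> iterable of extended partials
    score:   function partial -> float, higher is better
    k:       beam width
    """
    beam = [()]  # start from the empty partial derivation
    for _ in range(n_steps):
        candidates = [ext for partial in beam for ext in expand(partial)]
        # keep only the k best partial derivations
        beam = heapq.nlargest(k, candidates, key=score)
    return beam
```

For example, when filling 3 slots from the numbers 1 to 4 with a score that weights later slots more heavily, a beam of width 1 commits greedily to a worse filling, while a beam wide enough to hold every candidate recovers the best one. This trade-off between speed and search error is the approximation the excerpts describe.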

latent variables

- In both cases, the available labeled equations (either the seed set, or the full set) are abstracted to provide the model’s equation templates, while the slot filling and alignment decisions are latent variables whose settings are estimated by directly optimizing the marginal data log-likelihood. (Page 2, “Introduction”)

- In our approach, systems of equations are relatively easy to specify, providing a type of template structure, and the alignment of the slots in these templates to the text is modeled primarily with latent variables during learning. (Page 2, “Related Work”)

- In this way, the distribution over derivations y is modeled as a latent variable. (Page 5, “Mapping Word Problems to Equations”)
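Treating derivations as latent means the objective sums probability mass over every derivation that passes validation. A minimal sketch, assuming a log-linear model p(y | x) ∝ exp(θ · φ(x, y)) and a small, fully enumerated set of derivations, computes one problem's marginal log-likelihood and its gradient. The gradient is the expected feature vector under the validated derivations minus the expectation under all derivations. The argument layout is hypothetical.

```python
import math

def marginal_ll_and_grad(theta, derivations, valid):
    """Marginal log-likelihood of one problem and its gradient (sketch).

    theta:       list of weights
    derivations: list of feature vectors phi(x, y), one per enumerated derivation
    valid:       list of bools, True if the derivation passes validation
    Assumes p(y | x) is proportional to exp(theta . phi(x, y)).
    """
    scores = [sum(t * f for t, f in zip(theta, phi)) for phi in derivations]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]  # shifted for stability
    z_all = sum(weights)
    z_valid = sum(w for w, ok in zip(weights, valid) if ok)
    if z_valid == 0:
        raise ValueError("no derivation passes validation")
    loglik = math.log(z_valid) - math.log(z_all)
    # E_{p(y | x, valid)}[phi] - E_{p(y | x)}[phi], one entry per feature
    grad = [
        sum(w * phi[j] for w, phi, ok in zip(weights, derivations, valid) if ok) / z_valid
        - sum(w * phi[j] for w, phi in zip(weights, derivations)) / z_all
        for j in range(len(theta))
    ]
    return loglik, grad
```

In practice the set of derivations is far too large to enumerate, so both expectations would be approximated, for example over a beam of high-scoring derivations.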

question answering

- The described state can be modeled with a system of equations whose solution specifies the questions’ answers. (Page 1, “Introduction”)

- Examples include question answering (Clarke et al., 2010; Cai and Yates, 2013a; Cai and Yates, 2013b; Berant et al., 2013; Kwiatkowski et al., … (Page 2, “Related Work”)

- We focus on learning from varied supervision, including question answers and equation systems, both of which can be obtained reliably from annotators with no linguistic training and only basic math knowledge. (Page 2, “Related Work”)

semi-supervised

- Also, using different types of validation functions on different subsets of the data enables semi-supervised learning. (Page 5, “Learning”)

- Forms of Supervision. We consider both semi-supervised and supervised learning. (Page 8, “Experimental Setup”)

- In the semi-supervised scenario, we assume access to the numerical answers of all problems in the training corpus and to a small number of problems paired with full equation systems. (Page 8, “Experimental Setup”)
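The semi-supervised setup above mixes two kinds of validation. A hypothetical sketch: problems in the seed set accept a derivation only if its equations match the gold system, and all other problems accept a derivation whose solution matches the numerical answers. Here `canonicalize` is any function that maps a system to a comparable form, and the dictionary layout of problems and derivations is an assumption. Matching answers as sorted lists ignores which unknown maps to which answer, which is a simplification.

```python
import math

def answer_validator(gold_answers, tol=1e-6):
    """Answer supervision: accept a derivation whose solution matches the answers."""
    def check(derivation):
        predicted = sorted(derivation["solution"])
        return len(predicted) == len(gold_answers) and all(
            math.isclose(p, g, abs_tol=tol)
            for p, g in zip(predicted, sorted(gold_answers)))
    return check

def equation_validator(gold_system, canonicalize):
    """Equation supervision: accept a derivation whose system matches the gold one."""
    target = canonicalize(gold_system)
    return lambda derivation: canonicalize(derivation["system"]) == target

def build_validators(problems, seed_ids, canonicalize):
    """Equation checks on the seed set, answer checks on everything else."""
    return {
        pid: (equation_validator(p["equations"], canonicalize) if pid in seed_ids
              else answer_validator(p["answers"]))
        for pid, p in problems.items()
    }
```

Usage with a toy canonicalizer that only sorts equation strings: `build_validators(problems, {"p1"}, lambda s: tuple(sorted(s)))` validates `p1` against its equations and every other problem against its answers.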
