Article Structure

Abstract

Tree kernel is an effective technique for relation extraction.

Introduction

Relation Extraction (RE) aims to identify a set of predefined relations between pairs of entities in text.

Topics

tree kernel

- Tree kernel is an effective technique for relation extraction.Page 1, “Abstract”

- In this paper, we propose a new tree kernel, called feature-enriched tree kernel (F TK ), which can enhance the traditional tree kernel by: 1) refining the syntactic tree representation by annotating each tree node with a set of discriminant features; and 2) proposing a new tree kernel which can better measure the syntactic tree similarity by taking all features into consideration.Page 1, “Abstract”

- Experimental results show that our method can achieve a 5.4% F—measure improvement over the traditional convolution tree kernel .Page 1, “Abstract”

- An effective technique is the tree kernel (Zelenko et al., 2003; Zhou et al., 2007; Zhang et al., 2006; Qian et al., 2008), which can exploit syntactic parse tree information for relation extraction.Page 1, “Introduction”

- Then the similarity between two trees are computed using a tree kernel, e. g., the convolution tree kernel proposed by Collins and Duffy (2001).Page 1, “Introduction”

- Unfortunately, one main shortcoming of the traditional tree kernel is that the syntactic tree representation usually cannot accurately capture thePage 1, “Introduction”

- This paper proposes a new tree kernel, referred as feature-enriched tree kernel (F TK ), which can effectively resolve the above problems by enhancing the traditional tree kernel in following ways:Page 1, “Introduction”

- 2) Based on the refined syntactic tree representation, we propose a new tree kernel —featnre-enriched tree kernel , which can better measure the similarity between two trees by also taking all features into consideration.Page 2, “Introduction”

- Experimental results show that our method can achieve a 5.4% F-measure improvement over the traditional convolution tree kernel based method.Page 2, “Introduction”

- Section 2 describes the feature-enriched tree kernel .Page 2, “Introduction”

- 2 The Feature-Enriched Tree KernelPage 2, “Introduction”

See all papers in Proc. ACL 2014 that mention tree kernel.

See all papers in Proc. ACL that mention tree kernel.

Back to top.

context information

- The feature we used includes characteristics of relation instance, phrase properties and context information (See Section 3 for details).Page 2, “Introduction”

- 3.3 Context Information FeaturePage 4, “Introduction”

- The context information of a phrase node is critical for identifying the role and the importance of a subtree in the whole relation instance.Page 4, “Introduction”

- This paper captures the following context information:Page 4, “Introduction”

- (2007), the context path from root to the phrase node is an effective context information feature.Page 4, “Introduction”

- Using these five relative positions, we capture the context information using the following features:Page 4, “Introduction”

- We experiment our method with four different feature settings, correspondingly: 1) FTK with only instance features — FTK( instance); 2) FTK with only phrase features — F TK( phrase ); 3) FTK with only context information features — FTK( context); and 4) FTK with all features — F TK.Page 4, “Introduction”

- 2) All types of features can improve the performance of relation extraction: FTK can correspondingly get 2.6%, 2.2% and 4.9% F-measure improvements using instance features, phrase features and context information features.Page 5, “Introduction”

- 3) Within the three types of features, context information feature can achieve the highest F-measure improvement.Page 5, “Introduction”

- We believe this may because: ® The context information is useful in providing clues for identifying the role and the importance of a subtree; and @ The context-free assumption of CTK is too strong, some critical information will lost in the CTK computation.Page 5, “Introduction”

See all papers in Proc. ACL 2014 that mention context information.

See all papers in Proc. ACL that mention context information.

Back to top.

relation extraction

- Tree kernel is an effective technique for relation extraction .Page 1, “Abstract”

- Relation Extraction (RE) aims to identify a set of predefined relations between pairs of entities in text.Page 1, “Introduction”

- In recent years, relation extraction has received considerable research attention.Page 1, “Introduction”

- An effective technique is the tree kernel (Zelenko et al., 2003; Zhou et al., 2007; Zhang et al., 2006; Qian et al., 2008), which can exploit syntactic parse tree information for relation extraction .Page 1, “Introduction”

- In this section, we describe the proposed feature-enriched tree kernel (FTK) for relation extraction .Page 2, “Introduction”

- 3 Features for Relation ExtractionPage 3, “Introduction”

- 1) By refining the syntactic tree with discriminant features and incorporating these features into the final tree similarity, FTK can significantly improve the relation extraction performance: compared with the convolution tree kernel baseline CTK, our method can achieve a 5.4% F-meas-ure improvement.Page 4, “Introduction”

- 2) All types of features can improve the performance of relation extraction : FTK can correspondingly get 2.6%, 2.2% and 4.9% F-measure improvements using instance features, phrase features and context information features.Page 5, “Introduction”

- A classical technique for relation extraction is to model the task as a feature-based classification problem (Kambhatla, 2004; Zhou et al., 2005; J iang & Zhai, 2007; Chan & Roth, 2010; Chan & Roth, 2011), and feature engineering is obviously the key for performance improvement.Page 5, “Introduction”

See all papers in Proc. ACL 2014 that mention relation extraction.

See all papers in Proc. ACL that mention relation extraction.

Back to top.

relation instance

- Finally, new relation instances are extracted using kernel based classifiers, e. g., the SVM classifier.Page 1, “Introduction”

- The feature we used includes characteristics of relation instance , phrase properties and context information (See Section 3 for details).Page 2, “Introduction”

- Relation instances of the same type often share some common characteristics.Page 3, “Introduction”

- A feature indicates whether a relation instance has the following four syntactico—semantic structures in (Chan & Roth, 2011) — Premodifiers, Possessive, Preposition, Formulaic and Verbal.Page 3, “Introduction”

- The context information of a phrase node is critical for identifying the role and the importance of a subtree in the whole relation instance .Page 4, “Introduction”

- We observed that a phrase’s relative position with the relation’s arguments is useful for identifying the role of the phrase node in the whole relation instance .Page 4, “Introduction”

- That is, we parse all sentences using the Charniak’s parser (Charniak, 2001), relation instances are generated by iterating over all pairs of entity mentions occurring in the same sentence.Page 4, “Introduction”

See all papers in Proc. ACL 2014 that mention relation instance.

See all papers in Proc. ACL that mention relation instance.

Back to top.

F-measure

- Experimental results show that our method can achieve a 5.4% F-measure improvement over the traditional convolution tree kernel based method.Page 2, “Introduction”

- The overall performance of CTK and FTK is shown in Table 1, the F-measure improvements over CTK are also shown inside the parentheses.Page 4, “Introduction”

- FTK on the 7 major relation types and their F-measure improvement over CTKPage 4, “Introduction”

- 2) All types of features can improve the performance of relation extraction: FTK can correspondingly get 2.6%, 2.2% and 4.9% F-measure improvements using instance features, phrase features and context information features.Page 5, “Introduction”

- 3) Within the three types of features, context information feature can achieve the highest F-measure improvement.Page 5, “Introduction”

- From Table 3, we can see that FTK can achieve competitive performance: Q) It achieves a 0.8% F-measure improvement over the feature-based system of J iang & Zhai (2007); @ It achieves a 0.5% F-measure improvement over a state-of-the-art tree kernel: context sensitive CTK with CSPT of Zhou et al., (2007); C3) The F-measure of our system is slightly lower than the current best performance on ACE 2004 (Qian et al., 2008) — 73.Page 5, “Introduction”

See all papers in Proc. ACL 2014 that mention F-measure.

See all papers in Proc. ACL that mention F-measure.

Back to top.

semantic relation

- However, the traditional syntactic tree representation is often too coarse or ambiguous to accurately capture the semantic relation information between two entities.Page 1, “Abstract”

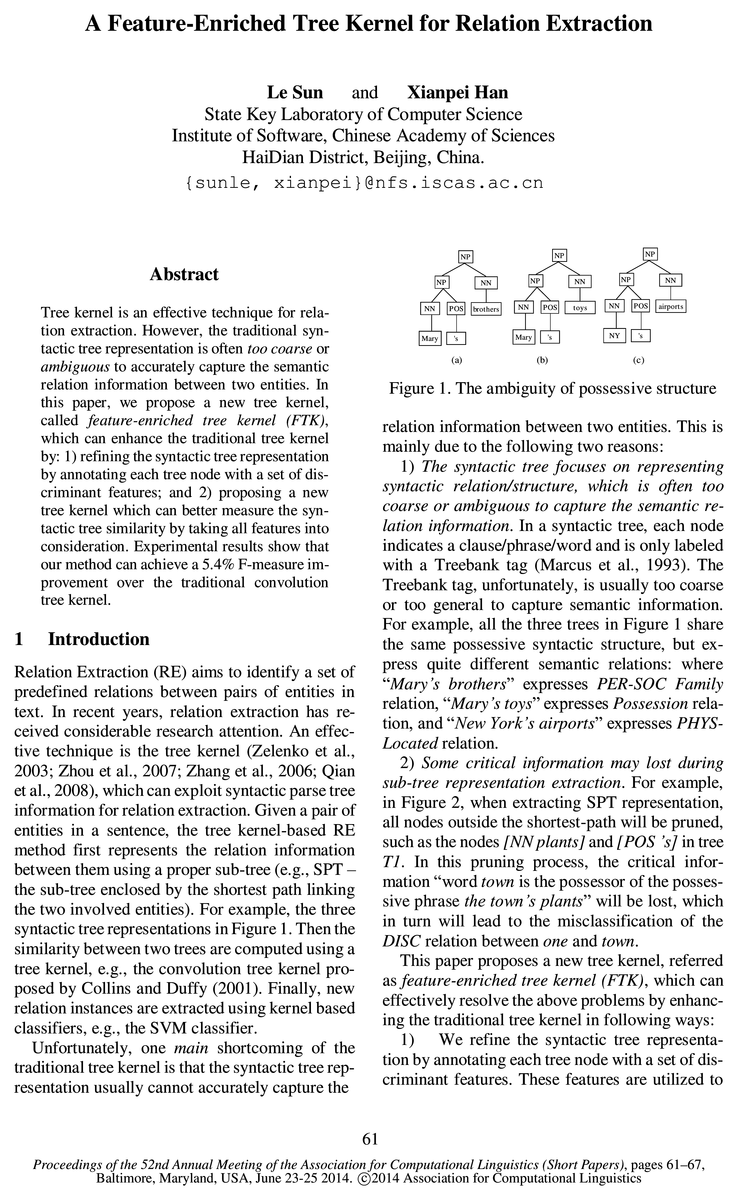

- 1) The syntactic tree focuses on representing syntactic relation/structure, which is often too coarse or ambiguous to capture the semantic relation information.Page 1, “Introduction”

- For example, all the three trees in Figure 1 share the same possessive syntactic structure, but express quite different semantic relations : where “Mary’s brothers” expresses PER-SOC Family relation, “Mary ’s toys” expresses Possession relation, and “New York’s airports” expresses PH YS-Located relation.Page 1, “Introduction”

- better capture the semantic relation information between two entities.Page 2, “Introduction”

- As described in above, syntactic tree is often too coarse or too ambiguous to represent the semantic relation information between two entities.Page 2, “Introduction”

See all papers in Proc. ACL 2014 that mention semantic relation.

See all papers in Proc. ACL that mention semantic relation.

Back to top.

Treebank

- In a syntactic tree, each node indicates a clause/phrase/word and is only labeled with a Treebank tag (Marcus et al., 1993).Page 1, “Introduction”

- The Treebank tag, unfortunately, is usually too coarse or too general to capture semantic information.Page 1, “Introduction”

- where Ln is its phrase label (i.e., its Treebank tag), and F7, is a feature vector which indicates the characteristics of node n, which is represented as:Page 2, “Introduction”

- As discussed in above, the Treebank tag is too coarse to capture the property of a phrase node.Page 3, “Introduction”

See all papers in Proc. ACL 2014 that mention Treebank.

See all papers in Proc. ACL that mention Treebank.

Back to top.

entity type

- Features about the entity information of arguments, including: a) #TP]-#TP2: the concat of the major entity types of arguments; b) #STI-#ST2: the concat of the sub entity types of arguments; c) #MT] -#MT2: the concat of the mention types of arguments.Page 3, “Introduction”

- We capture the property of a node’s content using the following features: a) MB_#Num: The number of mentions contained in the phrase; b) MB_C_#Type: A feature indicates that the phrase contains a mention with major entity type #Type; c) M W_#Num: The number of words within the phrase.Page 3, “Introduction”

- a) #RP_Arg]Head_#Arg] Type: a feature indicates the relative position of a phrase node with argument 1’s head phrase, where #RP is the relative position (one of match, cover, within, overlap, other), and #Arg] Type is the major entity type of argument 1.Page 4, “Introduction”

See all papers in Proc. ACL 2014 that mention entity type.

See all papers in Proc. ACL that mention entity type.

Back to top.

feature vector

- Feature VectorPage 2, “Introduction”

- where Ln is its phrase label (i.e., its Treebank tag), and F7, is a feature vector which indicates the characteristics of node n, which is represented as:Page 2, “Introduction”

- where 6 (t1, t2) is the same indicator function as in CTK; (m, nj)is a pair of aligned nodes between 151 and t2, where m and nj are correspondingly in the same position of tree t1 and t2; E (t1, 752) is the set of all aligned node pairs; sim(n,-, nj) is the feature vector similarity between nodeni and nj, computed as the dot product between their feature vectors Fm and Fnj.Page 3, “Introduction”

See all papers in Proc. ACL 2014 that mention feature vector.

See all papers in Proc. ACL that mention feature vector.

Back to top.

Feature weighting

- Feature weighting .Page 4, “Introduction”

- Currently, we set all features with an uniform weight w E (0, 1), which is used to control the relative importance of the feature in the final tree similarity: the larger the feature weight , the more important the feature in the final tree similarity.Page 4, “Introduction”

- feature weighting algorithm which can accuratelyPage 5, “Introduction”

See all papers in Proc. ACL 2014 that mention Feature weighting.

See all papers in Proc. ACL that mention Feature weighting.

Back to top.

Lexical Semantics

- Therefore, we enrich each phrase node with features about its lexical pattern, its content information, and its lexical semantics:Page 3, “Introduction”

- 3) Lexical Semantics .Page 3, “Introduction”

- If the node is a preterminal node, we capture its lexical semantic by adding features indicating its WordNet sense information.Page 3, “Introduction”

See all papers in Proc. ACL 2014 that mention Lexical Semantics.

See all papers in Proc. ACL that mention Lexical Semantics.

Back to top.

SVM

- Finally, new relation instances are extracted using kernel based classifiers, e. g., the SVM classifier.Page 1, “Introduction”

- We apply the one vs. others strategy for multiple classification using SVM .Page 4, “Introduction”

- For SVM training, the parameter C is set to 2.4 for all experiments, and the tree kernel parameter A is tuned to 0.2 for FTK and 0.4 (the optimal parameter setting used in Qian et al.Page 4, “Introduction”

See all papers in Proc. ACL 2014 that mention SVM.

See all papers in Proc. ACL that mention SVM.

Back to top.

WordNet

- If the node is a preterminal node, we capture its lexical semantic by adding features indicating its WordNet sense information.Page 3, “Introduction”

- Specifically, the first WordNet sense of the terminal word, and all this sense’s hyponym senses will be added as features.Page 3, “Introduction”

- For example, WordNet senses {New Y0rk#], city#], district#],Page 3, “Introduction”

See all papers in Proc. ACL 2014 that mention WordNet.

See all papers in Proc. ACL that mention WordNet.

Back to top.