Article Structure

Abstract

Product feature mining is a key subtask in fine-grained opinion mining.

Introduction

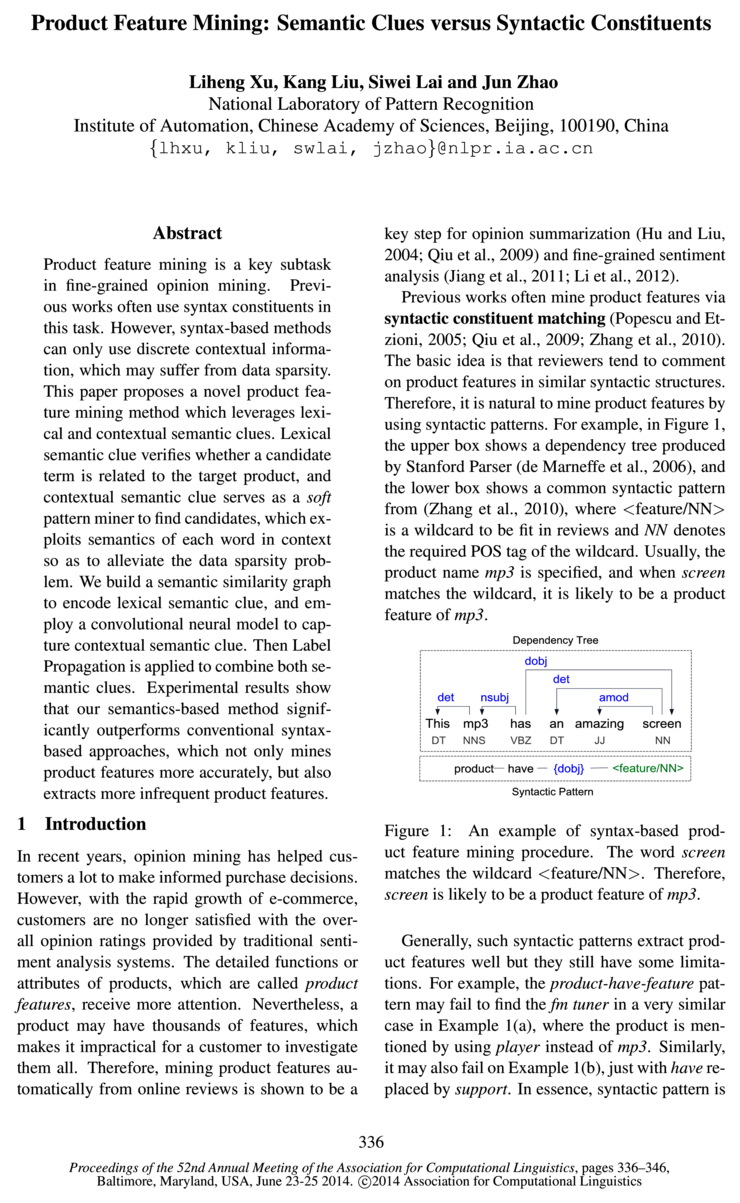

In recent years, opinion mining has helped customers a lot to make informed purchase decisions.

Related Work

In product feature mining task, Hu and Liu (2004) proposed a pioneer research.

The Proposed Method

We propose a semantics-based bootstrapping method for product feature mining.

Experiments

4.1 Datasets and Evaluation Metrics

Conclusion and Future Work

This paper proposes a product feature mining method by leveraging contextual and lexical semantic clues.

Topics

lexical semantic

- Lexical semantic clue verifies whether a candidate term is related to the target product, and contextual semantic clue serves as a soft pattern miner to find candidates, which exploits semantics of each word in context so as to alleviate the data sparsity problem.Page 1, “Abstract”

- We build a semantic similarity graph to encode lexical semantic clue, and employ a convolutional neural model to capture contextual semantic clue.Page 1, “Abstract”

- We call it lexical semantic clue.Page 2, “Introduction”

- Then, based on the assumption that terms that are more semantically similar to the seeds are more likely to be product features, a graph which measures semantic similarities between terms is built to capture lexical semantic clue.Page 2, “Introduction”

- Then, a semantic similarity graph is created to capture lexical semantic clue, and a Convolutional Neural Network (CNN) (Collobert et al., 2011) is trained in each bootstrapping iteration to encode contextual semantic clue.Page 3, “The Proposed Method”

- 3.2 Capturing Lexical Semantic Clue in a Semantic Similarity GraphPage 3, “The Proposed Method”

- To capture lexical semantic clue, each word is first converted into word embedding, which is a continuous vector with each dimension’s value corresponds to a semantic or grammatical interpretation (Turian et al., 2010).Page 3, “The Proposed Method”

- Lexical semantic clue is captured by measuring semantic similarity between terms.Page 4, “The Proposed Method”

- Particularly, when 04 = 0, we can set the prior knowledge I without V0 to L0 so that only lexical semantic clue is used; otherwise if 04 = 1, only contextual semantic clue is used.Page 5, “The Proposed Method”

- LEX only uses lexical semantic clue.Page 6, “Experiments”

- Furthermore, LEX gets better recall than CONT and all syntax-based methods, which indicates that lexical semantic clue does aid to mine more infrequent features as expected.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention lexical semantic.

See all papers in Proc. ACL that mention lexical semantic.

Back to top.

semantic similarity

- We build a semantic similarity graph to encode lexical semantic clue, and employ a convolutional neural model to capture contextual semantic clue.Page 1, “Abstract”

- Then, based on the assumption that terms that are more semantically similar to the seeds are more likely to be product features, a graph which measures semantic similarities between terms is built to capture lexical semantic clue.Page 2, “Introduction”

- 0 It exploits semantic similarity between words to capture lexical clues, which is shown to be more effective than co-occurrence relation between words and syntactic patterns.Page 2, “Introduction”

- In addition, experiments show that the semantic similarity has the advantage of mining infrequent product features, which is crucial for this task.Page 2, “Introduction”

- Then, a semantic similarity graph is created to capture lexical semantic clue, and a Convolutional Neural Network (CNN) (Collobert et al., 2011) is trained in each bootstrapping iteration to encode contextual semantic clue.Page 3, “The Proposed Method”

- 3.2 Capturing Lexical Semantic Clue in a Semantic Similarity GraphPage 3, “The Proposed Method”

- 3.2.2 Building the Semantic Similarity GraphPage 4, “The Proposed Method”

- Lexical semantic clue is captured by measuring semantic similarity between terms.Page 4, “The Proposed Method”

- The underlying motivation is that if we have known some product feature seeds, then terms that are more semantically similar to these seeds are more likely to be product features.Page 4, “The Proposed Method”

- For example, if screen is known to be a product feature of mp3, and led is of high semantic similarity with screen, we can infer that led is also a product feature.Page 4, “The Proposed Method”

- Analogously, terms that are semantically similar to negative labeled seeds are not product features.Page 4, “The Proposed Method”

See all papers in Proc. ACL 2014 that mention semantic similarity.

See all papers in Proc. ACL that mention semantic similarity.

Back to top.

word embedding

- To capture lexical semantic clue, each word is first converted into word embedding , which is a continuous vector with each dimension’s value corresponds to a semantic or grammatical interpretation (Turian et al., 2010).Page 3, “The Proposed Method”

- Learning large-scale word embeddings is very time-consuming (Collobert et al., 2011), we thus employ a faster method named Skip-gram model (Mikolov et al., 2013).Page 3, “The Proposed Method”

- 3.2.1 Learning Word Embedding for Semantic RepresentationPage 3, “The Proposed Method”

- Given a sequence of training words W = {2121, ’LU2, ..., mm}, the goal of the Skip-gram model is to learn a continuous vector space EB = {61, 62, ..., em}, where e,- is the word embedding of mi.Page 3, “The Proposed Method”

- Word embedding naturally meets the demand above: words that are more semantically similar to each other are located closer in the embedding space (Collobert et al., 2011).Page 4, “The Proposed Method”

- To get the output score, q,- is first converted into a concatenated vector :10, [61; 62; ...; 6;], where ej is the word embedding of the j-th word.Page 4, “The Proposed Method”

- where ya) is the output score of the i-th layer, and W) is the bias of the i-th layer; W“) 6 WWW) and W(3) E R2Xh are parameter matrixes, where n is the dimension of word embedding , and h is the size of nodes in the hidden layer.Page 5, “The Proposed Method”

- Input: The review corpus R, a large corpus C Output: The mined product feature list P Initialization: Train word embedding set EB first on C, and then on RPage 6, “The Proposed Method”

- The dimension of word embedding n = 100, the convergence threshold 5 = 10—7, and the number of expanded seeds T = 40.Page 6, “Experiments”

- In contrast, CONT exploits latent semantics of each word in context, and LEX takes advantage of word embedding , which is induced from global word co-occurrence statistic.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention word embedding.

See all papers in Proc. ACL that mention word embedding.

Back to top.

data sparsity

- However, syntax-based methods can only use discrete contextual information, which may suffer from data sparsity .Page 1, “Abstract”

- Lexical semantic clue verifies whether a candidate term is related to the target product, and contextual semantic clue serves as a soft pattern miner to find candidates, which exploits semantics of each word in context so as to alleviate the data sparsity problem.Page 1, “Abstract”

- Therefore, such a representation often suffers from the data sparsity problem (Turian et al., 2010).Page 2, “Introduction”

- This enables our method to be less sensitive to lexicon change, so that the data sparsity problem can be alleviated .Page 2, “Introduction”

- As discussed in the first section, syntactic patterns often suffer from data sparsity .Page 3, “Related Work”

- Thus, the data sparsity problem can be alleviated.Page 3, “Related Work”

- To alleviate the data sparsity problem, EB is first trained on a very large corpus3 (denoted by C), and then fine-tuned on the target review corpus R. Particularly, for phrasal product features, a statistic-based method in (Zhu et al., 2009) is used to detect noun phrases in R. Then, an Unfolding Recursive Autoencoder (Socher et al., 2011) is trained on C to obtain embedding vectors for noun phrases.Page 4, “The Proposed Method”

- As for SGW-TSVM, the features they used for the TSVM suffer from the data sparsity problem for infrequent terms.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention data sparsity.

See all papers in Proc. ACL that mention data sparsity.

Back to top.

Neural Network

- Then, a semantic similarity graph is created to capture lexical semantic clue, and a Convolutional Neural Network (CNN) (Collobert et al., 2011) is trained in each bootstrapping iteration to encode contextual semantic clue.Page 3, “The Proposed Method”

- 3.3 Encoding Contextual Semantic Clue Using Convolutional Neural NetworkPage 4, “The Proposed Method”

- 3.3.1 The architecture of the Convolutional Neural NetworkPage 4, “The Proposed Method”

- The architecture of the Convolutional Neural Network is shown in Figure 2.Page 4, “The Proposed Method”

- Figure 2: The architecture of the Convolutional Neural Network .Page 4, “The Proposed Method”

- F W-5 uses a traditional neural network with a fixed window size of 5 to replace the CNN in CONT, and the candidate term to be classified is placed in the center of the window.Page 8, “Experiments”

- A semantic similarity graph is built to capture lexical semantic clue, and a convolutional neural network is used to encode contextual semantic clue.Page 9, “Conclusion and Future Work”

See all papers in Proc. ACL 2014 that mention Neural Network.

See all papers in Proc. ACL that mention Neural Network.

Back to top.

F-Measure

- Evaluation Metrics: We evaluate the proposed method in terms of precision(P), recall(R) and F-measure (F).Page 6, “Experiments”

- Figure 5 shows the performance under different N, where the F-Measure saturates when N equates to 40 and beyond.Page 9, “Experiments”

- F-MeasurePage 9, “Experiments”

- Figure 5: F-Measure vs. N for the final results.Page 9, “Experiments”

- Figure 6 shows F-Measure under different window size 1.Page 9, “Experiments”

- Figure 6: F-Measure vs.Page 9, “Experiments”

See all papers in Proc. ACL 2014 that mention F-Measure.

See all papers in Proc. ACL that mention F-Measure.

Back to top.

co-occurrence

- 0 It exploits semantic similarity between words to capture lexical clues, which is shown to be more effective than co-occurrence relation between words and syntactic patterns.Page 2, “Introduction”

- A recent research (Xu et al., 2013) extracted infrequent product features by a semi-supervised classifier, which used word-syntactic pattern co-occurrence statistics as features for the classifier.Page 3, “Related Work”

- Afterwards, word-syntactic pattern co-occurrence statistic is used as feature for a semi-supervised classifier TSVM (J oachims, 1999) to further refine the results.Page 6, “Experiments”

- In contrast, CONT exploits latent semantics of each word in context, and LEX takes advantage of word embedding, which is induced from global word co-occurrence statistic.Page 7, “Experiments”

See all papers in Proc. ACL 2014 that mention co-occurrence.

See all papers in Proc. ACL that mention co-occurrence.

Back to top.

Evaluation Metrics

- 4.1 Datasets and Evaluation MetricsPage 6, “Experiments”

- Evaluation Metrics : We evaluate the proposed method in terms of precision(P), recall(R) and F-measure(F).Page 6, “Experiments”

- To take into account the correctly expanded terms for both positive and negative seeds, we use Accuracy as the evaluation metric,Page 8, “Experiments”

See all papers in Proc. ACL 2014 that mention Evaluation Metrics.

See all papers in Proc. ACL that mention Evaluation Metrics.

Back to top.

semi-supervised

- At the same time, a semi-supervised convolutional neural model (Collobert et al., 2011) is employed to encode contextual semantic clue.Page 2, “Introduction”

- A recent research (Xu et al., 2013) extracted infrequent product features by a semi-supervised classifier, which used word-syntactic pattern co-occurrence statistics as features for the classifier.Page 3, “Related Work”

- Afterwards, word-syntactic pattern co-occurrence statistic is used as feature for a semi-supervised classifier TSVM (J oachims, 1999) to further refine the results.Page 6, “Experiments”

See all papers in Proc. ACL 2014 that mention semi-supervised.

See all papers in Proc. ACL that mention semi-supervised.

Back to top.